Test Cases, Goldens, and Datasets

Overview

Test cases, goldens, and datasets are three of the most important primitives to learn for LLM evaluation. They define how interactions with your LLM app are represented in Confident AI, which is imperative for applying evaluation metrics.

In summary:

- Test cases represent either single or multi-turn interactions with your LLM app, which metrics use for evaluation

- Goldens are precursors to test cases. When you edit datasets on Confident AI, you are editing goldens, which contain not just the `input` that will kickstart your LLM app but also any other custom metadata required to invoke your app

- Datasets are lists of goldens and orchestrate the entire evaluation process, whether that is single-turn, multi-turn, end-to-end, or component-level testing

These primitives are standardized in Confident AI and are used for all forms of evals.

You need to understand test cases in order to understand what metrics evaluate in the next section.

Test Cases

Test cases capture your LLM app’s runtime inputs and outputs, which metrics use for evaluation. Test cases:

- Are only found in test runs, produced after evaluation

- Contain a pass/fail status, determined by their metric scores, and

- Are immutable, meaning they cannot be edited once created
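The last two properties can be illustrated with a minimal sketch. It is not deepeval's implementation: `EvaluatedTestCase`, `metric_scores`, and the single shared `threshold` are hypothetical names, and the rule that a test case passes only when every metric score meets the threshold is an assumption made for illustration.

```python
from dataclasses import dataclass, FrozenInstanceError
from typing import Dict


@dataclass(frozen=True)  # frozen=True blocks attribute assignment after creation
class EvaluatedTestCase:
    """Hypothetical stand-in for a test case as it appears in a test run."""
    input: str
    actual_output: str
    metric_scores: Dict[str, float]  # metric name -> score
    threshold: float = 0.5  # assumed: one threshold shared by all metrics

    @property
    def passed(self) -> bool:
        # Assumed rule: pass only if every metric score meets the threshold.
        return all(s >= self.threshold for s in self.metric_scores.values())


test_case = EvaluatedTestCase(
    input="What is your refund policy?",
    actual_output="Refunds are available within 30 days.",
    metric_scores={"relevancy": 0.9, "faithfulness": 0.4},
)

try:
    test_case.actual_output = "edited"  # attempts to edit are rejected
    was_edited = True
except FrozenInstanceError:
    was_edited = False
```

Here `test_case.passed` is `False` because one of the two scores falls below the assumed threshold.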

As a developer, you need to map your LLM app’s runtime inputs and outputs into the test case format, either single-turn or multi-turn.


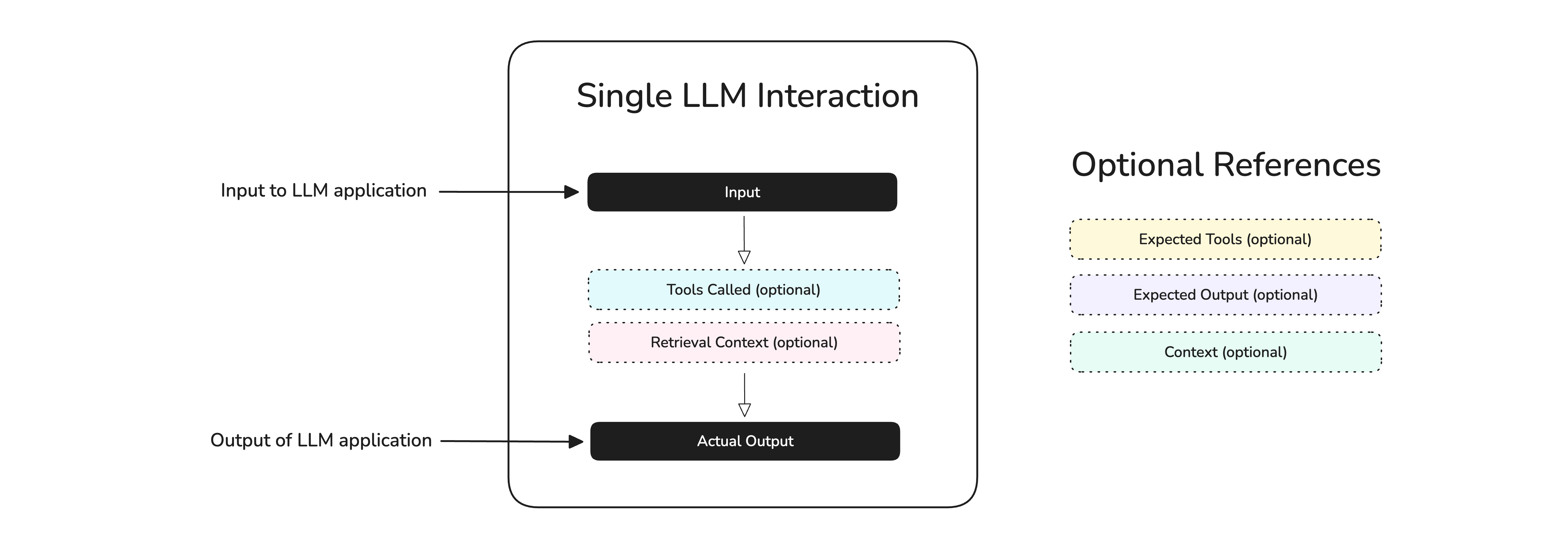

A single-turn test case represents a single, atomic interaction with your LLM app:

In the diagram above, we see that an interaction can include an `input`, `actual_output`, `retrieval_context` (for RAG), `tools_called`, etc. An interaction can live at either of two levels:

- End-to-end level: The “observable” system inputs and outputs are piped into a test case

- Component-level: An individual component’s interactions are piped into a test case

In deepeval, a single-turn test case is represented by an LLMTestCase:

Each parameter represents different aspects of an interaction:

- Input: The input to your LLM app. This is usually not the entire prompt. If you’re using the OpenAI API, for example, this would be the content of the last user message.

- Actual output: The output of your LLM app for a given input.

- Retrieval Context: The dynamic text chunks that were retrieved, especially relevant for RAG use cases.

- Tools Called: Any tools that were called for the given input.

- Expected Output: The ideal output of your LLM app for a given input.

- Context: Any static supporting context that is relevant for your use case.

- Expected Tools: The ideal list of tools that should be called for a given input.

Here’s a quick example of how you would populate the input and actual_output fields of an LLMTestCase during evaluation:
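A minimal sketch of this step follows. To stay runnable without any dependencies it uses a stand-in dataclass, `LLMTestCaseSketch`, that mirrors the parameter names listed above. In real code you would import `LLMTestCase` from `deepeval.test_case` instead. `my_llm_app` is a hypothetical placeholder for your own application, and typing tools as plain strings is a simplification.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LLMTestCaseSketch:
    """Stand-in mirroring the parameters described above (not the deepeval class)."""
    input: str
    actual_output: Optional[str] = None
    expected_output: Optional[str] = None
    context: Optional[List[str]] = None
    retrieval_context: Optional[List[str]] = None
    tools_called: Optional[List[str]] = None    # simplified: tool names only
    expected_tools: Optional[List[str]] = None  # simplified: tool names only


def my_llm_app(user_message: str) -> str:
    """Hypothetical LLM app; replace with a real model call."""
    return f"Echo: {user_message}"


user_input = "Why is the sky blue?"

# input is what was sent to the app; actual_output is what it returned at runtime.
test_case = LLMTestCaseSketch(
    input=user_input,
    actual_output=my_llm_app(user_input),
)
```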

In practice, the input is very unlikely to be hardcoded in isolation as in the example. It will almost always come from the single-turn goldens in your dataset.

When we run regression tests in later sections, we will match test cases across test runs by either their inputs or their names to see whether their performance has regressed.
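As a rough illustration of that matching idea, the sketch below compares two test runs keyed by test case name or input. It does not reflect how Confident AI implements regression testing: `find_regressions` and the score dictionaries are hypothetical, and a single score per test case is assumed.

```python
from typing import Dict, List, Tuple


def find_regressions(
    previous: Dict[str, float],
    current: Dict[str, float],
) -> List[Tuple[str, float, float]]:
    """Return (key, old_score, new_score) for test cases whose score dropped.

    Keys are test case names or inputs; only keys present in both runs match.
    """
    regressions = []
    for key, old_score in previous.items():
        new_score = current.get(key)
        if new_score is not None and new_score < old_score:
            regressions.append((key, old_score, new_score))
    return regressions


run_a = {"refund-policy": 0.9, "shipping-time": 0.8, "old-case": 0.7}
run_b = {"refund-policy": 0.6, "shipping-time": 0.85, "new-case": 0.5}

regressed = find_regressions(run_a, run_b)
```

Test cases that appear in only one run (`old-case`, `new-case`) have nothing to match against, which is why stable inputs or names matter.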

Goldens

Goldens are very similar to test cases, in fact almost identical, for both single and multi-turn. However, goldens are meant to be edited and contain extra fields that give you more flexibility to kickstart your LLM app for evaluation.

When you edit datasets on Confident AI, you are editing goldens, not test cases. Another important thing to remember is that single-turn goldens create single-turn test cases, and multi-turn goldens create multi-turn test cases.


A single-turn golden is represented by the Golden class in deepeval:

Pre-populating the actual output, retrieval context, and tools called in goldens is strongly discouraged, as these are meant to be populated dynamically and doing so defeats the purpose of evaluation.

You’ll notice that goldens are more opinionated and contain a `custom_column_key_values` field that you can edit either on the platform or via code.
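The sketch below shows how such extra fields might be used to invoke an app. `GoldenSketch` is a stand-in dataclass, not the deepeval `Golden` class, and the string-to-string shape of `custom_column_key_values`, the `user_tier` key, and `invoke_app` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class GoldenSketch:
    """Stand-in for a single-turn golden (not the deepeval class)."""
    input: str
    expected_output: Optional[str] = None
    actual_output: Optional[str] = None  # deliberately left empty until runtime
    # Assumed shape: free-form string metadata needed to invoke the app.
    custom_column_key_values: Dict[str, str] = field(default_factory=dict)


def invoke_app(golden: GoldenSketch) -> str:
    """Hypothetical app call that needs metadata beyond the input."""
    user_tier = golden.custom_column_key_values.get("user_tier", "free")
    return f"[{user_tier}] answer to: {golden.input}"


golden = GoldenSketch(
    input="How do I reset my password?",
    expected_output="Use the 'Forgot password' link on the login page.",
    custom_column_key_values={"user_tier": "pro"},
)

# The output is produced at evaluation time rather than stored on the golden.
runtime_output = invoke_app(golden)
```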

Datasets

Lastly, a dataset is a collection of goldens. A dataset is either multi-turn or single-turn, and cannot be both at the same time. Datasets can be created either:

- On the platform directly under Project > Datasets, or

- Via Confident AI’s Evals API (also available in deepeval)

To create a single-turn dataset, you need to use single-turn goldens, and likewise multi-turn goldens for a multi-turn dataset. At evaluation time, you will need to:

1. Loop through goldens in your dataset to invoke your LLM app using the input of each golden

2. Map the correct arguments from your golden and LLM app to create test cases

3. Add these test cases back to your dataset

4. Run evaluation on these test cases
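The four steps above can be sketched in plain Python. Everything here is a stand-in rather than the deepeval or Confident AI API: `GoldenSketch`, `SketchTestCase`, `DatasetSketch`, `my_llm_app`, and `run_evaluation` are hypothetical, and the exact-match metric is a toy substitute for a real metric.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class GoldenSketch:
    input: str
    expected_output: Optional[str] = None


@dataclass
class SketchTestCase:
    input: str
    actual_output: str
    expected_output: Optional[str] = None


@dataclass
class DatasetSketch:
    goldens: List[GoldenSketch]
    test_cases: List[SketchTestCase] = field(default_factory=list)

    def add_test_case(self, test_case: SketchTestCase) -> None:
        self.test_cases.append(test_case)


def my_llm_app(user_message: str) -> str:
    """Hypothetical LLM app backed by canned answers."""
    return {"What is 2 + 2?": "4"}.get(user_message, "I don't know")


def exact_match(tc: SketchTestCase) -> float:
    """Toy metric: 1.0 when the output equals the expected output."""
    return 1.0 if tc.actual_output == tc.expected_output else 0.0


def run_evaluation(
    dataset: DatasetSketch,
    metric: Callable[[SketchTestCase], float],
    threshold: float = 0.5,
) -> Dict[str, bool]:
    return {tc.input: metric(tc) >= threshold for tc in dataset.test_cases}


dataset = DatasetSketch(goldens=[
    GoldenSketch("What is 2 + 2?", "4"),
    GoldenSketch("Capital of France?", "Paris"),
])

for golden in dataset.goldens:               # step 1: loop and invoke the app
    output = my_llm_app(golden.input)
    test_case = SketchTestCase(              # step 2: map golden + output
        input=golden.input,
        actual_output=output,
        expected_output=golden.expected_output,
    )
    dataset.add_test_case(test_case)         # step 3: add back to the dataset

results = run_evaluation(dataset, exact_match)  # step 4: run evaluation
```

Keeping the test cases on the dataset object is what ties the resulting evaluation back to the dataset it came from.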

This workflow is extremely important and stays the same whether you are running end-to-end, component-level, single-turn, or multi-turn evals.

Users often overlook step 3, but it is essential: it tells Confident AI which test run belongs to which dataset, which makes it possible for you to compare prompts and models later on.

Quick example of an end-to-end evaluation:


Looking at both single and multi-turn examples, it should now be clear why multi-turn is much more challenging to evaluate: we not only have to do the necessary ETL to format goldens into test cases, but also have to generate a long list of turns.
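To make the extra work concrete, here is a minimal sketch of building a list of turns for a multi-turn test case. The `role`/`content` turn structure, `TurnSketch`, `ConversationSketch`, and `my_chatbot` are assumptions for illustration and are not taken from the deepeval API. A scripted list of user messages stands in for whatever drives the conversation in a real setup.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TurnSketch:
    role: str     # "user" or "assistant"
    content: str


@dataclass
class ConversationSketch:
    """Stand-in for a multi-turn test case: an ordered list of turns."""
    turns: List[TurnSketch] = field(default_factory=list)


def my_chatbot(history: List[TurnSketch]) -> str:
    """Hypothetical chatbot that replies to the latest user turn."""
    return f"You said: {history[-1].content}"


def build_conversation(user_messages: List[str]) -> ConversationSketch:
    conversation = ConversationSketch()
    for message in user_messages:
        conversation.turns.append(TurnSketch("user", message))
        # The app sees the full history so far, then its reply is recorded.
        reply = my_chatbot(conversation.turns)
        conversation.turns.append(TurnSketch("assistant", reply))
    return conversation


conversation = build_conversation(["Hi", "I need a refund"])
```

Unlike the single-turn case, the app must be invoked once per user turn, and every reply becomes part of the input for the next turn.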

Next Steps

Now that you know what single-turn, multi-turn, end-to-end, and component-level testing are, as well as the primitives involved in evaluation, it’s time to understand:

- What LLM-as-a-Judge metrics are

- Which metrics are suitable for your use case