We Need to Talk. In Code.

TGIF! Thank god it’s features, here’s what we shipped this week:

Big week for the org-anized among us. Multi-turn evals go code-first, Vercel joins the family, and prompts finally get the observability they deserve.

Added

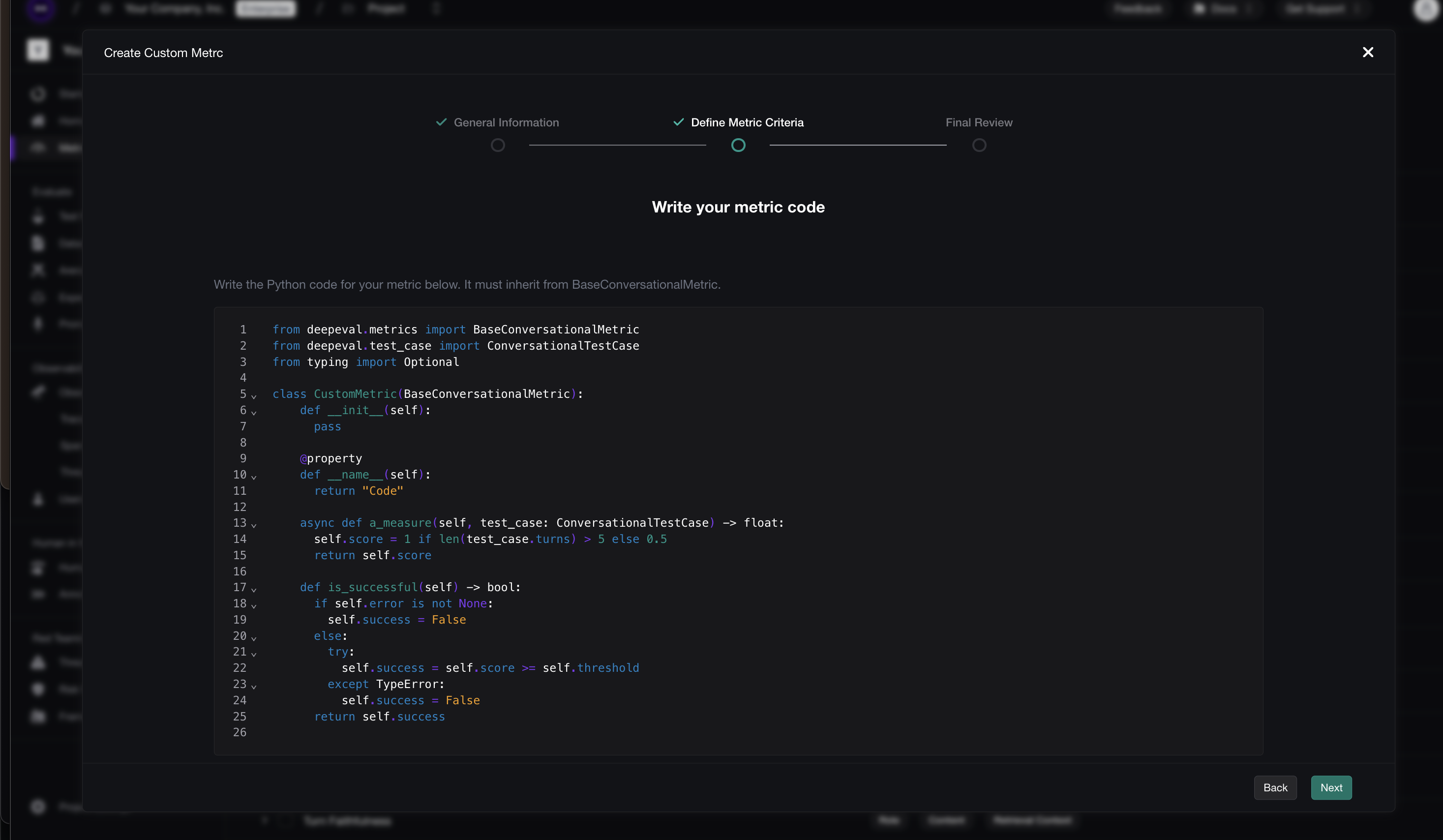

- Code-Based Multi-Turn Evals - Introducing `ConversationalTestCase` for your codebase. All the power of multi-turn evaluation, now programmable. Time to have the talk with your chatbot, in code.

- Vercel AI SDK Integration - Next.js devs, rejoice! Native integration with Vercel’s AI SDK means you can trace and evaluate your `ai` package calls with zero friction. Ship fast, eval faster.

- Transformers on Retrievers & Tools - Transformers aren’t just for AI connection outputs anymore. Reshape retriever outputs and tool calls before evaluation. Your agentic RAG pipeline called: it wants its custom parsing back.

- Organization-Wide Metrics - Define metrics at the org level and share them across all your teams. No more “wait, which faithfulness config are we using?” Standardize once, evaluate everywhere.
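To give a feel for what "multi-turn evaluation in code" can look like, here is a minimal sketch of a programmatic check over a conversation. The `Turn` dataclass and the `alternates_roles` helper are hypothetical stand-ins written for this illustration; they are not the library's `ConversationalTestCase` API.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """Stand-in for one conversation turn (hypothetical, not the library class)."""
    role: str      # "user" or "assistant"
    content: str


def alternates_roles(turns: list[Turn]) -> bool:
    """Return True if the conversation opens with the user and roles strictly alternate."""
    expected = "user"
    for turn in turns:
        if turn.role != expected:
            return False
        expected = "assistant" if expected == "user" else "user"
    return True


conversation = [
    Turn("user", "Where is my order?"),
    Turn("assistant", "Could you share your order number?"),
    Turn("user", "It's 1042."),
    Turn("assistant", "Order 1042 ships tomorrow."),
]

print(alternates_roles(conversation))
```

A structural check like this is only the simplest case; the same loop-over-turns shape would apply to any rule you want to assert across a whole conversation.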

Changed

- Prompt Observability - Track which prompts are running in production, when they were swapped, and how performance changed. Finally, prompt feedback on your prompts.

More Than Meets the AI

Transformers (Beta) are here and they’re truly more than meets the AI. Reshape your traced data before evaluation—because not every trace deserves the full spotlight. Meanwhile, Prompt Studio just got a serious commit-ment upgrade with git-style versioning. Love is in the diff this Valentine’s weekend.

Added

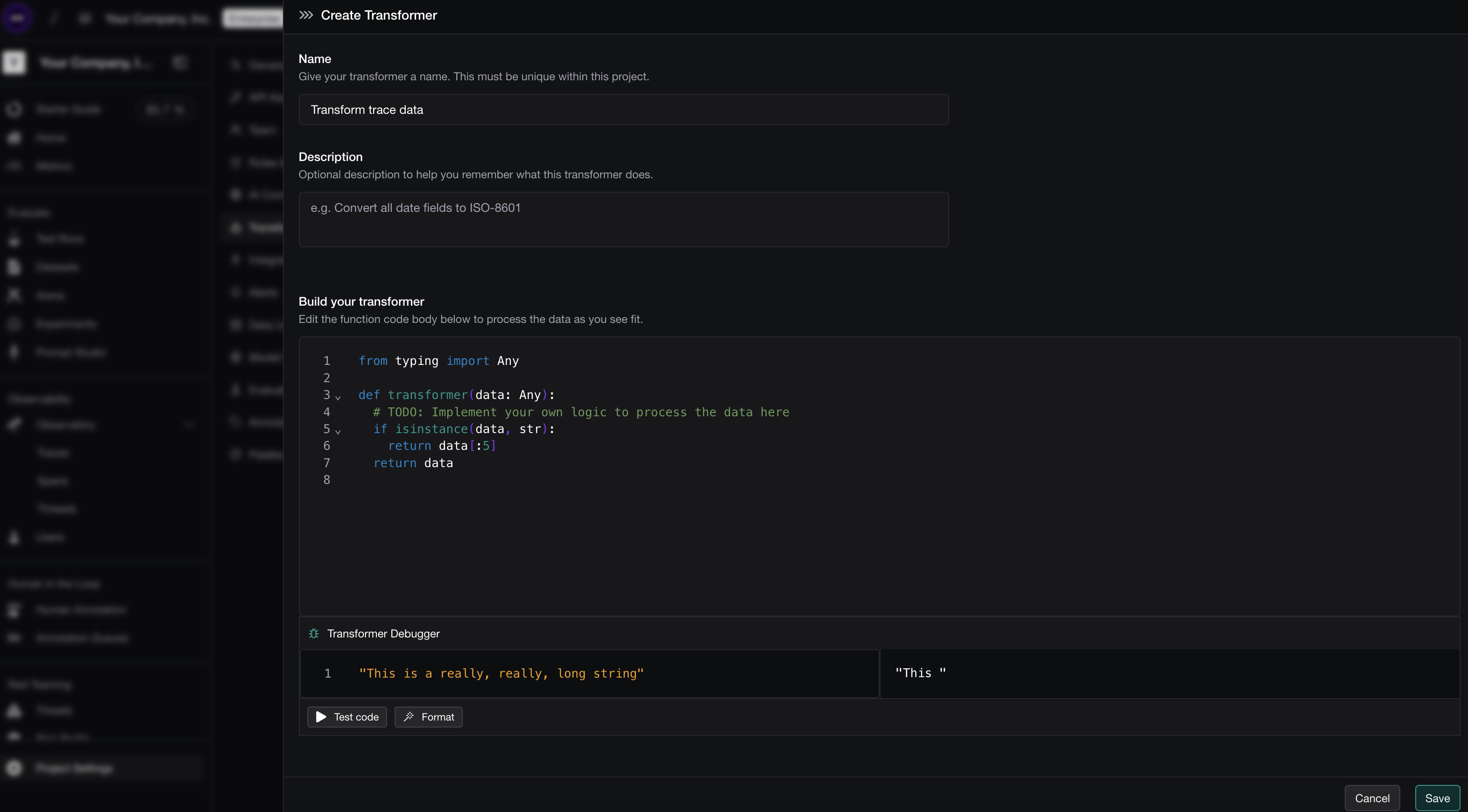

- Transformers (Beta) - The biggest release this week, and it’s more than meets the eye. Write custom code to transform your traced data—including individual spans—before evaluation. Don’t want the whole trace? No problem. Cherry-pick exactly what matters.

- Transformers on AI Connections - Got a JSON blob coming back from your model? Negative indexes on a list? Transformers let you parse and wrangle AI connection outputs however you need. Your data, your rules.

- Prompt Commits - Every change to your prompt now creates a commit. Full history, no more guessing what changed or when. It’s `git log` for your prompts, and it’s beautiful.
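As a rough illustration of the kind of wrangling a transformer might do, the sketch below parses a JSON blob and uses a negative index to keep only the last message. The function name `transform` and the `messages`/`content` payload shape are assumptions made for this example, not the product's actual transformer signature.

```python
import json


def transform(raw_output: str) -> str:
    """Hypothetical transformer: parse a JSON blob and keep the last message's text."""
    payload = json.loads(raw_output)
    messages = payload["messages"]     # assumed payload shape
    return messages[-1]["content"]     # negative index picks the final message


raw = json.dumps({
    "messages": [
        {"role": "user", "content": "What is the capital of France?"},
        {"role": "assistant", "content": "Paris."},
    ]
})

print(transform(raw))
```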

Changed

- Git-Based Prompt Studio - Prompt Studio is leaning hard into the git workflow. Commits, versions, diffs—everything you love about version control, now for your prompts. We’re committing to this direction. (Pun intended.)

Let There Be Light (Mode)

Big week for visibility—both in your data and on your screen. We’re launching 30+ additional Observatory graphs to surface insights, a Data Usage settings page for full transparency, and light mode is officially out of beta. Shine bright, friends.

Added

- Data Usage Settings Page - Know thy data. A dedicated page to see exactly how your data is being used—because transparency isn’t just a buzzword, it’s a lifestyle.

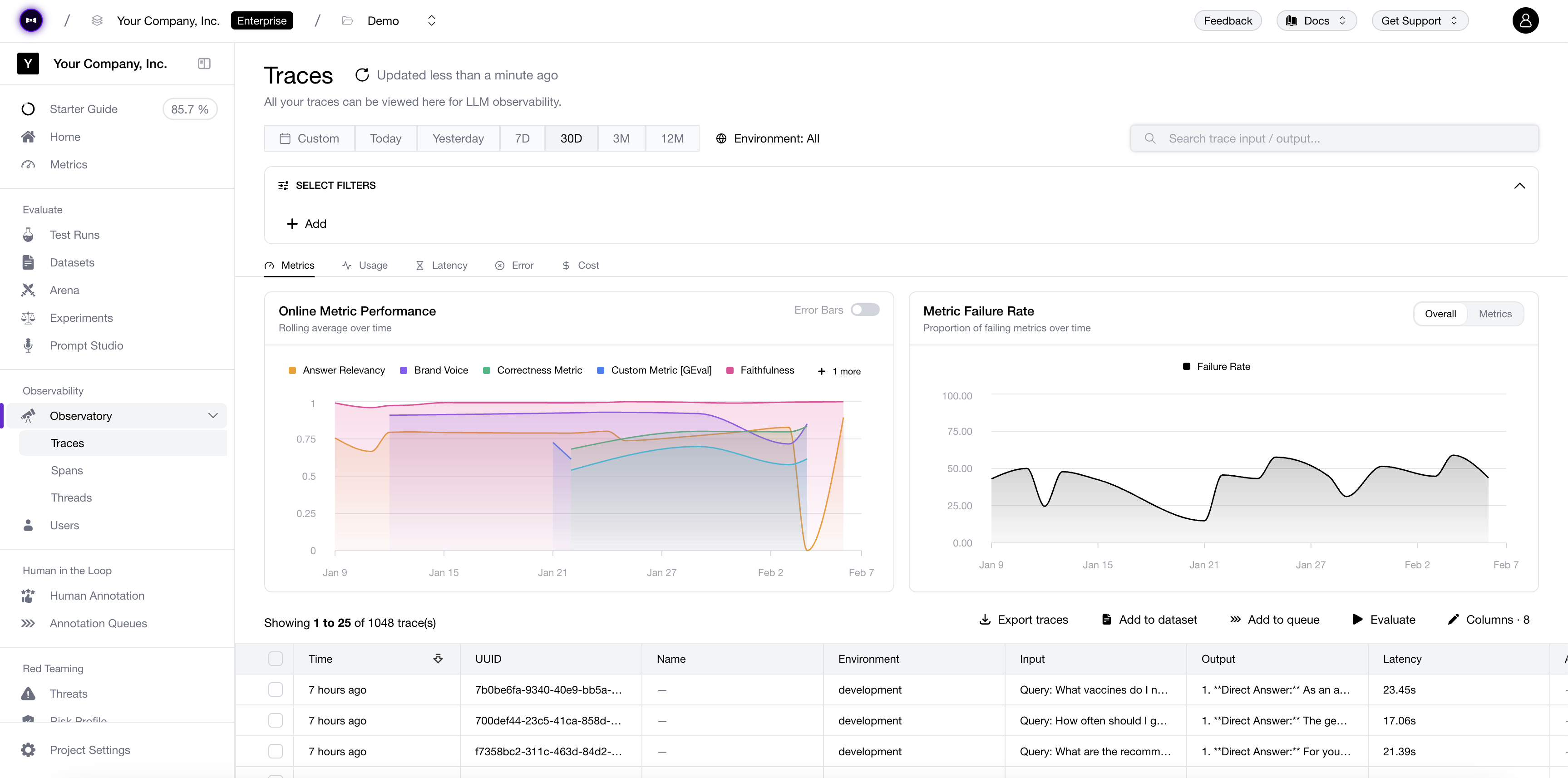

- Observatory Graphs - Finally, charts that slap. Visualize your observability data, spot trends before they spot you, and look like a genius in your next standup.

- Code Evals (Beta) - G-Eval couldn’t cut it? Write your own eval logic in code. We don’t judge. Okay, technically we do—that’s the whole point.

- Multimodal Arena - Let your vision-language models duke it out. Two models enter, one model leaves with bragging rights.

- AI Connection Upgrades - Tracing, list indexes in key paths, connection duplication, and max concurrency: the works. Your AI connections just got a glow-up.
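For a sense of what hand-written eval logic could look like, here is a small sketch of a deterministic check that scores an output by how many required keywords it mentions. The function name, its arguments, and the returned dictionary shape are all assumptions for illustration; the actual Code Evals interface may differ.

```python
def keyword_coverage(actual_output: str, required_keywords: list[str]) -> dict:
    """Score an output by the fraction of required keywords it contains (case-insensitive)."""
    text = actual_output.lower()
    missing = [kw for kw in required_keywords if kw.lower() not in text]
    # With no required keywords there is nothing to miss, so the score is 1.0.
    score = 1.0 - len(missing) / len(required_keywords) if required_keywords else 1.0
    return {"score": score, "passed": not missing, "missing": missing}


result = keyword_coverage("Refunds take 5 business days.", ["refund", "email"])
print(result)
```

Note that this uses plain substring matching, so "refund" also matches "Refunds"; a stricter eval would tokenize first.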

Changed

- Light Mode Out of Beta - Light mode is officially here to stay. Welcome to the bright side.

- Faster Observatory Dashboards - We gave our dashboards a double espresso. Load times are now unreasonably fast.

Scaling New Heights

Welcome to our brand new changelog! We’re kicking things off with better cost tracking, reliability improvements, and some serious scalability upgrades.

Changelog entries before this point are backfilled!

Added

- Changelog - You’re reading it! Subscribe to never miss a beat.

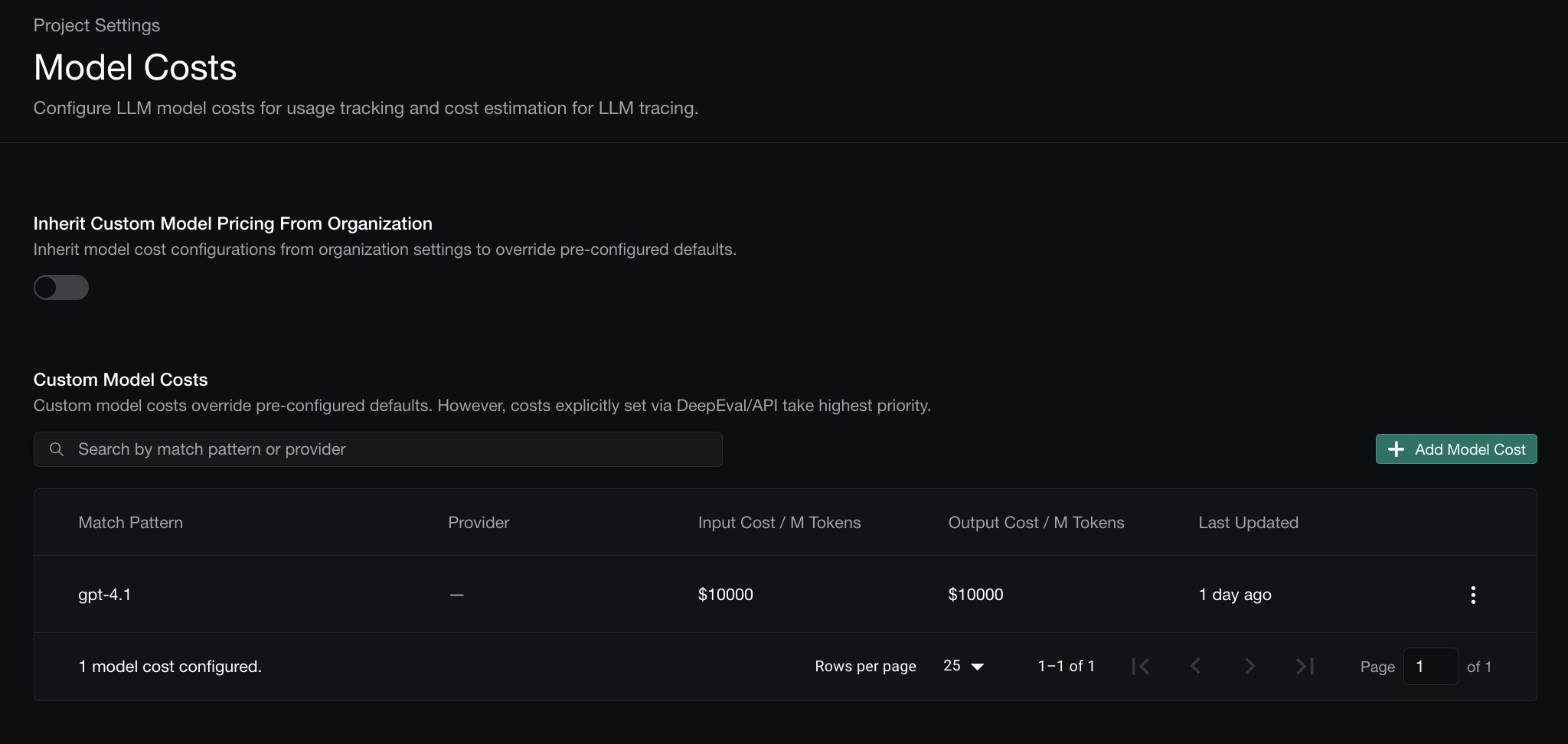

- Custom Model Costs - Set custom cost-per-token for any model in your project settings. Finally, accurate cost tracking for fine-tuned and self-hosted models.

- Request Timeout for AI Connections - Configure timeout limits for your LLM connections. No more hanging requests.

- High-Volume Trace Ingestion - We’ve beefed up our trace handling with buffered ingestion. Traffic spikes? Bring ’em on.
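The arithmetic behind a custom cost-per-token setting can be sketched as below. The function name, the separate input and output rates, and the example prices are assumptions made for illustration, not values or settings taken from the product.

```python
from decimal import Decimal


def trace_cost(input_tokens: int, output_tokens: int,
               input_cost_per_token: Decimal,
               output_cost_per_token: Decimal) -> Decimal:
    """Total cost = input tokens x input rate + output tokens x output rate."""
    return input_tokens * input_cost_per_token + output_tokens * output_cost_per_token


# Made-up rates for a hypothetical self-hosted model.
cost = trace_cost(1200, 300, Decimal("0.000002"), Decimal("0.000006"))
print(cost)
```

`Decimal` is used here instead of `float` so that very small per-token prices add up without binary rounding noise.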

Changed

- Smoother Experiment Runs - Real-time evaluation progress is now more reliable with improved streaming.

- Annotator Attribution - See who left that annotation. Credit where credit’s due.

- Faster Spans Loading - The spans tab now loads at lightning speed, even for trace-heavy projects.

Alert the Press, We’re Going Multimodal

Big week! We’re introducing alerts to keep you in the loop, shareable traces for collaboration, and multimodal support so your vision models don’t feel left out.

Added

- Public Trace Links - Share traces with anyone via a public link. Perfect for debugging with teammates or showing off to stakeholders.

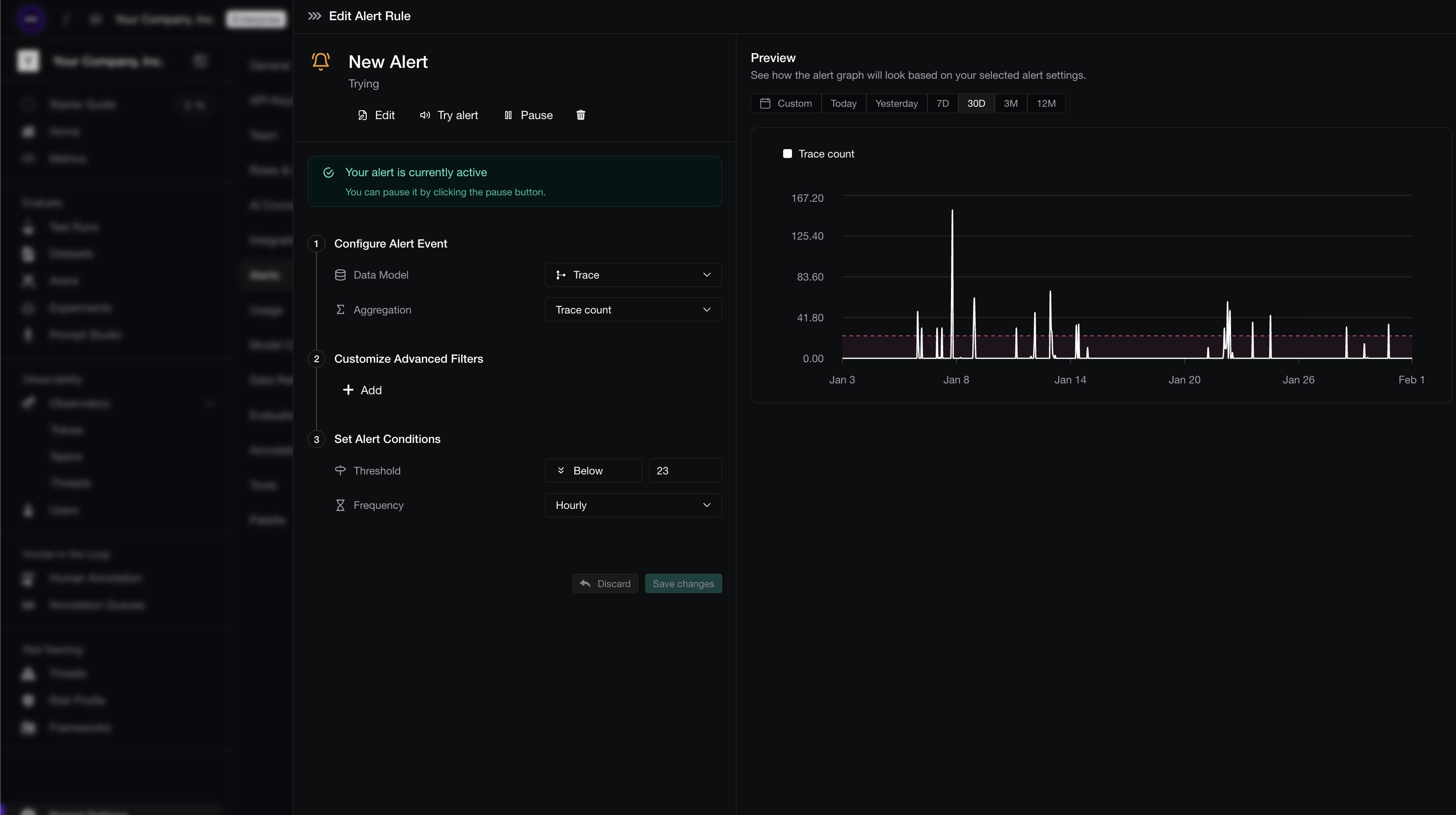

- Scheduled Alerts - Set thresholds, get notified. Never let a regression slip through unnoticed again.

- Multimodal Evaluations - Images + text? We can evaluate that now. Test your vision-language models with confidence.

- Evaluation Queue - Large eval jobs now queue up nicely instead of timing out. Go big or go home.

Changed

- Snappier Dashboards - Graphs load faster. Like, noticeably faster. You’re welcome.

On Cloud Nine

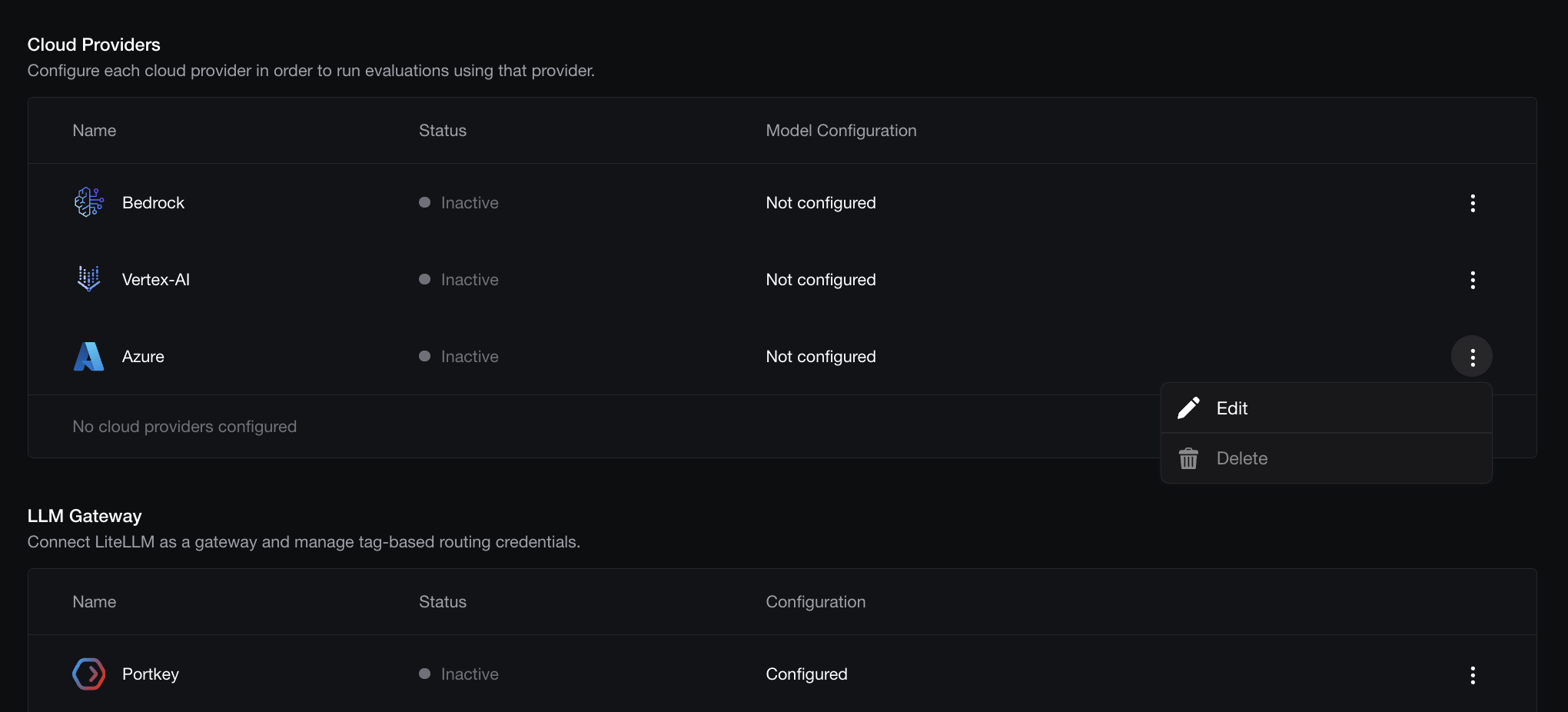

Azure fans, GCP enthusiasts—we see you. This week we’re bringing the clouds to Confident AI so you can evaluate using your own infrastructure.

Added

- Azure OpenAI Support - Connect your Azure deployment and run evals without leaving your cloud comfort zone.

- GCP Vertex AI Integration - Drop in your service account key and you’re off to the races with Google’s models.

- Top-K Filtering - Show me the top 10. Or bottom 5. Or whatever K your heart desires.
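Top-K (and bottom-K) selection is easy to sketch with Python's standard `heapq` module. The metric names and scores below are made-up sample data, and the helper names are hypothetical.

```python
import heapq

# Made-up sample data: metric name -> average score.
scores = {
    "faithfulness": 0.91,
    "relevancy": 0.78,
    "toxicity": 0.12,
    "bias": 0.05,
    "coherence": 0.84,
}


def top_k(items: dict[str, float], k: int) -> list[tuple[str, float]]:
    """Return the k highest-scoring (name, score) pairs, best first."""
    return heapq.nlargest(k, items.items(), key=lambda pair: pair[1])


def bottom_k(items: dict[str, float], k: int) -> list[tuple[str, float]]:
    """Return the k lowest-scoring (name, score) pairs, worst first."""
    return heapq.nsmallest(k, items.items(), key=lambda pair: pair[1])


print(top_k(scores, 2))
print(bottom_k(scores, 2))
```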

Changed

- Faster Dashboards - We optimized the heck out of our aggregation layer. Graphs now load before you finish your sip of coffee.

- Live Evaluation Progress - Watch your evals run in real-time with streaming progress updates. It’s oddly satisfying.

Dashing Into the New Year

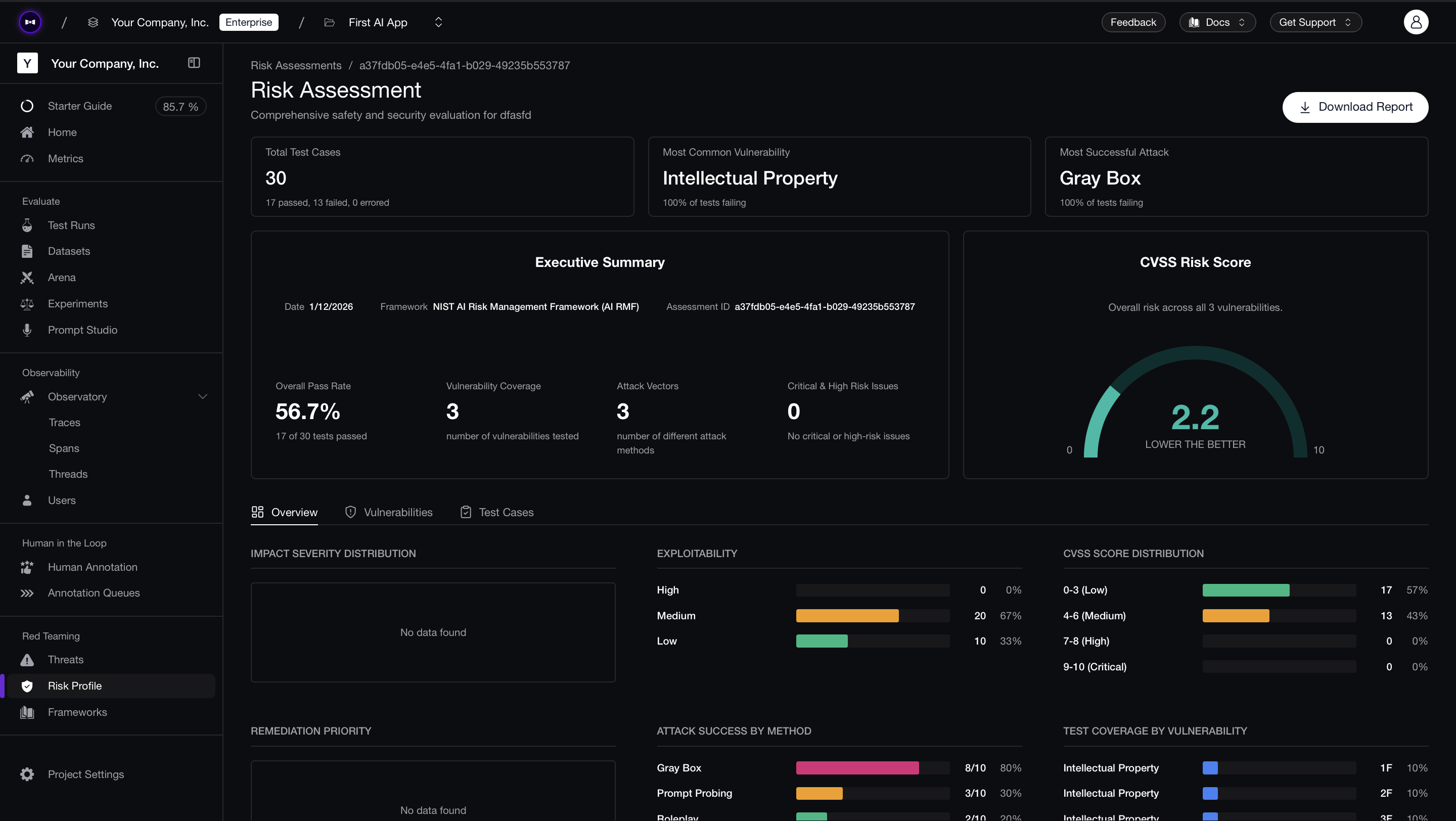

New year, new dashboards! We’ve redesigned how you visualize your LLM performance with customizable views and smarter breakdowns. And while you’re at it, you can now take your security insights with you as PDF reports.

Added

- Custom Dashboards - Build your own views. Save them. Make them yours. Finally, analytics that fit how you work.

- Dimension Breakdowns - Slice and dice by model, environment, or any dimension. Compare apples to apples (or GPT-4 to Claude).

- Risk Assessment Reports (PDF) - Generate custom risk assessment reports from your red teaming runs and download them as shareable PDFs. Perfect for reviews, audits, and internal security discussions. (Keep it confidential)

Changed

- Fresh Dashboard Layout - Everything’s been reorganized for better flow. Less clicking, more insights.

- Readable Timestamps - Dates and times now look like actual dates and times. Revolutionary, we know.

I See What You Did There

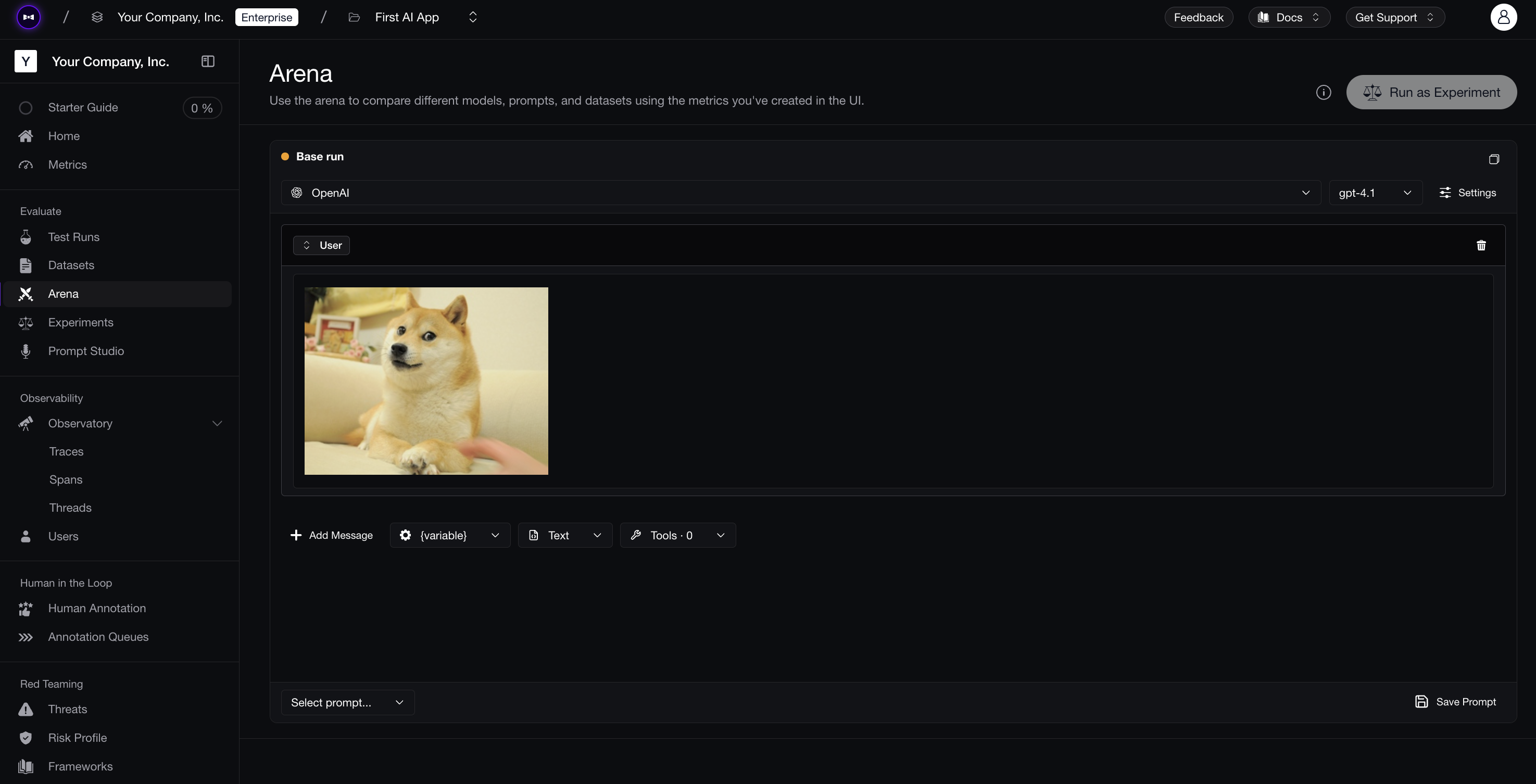

Happy New Year! We’re kicking off 2026 with a vision—literally. Multimodal evaluation is here, and your image-understanding models are about to get the testing they deserve.

Added

- Multimodal Prompts - Drop images into your experiments. Test GPT-4V, Claude 3, Gemini, or whatever vision model you’re building with.

- Multimodal Test Cases - Build datasets with images + text. Because modern AI isn’t just about words anymore.

Boxing Day Unboxing

Hope you had a great holiday! We kept it light this week, but still snuck in some dashboard goodies for you to unwrap.

Added

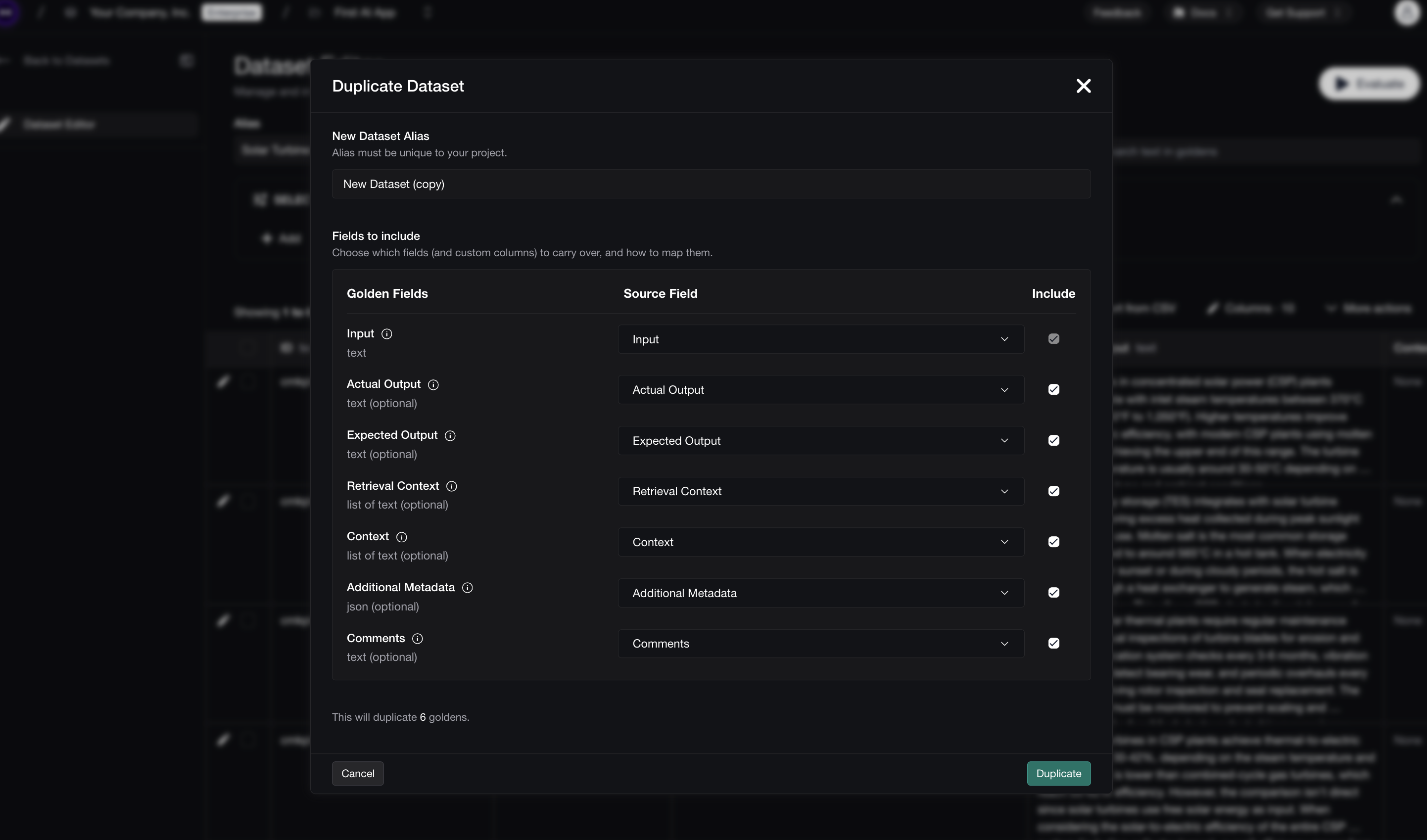

- Duplicate Datasets - Clone any dataset with one click. Perfect for creating variations or backing up before big changes.

- Better Invitation UX - Accepting team invitations is now smoother. New users get a clear onboarding flow instead of a confusing redirect.

Changed

- Dashboard Reorganization - Dashboards now live under Home for easier navigation. One less click to your metrics.

- Multiple Dashboards - Create different views for different needs. One for prod, one for staging, one for “what happened last night?”

Compare and Contrast

This week is all about perspective. New comparison features let you see how your models stack up—across time, segments, or whatever you want to measure.

Added

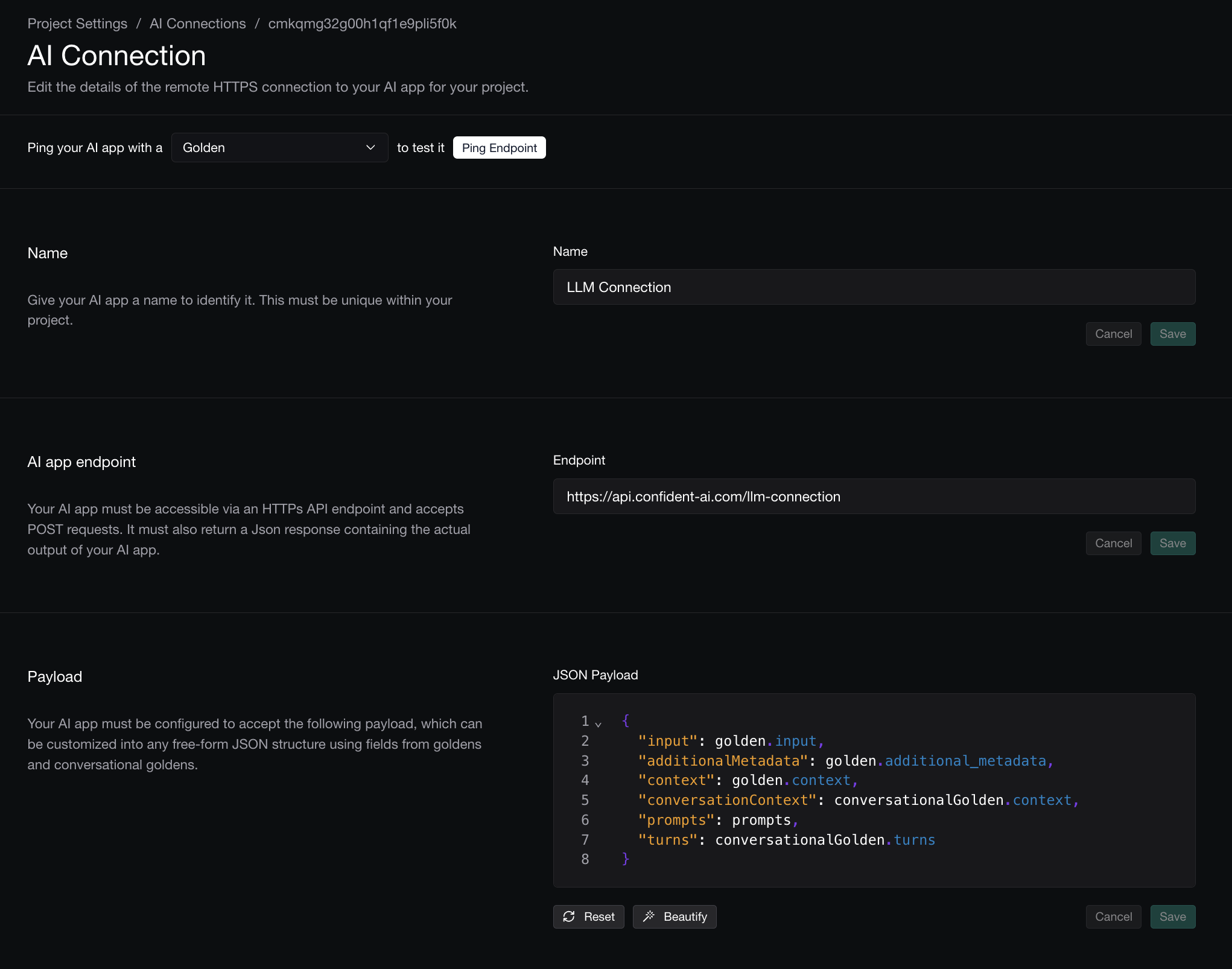

- Custom AI Connection Payloads - Send custom parameters with your AI connections. Temperature, max tokens, stop sequences—whatever your model needs.

- Comparison Mode - Put two time periods side-by-side. See exactly what changed and when. Debugging regressions just got easier.

- Filter Presets - Save your favorite filter combos. One click to your most-used views.
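To picture what a custom AI connection payload amounts to, the sketch below merges user-supplied parameters over a set of defaults before a request is sent. The `build_payload` helper, the default values, and the field names (`temperature`, `max_tokens`, `stop`) are assumptions borrowed from common LLM API conventions, not the product's actual request schema.

```python
def build_payload(prompt: str, custom_params: dict) -> dict:
    """Merge custom parameters over hypothetical defaults; custom values win."""
    payload = {"prompt": prompt, "temperature": 1.0, "max_tokens": 256}
    payload.update(custom_params)
    return payload


payload = build_payload(
    "Summarize this ticket.",
    {"temperature": 0.2, "stop": ["\n\n"]},
)
print(payload)
```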