I want you to meet Johnny. Johnny’s a great guy — LLM engineer, did MUN back in high school, valedictorian, graduated summa cum laude. But Johnny had one problem at work: no matter how hard he tried, he couldn’t get his manager to care about LLM evaluation.

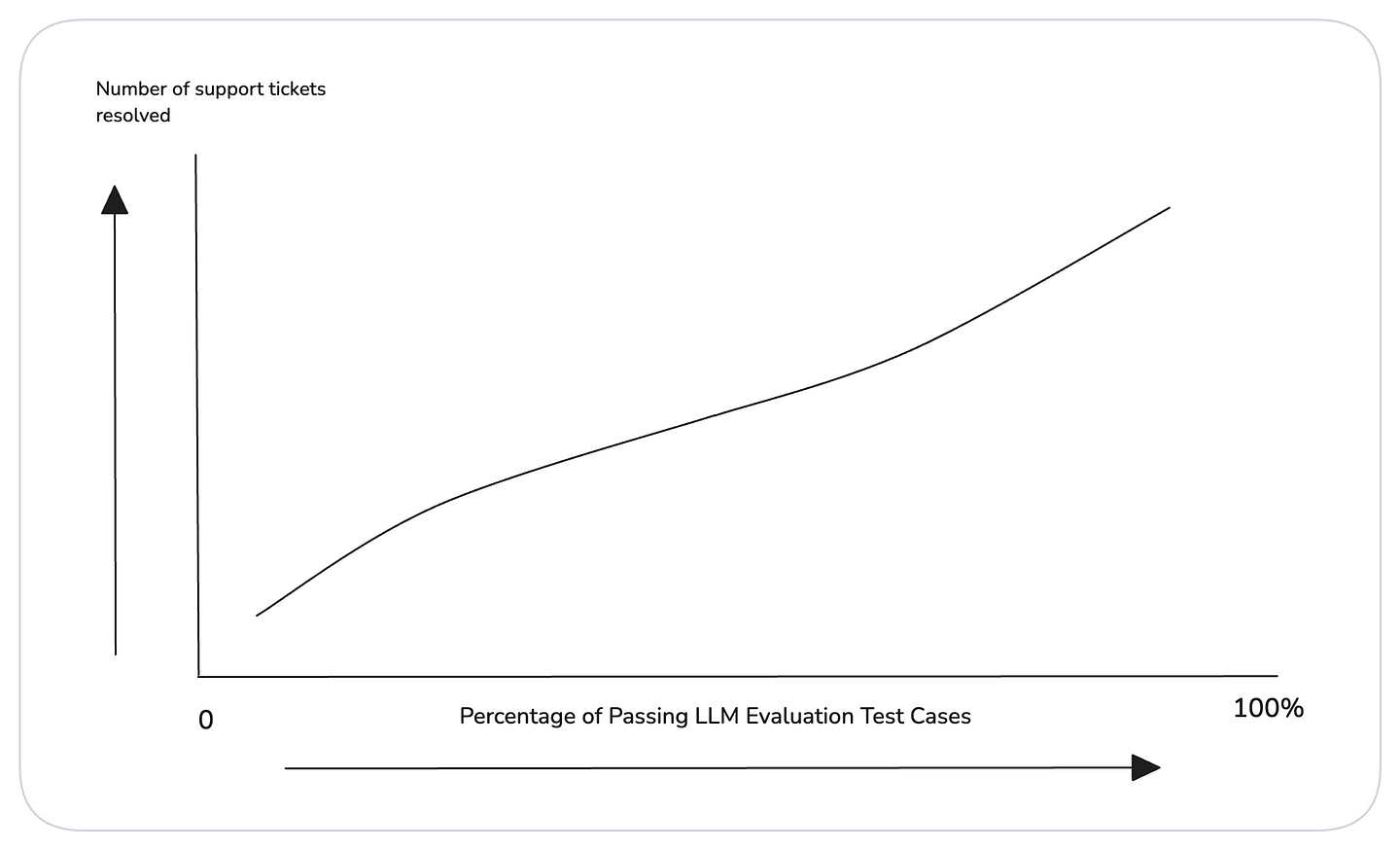

Imagine being able to say, “This new version of our LLM support chatbot will increase customer ticket resolutions by 15%,” or “This RAG QA’s going to save 10 hours per week per analyst starting next sprint.” That was Johnny’s dream — using LLM evaluation results to forecast real-world impact before shipping to production.

But like most dreams, Johnny’s fell apart too.

Johnny's problem isn't unique. Across the industry, LLM eval efforts are disconnected from business outcomes. Teams run evaluations, hit 80% pass rates, and still can't answer: "So what?"

Most evaluation efforts fail because:

The metrics don’t work: they aren’t reliable, meaningful, or aligned with your use case.

Even when the metrics work, they don’t map to a business KPI, so you can’t connect the scores to real-world outcomes.

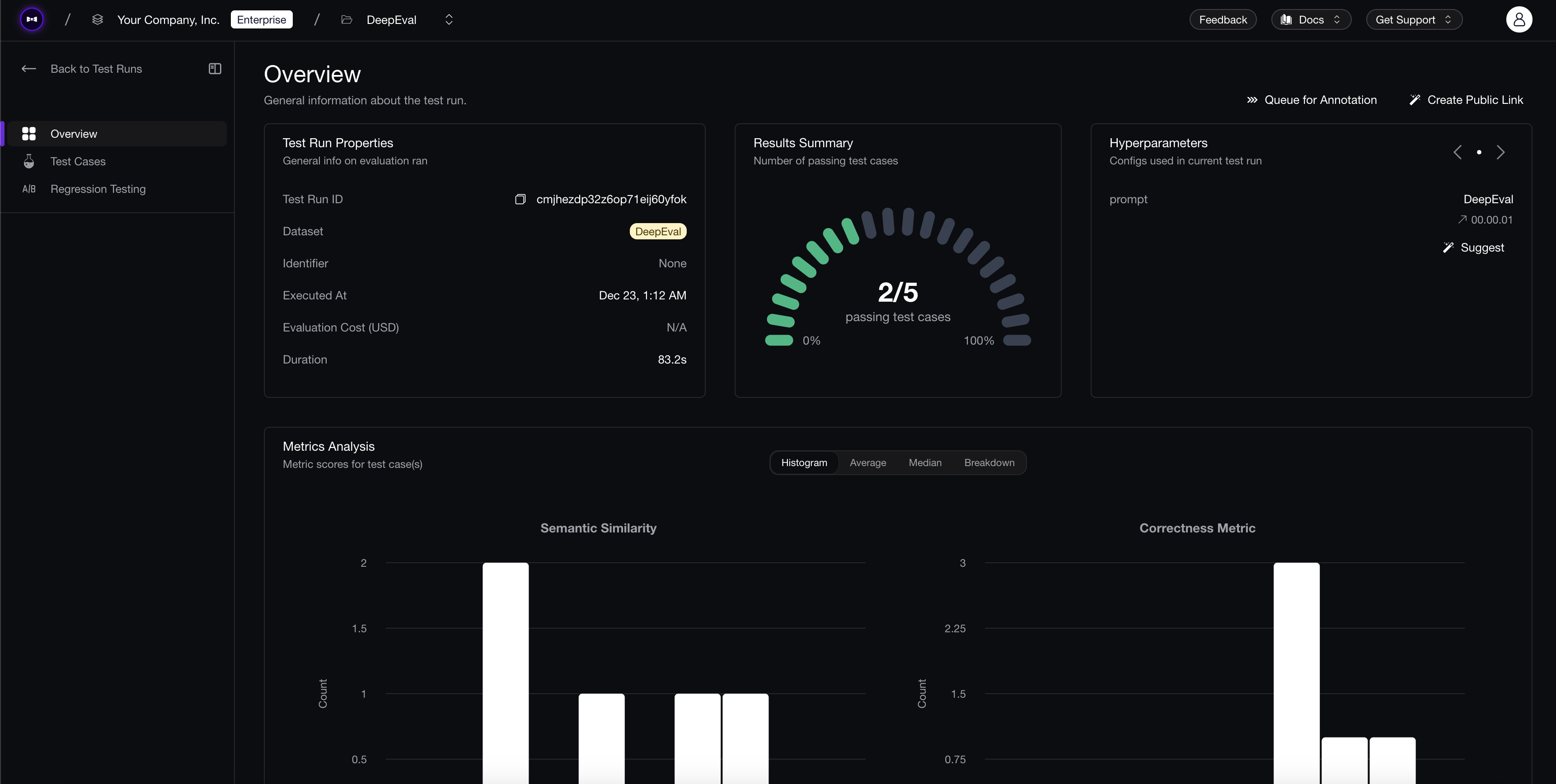

This LLM evaluation playbook is about fixing that. By the end, you’ll know how to design an outcome-based LLM testing process that drives decisions, and you’ll be able to say with confidence, “Our pass rate just jumped from 70% to 85%, which means we’re likely to cut support tickets by 20% once this goes live.” That way, your engineering sprint goals can become as simple as optimizing a metric.
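Here's a minimal sketch of the arithmetic behind a statement like that, assuming you've logged the end-to-end pass rate and a KPI (weekly support tickets) for a few past releases. It first checks the Pearson correlation between the two series and only then fits a straight line to forecast the KPI at a new pass rate. The release history, the 0.8 correlation cutoff, and the function names are hypothetical, and a linear fit over a handful of releases is one simple choice, not a method this playbook prescribes.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


def forecast_kpi(pass_rates: list[float], kpis: list[float],
                 new_pass_rate: float, min_r: float = 0.8) -> float:
    """Forecast the KPI at a new pass rate; refuse if history shows weak correlation."""
    r = pearson(pass_rates, kpis)
    if abs(r) < min_r:
        raise ValueError(f"pass rate is not a reliable predictor here (r={r:.2f})")
    slope, intercept = fit_line(pass_rates, kpis)
    return slope * new_pass_rate + intercept


# Hypothetical history: end-to-end pass rate vs. weekly support tickets per release.
history_pass_rates = [0.25, 0.40, 0.55, 0.70]
history_tickets = [1600, 1400, 1200, 1000]

print(round(forecast_kpi(history_pass_rates, history_tickets, 0.85)))
```

With this made-up, perfectly linear history, moving from 0.70 to 0.85 prints 800, a 20% cut from 1,000 weekly tickets. Real histories will be noisier, and 0.85 lies outside the observed range, so the forecast is an extrapolation; treat the correlation check as a gate, not a guarantee.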

You’ll learn:

What LLM evaluation is, why 95% of LLM evaluation efforts fail, and how to avoid pointless LLM evals.

How to connect LLM evaluation results to production impact, so your team can forecast improvements in user satisfaction, cost savings, or other KPIs before shipping.

How to build an outcome-driven LLM evaluation process, including curating the right dataset, choosing meaningful metrics, and setting up a reliable testing workflow.

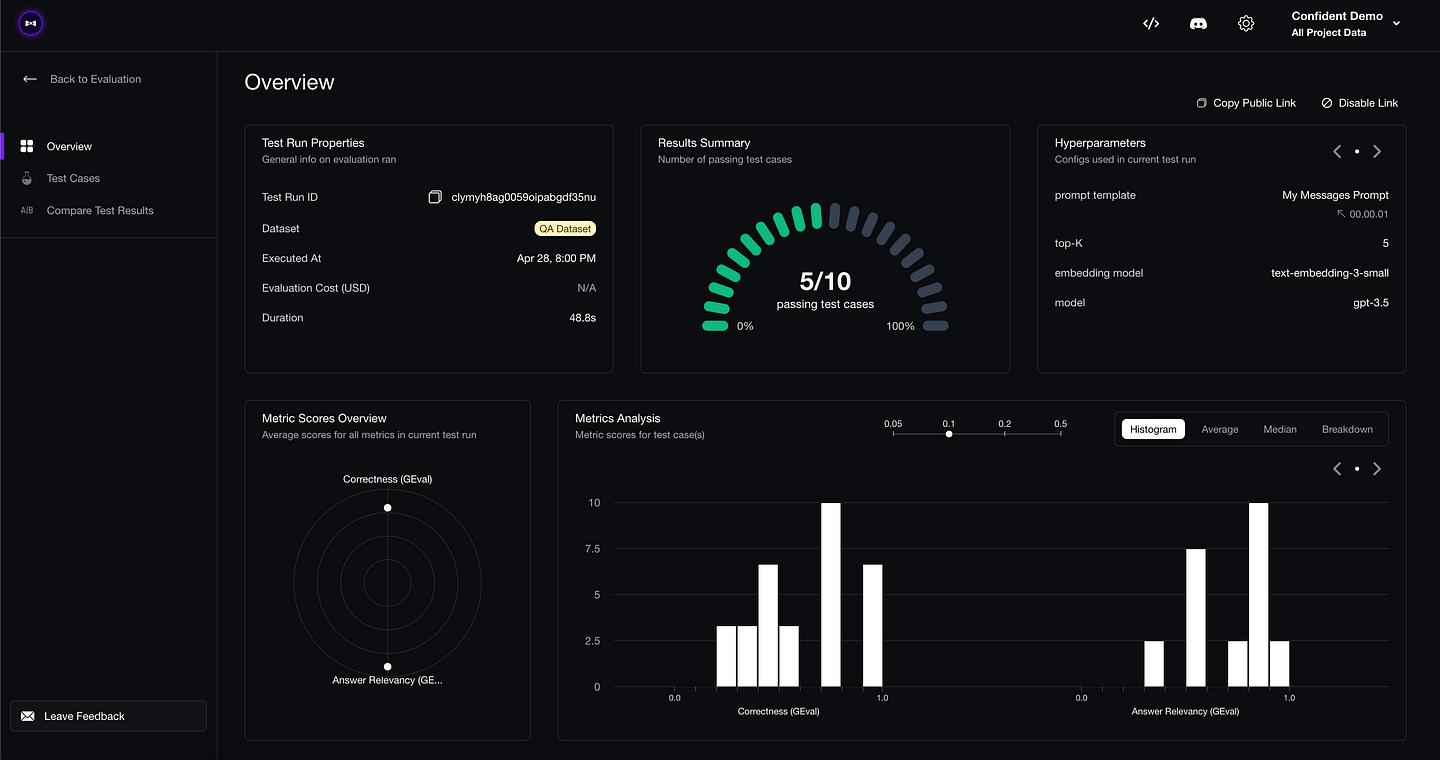

How to create a production-grade testing suite using DeepEval to scale LLM evaluation, but only after you've aligned your metrics.

I'll also include code samples you can act on.

TL;DR

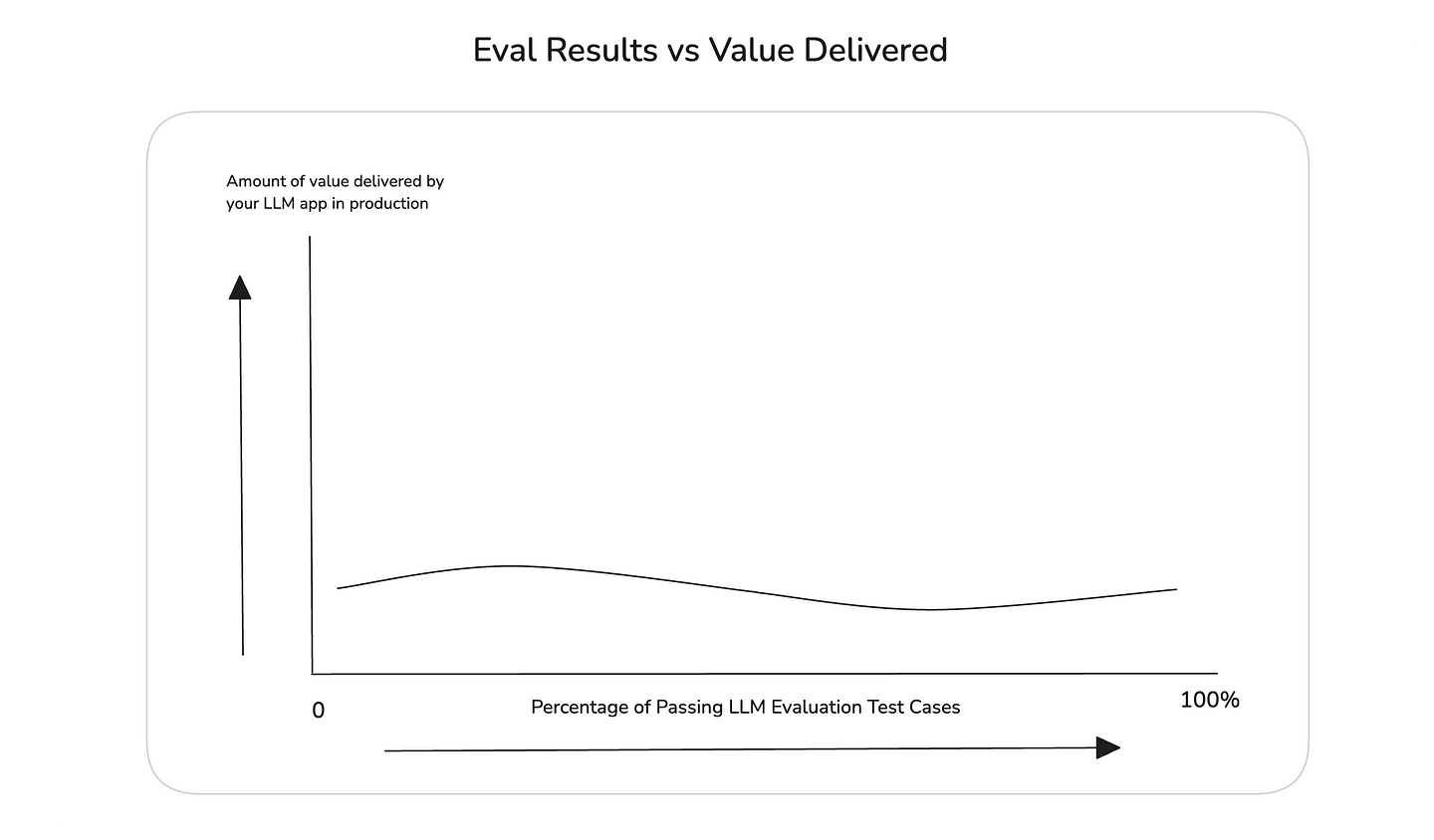

The problem: Most LLM evals fail because they don't correlate with measurable business outcomes. Teams optimize metrics that don't predict production impact.

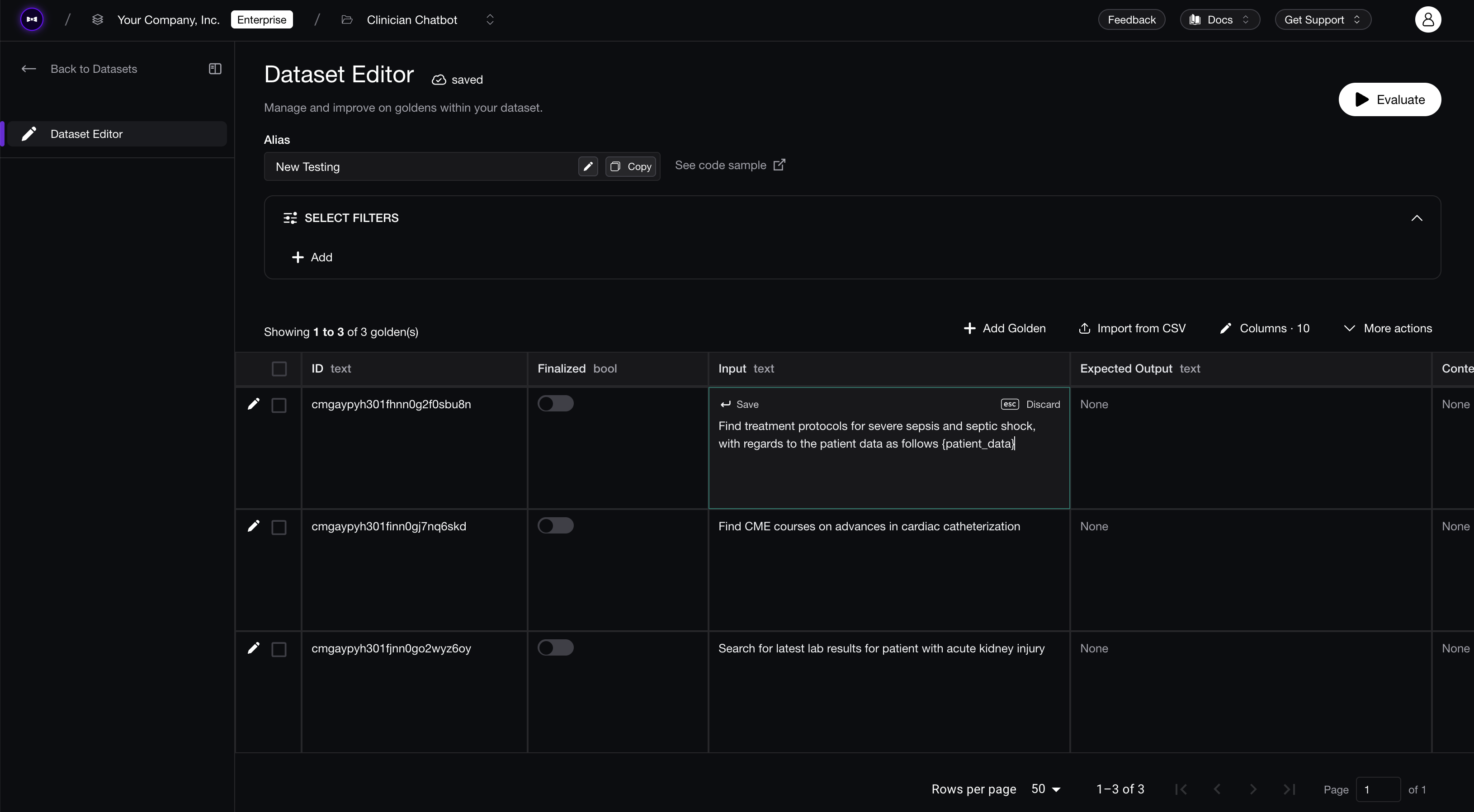

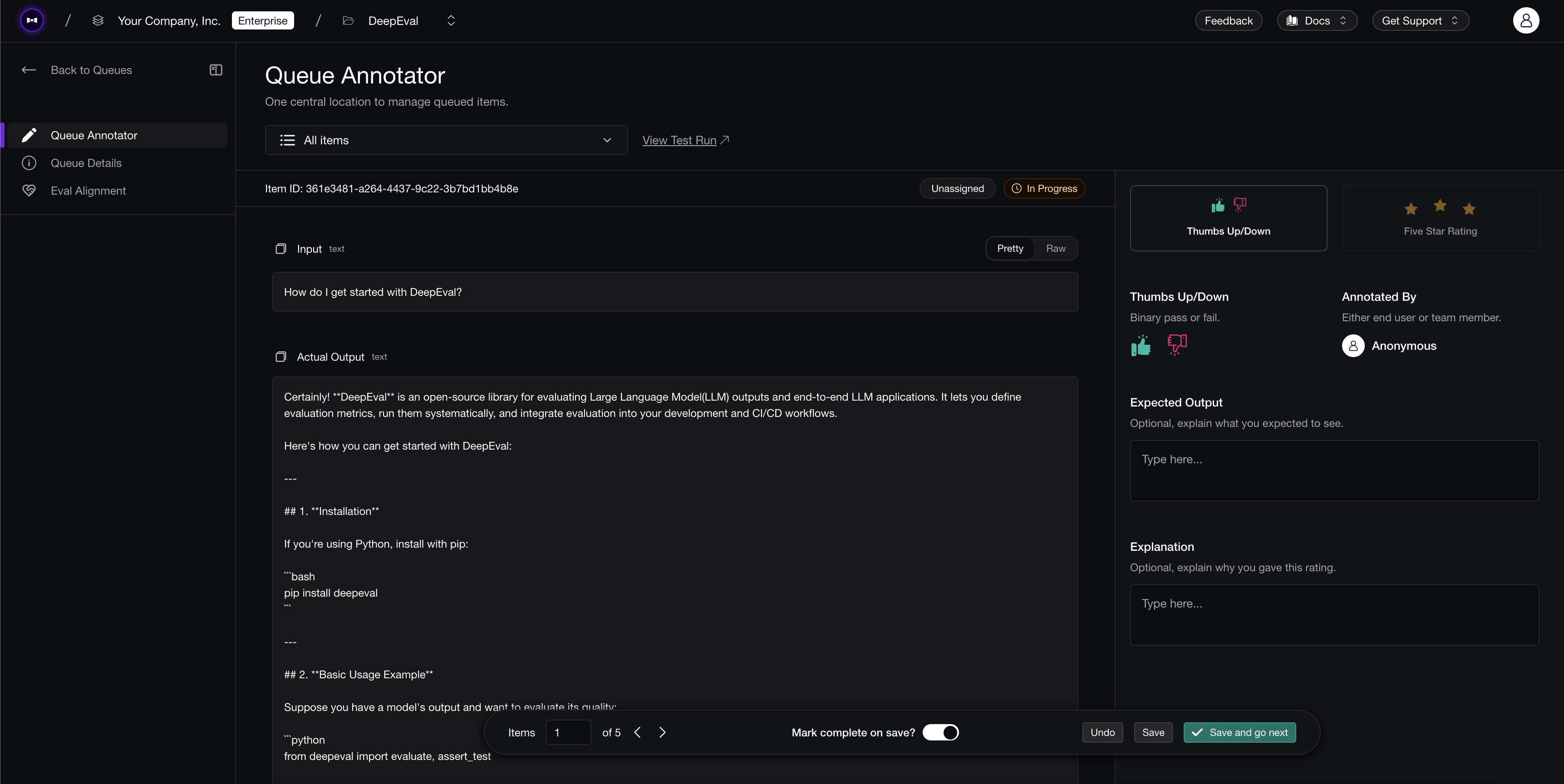

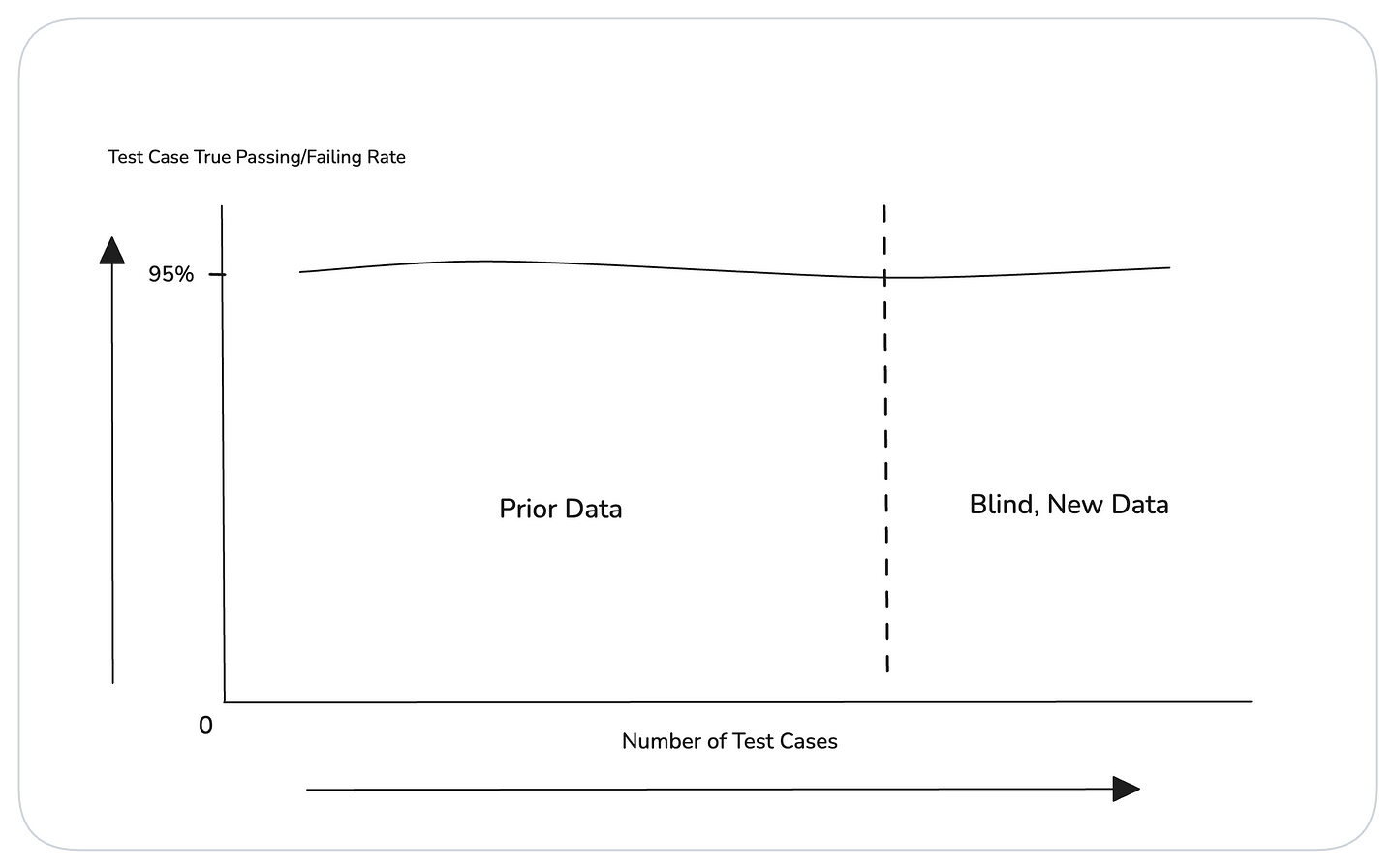

The fix: Curate 25-50 human-labeled test cases with "good" vs "bad" outcomes (not expected metric scores). Then align your evaluation metrics so test pass rates correlate with real-world KPIs.
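One simple way to check whether a metric is "aligned" is to compare its pass/fail verdicts against your human good/bad labels. The sketch below assumes each labeled test case carries a 0-1 score from the metric you're tuning; it measures the agreement rate at a given threshold and sweeps a few thresholds to find the best one. The `LabeledCase` structure, the scores, and the plain agreement criterion are illustrative assumptions; with imbalanced labels you may prefer precision/recall or Cohen's kappa.

```python
from dataclasses import dataclass


@dataclass
class LabeledCase:
    """Hypothetical record: one test case scored by a metric and labeled by a human."""
    metric_score: float  # score in [0, 1] from the metric being aligned
    human_good: bool     # True if a reviewer judged the real-world outcome "good"


def agreement(cases: list[LabeledCase], threshold: float) -> float:
    """Fraction of cases where 'metric passes' matches the human 'good' label."""
    matches = sum((c.metric_score >= threshold) == c.human_good for c in cases)
    return matches / len(cases)


def best_threshold(cases: list[LabeledCase],
                   candidates: list[float]) -> tuple[float, float]:
    """Return (threshold, agreement) with the highest agreement among candidates."""
    return max(((t, agreement(cases, t)) for t in candidates), key=lambda p: p[1])


# Hypothetical labeled cases; in practice you'd curate 25-50 of these.
labeled_cases = [
    LabeledCase(0.92, True), LabeledCase(0.81, True), LabeledCase(0.74, True),
    LabeledCase(0.66, False), LabeledCase(0.61, False), LabeledCase(0.52, True),
    LabeledCase(0.41, False), LabeledCase(0.22, False),
]

threshold, score = best_threshold(labeled_cases, [0.3, 0.5, 0.7, 0.9])
print(f"best threshold={threshold}, agreement={score:.0%}")
```

If even the best threshold agrees poorly with your labels, that's a signal to change the metric itself (its criteria or evaluation steps) rather than to keep nudging the threshold.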

Timeline: 1-8 weeks to establish metric-outcome fit. Don't scale beyond 100 test cases initially.

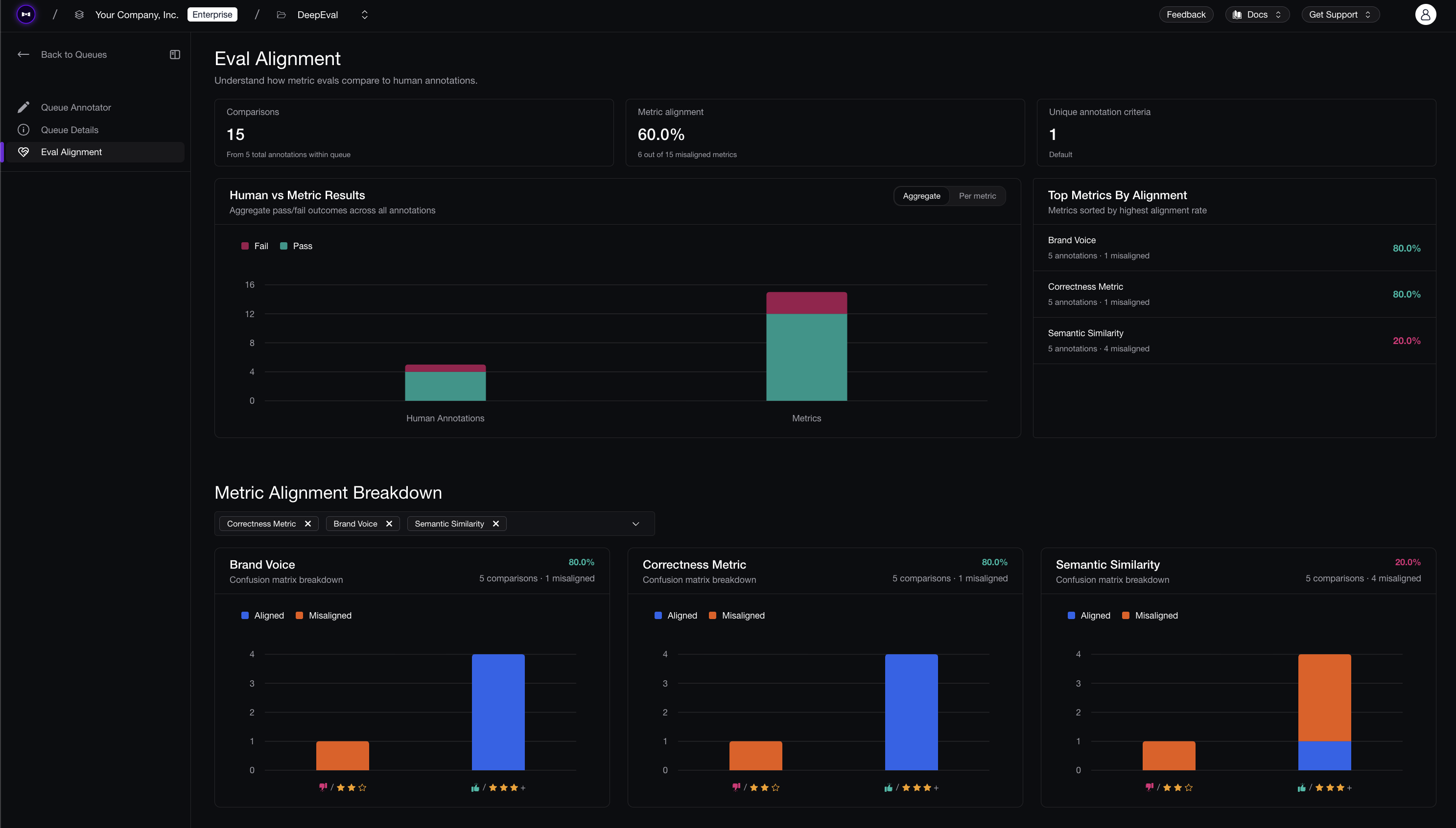

Tools: DeepEval (100% open source ⭐ https://github.com/confident-ai/deepeval) lets you implement aligned metrics in 5 lines of code, while platforms like Confident AI help you validate metric-outcome fit and provide the collaboration and tracking infrastructure for scaling evals across your team.

What Are LLM Evals and Why Do 95% of Them Fail?

There's good news and bad news: LLM evals work — but only if you build them correctly. And 95% of teams don't.

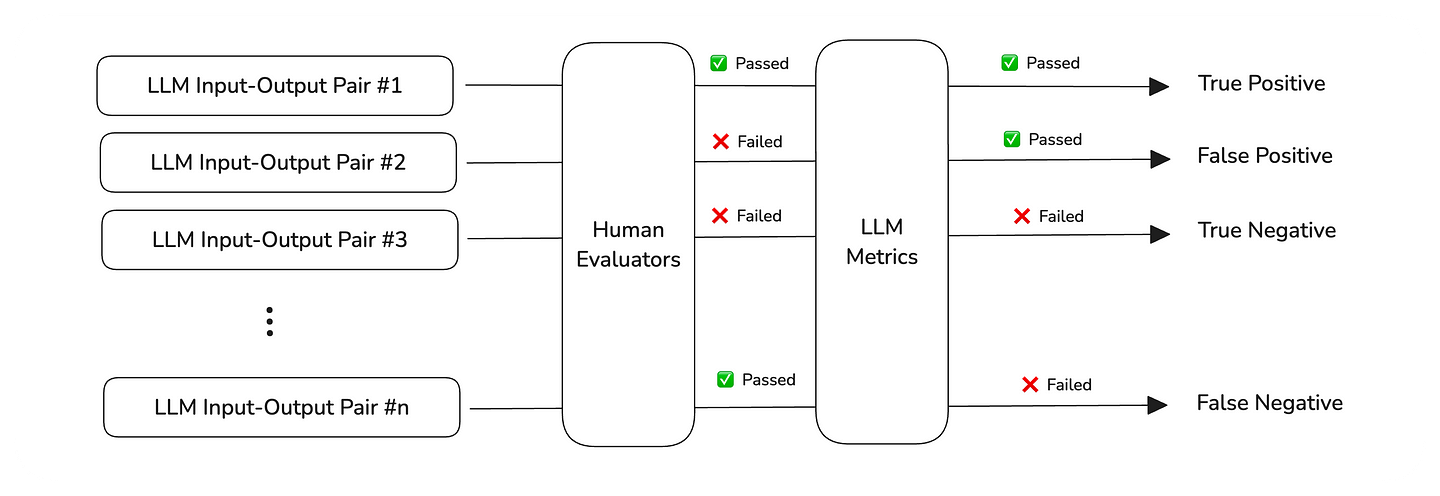

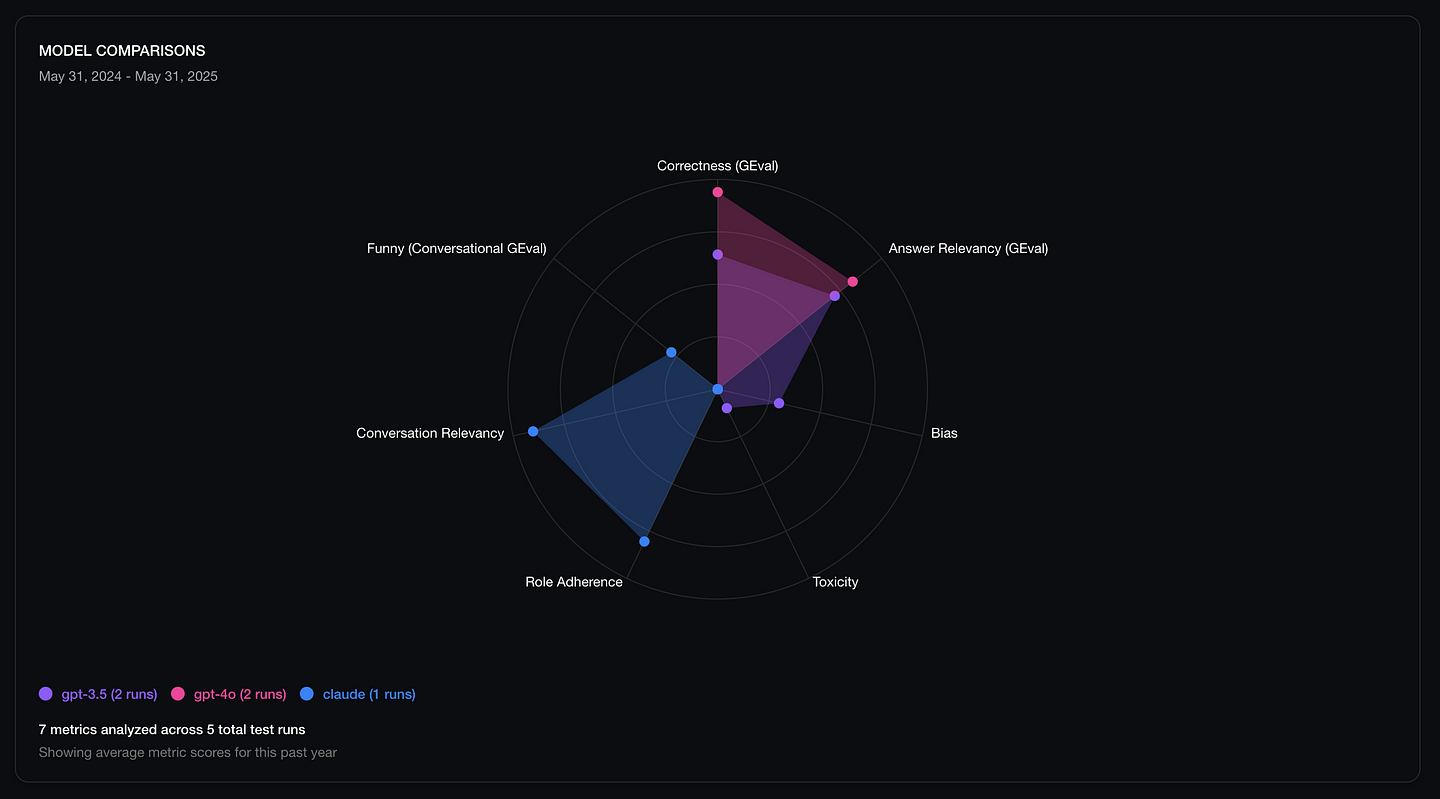

LLM evaluation (often called "evals") is the process of systematically testing Large Language Model applications using metrics like answer relevancy, G-Eval, task completion, and similarity. The core idea is straightforward: define diverse test cases covering your use case, then use metrics to determine how many pass when you tweak prompts, models, or architecture.
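To make "how many pass" concrete, here's a minimal sketch that compares two prompt versions over the same test cases. It assumes each case already has a 0-1 score from some metric (answer relevancy, G-Eval, and so on); the 0.5 threshold, the case IDs, and the scores are hypothetical. Besides the pass rate, it lists regressions, the cases that passed before the change and fail after it.

```python
def pass_rate(scores: dict[str, float], threshold: float = 0.5) -> float:
    """Fraction of test cases whose metric score meets the threshold."""
    return sum(s >= threshold for s in scores.values()) / len(scores)


def regressions(before: dict[str, float], after: dict[str, float],
                threshold: float = 0.5) -> list[str]:
    """IDs of test cases that passed before a change but fail after it."""
    return sorted(cid for cid, s in before.items()
                  if s >= threshold and after[cid] < threshold)


# Hypothetical metric scores per test case ID for two prompt versions.
prompt_v1 = {"refund": 0.8, "shipping": 0.4, "password": 0.7, "billing": 0.3}
prompt_v2 = {"refund": 0.9, "shipping": 0.6, "password": 0.45, "billing": 0.7}

print(pass_rate(prompt_v1), pass_rate(prompt_v2))
print(regressions(prompt_v1, prompt_v2))
```

Here the pass rate rises from 0.5 to 0.75, yet the `password` case regressed, which is exactly the kind of detail a single aggregate number hides.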

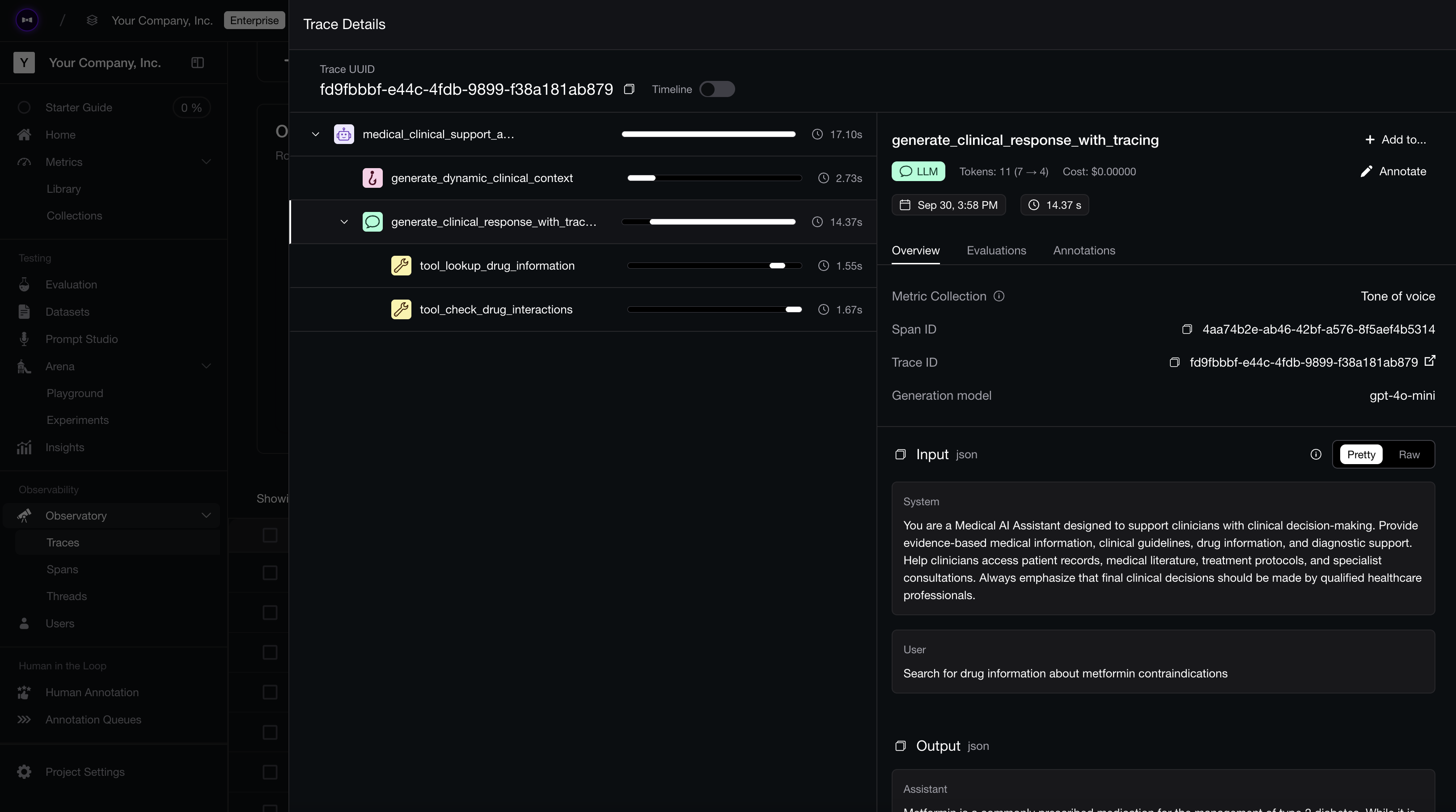

Here’s what a test case, which evaluates a single LLM interaction, looks like:

![LLM test case](https://images.ctfassets.net/otwaplf7zuwf/5u1uXQOZ1jWFb7yciTZUse/8144195009da4aa859383bd0448ee68a/image.png)

A test case contains the input to your LLM application, the “actual output” it generated from that input, and optional parameters such as the retrieval context in a RAG pipeline or reference-based parameters like the expected output, which represents the labelled target output. But fixing the process isn’t as simple as defining test cases and choosing metrics.
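The fields of a test case described above can be sketched as a small record. This is a dependency-free stand-in for illustration: DeepEval's own `LLMTestCase` class (in `deepeval.test_case`) uses the same field names, but the `EvalTestCase` class and its `is_reference_based` helper here are hypothetical, as is the refund example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalTestCase:
    """Hypothetical stand-in for one LLM interaction; field names mirror DeepEval's LLMTestCase."""
    input: str                                     # what the user sent to your LLM app
    actual_output: str                             # what your app generated for that input
    expected_output: Optional[str] = None          # labelled target output, if you have one
    retrieval_context: Optional[list[str]] = None  # chunks a RAG pipeline retrieved, if any

    def is_reference_based(self) -> bool:
        """True when the case carries a labelled target output to compare against."""
        return self.expected_output is not None


case = EvalTestCase(
    input="Can I get a refund after 30 days?",
    actual_output="Refunds are available within 30 days of purchase.",
    expected_output="No, refunds are only available within 30 days.",
    retrieval_context=["Our refund window is 30 days from the date of purchase."],
)
print(case.is_reference_based())
```

Keeping the optional fields optional lets the same structure hold both reference-based cases (with an expected output) and reference-free ones (input and actual output only).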

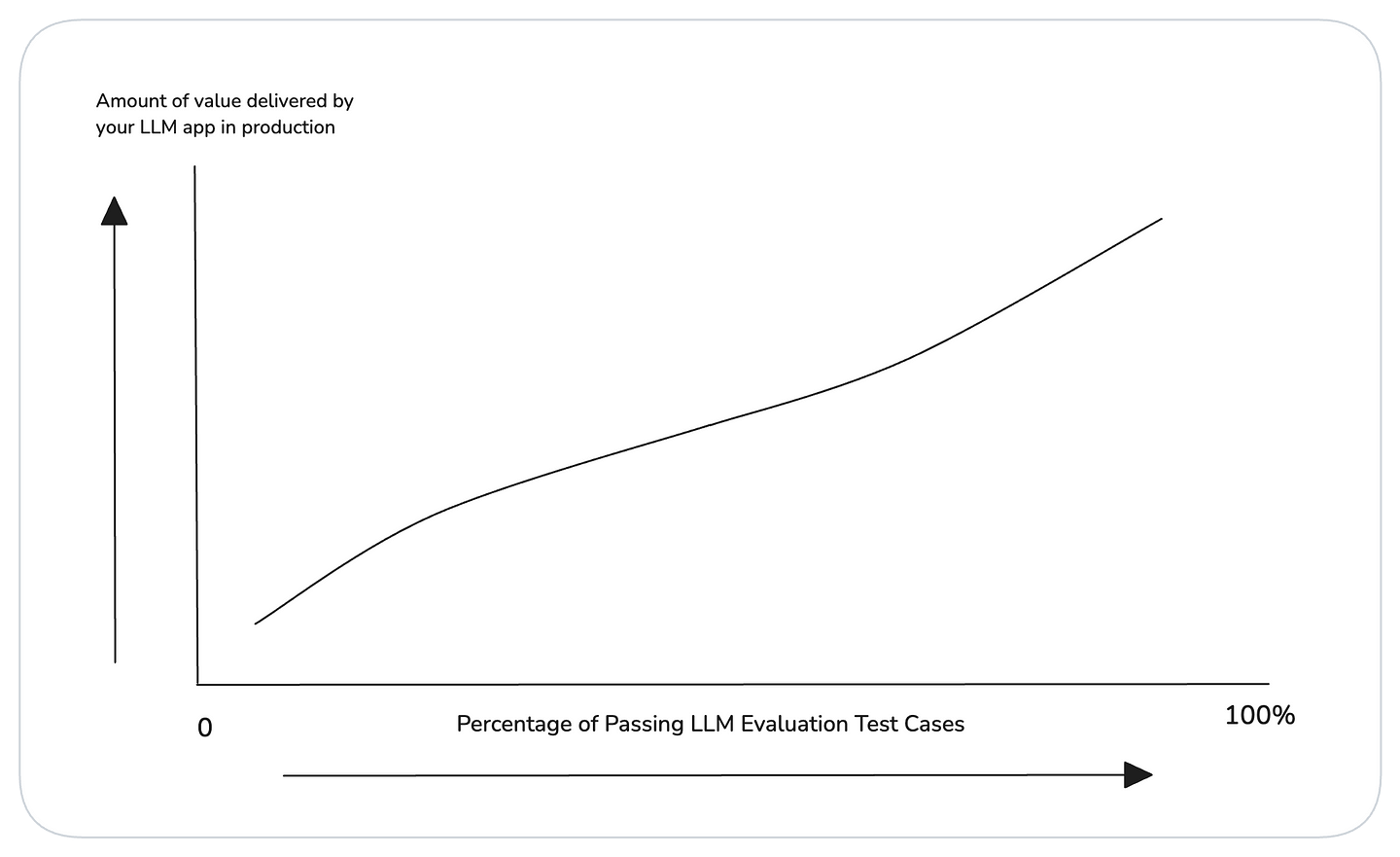

Here's why LLM evals feel broken: they don't predict production outcomes. You can't point to improved test results and confidently say they'll drive measurable ROI. Without that connection, there's no clear direction for improvement.

To address this, let’s look at the two modes of evaluation and why focusing on end-to-end evaluation is key to staying aligned with your business goals.

Component-Level vs End-to-End Evaluation

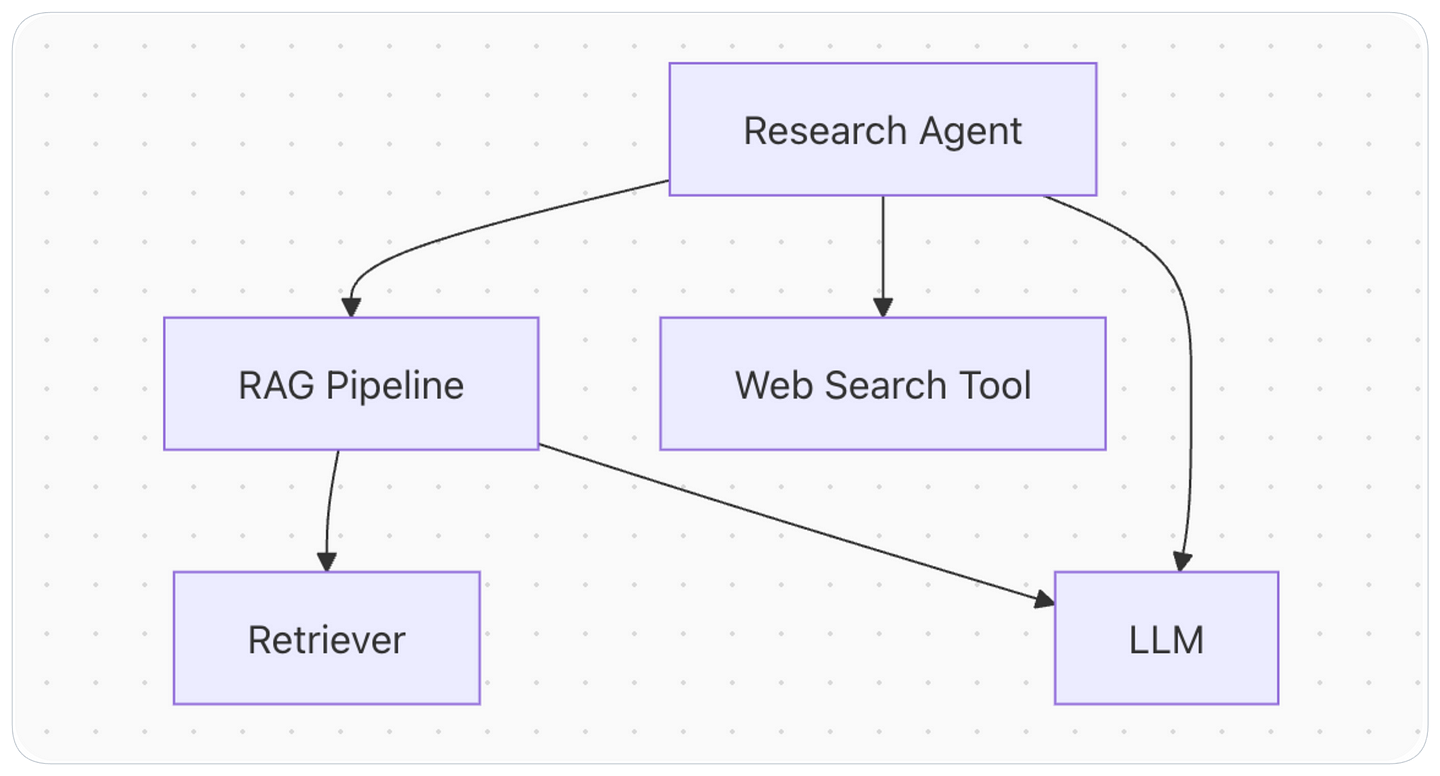

LLM applications, especially with the rise of agentic workflows, are complex. Many interacting components are potential candidates for evaluation: embedding models interact with LLMs in a RAG pipeline, agents may have sub-agents, each with their own tools, and so on. For those interested, we've written a whole other piece on evaluating AI agents.

But for our objective of making LLM evaluation meaningful, we ought to focus on end-to-end evaluation instead, because that’s what users see.

End-to-end evaluation involves assessing the overall performance of your LLM application by treating it as a black box — feeding it inputs and comparing the generated outputs against expectations using chosen metrics. We’re focusing on end-to-end evaluation not because it’s simpler, but because these results are the ones that actually correlate with business KPIs.
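Treating the application as a black box means the evaluation loop only needs a callable that maps an input to an output. Here's a minimal sketch under that assumption: `support_bot` is a hypothetical stand-in for your full chatbot or agent, and the toy exact-match metric stands in for metrics like answer relevancy or G-Eval (exact match is rarely a good choice for free-form LLM output).

```python
from typing import Callable


def exact_match_metric(actual: str, expected: str) -> float:
    """Toy metric: 1.0 if the normalized strings match, else 0.0."""
    return float(actual.strip().lower() == expected.strip().lower())


def evaluate_end_to_end(
    app: Callable[[str], str],
    cases: list[tuple[str, str]],
    metric: Callable[[str, str], float],
    threshold: float = 0.5,
) -> float:
    """Return the pass rate, inspecting only the app's inputs and outputs."""
    passed = 0
    for user_input, expected in cases:
        actual = app(user_input)  # call the whole application; its internals stay hidden
        if metric(actual, expected) >= threshold:
            passed += 1
    return passed / len(cases)


def support_bot(question: str) -> str:
    """Hypothetical app under test with a single canned answer."""
    canned = {"where is my order?": "Check the tracking link in your email."}
    return canned.get(question.lower(), "Sorry, I don't know.")


support_cases = [
    ("Where is my order?", "Check the tracking link in your email."),
    ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
]
print(evaluate_end_to_end(support_bot, support_cases, exact_match_metric))
```

Because the harness never reaches inside `app`, you can swap the model, prompt, retriever, or agent architecture and compare pass rates on exactly the same footing.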

Think about it: how could improving the "performance" of a nested RAG component inside your AI agent, on its own, explain a 15% increase in support ticket resolutions? It can't, which is why this framework focuses on end-to-end LLM evals.
