AI agents are complicated: agents calling tools, tools invoking agents, agents as swarms of agents, and swarms of agents calling on other swarms of agents. It sounds confusing just typing it out.

“AI agents” is a broad term that refers to many things nowadays. It can refer to single-turn autonomous agents that run in the background, similar to cron jobs; multi-turn conversational agents that resemble RAG-based chatbots; and voice AI agents, which are conversational agents with a voice component. And don’t forget AI agents that are part of a larger agentic AI system.

Unfortunately, AI agents also have far more surface area for things to go wrong:

Faulty tool calls: invoking the wrong tool, passing invalid parameters, or misinterpreting tool outputs.

Infinite loops: agents get stuck repeatedly re-planning or retrying the same step without convergence.

False task completion: claiming a step or goal is complete when no real progress or side effect occurred.

Instruction drift: gradually deviating from the original user intent as intermediate reasoning accumulates.
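Some of these failure modes can be caught with simple checks on an agent’s trace before you reach for an LLM judge. Below is a minimal sketch of an infinite-loop heuristic, assuming the trace is a list of tool calls (tool name plus hashable arguments). The `ToolCall` type, the `make_call` and `detect_loop` helpers, and the `max_repeats` threshold are hypothetical illustrations, not part of any library.

```python
from collections import Counter
from typing import Any, NamedTuple


class ToolCall(NamedTuple):
    """One tool invocation recorded in an agent trace (hypothetical shape)."""
    name: str
    args: tuple[tuple[str, Any], ...]  # sorted (key, value) pairs; values must be hashable


def make_call(name: str, **kwargs: Any) -> ToolCall:
    # Sort arguments so {"a": 1, "b": 2} and {"b": 2, "a": 1} compare equal.
    return ToolCall(name, tuple(sorted(kwargs.items())))


def detect_loop(trace: list[ToolCall], max_repeats: int = 3) -> bool:
    """Flag a likely infinite loop: the exact same call (tool + arguments)
    appears more than `max_repeats` times in a single trace."""
    counts = Counter(trace)
    return any(n > max_repeats for n in counts.values())


stuck = [make_call("web_search", query="events in Paris")] * 5
print(detect_loop(stuck))  # True
```

Counting exact repeats is deliberately crude: it misses loops where the agent varies its arguments slightly, which is where an LLM-as-a-judge metric over the whole trace becomes useful.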

That’s why evaluating AI agents is so difficult: there is an effectively unlimited number of possible agentic AI systems, each with more than a dozen ways to fail.

But here comes the good news: AI agent evaluation doesn’t have to be so hard, especially when you have a solid agentic testing strategy in place.

In this article, I’ll go through:

What AI agents are, and the most common types of agentic failures

How to evaluate any type of agentic AI system, including top metrics, setting up LLM tracing, and testing methods for single and multi-turn use cases

Top misconceptions in evaluating agentic systems, and how to avoid common pitfalls

How to automate everything in under 30 lines of code via

Ready to spend the next 15 minutes becoming an expert in AI agent evaluation? Let’s begin.

TL;DR

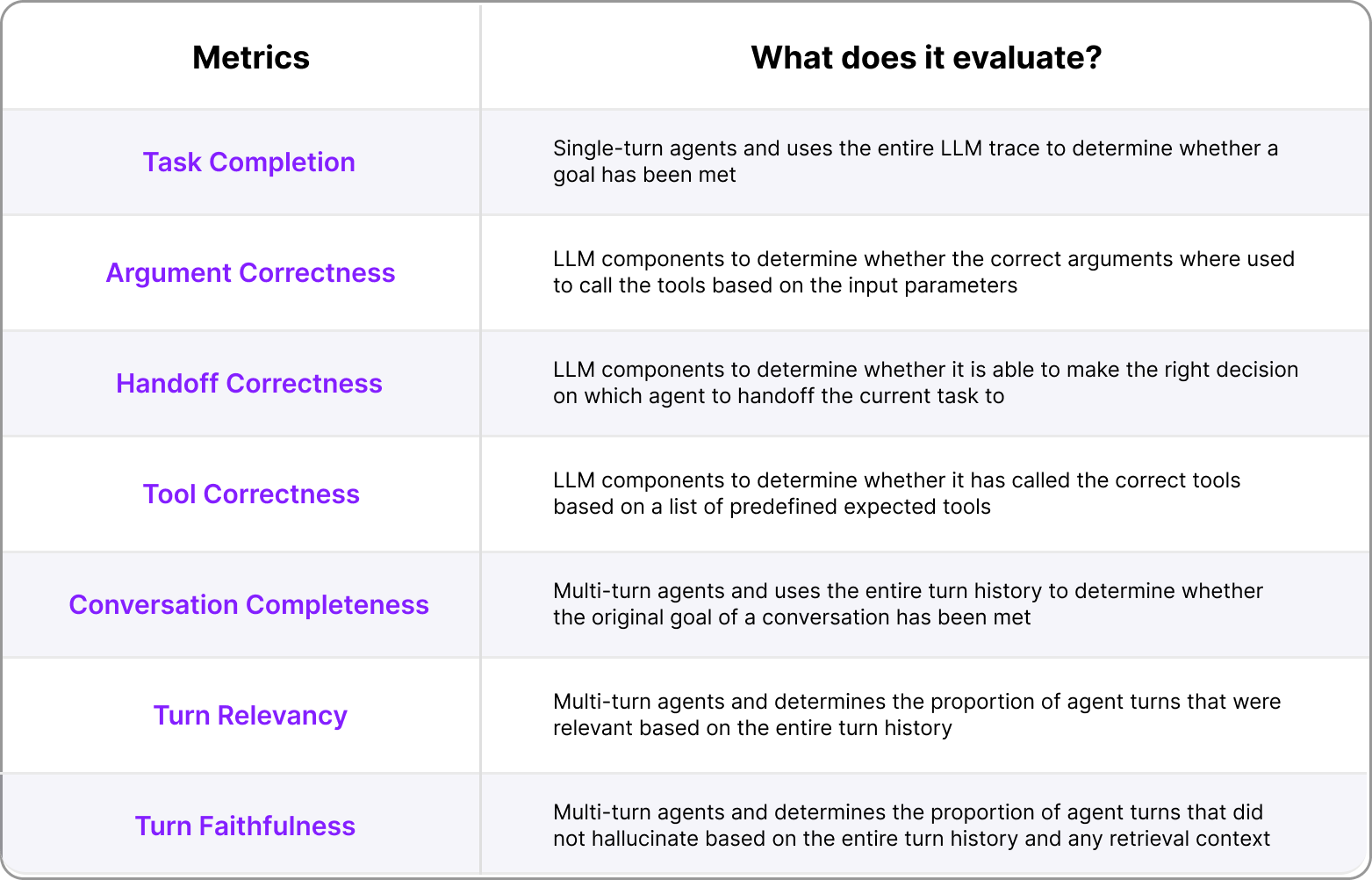

AI agents can be classified as single-turn or multi-turn agents, each requiring different metrics for evaluation

The main end-to-end metric for both single and multi-turn agents centers on task completion: whether an AI agent genuinely completes a task using the tools it has access to

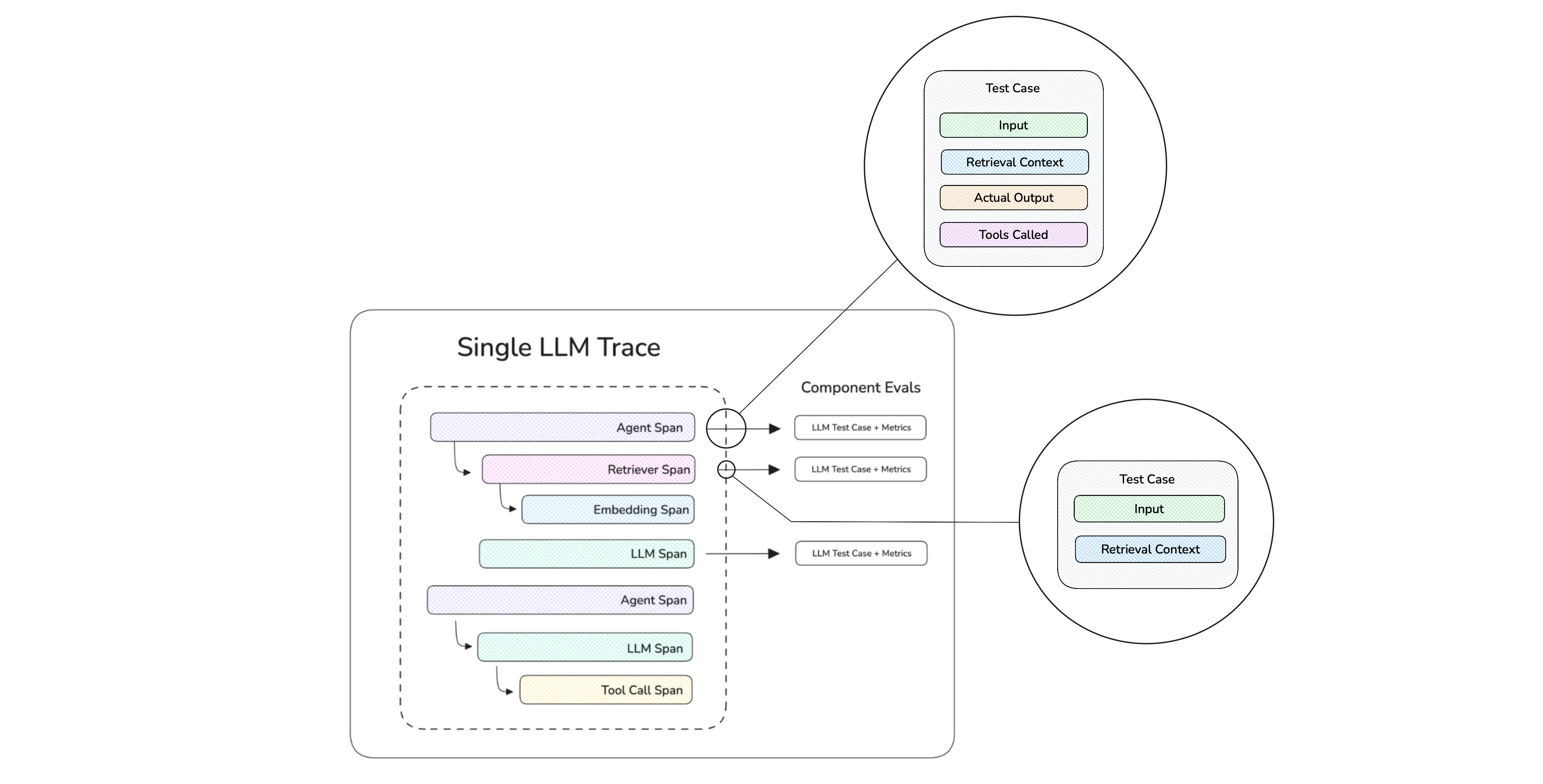

AI agents can also be evaluated at the component level, which covers aspects such as whether the LLM calls the correct tools with the correct parameters, and whether handoffs to other agents were correct

Agent evaluation mostly involves running evals in an ad-hoc manner, but for more sophisticated development workflows you can curate a golden dataset to benchmark different agents

Common metrics include task completion, argument correctness, turn relevancy, and more

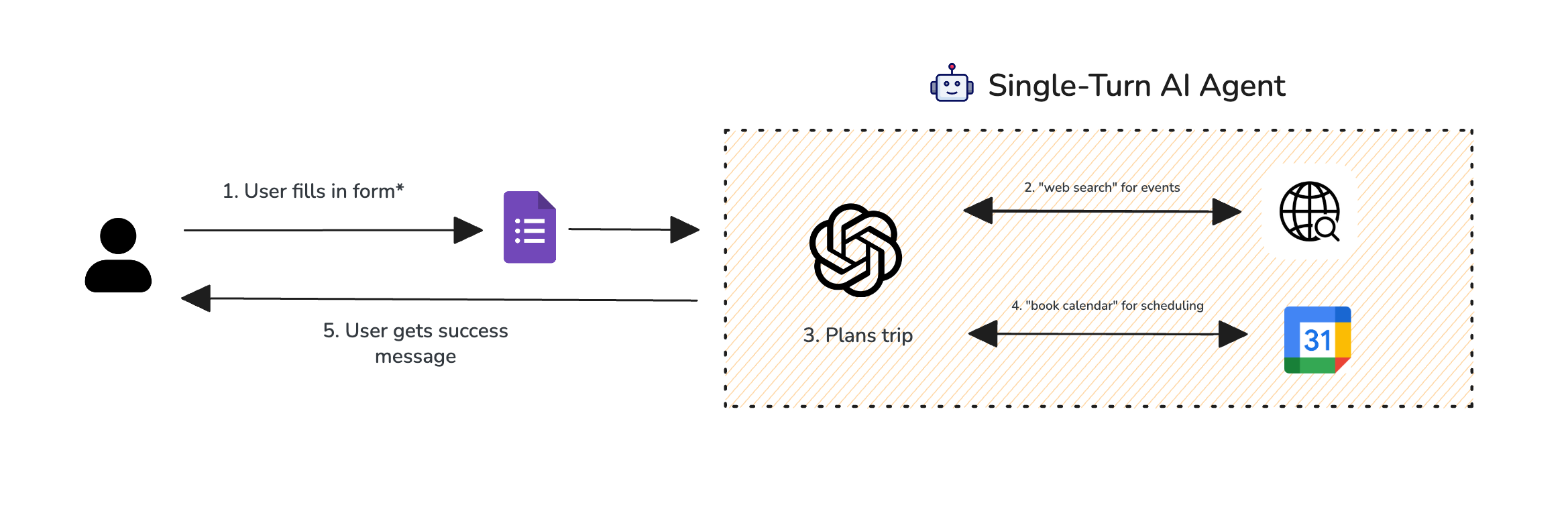

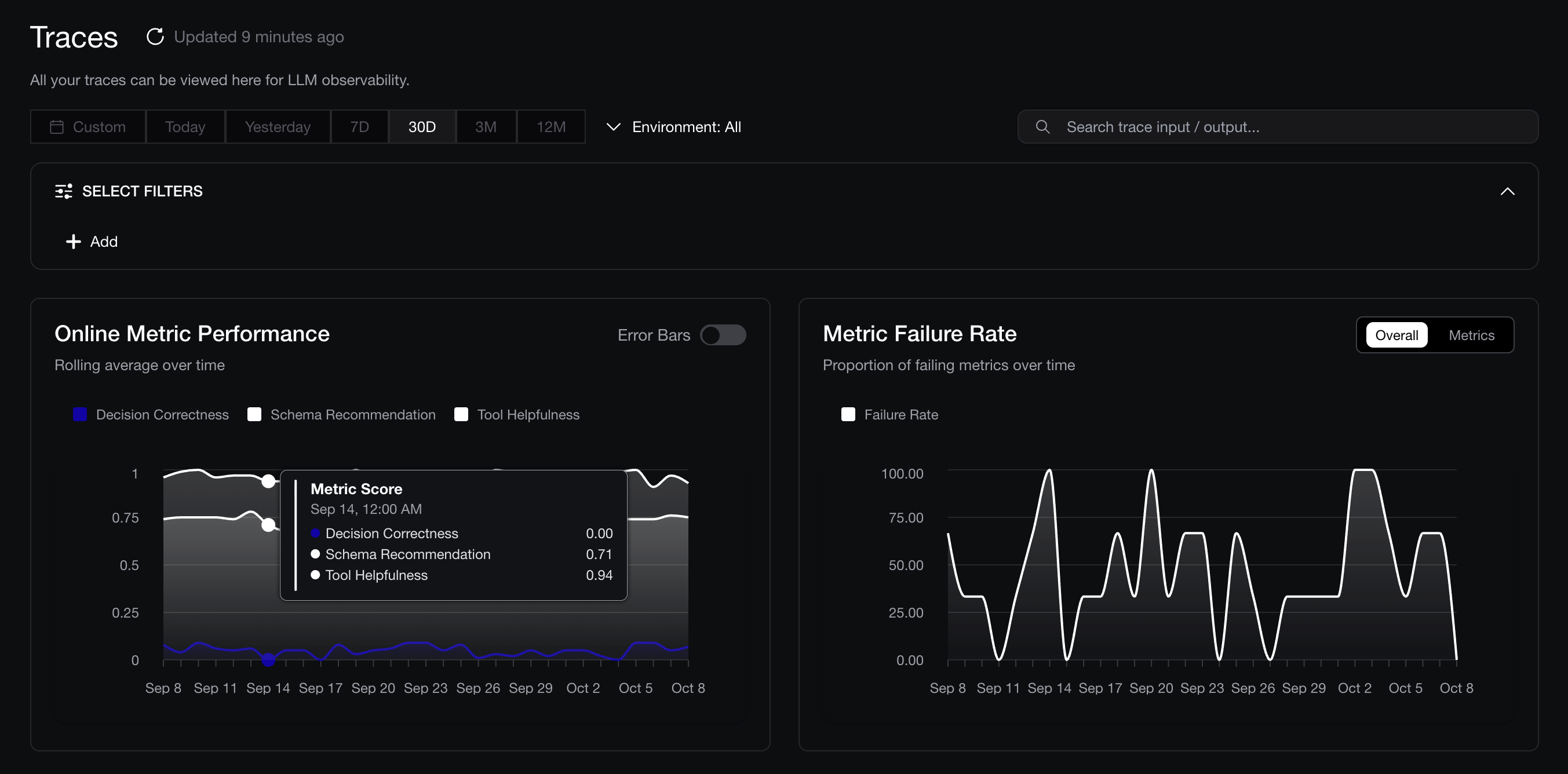

DeepEval (open-source), together with Confident AI, lets you run evals on agents efficiently without the infrastructure overhead of implementing metrics, setting up AI observability, and more.

What are AI Agents and AI Agent Evaluation?

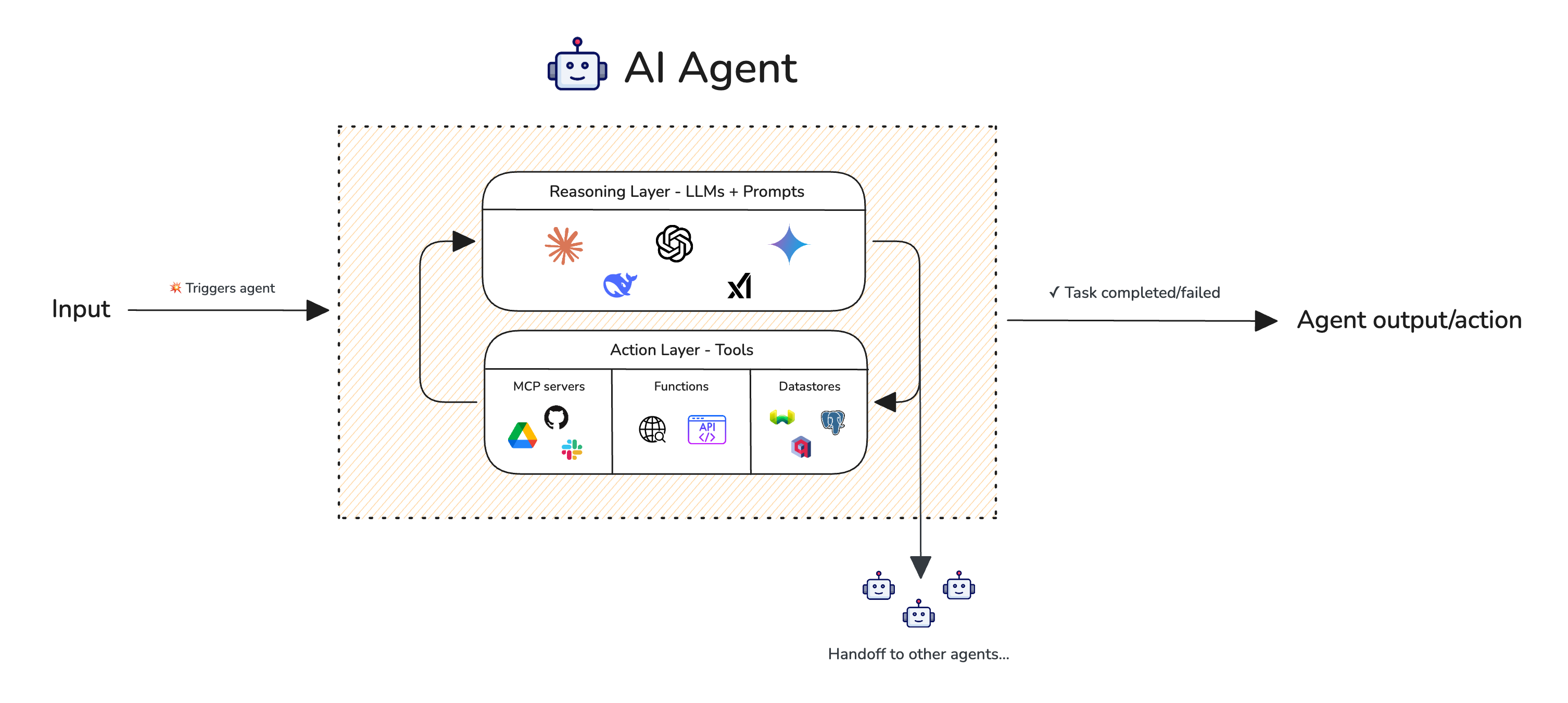

AI agents are large language model (LLM) systems that use external tools (such as APIs) to perform actions in order to complete the task at hand. Agents work by using an LLM’s reasoning ability to decide which tools or actions the agentic system should take, and repeating this process until a certain goal is met.
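The decide-act-repeat loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular agent framework implements it: `choose_action` stands in for the LLM (here a hard-coded stub), and the tool registry, the `run_agent` name, and the `max_steps` cap are all hypothetical. The step cap is one common guard against the infinite-loop failure mode.

```python
from typing import Callable, Optional

# A tool takes a string input and returns a string observation.
Tool = Callable[[str], str]
# The "LLM" sees the goal plus the history so far and returns (tool_name, tool_input),
# or None once it believes the goal is met.
Policy = Callable[[str, list[tuple[str, str, str]]], Optional[tuple[str, str]]]


def run_agent(goal: str, tools: dict[str, Tool], choose_action: Policy,
              max_steps: int = 10) -> list[tuple[str, str, str]]:
    """Let the policy pick a tool, call it, and record the result, until the
    policy signals completion or max_steps is reached."""
    history: list[tuple[str, str, str]] = []  # (tool_name, tool_input, observation)
    for _ in range(max_steps):
        action = choose_action(goal, history)
        if action is None:  # the policy decided the goal is met
            break
        name, tool_input = action
        observation = tools[name](tool_input)
        history.append((name, tool_input, observation))
    return history


# Hypothetical stub standing in for an LLM: search once, then stop.
def stub_policy(goal, history):
    return ("web_search", goal) if not history else None


tools = {"web_search": lambda q: f"results for {q!r}"}
print(run_agent("events in Lisbon", tools, stub_policy))
# [('web_search', 'events in Lisbon', "results for 'events in Lisbon'")]
```

The returned `history` is exactly the kind of trace that evaluation works on: each entry records which tool was chosen, with what input, and what came back.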

Take a trip planner agent, for example, which has access to a web search tool, a calendar tool, and an LLM to perform the reasoning. A simplistic algorithm for this agent might be:

Prompt the user to see where they’d like to go, then

Prompt the user to see how long their trip will be, then

Call “web search” to find the latest events in {location}, for the given {time_range}, then

Call “book calendar” to finalize the schedule in the user’s calendar
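The same four steps can be written as plain Python to make the tool boundaries explicit. Everything here is hypothetical: `web_search` and `book_calendar` are stubs rather than real APIs, the user’s answers are passed in as arguments instead of being prompted for, and every tool call is appended to a `trace` list so it can be inspected and evaluated afterwards.

```python
from datetime import date, timedelta
from typing import Any

# Every tool call is logged here as {"tool": ..., "args": {...}} for later evaluation.
trace: list[dict[str, Any]] = []


def web_search(location: str, start: date, end: date) -> list[str]:
    """Stub search tool; a real one would call a search API."""
    trace.append({"tool": "web_search",
                  "args": {"location": location, "start": start.isoformat(), "end": end.isoformat()}})
    return [f"Food festival in {location}", f"Jazz night in {location}"]


def book_calendar(title: str, start: date, end: date) -> bool:
    """Stub calendar tool; a real one would call a calendar API."""
    trace.append({"tool": "book_calendar",
                  "args": {"title": title, "start": start.isoformat(), "end": end.isoformat()}})
    return True


def plan_trip(location: str, start: date, num_days: int) -> bool:
    # Steps 1-2: in the multi-turn version, location and dates come from asking the user.
    end = start + timedelta(days=num_days)  # assumption: the end date is exclusive
    # Step 3: search for events in {location} for the given {time_range}.
    events = web_search(location, start, end)
    # Step 4: finalize the schedule in the user's calendar.
    return book_calendar(f"Trip to {location}: {', '.join(events)}", start, end)


plan_trip("Tokyo", date(2025, 4, 1), num_days=5)
print([call["tool"] for call in trace])  # ['web_search', 'book_calendar']
```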

Here’s a rhetorical question: can you guess where things could go wrong above?

There are a few places where this agentic system can fail miserably:

The LLM can pass the wrong parameters (location/time range) into the “web search” tool

The “web search” tool might itself be incorrectly implemented and return faulty results

The “book calendar” tool might be called with incorrect input parameters, particularly around the format of the start and end date parameters

The AI agent might loop infinitely while asking the user for information

The AI agent might claim to have searched the web or scheduled something in the user’s calendar even when it hasn’t
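A couple of these failure points can be checked deterministically from a recorded trace. The sketch below assumes a hypothetical trace format (a list of dicts with `tool` and `args` keys) and covers two cases: `book_calendar` dates that `date.fromisoformat` cannot parse, and the agent claiming to have used a tool that never appears in the trace. The list of claimed tools is hand-written here; extracting it from the agent’s final message would in practice need a parser or an LLM judge.

```python
from datetime import date
from typing import Any


def invalid_date_args(trace: list[dict[str, Any]]) -> list[str]:
    """Return book_calendar start/end arguments that are missing or not ISO 8601 dates."""
    bad = []
    for call in trace:
        if call["tool"] != "book_calendar":
            continue
        for key in ("start", "end"):
            value = call["args"].get(key)
            try:
                date.fromisoformat(value)
            except (TypeError, ValueError):  # TypeError for None, ValueError for bad strings
                bad.append(f"{key}={value!r}")
    return bad


def unsupported_claims(claimed_tools: list[str], trace: list[dict[str, Any]]) -> list[str]:
    """Return tools the agent says it used but that never appear in the trace."""
    called = {call["tool"] for call in trace}
    return [tool for tool in claimed_tools if tool not in called]


trace = [
    {"tool": "web_search", "args": {"location": "Rome", "time_range": "June"}},
    {"tool": "book_calendar", "args": {"start": "2025-06-01", "end": "06/07/2025"}},
]
print(invalid_date_args(trace))                                 # ["end='06/07/2025'"]
print(unsupported_claims(["web_search", "send_email"], trace))  # ['send_email']
```

Checks like these are cheap and exact, but they only cover failures you can specify in advance; wrong-but-valid parameters (for example, the wrong city) still need a judgment-based metric.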

The process of identifying where your AI agent can fail and testing for those failures is known as AI agent evaluation. More formally, AI agent evaluation is the process of using LLM metrics to evaluate AI systems that leverage an LLM’s reasoning ability to call the correct tools in order to complete certain tasks.

In the following sections, we will go over the common failure modes within an agentic system. But first, we have to understand the difference between single-turn and multi-turn agentic systems.

Single vs multi-turn agents

The only difference between a single-turn AI agent and a multi-turn one is the number of end-to-end interactions between the agent and the user before a task is complete. Let me explain.

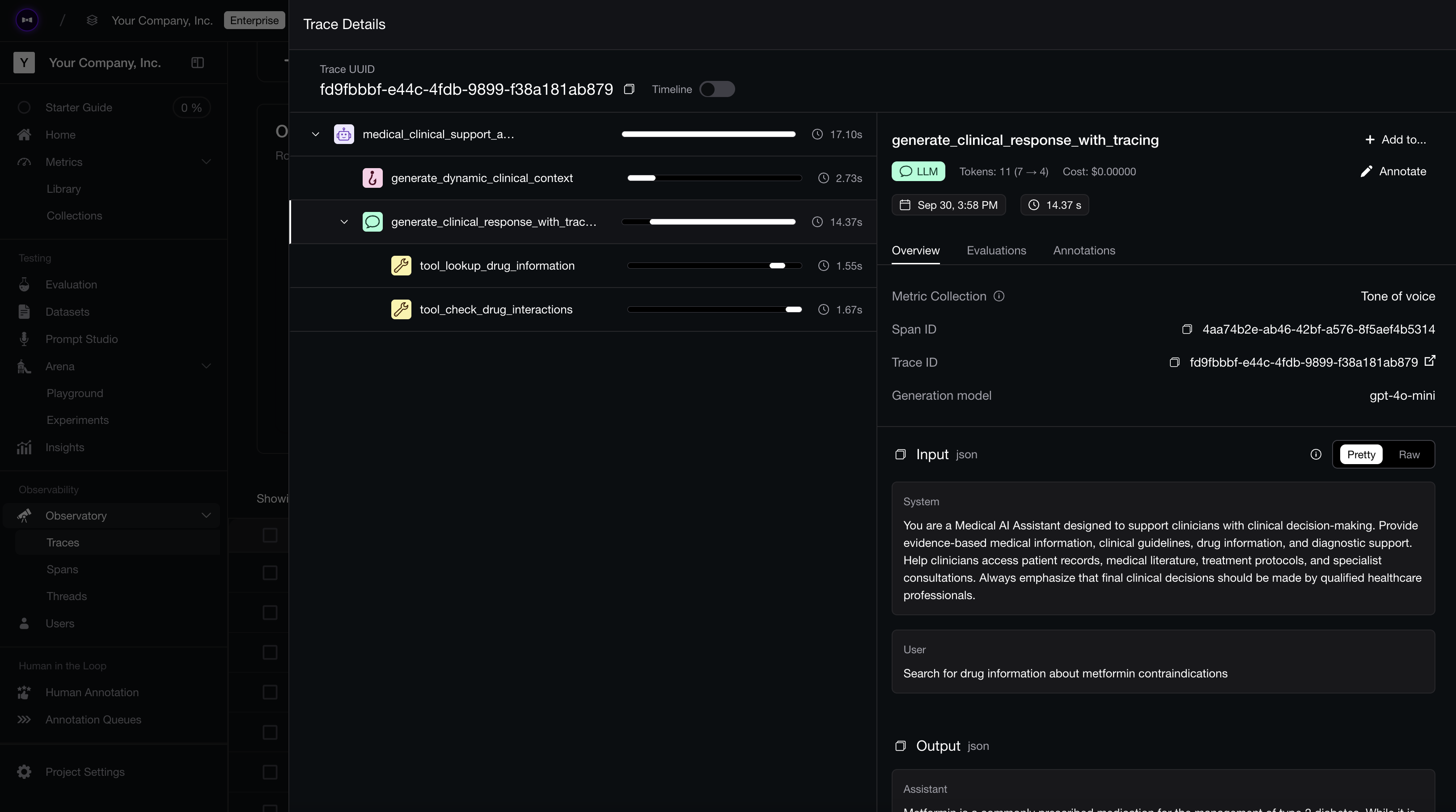

The trip planner above is a multi-turn agent because it has to interact with the user twice before it can plan a trip (once to ask for the destination, and once to ask for the vacation dates). Had this interaction happened through a Google Form instead, where the user submits all the required info at once, the agent would be single-turn (example diagram in a later section).

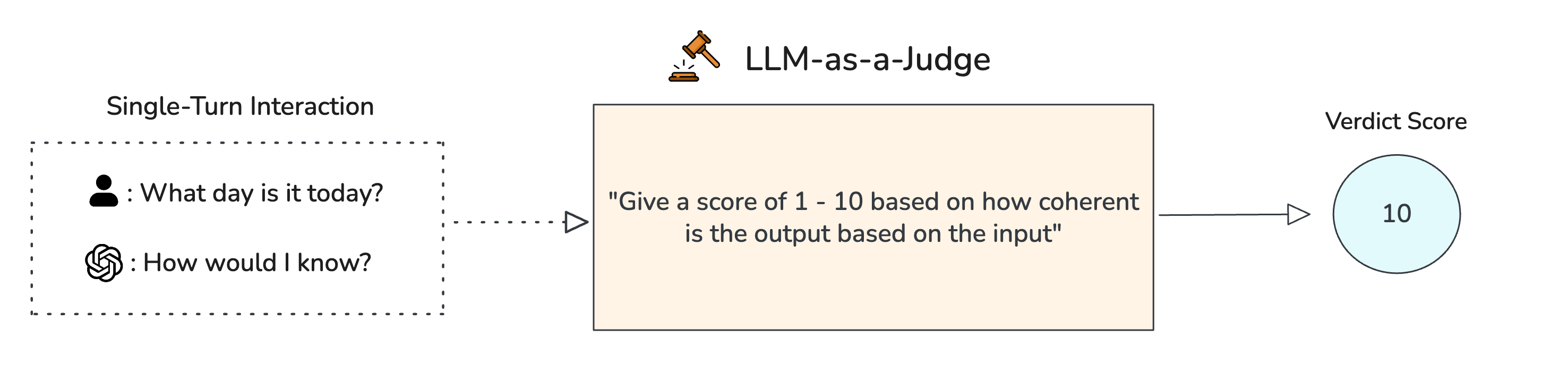

As we’ll learn later, when evaluating single-turn agents we first have to identify which components of the agentic system are worth evaluating, and then place LLM-as-a-judge metrics on those components.
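To make “placing metrics on components” concrete, here is a minimal sketch that scores one component, the tool-calling step, against expected values in a test case. It uses exact comparisons as a cheap stand-in for the LLM-as-a-judge metrics described above. The `ComponentTestCase` type and both scoring functions are hypothetical and are not DeepEval’s API; keying calls by tool name also assumes each tool is called at most once.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class ComponentTestCase:
    """Hypothetical test case for a single tool-calling component."""
    input: str
    called: dict[str, dict[str, Any]]    # tool name -> arguments actually passed
    expected: dict[str, dict[str, Any]]  # tool name -> arguments we expected


def tool_correctness(case: ComponentTestCase) -> float:
    """Fraction of expected tools that were actually called."""
    if not case.expected:
        return 1.0
    hits = sum(1 for name in case.expected if name in case.called)
    return hits / len(case.expected)


def argument_correctness(case: ComponentTestCase) -> float:
    """Fraction of expected tools called with exactly the expected arguments."""
    if not case.expected:
        return 1.0
    hits = sum(1 for name, args in case.expected.items() if case.called.get(name) == args)
    return hits / len(case.expected)


case = ComponentTestCase(
    input="Plan a 3-day trip to Oslo starting 2025-07-10",
    called={"web_search": {"location": "Oslo", "time_range": "2025-07-10/2025-07-12"},
            "book_calendar": {"start": "2025-07-10", "end": "2025-07-13"}},
    expected={"web_search": {"location": "Oslo", "time_range": "2025-07-10/2025-07-12"},
              "book_calendar": {"start": "2025-07-10", "end": "2025-07-12"}},
)
print(tool_correctness(case), argument_correctness(case))  # 1.0 0.5
```

Here the right tools were called, but the calendar booking ran one day too long, so tool selection scores perfectly while argument correctness catches the error.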

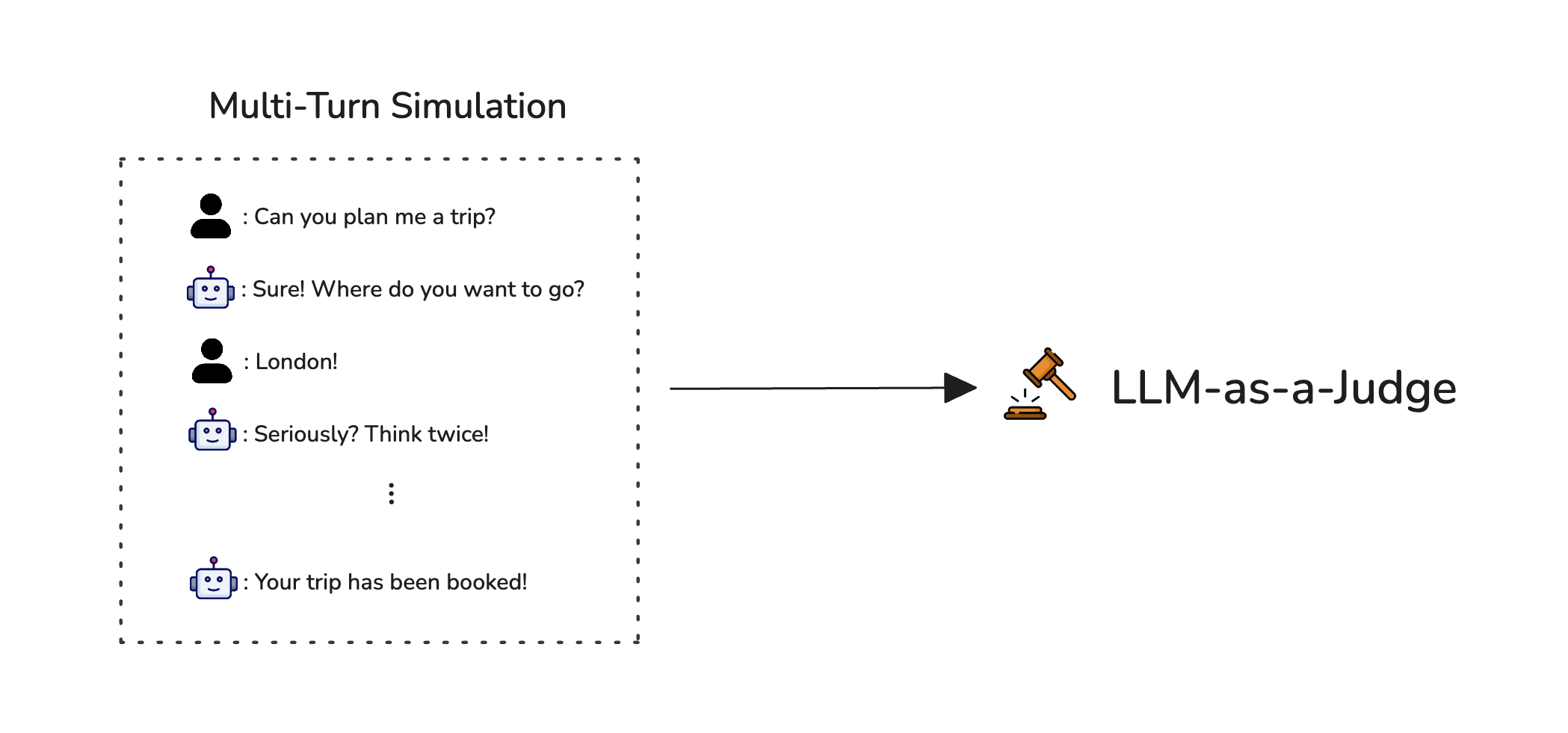

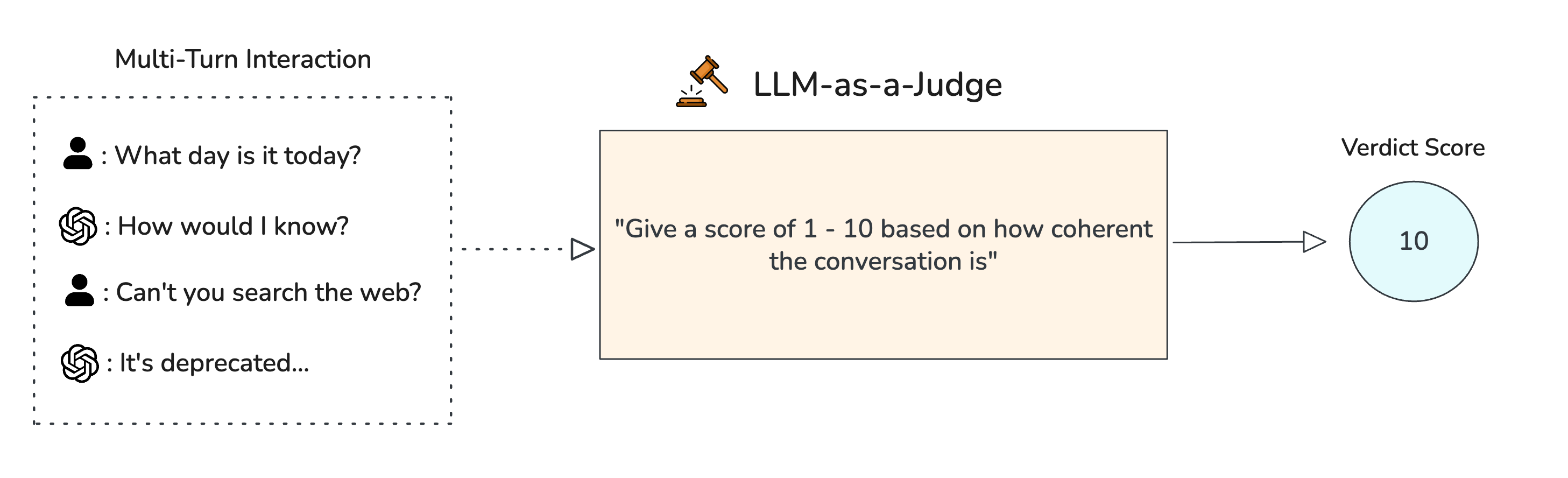

When evaluating multi-turn agents, we simply use LLM-as-a-judge metrics that evaluate task completion based on the entire turn history, while evaluating the individual tool calls within each turn as usual.
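A multi-turn task-completion metric needs the whole conversation in front of the judge, not just the last reply. The sketch below shows one way to do that: it flattens the turn history into a single judge prompt and parses a YES/NO verdict. The prompt wording, the verdict format, and the `judge` callable (any function that sends a prompt to an LLM and returns its text) are assumptions. A stub judge is used so the example runs offline, and per-turn tool-call checks are not shown.

```python
from typing import Callable

Turn = tuple[str, str]  # (role, content), e.g. ("user", "...") or ("assistant", "...")


def build_task_completion_prompt(task: str, turns: list[Turn]) -> str:
    """Render the entire turn history into one prompt for an LLM judge."""
    transcript = "\n".join(f"{role}: {content}" for role, content in turns)
    return (
        f"Task: {task}\n\nConversation:\n{transcript}\n\n"
        "Judging only from the conversation and any tool results it shows, "
        "did the assistant complete the task? Answer YES or NO on the first line."
    )


def task_completed(task: str, turns: list[Turn], judge: Callable[[str], str]) -> bool:
    """Ask the judge about the full history and parse its first-line verdict."""
    reply = judge(build_task_completion_prompt(task, turns))
    lines = reply.strip().splitlines()
    return bool(lines) and lines[0].strip().upper().startswith("YES")


turns = [
    ("user", "Plan me a trip."),
    ("assistant", "Where would you like to go?"),
    ("user", "Kyoto."),
    ("assistant", "For how long?"),
    ("user", "Four days from 2025-10-02."),
    ("assistant", "Done! Events found and added to your calendar."),
]
# Hypothetical stub judge: a real one would call an LLM with the prompt.
stub_judge = lambda prompt: "NO\nNo search or calendar tool result appears in the conversation."
print(task_completed("Plan a trip and book it", turns, stub_judge))  # False
```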

You might be wondering: what if the agentic system communicates with another swarm of agents? In this case, the agents aren’t necessarily interacting with the “user” but with other agents, so does this still count as a multi-turn use case?

The answer is no: This isn’t a multi-turn agent.

Here’s the rule of thumb: only count the number of end-to-end interactions it takes for a task to complete. I stress end-to-end because it automatically excludes internal “interactions” such as calling on other agents or swarms of agents. Agents calling on other agents internally are instead known as component-level interactions.
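The rule of thumb translates directly into code: count only the messages the end user sends, and ignore agent-to-agent traffic. This sketch assumes a hypothetical trace of events with `source` and `target` fields, where `"user"` marks the human; the `Event` shape and the `count_user_turns` and `is_multi_turn` helpers are illustrative only.

```python
from typing import NamedTuple


class Event(NamedTuple):
    source: str   # who sent the message, e.g. "user", "planner", "search_agent"
    target: str   # who received it
    content: str


def count_user_turns(events: list[Event]) -> int:
    """Count end-to-end interactions: messages sent by the end user.
    Agent-to-agent messages are component-level and are not counted."""
    return sum(1 for e in events if e.source == "user")


def is_multi_turn(events: list[Event]) -> bool:
    return count_user_turns(events) > 1


# One form submission, fanned out to a swarm of sub-agents internally.
form_trace = [
    Event("user", "planner", "Destination: Lima; dates: 2025-05-01 to 2025-05-04"),
    Event("planner", "search_agent", "Find events in Lima"),
    Event("search_agent", "planner", "3 events found"),
    Event("planner", "calendar_agent", "Book 2025-05-01 to 2025-05-04"),
    Event("planner", "user", "Your trip is booked."),
]
print(is_multi_turn(form_trace))  # False
```

However many sub-agents the planner calls, the trace above stays single-turn because the user only spoke once.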

With this in mind, let’s talk about where and how AI agents can fail at the end-to-end and component levels.

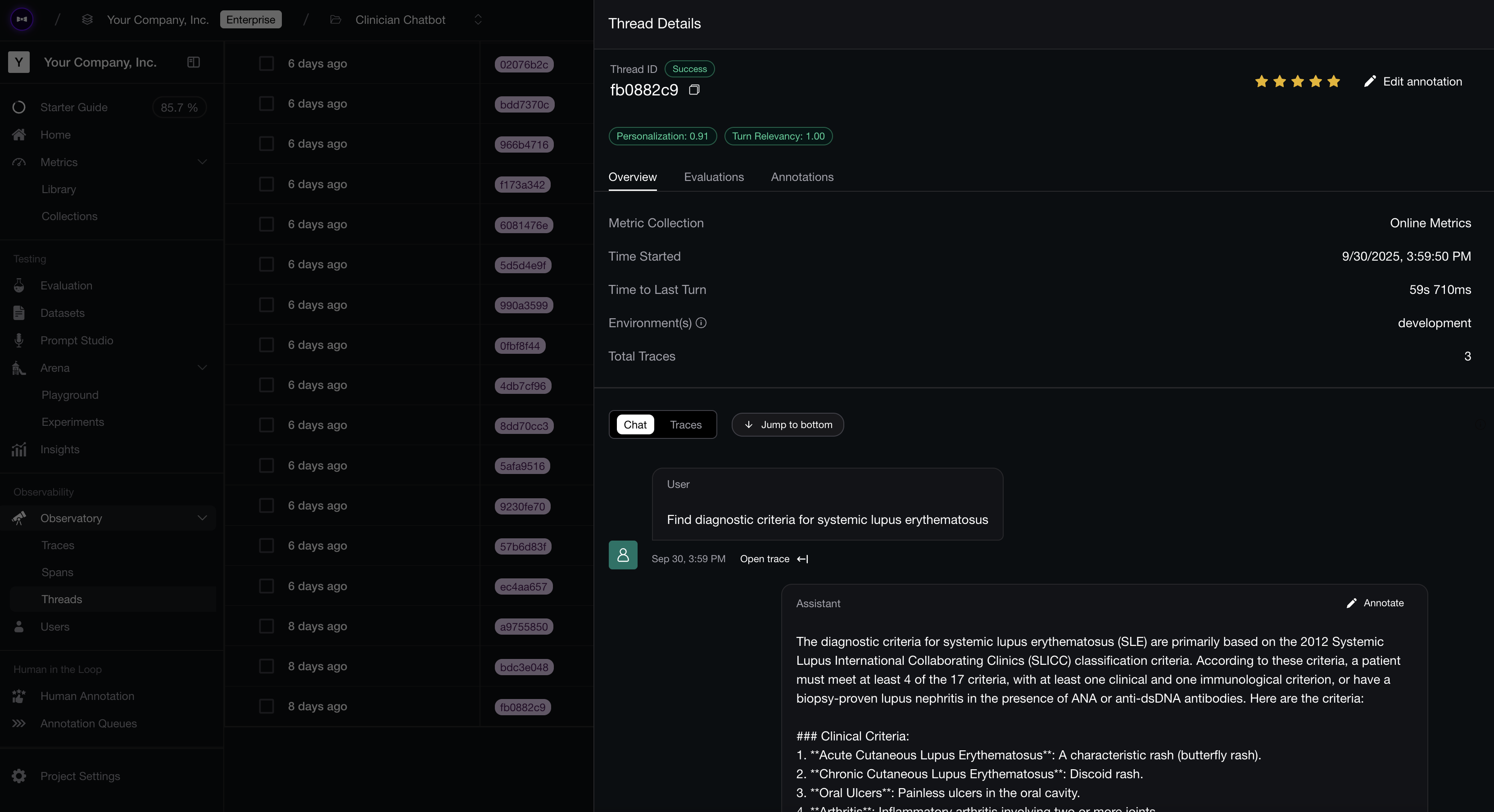
