When talking to a user of DeepEval last week, here’s what I heard:

“We [a team of 7 engineers] just sit in a room for 30 minutes in silence to prompt for half an hour while entering the results into a spreadsheet before giving the thumbs up for deployment”

For many LLM engineering teams, pre-deployment checks still involve eyeballing outputs and “vibe checks.” A big reason for this is that Large Language Model (LLM) applications are unpredictable, which makes testing them a significant challenge.

While it’s essential to run quantitative evaluations through unit tests to catch regressions in CI/CD pipelines before deployment, the subjective and variable nature of LLM outputs makes it difficult to transfer principles from traditional software testing, where a test simply asserts that an output equals an expected value.

But what if there were a way to address this unpredictability to enable unit-testing for LLMs?

This is exactly why we need to discuss LLM evaluators, which tackle this challenge by using LLMs to evaluate other LLMs. In this article, we’ll cover:

What LLM evaluators are, why they are important, and how to choose them

Common LLM evaluators for different use cases and systems (RAG, agents, etc.)

How to tailor evaluators for your specific use case

Practical code implementations for these evaluators in DeepEval (github⭐), including in CI/CD testing environments

After reading this article, you’ll know exactly how to choose, implement, and optimize LLM evaluators for your LLM testing workflows.

Let’s dive right in.

TL;DR

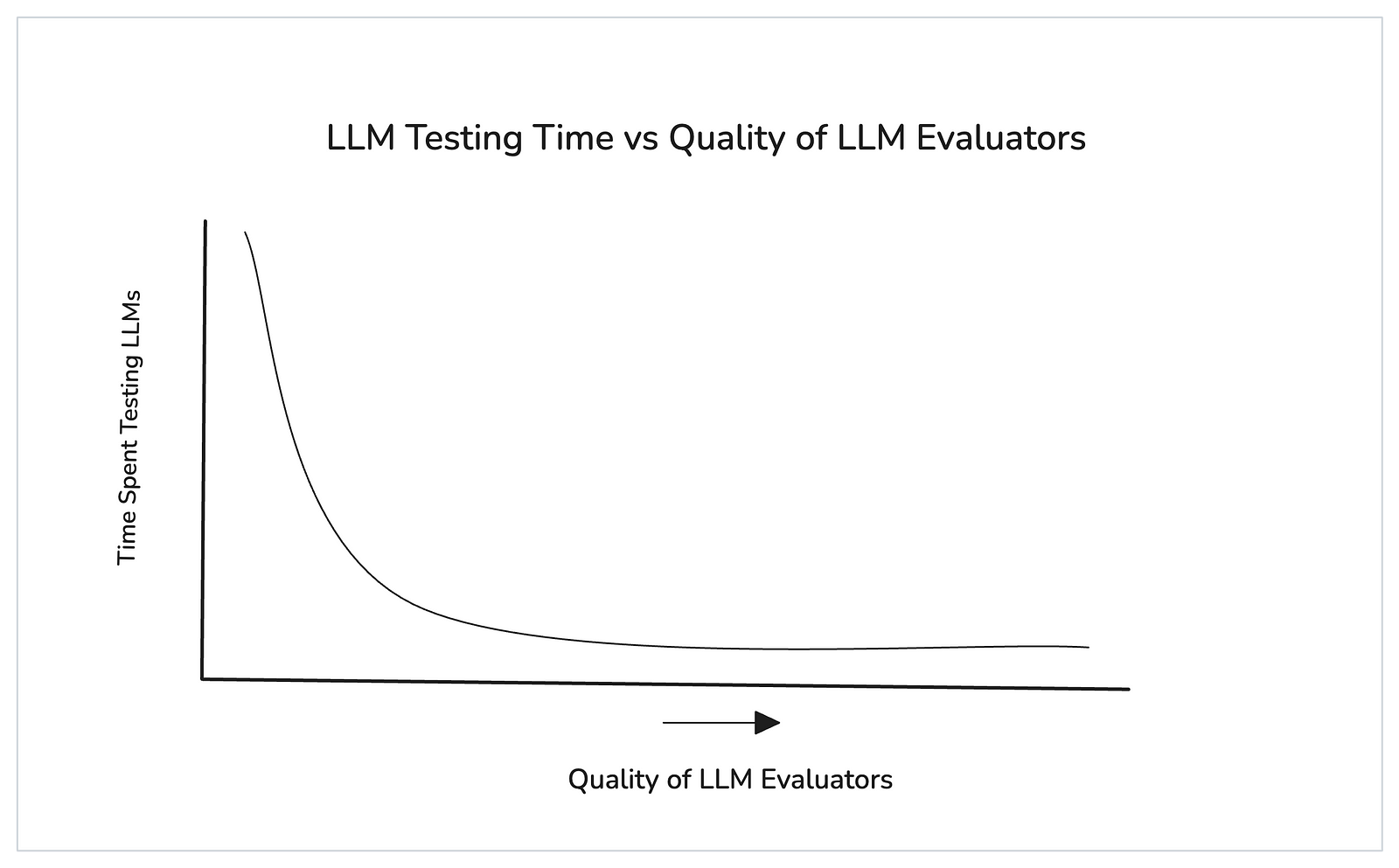

LLM evaluators (a.k.a. LLM-as-a-judge) are the most effective way to score LLM outputs for unit-testing LLM apps, outperforming BLEU and ROUGE in accuracy and human reviewers in scalability.

They can be used to score agents, RAG pipelines, and chatbots, and typically output a score between 0 and 1.

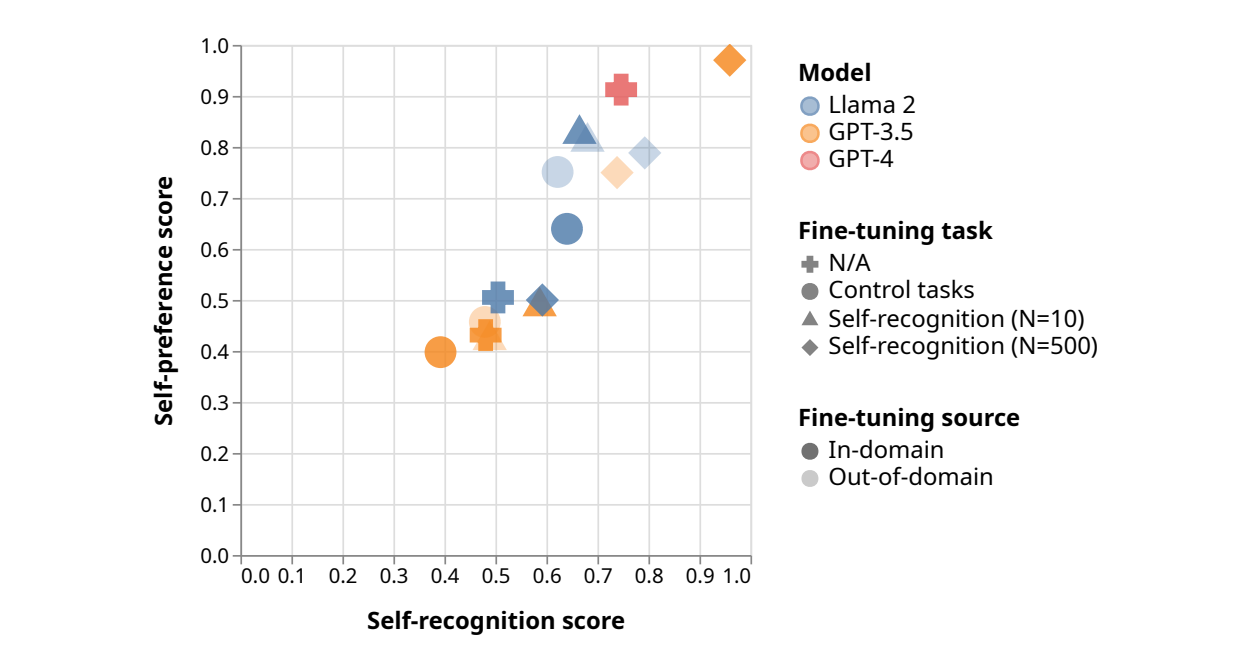

LLM evaluators can suffer from bias and unreliability, but these issues can be mitigated with chain-of-thought (CoT) prompting, in-context learning, and, more rarely, fine-tuning the judge model.

The most effective, state-of-the-art (SOTA) LLM evaluators include G-Eval, DAG, and QAG; which one to use depends on the criteria you want to evaluate.

DeepEval (100% open source ⭐ https://github.com/confident-ai/deepeval) allows anyone to implement LLM evaluators that can be customized to any unique use case.

What are LLM Evaluators?

LLM evaluators are LLM-powered scorers that help quantify how well your LLM system performs on criteria such as relevancy, answer correctness, faithfulness, and more. Unlike traditional statistical metrics such as recall, precision, or F1, LLM evaluators use LLM-as-a-judge: the inputs and outputs of your LLM system are fed into a prompt template, and a judge LLM scores a single interaction based on your chosen evaluation criteria. For example, a judge prompt for factual correctness might look like this:

```python
# Judge prompt for factual correctness. {input}, {context}, and {output} are
# filled in for each test case before the prompt is sent to the judge LLM.
evaluation_prompt = """You are an expert evaluator. Your task is to rate how factually correct the following response is, based on the provided input and optional context. Rate on a scale from 1 to 5, where:
1 = Completely incorrect
2 = Mostly incorrect
3 = Somewhat correct but with noticeable issues
4 = Mostly correct with minor issues
5 = Fully correct and accurate

Input:
{input}

Context:
{context}

LLM Response:
{output}

Please return only the numeric score (1 to 5) and no explanation.

Score:"""
```

Evaluators are typically used as part of metrics that test your LLM app in the form of unit tests. Many of these unit tests together form a benchmark for your LLM application. This benchmark allows you to run regression tests by comparing each unit test side-by-side across different versions of your system.
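To show how a template like the one above becomes a unit test, here is a minimal sketch under stated assumptions: `call_judge_llm` is a hypothetical placeholder for whatever LLM client you use (stubbed to always answer "4" so the code runs offline), the 1 to 5 score is linearly rescaled to the 0 to 1 range evaluators typically report, and the 0.5 pass threshold is an arbitrary illustrative choice. This is not DeepEval's implementation.

```python
import re

# Shortened version of the factual-correctness prompt (full rubric omitted for brevity).
EVALUATION_PROMPT = """You are an expert evaluator. Rate how factually correct the response is on a scale from 1 to 5.

Input:
{input}

Context:
{context}

LLM Response:
{output}

Please return only the numeric score (1 to 5) and no explanation.

Score:"""


def call_judge_llm(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM API call.

    Stubbed so the example runs offline: it always replies "4".
    """
    return "4"


def judge_correctness(user_input: str, output: str, context: str = "") -> float:
    """Score one interaction with the judge and rescale the 1-5 score to 0-1."""
    prompt = EVALUATION_PROMPT.format(input=user_input, context=context, output=output)
    reply = call_judge_llm(prompt)
    match = re.search(r"[1-5]", reply)  # first digit between 1 and 5 in the reply
    if match is None:
        raise ValueError(f"Judge returned no usable score: {reply!r}")
    return (int(match.group()) - 1) / 4  # 1 -> 0.0, 3 -> 0.5, 5 -> 1.0


def test_capital_of_france(threshold: float = 0.5) -> None:
    """One unit test: fails if the judged score falls below the threshold."""
    score = judge_correctness(
        user_input="What is the capital of France?",
        output="Paris is the capital of France.",
        context="France's capital city is Paris.",
    )
    assert score >= threshold, f"score {score:.2f} is below threshold {threshold}"


test_capital_of_france()  # passes: the stub judge's 4/5 rescales to 0.75
```

Asking for "only the numeric score" keeps parsing trivial; a regex that picks the first valid digit is a cheap guard against judges that add stray text anyway.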

There are two main types of LLM evaluators, which were first introduced in the “Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena” paper:

Single-output evaluation (referenceless or reference-based): A judge LLM is given a scoring rubric and asked to evaluate one output at a time. It considers factors such as the system input, retrieved context (e.g. in RAG pipelines), and optionally a reference answer, then assigns a score based on the criteria you define. If the judge is given a “labelled” reference output, the evaluation is reference-based; otherwise it is referenceless.

Pairwise comparison: The judge LLM is shown two different outputs generated from the same input and asked to choose which one is better. Like single-output evaluation, this also relies on clear criteria to define what “better” means — whether that’s accuracy, helpfulness, tone, or anything else.
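Here's a minimal sketch of pairwise comparison, assuming `judge` is any function that sends a prompt to an LLM and returns its text reply (a keyword-based stand-in is used below so it runs offline). It also adds a position-swap check, a common mitigation for a judge's tendency to favor whichever answer is shown first; that check is an addition of this sketch, not something the text above prescribes.

```python
from typing import Callable

PAIRWISE_PROMPT = """You are an expert evaluator. Given the input and two responses,
decide which response is more accurate and helpful.

Input:
{input}

Response A:
{a}

Response B:
{b}

Answer with a single letter: A or B."""


def ask_judge(judge: Callable[[str], str], user_input: str, a: str, b: str) -> str:
    """Ask the judge once and return its choice, "A" or "B"."""
    reply = judge(PAIRWISE_PROMPT.format(input=user_input, a=a, b=b)).strip().upper()
    if reply not in ("A", "B"):
        raise ValueError(f"Unexpected judge reply: {reply!r}")
    return reply


def pairwise_winner(
    judge: Callable[[str], str], user_input: str, output_1: str, output_2: str
) -> str:
    """Return "output_1", "output_2", or "tie".

    The judge sees both orderings; a win only counts if it survives the swap.
    """
    first = ask_judge(judge, user_input, output_1, output_2)   # output_1 shown as A
    second = ask_judge(judge, user_input, output_2, output_1)  # output_1 shown as B
    if first == "A" and second == "B":
        return "output_1"
    if first == "B" and second == "A":
        return "output_2"
    return "tie"  # the judge changed its mind when the order changed


# Offline stand-in for an LLM judge: prefers whichever response mentions "Paris".
def keyword_judge(prompt: str) -> str:
    response_a = prompt.split("Response A:")[1].split("Response B:")[0]
    return "A" if "Paris" in response_a else "B"


print(pairwise_winner(keyword_judge, "What is the capital of France?", "Lyon.", "Paris."))
# output_2
```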

Although pairwise comparison is possible, the trend we’re seeing at DeepEval is that most teams primarily use single-output evaluation, then compare scores between test runs to measure improvements or regressions.
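Comparing single-output scores across test runs can be as simple as diffing two score tables. The sketch below assumes each run is stored as a mapping from a test case ID to its 0 to 1 score; the IDs, scores, and 0.05 tolerance are made-up illustrative values.

```python
from __future__ import annotations


def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, tuple[float, float]]:
    """Return test cases whose score dropped by more than `tolerance`.

    Both runs map a test case ID to its 0-1 evaluator score. Test cases that
    appear in only one run are skipped.
    """
    regressions = {}
    for case_id in baseline.keys() & candidate.keys():
        before, after = baseline[case_id], candidate[case_id]
        if before - after > tolerance:
            regressions[case_id] = (before, after)
    return regressions


# Hypothetical scores from two versions of the same LLM app.
baseline_run = {"refund_policy": 0.9, "shipping_times": 0.8, "greeting": 1.0}
candidate_run = {"refund_policy": 0.6, "shipping_times": 0.82, "greeting": 1.0}
print(find_regressions(baseline_run, candidate_run))
# {'refund_policy': (0.9, 0.6)}
```

A small tolerance keeps run-to-run noise in the judge's scores from being flagged as a regression.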

Here are the most common metrics powered by LLM evaluators that you could use to capture both subjective and objective evaluation criteria:

Correctness: Typically a reference-based metric that compares the correctness of an LLM output against the expected output (and, in fact, the most common criterion defined with G-Eval).

Answer Relevancy: Can be either referenceless or reference-based; it measures how relevant the LLM output is to the input.

Faithfulness: A referenceless metric used in RAG systems to assess whether the LLM output contains hallucinations when compared to the retrieved text chunks.

Task Completion: A referenceless, agentic metric that evaluates how well the LLM completed the task described in the given input.

Summarization: Can be either referenceless or reference-based; it evaluates how effectively the LLM summarizes the input text.
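The referenceless vs. reference-based distinction largely comes down to which fields a test case must contain before a judge can score it. Here's a hypothetical sketch of that mapping for the metrics above; the `EvalCase` class, its field names, and the minimal field sets are illustrative assumptions, not a specific library's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EvalCase:
    """Hypothetical container for one interaction with your LLM app."""
    input: str
    actual_output: str
    expected_output: Optional[str] = None  # labelled reference answer
    retrieval_context: list[str] = field(default_factory=list)  # RAG chunks


# Minimal fields each metric needs before a judge can score it.
REQUIRED_FIELDS = {
    "correctness": ["input", "actual_output", "expected_output"],  # reference-based
    "answer_relevancy": ["input", "actual_output"],
    "faithfulness": ["actual_output", "retrieval_context"],
    "task_completion": ["input", "actual_output"],
    "summarization": ["input", "actual_output"],
}


def applicable_metrics(case: EvalCase) -> list[str]:
    """Return the metrics whose required fields are all populated (non-empty)."""
    return [
        name
        for name, fields in REQUIRED_FIELDS.items()
        if all(getattr(case, f) for f in fields)
    ]


case = EvalCase(input="Who wrote Hamlet?", actual_output="Shakespeare wrote Hamlet.")
print(applicable_metrics(case))
# ['answer_relevancy', 'task_completion', 'summarization']
```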

These metrics use the following LLM evaluators under the hood:

G-Eval — A framework that uses LLMs with CoT to evaluate LLMs on any criteria of your choice.

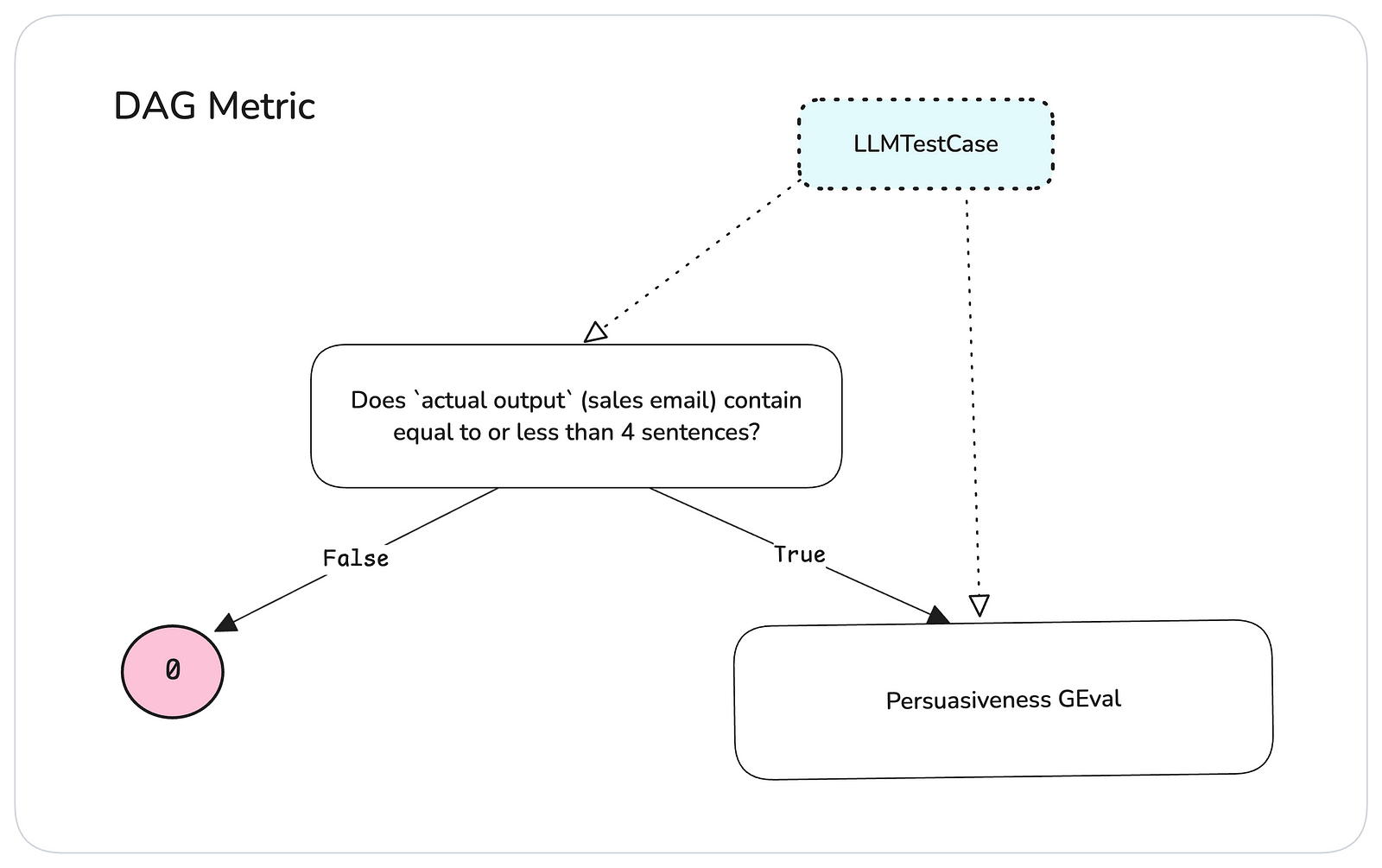

DAG (deep acyclic graph) — A framework that uses LLM powered decision trees to evaluate LLMs on any criteria of your choice.

QAG (question-answer-generation) — A framework that uses LLMs to first generate a series of close-ended questions before using binary yes/no answers to these questions as the final score.

Prometheus — A purely model based evaluator that relies on a fine-tuned Llama2 model as an evaluator (Prometheus) and an evaluation prompt. Prometheus is strictly reference-based.
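As an illustration of QAG, here's a minimal sketch that generates closed-ended questions from a reference text and scores an output by the fraction answered "yes". The `generate_questions` and `answer_yes_no` callables stand in for two LLM calls; the keyword-based fakes below are assumptions made only so the example runs offline.

```python
from __future__ import annotations

from typing import Callable


def qag_score(
    generate_questions: Callable[[str], list[str]],
    answer_yes_no: Callable[[str, str], bool],
    reference: str,
    output: str,
) -> float:
    """QAG-style score: fraction of closed-ended questions answered "yes".

    generate_questions: LLM call that turns `reference` into yes/no questions.
    answer_yes_no: LLM call that answers one question using only `output`.
    """
    questions = generate_questions(reference)
    if not questions:
        return 0.0
    yes_count = sum(answer_yes_no(q, output) for q in questions)  # True counts as 1
    return yes_count / len(questions)


PREFIX = "Does the output state that "


def fake_generate_questions(reference: str) -> list[str]:
    # Stand-in for an LLM: one yes/no question per sentence of the reference.
    return [f"{PREFIX}{s.strip()}?" for s in reference.split(".") if s.strip()]


def fake_answer_yes_no(question: str, output: str) -> bool:
    # Stand-in for an LLM: "yes" only if the claim appears verbatim in the output.
    claim = question[len(PREFIX):-1]
    return claim.lower() in output.lower()


reference = "Paris is the capital of France. Paris hosted the 2024 Olympics."
output = "Paris is the capital of France and is known for the Eiffel Tower."
print(qag_score(fake_generate_questions, fake_answer_yes_no, reference, output))  # 0.5
```

Because each verdict is binary, the final score is a simple ratio that is easy to audit: you can see exactly which questions failed.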

These evaluators are either algorithms built through prompt engineering (G-Eval, DAG, QAG) or, as with Prometheus, the LLM itself.
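To make the idea of an evaluator built from prompt engineering concrete, here's a stripped-down, DAG-style decision tree: each internal node is a yes/no question put to a judge LLM, and each leaf holds a score. The node classes and the keyword-based `fake_judge` are hypothetical; this is not DeepEval's DAG API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    score: float  # final score returned when the walk ends here


@dataclass
class Question:
    prompt: str   # yes/no question sent to the judge
    if_yes: Node
    if_no: Node


Node = Union[Leaf, Question]


def evaluate_dag(node: Node, output: str, judge: Callable[[str, str], bool]) -> float:
    """Walk the tree, letting the judge pick each branch, until a leaf is reached."""
    while isinstance(node, Question):
        node = node.if_yes if judge(node.prompt, output) else node.if_no
    return node.score


def fake_judge(question: str, output: str) -> bool:
    # Stand-in for an LLM answering yes/no; uses simple keyword checks only.
    if "heading" in question:
        return output.startswith("#")
    if "bullet" in question:
        return "\n- " in output
    return False


tree = Question(
    "Does the summary start with a heading?",
    if_yes=Question("Does the summary use bullet points?", if_yes=Leaf(1.0), if_no=Leaf(0.5)),
    if_no=Leaf(0.0),
)
print(evaluate_dag(tree, "# Summary\n- point one\n- point two", fake_judge))  # 1.0
print(evaluate_dag(tree, "# Summary\nJust prose.", fake_judge))  # 0.5
```

Since every branch is a narrow yes/no judgment, the final score is fully determined by the judge's answers, which makes this style easier to control than a single open-ended rating.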

We’ll go through each of these and figure out which ones make the most sense for your use case and system. But first, let’s take a step back and understand why we’re using LLM evaluators to test LLM applications in the first place.
