Evaluating Large Language Model (LLM) applications is just as important as unit testing traditional software. But building an effective LLM evaluation pipeline isn’t straightforward. A strong eval workflow demands a wide range of custom LLM metrics tailored to your LLM app’s task, goals, characteristics, and quality standards.

That’s where G-Eval comes in.

G-Eval is an LLM-eval that makes it easy to build research-backed, LLM-as-a-judge, custom metrics — often from just a single sentence written in plain language. An evaluation prompt for G-Eval might look something like this:

prompt_template = """

🧠 Answer Correctness Evaluation

Task:

Rate the assistant's answer based on how correct it is given the question and context.

Criteria:

Correctness (1–5) — Does the answer factually align with the provided context and directly address the question?

Steps:

1. Read the question and context.

2. Check if the answer is factually correct and relevant.

Question: {question}

Context: {context}

Answer: {answer}

Score (1–5):

"""

But as you’ll learn later in this article, things aren't as simple as they seem. I’ll walk you through everything you need to know about G-Eval, including:

What G-Eval is, how it works, and how it addresses the common pitfalls of LLM-based evaluation

How to implement a G-Eval metric, choose the right criteria, and when to specify evaluation steps

Tips for improving G-Eval beyond the original paper’s implementation

The most commonly used G-Eval metrics — like correctness, coherence, fluency, and more

PS. We'll also show how to use DeepEval, ⭐ the open-source LLM evaluation framework, to implement G-Eval in 5 lines of code.

TL;DR

G-Eval is a SOTA, research-backed framework that uses LLM-as-a-judge to evaluate LLM outputs on any criteria using everyday language.

G-Eval uses techniques such as chain-of-thought prompting, probability-weighted summation of score tokens, and a form-filling paradigm to bypass pitfalls LLM judges are commonly vulnerable to.

G-Eval can be further optimized by introducing rubrics and hardcoding criteria, and can be extended to multi-turn use cases.

G-Eval can be used to evaluate AI agents, for both single-turn and multi-turn use cases.

DeepEval allows anyone to implement G-Eval in under 5 lines of code (docs here).

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCaseParams

correctness_metric = GEval(
    name="Correctness",
    criteria="Determine whether the actual output is factually correct based on the expected output.",
    evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT, LLMTestCaseParams.EXPECTED_OUTPUT],
)

What is G-Eval?

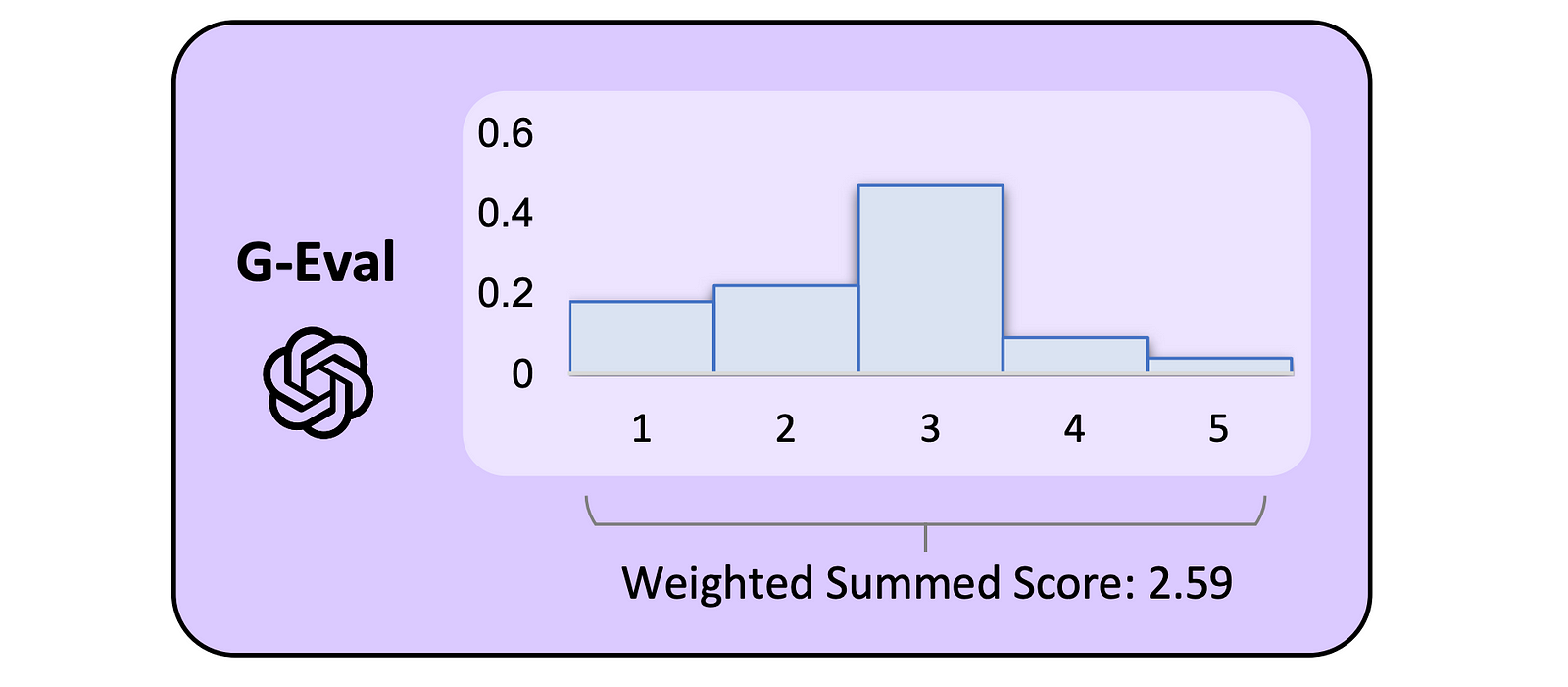

G-Eval is a framework that uses LLM-as-a-judge with chain-of-thought (CoT) prompting to evaluate LLM outputs based on any custom criteria. It uses an automatic CoT approach to decompose your criteria and evaluates LLM outputs through a three-step process:

Evaluation Step Generation: an LLM first transforms your natural language criterion into a structured list of evaluation steps.

Judging: these steps are then used by an LLM judge to assess your application’s output.

Scoring: the resulting judgments are weighted by their log-probabilities to produce a final G-Eval score.

![](https://images.ctfassets.net/otwaplf7zuwf/12StS90npeMOTt9xKLt6jg/4479f3f3ca0021931750c2223fd39f0f/image.png)
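To make the scoring step concrete, here is a minimal sketch of a probability-weighted score: each candidate score is multiplied by the probability the judge assigned to it, and the products are summed. The function name `weighted_score`, the example log-probabilities, and the renormalization over candidate scores are assumptions made for illustration, not values or code taken from the paper or from DeepEval.

```python
import math


def weighted_score(score_logprobs: dict[int, float]) -> float:
    """Return sum(p(s) * s) over the candidate scores.

    score_logprobs maps each candidate score (e.g. 1-5) to the log-probability
    the judge assigned to that score token. Probabilities are renormalized over
    the candidates so they sum to 1 (an assumption of this sketch).
    """
    if not score_logprobs:
        raise ValueError("need at least one candidate score")
    probs = {s: math.exp(lp) for s, lp in score_logprobs.items()}
    total = sum(probs.values())
    return sum(s * p / total for s, p in probs.items())


# Hypothetical log-probabilities for the score tokens "1" through "5".
example_logprobs = {
    1: math.log(0.02),
    2: math.log(0.08),
    3: math.log(0.20),
    4: math.log(0.50),
    5: math.log(0.20),
}

score = weighted_score(example_logprobs)
print(round(score, 2))  # 3.78
```

A plain integer vote would report 4 here. The weighted version yields a continuous 3.78, which keeps the judge's uncertainty in the score and reduces ties between outputs.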

G-Eval was first introduced in the paper “G-Eval: NLG Evaluation using GPT-4 with Better Human Alignment”, and was originally developed as a superior alternative to traditional reference-based metrics like BLEU and ROUGE, which struggle with subjective and open-ended tasks that require creativity, nuance, and an understanding of word semantics.

G-Eval makes great LLM evaluation metrics because it is accurate, easily tunable, and surprisingly consistent across runs. In fact, here are the top use cases for G-Eval metrics:

Answer Correctness: Measures how well the LLM's generated response aligns with the expected output.

Coherence: Measures the logical and linguistic structure of the LLM-generated response.

Tonality: Measures the tone and style of the LLM-generated response.

Safety: Measures how safe and ethical the response is, typically for responsible AI.

Custom RAG: Measures the quality, typically the faithfulness, of a RAG system.

Back to the paper: The original G-Eval process involved taking a user-defined criterion and converting it into step-by-step instructions, which were then embedded into a prompt template for an LLM to generate a score. The criterion prompt for coherence in the paper looked like this:

g_eval_criteria = """

Coherence (1-5) - the collective quality of all sentences. We align this dimension with

the DUC quality question of structure and coherence whereby "the summary should be

well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic."

"""

This resulted in the following final evaluation prompt once evaluation steps were generated from the above criterion:

g_eval_prompt_template = """

You will be given one summary written for a news article.

Your task is to rate the summary on one metric.

Please make sure you read and understand these instructions carefully. Please keep this

document open while reviewing, and refer to it as needed.

Evaluation Criteria:

Coherence (1-5) - the collective quality of all sentences. We align this dimension with

the DUC quality question of structure and coherence whereby "the summary should be

well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic."

Evaluation Steps:

1. Read the news article carefully and identify the main topic and key points.

2. Read the summary and compare it to the news article. Check if the summary covers the main

topic and key points of the news article, and if it presents them in a clear and logical order.

3. Assign a score for coherence on a scale of 1 to 5, where 1 is the lowest and 5 is the highest

based on the Evaluation Criteria.

Example:

Source Text:

{{Document}}

Summary:

{{Summary}}

Evaluation Form (scores ONLY):

- Coherence:

"""

Note that this evaluation prompt represents G-Eval in its simplest form. As you continue through the article, we’ll explore different versions of G-Eval and how it can be improved even beyond the original implementation.

The research also showed that G-Eval consistently outperformed both traditional statistical scorers and modern LLM-based metrics such as GPTScore, BERTScore, and UniEval, across a variety of custom tasks, including:

Text Summarization: G-Eval achieved the highest Spearman correlation with human judgments (0.514), outperforming all baselines on coherence, consistency, fluency, and relevance.

Dialogue Generation: G-Eval led across dimensions such as naturalness, coherence, engagingness, groundedness, and hallucination detection.

Hallucination Detection: G-Eval outperformed all other evaluators on the QAGS benchmark.

![](https://images.ctfassets.net/otwaplf7zuwf/1PQH4vMzK6XcVeIPqDzZeb/392bcecdc298d2562bd8649e9cd25b7b/image.png)
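For context on what "Spearman correlation with human judgments" measures, here is a small, library-free sketch: it ranks the metric's scores and the human scores for the same outputs, then computes the Pearson correlation of those ranks. The helper names and the five example scores are made up for illustration and are not data from the paper.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average rank of the tied group
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(xs: list[float], ys: list[float]) -> float:
    """Spearman rank correlation: Pearson correlation computed on the ranks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally sized lists with at least 2 items")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(vx * vy)


# Hypothetical scores for five outputs: an automatic metric vs. human raters.
metric_scores = [3.78, 2.10, 4.60, 1.50, 3.20]
human_scores = [4, 3, 5, 1, 2]

rho = spearman(metric_scores, human_scores)
print(round(rho, 2))  # 0.9
```

A value near 1 means the metric orders outputs almost the same way humans do, which is what "better human alignment" refers to.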

In the next sections, we’ll go straight to implementation before diving deeper into common issues with LLM-as-a-judge and explaining exactly how the techniques behind G-Eval solve them.

How to Implement a G-Eval Metric In Code

We saw what a simple G-Eval prompt looks like at the beginning of this article, but that doesn’t handle all the nuances. I’d like to introduce DeepEval’s implementation of G-Eval instead (docs here), which is much simpler and looks like this:

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCaseParams, LLMTestCase

criteria = """Coherence (1-5) - the collective quality of all sentences. We align this dimension with
the DUC quality question of structure and coherence whereby the summary should be
well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic."""

coherence_metric = GEval(
    name="Coherence",
    criteria=criteria,
    evaluation_params=[LLMTestCaseParams.INPUT, LLMTestCaseParams.ACTUAL_OUTPUT, LLMTestCaseParams.EXPECTED_OUTPUT],
)

# Define your test case: actual_output is your LLM output.
# expected_output is an illustrative value, supplied because EXPECTED_OUTPUT is listed in evaluation_params.
test_case = LLMTestCase(
    input="Hey how's the weather like today?",
    actual_output="It's alright!",
    expected_output="It's sunny and mild today.",
)

# Run the G-Eval metric, then inspect its score and reasoning
coherence_metric.measure(test_case)
print(coherence_metric.score, coherence_metric.reason)

DeepEval (⭐ open-source!) allows you to define a G-Eval metric in 3 simple steps:

Write your evaluation criteria in plain English

Assign your custom metric a name

Specify which parts of the LLM interaction (evaluation_params) you want to evaluate

(DeepEval is an easy-to-use, open-source framework designed for evaluating and testing large language model systems. Think of it like Pytest — but purpose-built for unit testing LLM outputs. In fact, it was the first eval library to include G-Eval as part of its metric suite.)

Select Your Evaluation Criteria

Defining a G-Eval metric is as simple as providing a criterion and selecting the evaluation parameter in a test case (more on this later), since G-Eval automatically converts the criterion into structured evaluation steps used during evaluation.

If you’re looking for more G-Eval code examples, you should check out this blog I wrote on the top G-Eval use-cases — it’s packed with practical samples from the most common real-world use-cases.

The most important part of defining a good G-Eval metric is crafting the right evaluation criteria. Reviewing existing input-response pairs (if any) is one of the most effective ways to identify the traits that matter for your use case and refine your criteria accordingly. For example, in the case of a medical chatbot:

user_query = "My nose has been running constantly. Could it be something serious?"

llm_output = """It's probably nothing to worry about. Most likely just allergies or

a cold. Don't overthink it."""

The example above reveals a key weakness. In high-stakes domains like healthcare, every interaction must reinforce trust and reliability. Even if the LLM’s response is factually accurate, a casual tone (like saying “Don’t overthink it”) can erode user confidence. To avoid this, you should define evaluation criteria that enforce a professional tone. For example:

“Evaluate whether llm output maintains a professional, respectful tone appropriate for medical communication, avoiding overly casual language.”

By reviewing multiple input-response pairs, you’ll start to recognize patterns and better understand which criteria are most important for your specific LLM application.

A Note On The Form-filling Paradigm

You may have noticed that in the DeepEval example above, G-Eval evaluates not only the actual LLM output but also the expected output. This is because G-Eval uses a form-filling paradigm, allowing it to assess multiple evaluation parameters within a single test case.

This allows G-Eval to support more complex, multi-field evaluations. The fields in a typical test case include:

Input: the user query or prompt.

Actual Output: the response generated by the LLM.

Expected Output: the ideal or ground truth response, if available.

Retrieval Context: external knowledge retrieved at runtime (e.g., documents used in a RAG system).

Context: the information the LLM was expected to retrieve or rely on to answer correctly.

![Structure](https://images.ctfassets.net/otwaplf7zuwf/6Wa3NHImgScga0BLzLtUKR/667d3a2f3b9e9c415f60799965b4c4ea/image.png)

These parameters must be explicitly referenced in your evaluation criteria and passed to the G-Eval metric when it’s instantiated. The specific parameters you include will depend on the metric task you’re defining.

For example, if you’re evaluating tone or coherence, referencing only the LLM’s output is usually sufficient. But if you’re building a custom faithfulness metric, you’ll also need to include the retrieval context — so the evaluation can determine how accurately the output reflects the retrieved information.
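To make the form-filling idea concrete, here is a minimal, library-free sketch of how only the selected test case fields could be rendered into one evaluation prompt that ends in an empty score form. `EvalCase`, `render_fields`, `build_prompt`, and the prompt wording are hypothetical and written for illustration. They are not DeepEval's API or its actual prompt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalCase:
    """Hypothetical stand-in for a test case; not DeepEval's LLMTestCase."""
    input: str
    actual_output: str
    expected_output: Optional[str] = None
    retrieval_context: Optional[list[str]] = None
    context: Optional[list[str]] = None


def render_fields(case: EvalCase, params: list[str]) -> str:
    """Render only the requested fields, failing loudly if one is missing."""
    sections = []
    for name in params:
        value = getattr(case, name)
        if value is None:
            raise ValueError(f"test case is missing required field: {name}")
        if isinstance(value, list):
            value = "\n".join(f"- {item}" for item in value)
        label = name.replace("_", " ").title()
        sections.append(f"{label}:\n{value}")
    return "\n\n".join(sections)


def build_prompt(criteria: str, steps: list[str], case: EvalCase, params: list[str]) -> str:
    """Assemble criteria, numbered steps, selected fields, and an empty score form."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Evaluation Criteria:\n{criteria}\n\n"
        f"Evaluation Steps:\n{numbered}\n\n"
        f"{render_fields(case, params)}\n\n"
        "Evaluation Form (scores ONLY):\n- Score:"
    )


case = EvalCase(
    input="What is the return window?",
    actual_output="You can return items within 30 days.",
    retrieval_context=["Returns are accepted within 30 days of delivery."],
)

# A faithfulness-style metric needs the retrieval context; a tone metric would not.
prompt = build_prompt(
    criteria="Is the actual output supported by the retrieval context?",
    steps=["Read the retrieval context.", "Check each claim in the actual output against it."],
    case=case,
    params=["actual_output", "retrieval_context"],
)
print(prompt)
```

Passing `params=["actual_output"]` alone would give a prompt suited to a tone or coherence check. Requesting a field the test case does not carry raises an error instead of silently evaluating against nothing.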

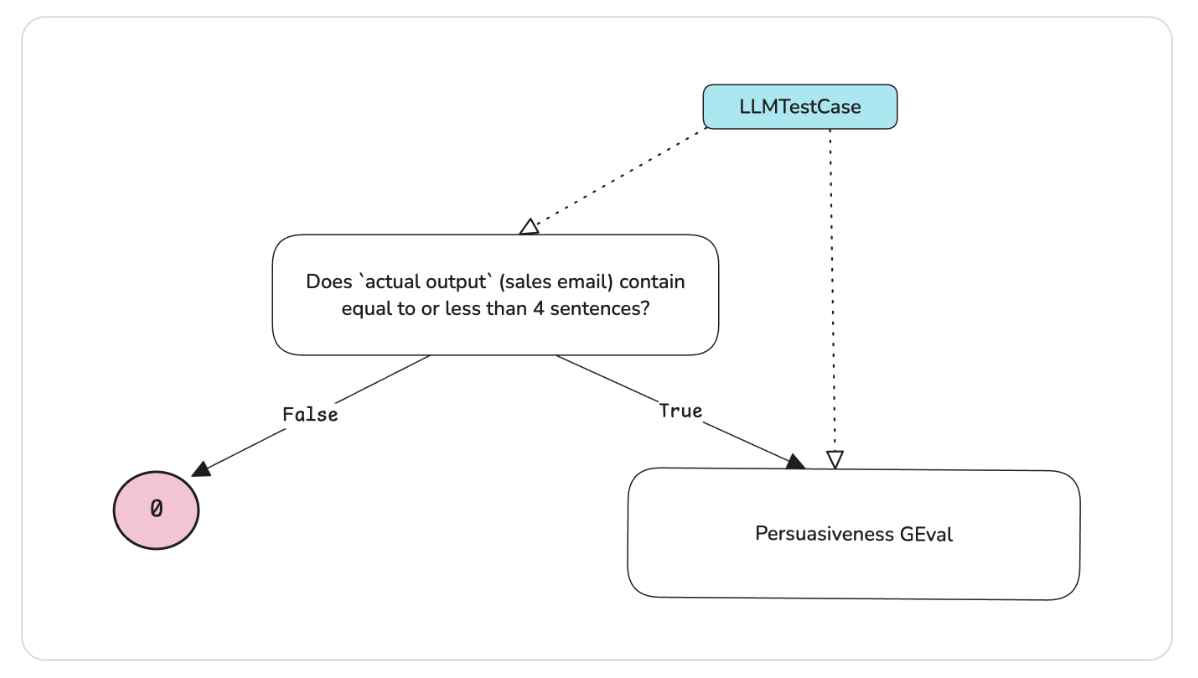

Using G-Eval For AI Agent Evaluation

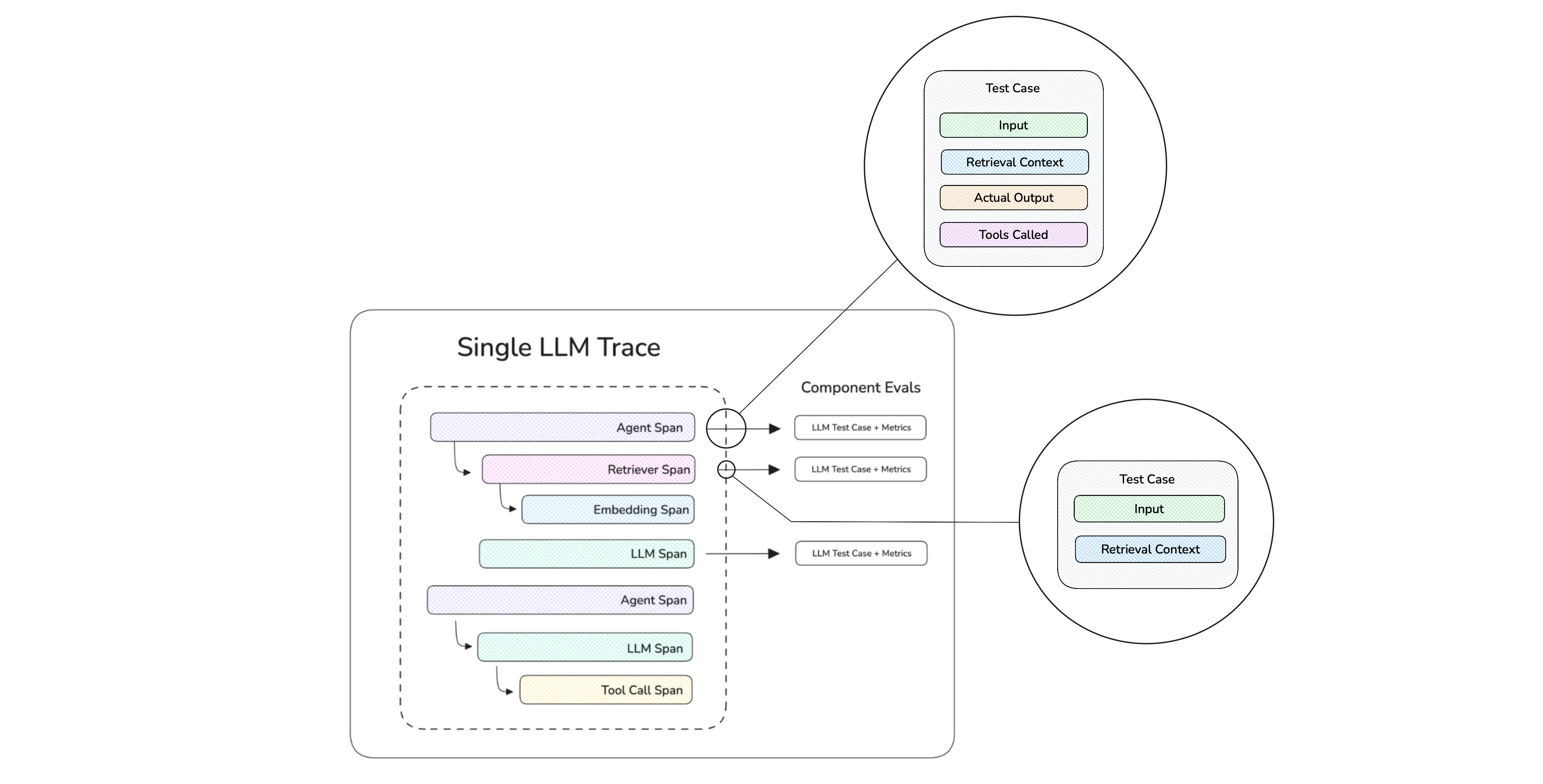

AI agents are a big thing in 2025, so I thought it would be worth talking about how G-Eval is useful for evaluating them too. In the previous section we saw how it works on test cases, but it can equally be used to evaluate agents and the individual components within an agent. Consider this AI agent execution trace:

In the diagram above, each "span" represents a component in your AI agent, and by "tracing" your AI agent we are able to:

Construct test cases for each component, and

Evaluate each component using the test cases constructed

These component-level metrics, of course, include G-Eval. A common use case of G-Eval here is agentic handoff, where you want to evaluate whether an agent has handed off the task to the correct neighboring agent.

In code, it's also not so difficult:

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCaseParams
from deepeval.tracing import observe

@observe(type="agent")
def ai_agent_1():
    pass

@observe(type="agent")
def ai_agent_2():
    pass

# Custom G-Eval metric that judges the supervisor's handoff decision
handoff_correctness = GEval(
    name="Handoff Correctness",
    criteria="Your custom criteria for the correct handoff...",
    evaluation_params=[LLMTestCaseParams.INPUT],
)

@observe(type="agent", metrics=[handoff_correctness])
def supervisor_agent():
    something = ...
    if something:
        ai_agent_1()
    else:
        ai_agent_2()

That's it! You can learn more about running evals on AI agents in this article here.
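If the span idea feels abstract, the following library-free sketch shows how tracing can turn each component call into a record that a metric could later judge. The `span` decorator, the `TRACE` list, the agent functions, and the keyword-based routing rule are all hypothetical stand-ins written for illustration. They do not reflect how DeepEval's tracing is implemented.

```python
import functools

TRACE: list[dict] = []  # hypothetical in-memory trace: one record per finished span


def span(name: str):
    """Toy tracing decorator that records each call's input and output."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(query: str):
            output = fn(query)
            TRACE.append({"span": name, "input": query, "output": output})
            return output
        return wrapper
    return decorator


@span("billing_agent")
def billing_agent(query: str) -> str:
    return f"Billing looked into: {query}"


@span("support_agent")
def support_agent(query: str) -> str:
    return f"Support looked into: {query}"


AGENTS = {"billing_agent": billing_agent, "support_agent": support_agent}


@span("supervisor")
def supervisor(query: str) -> str:
    # Toy routing rule standing in for an LLM's handoff decision.
    chosen = "billing_agent" if "invoice" in query.lower() else "support_agent"
    AGENTS[chosen](query)
    return chosen  # the handoff decision is what a handoff metric would judge


supervisor("Where is my invoice?")

# One test case per span: the supervisor's case pairs the query with the chosen agent.
test_cases = {record["span"]: (record["input"], record["output"]) for record in TRACE}
print(test_cases["supervisor"])  # ('Where is my invoice?', 'billing_agent')
```

Each entry in `test_cases` is the raw material for a component-level evaluation: the supervisor's entry could be handed to a handoff metric, while the billing agent's entry could be scored for correctness or tone.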
