It is no secret that evaluating the outputs of Large Language Models (LLMs) is essential for anyone building robust LLM applications. Whether you're fine-tuning for accuracy, enhancing contextual relevance in a RAG pipeline, or increasing task completion rate in an AI agent, choosing the right evaluation metrics is critical. Yet, LLM evaluation remains notoriously difficult—especially when it comes to deciding what to measure and how.

Having built one of the most adopted LLM evaluation frameworks myself, I'll use this article to teach you everything you need to know about LLM evaluation metrics, with code samples included. Ready for the long list? Let’s begin.

(Update: For metrics evaluating AI agents, check out this new article)

TL;DR

Key takeaways:

LLM metrics measure output quality across dimensions like correctness and relevance.

Common mistake: relying on traditional scorers like BLEU/ROUGE, which do not capture the semantic nuance in LLM outputs.

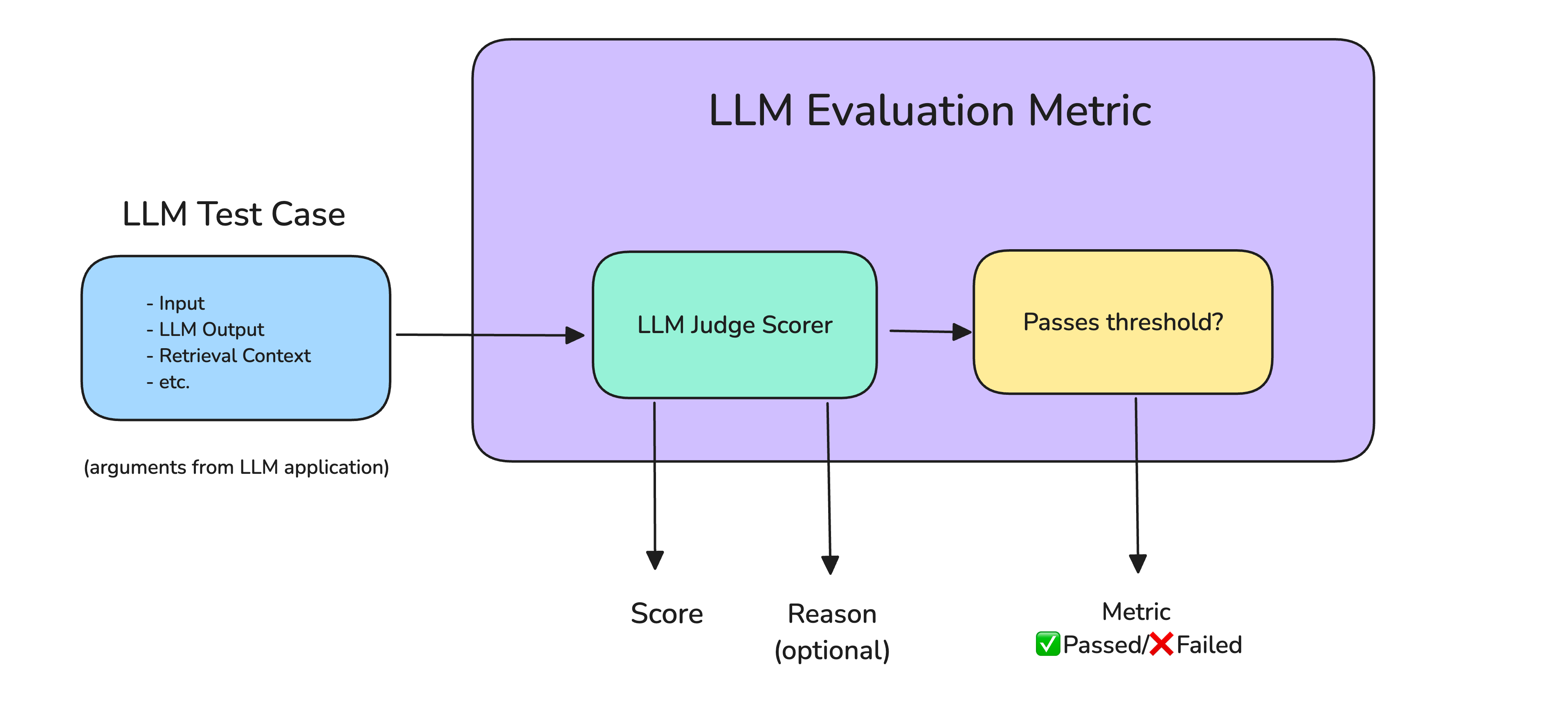

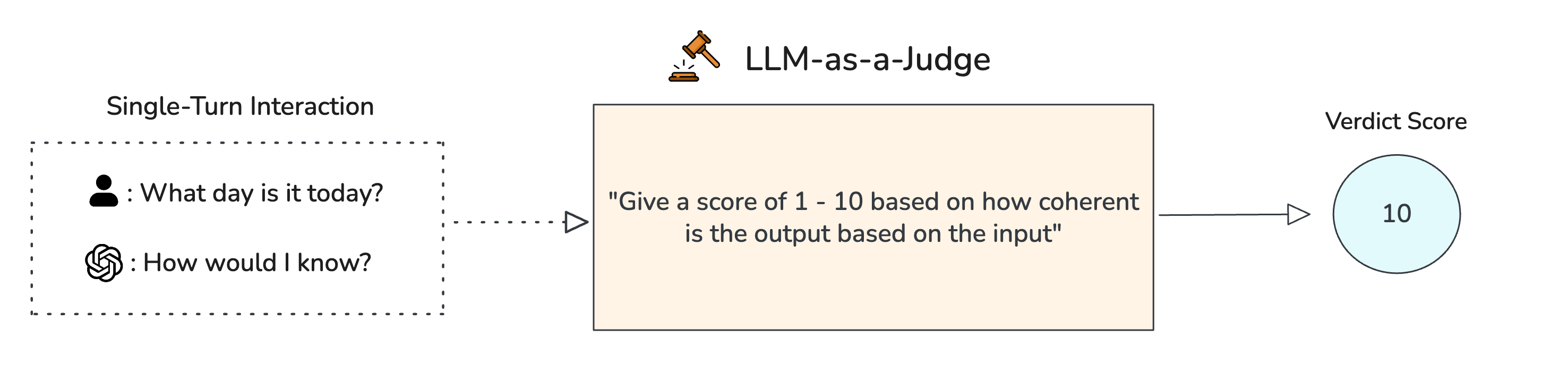

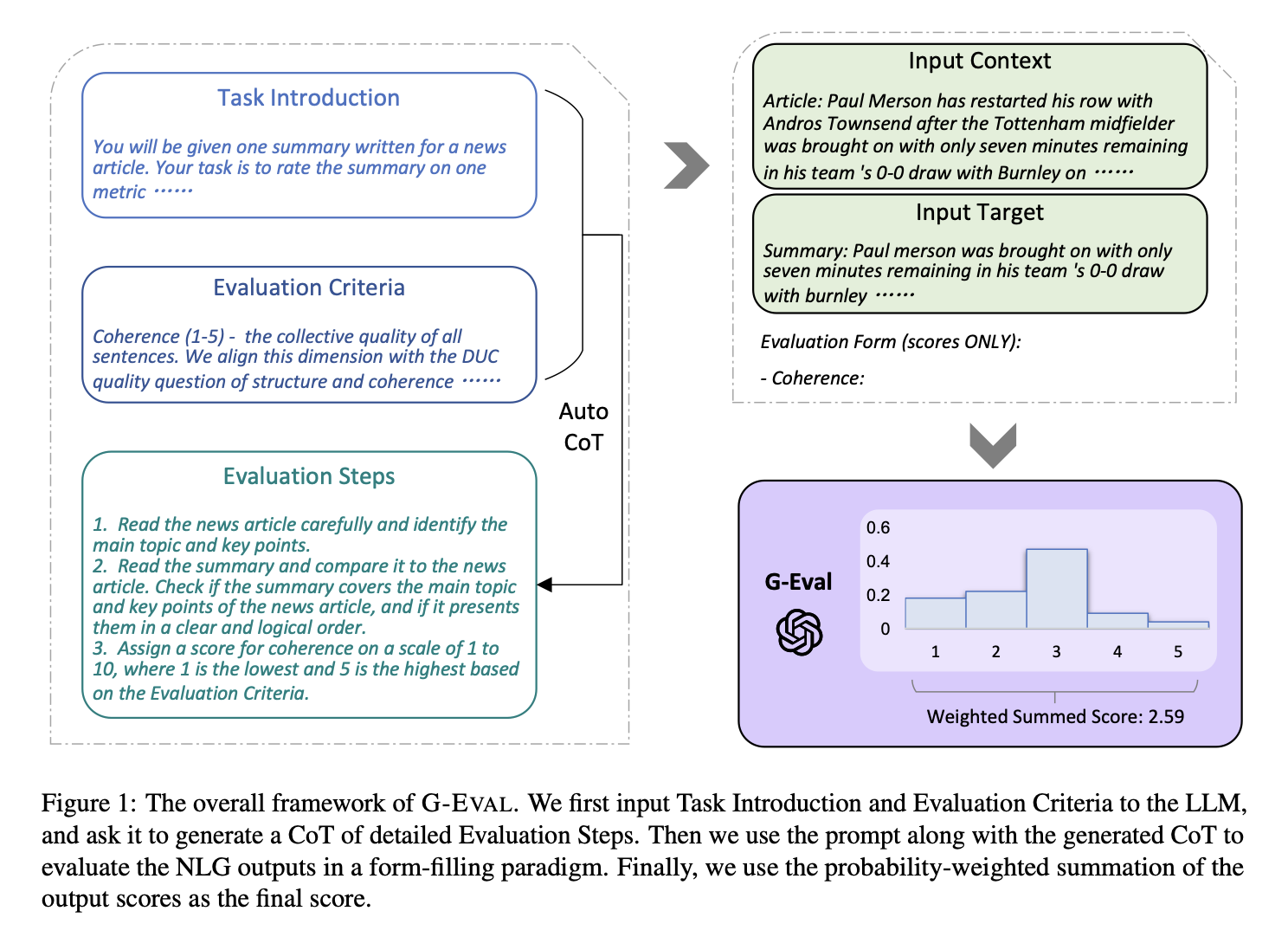

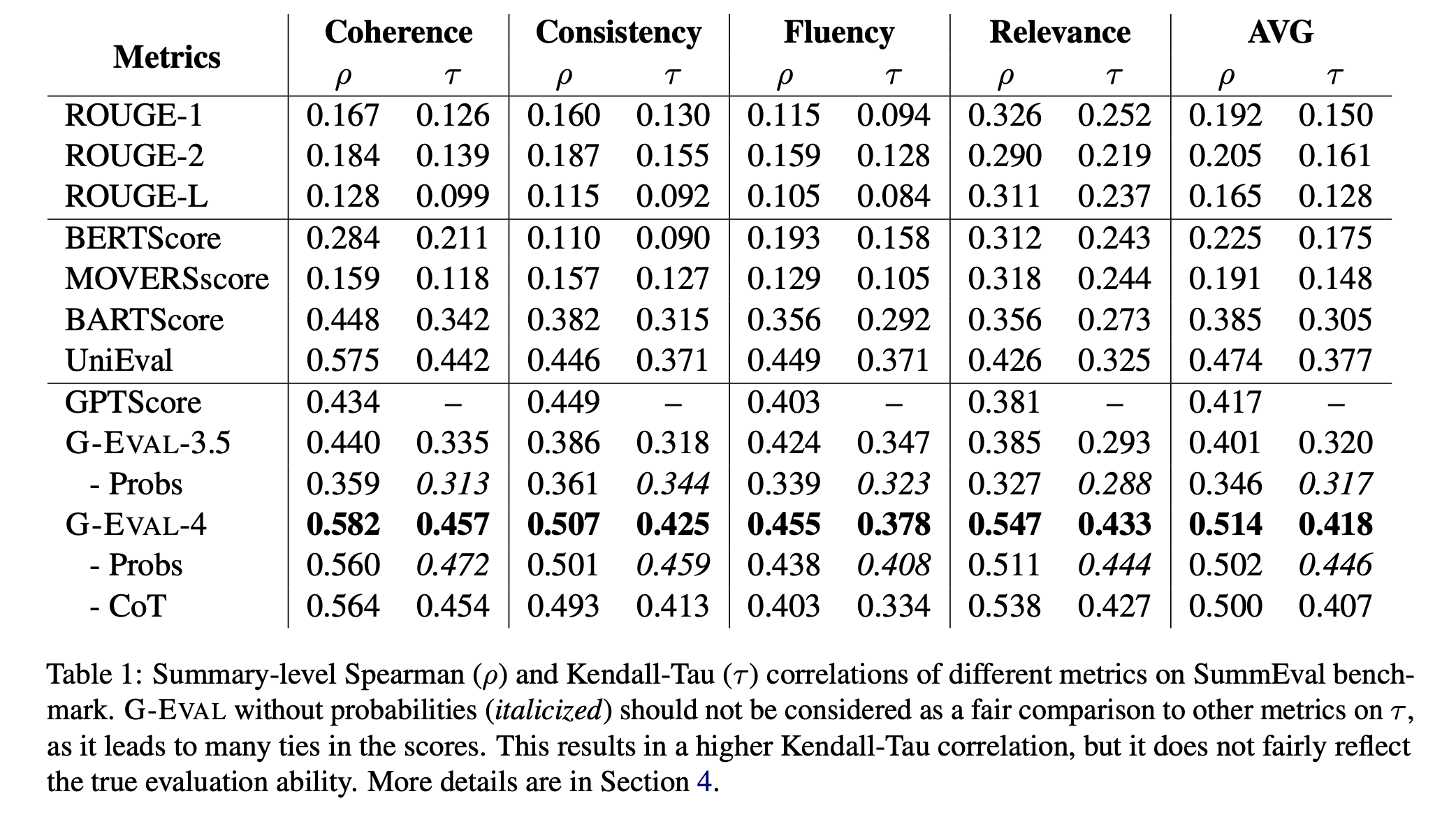

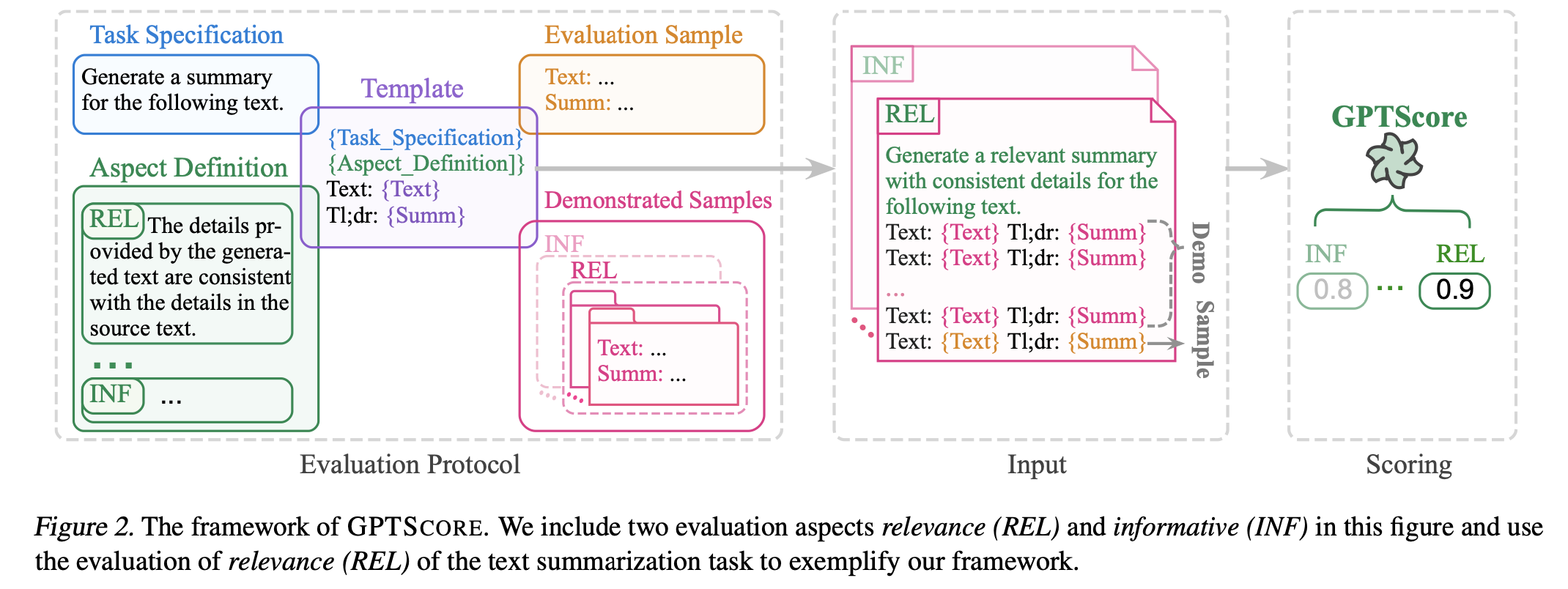

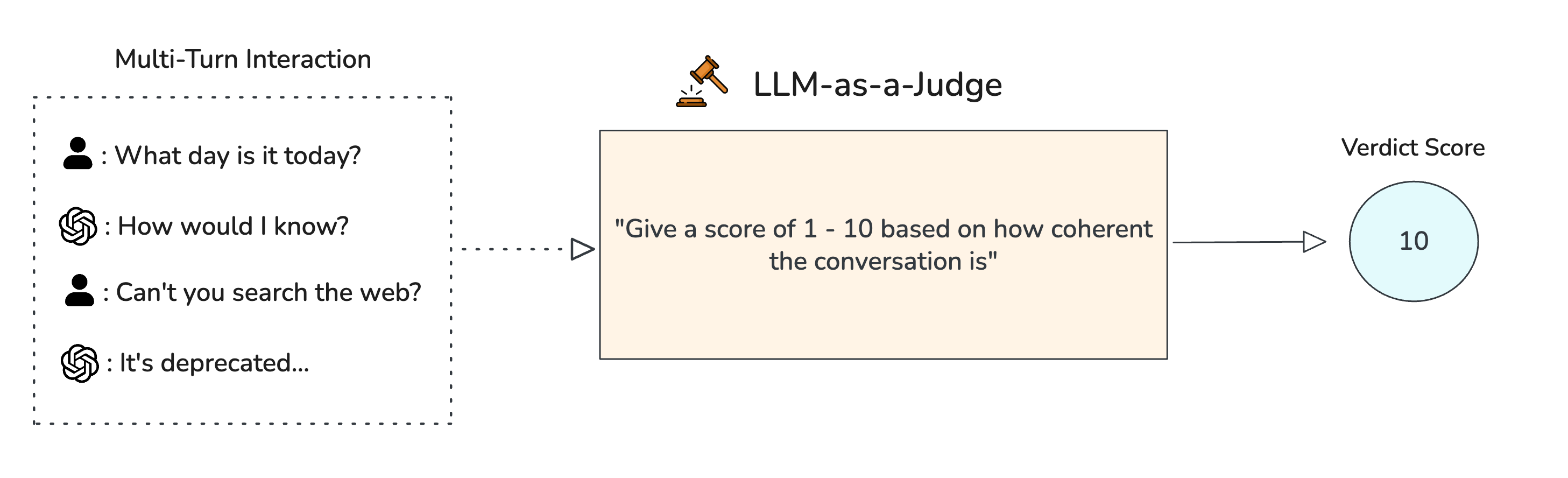

LLM-as-a-judge, which uses an LLM to evaluate outputs against natural language rubrics, is the most reliable method, but it requires techniques like G-Eval to work well.

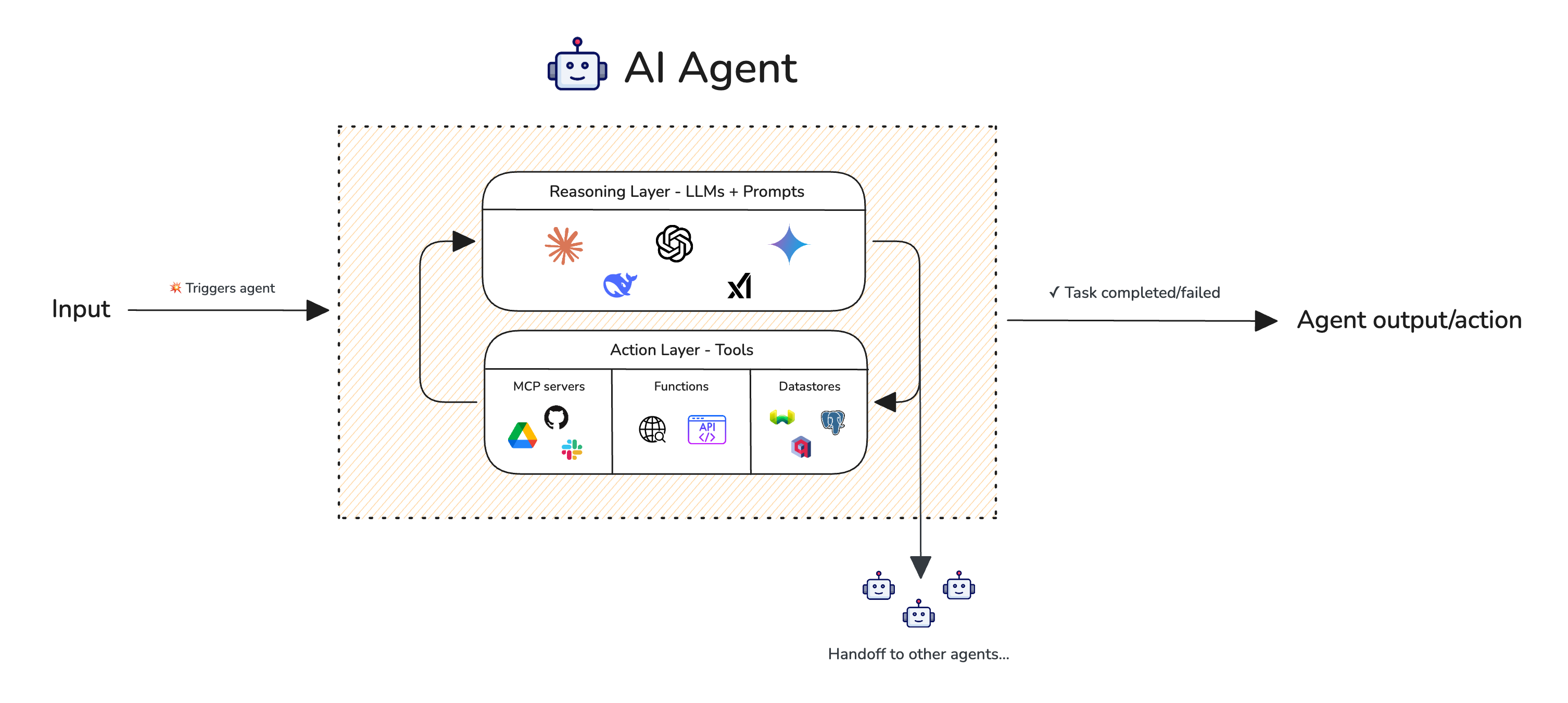

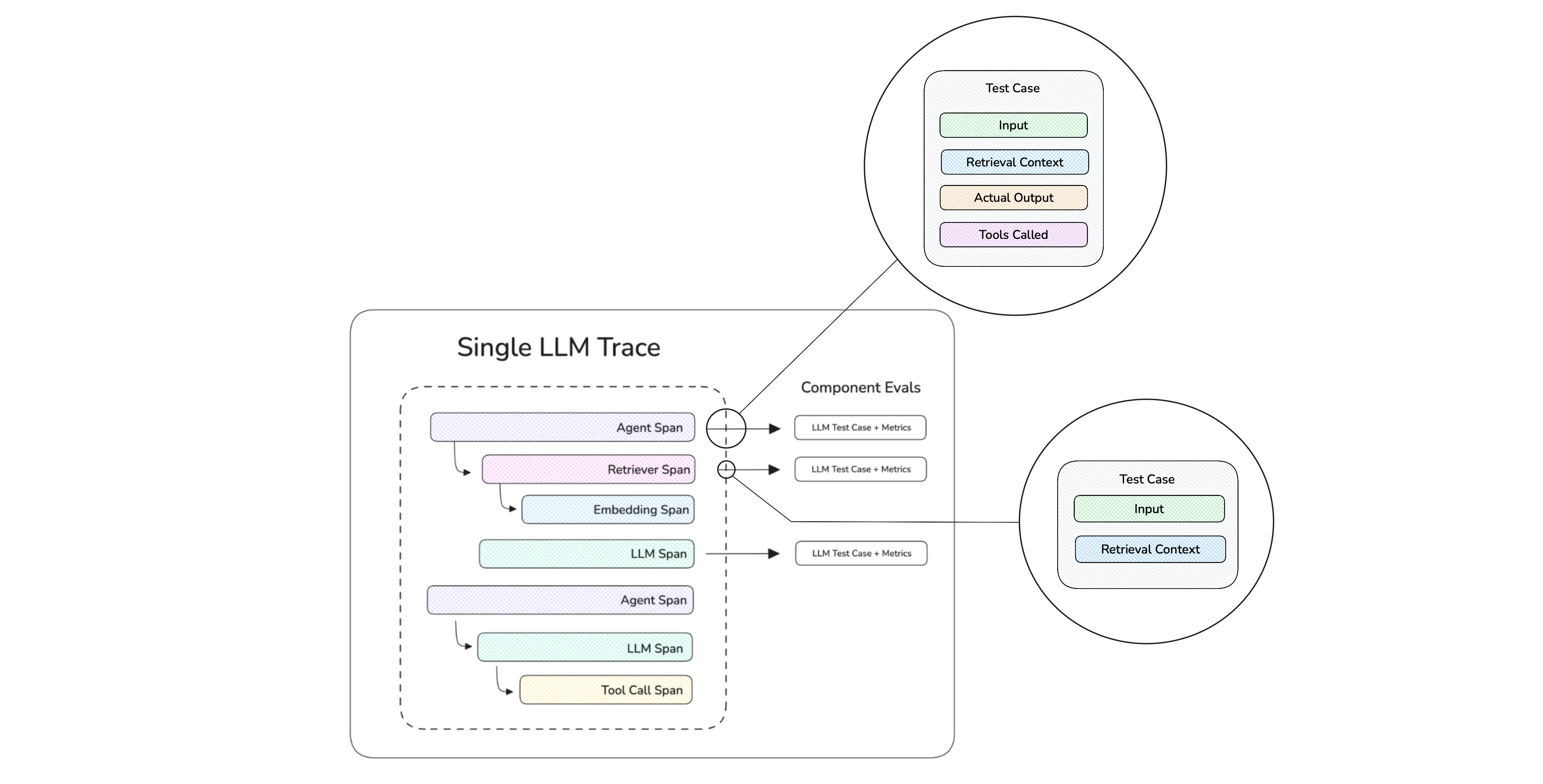

Evaluation metrics in the context of LLM evaluation can be categorized as either single-turn or multi-turn, and can target either an end-to-end LLM system or an individual component.

Metrics for AI agents, RAG, chatbots, and foundational models are all different and have to be complemented with use-case-specific ones (e.g. text-to-SQL, writing assistants).

DeepEval (100% OS ⭐https://github.com/confident-ai/deepeval) allows anyone to implement SOTA LLM metrics in 5 lines of code.

What are LLM Evaluation Metrics?

LLM evaluation metrics, such as answer correctness, semantic similarity, and hallucination, score an LLM system's output based on criteria you care about. They are critical to LLM evaluation because they quantify the performance of different LLM systems (and an LLM system can be just the LLM itself).

Here are the most important and common metrics that you will likely need before launching your LLM system into production:

Answer Relevancy: Determines whether an LLM output is able to address the given input in an informative and concise manner.

Task Completion: Determines whether an LLM agent is able to complete the task it was set out to do.

Correctness: Determines whether an LLM output is factually correct based on some ground truth.

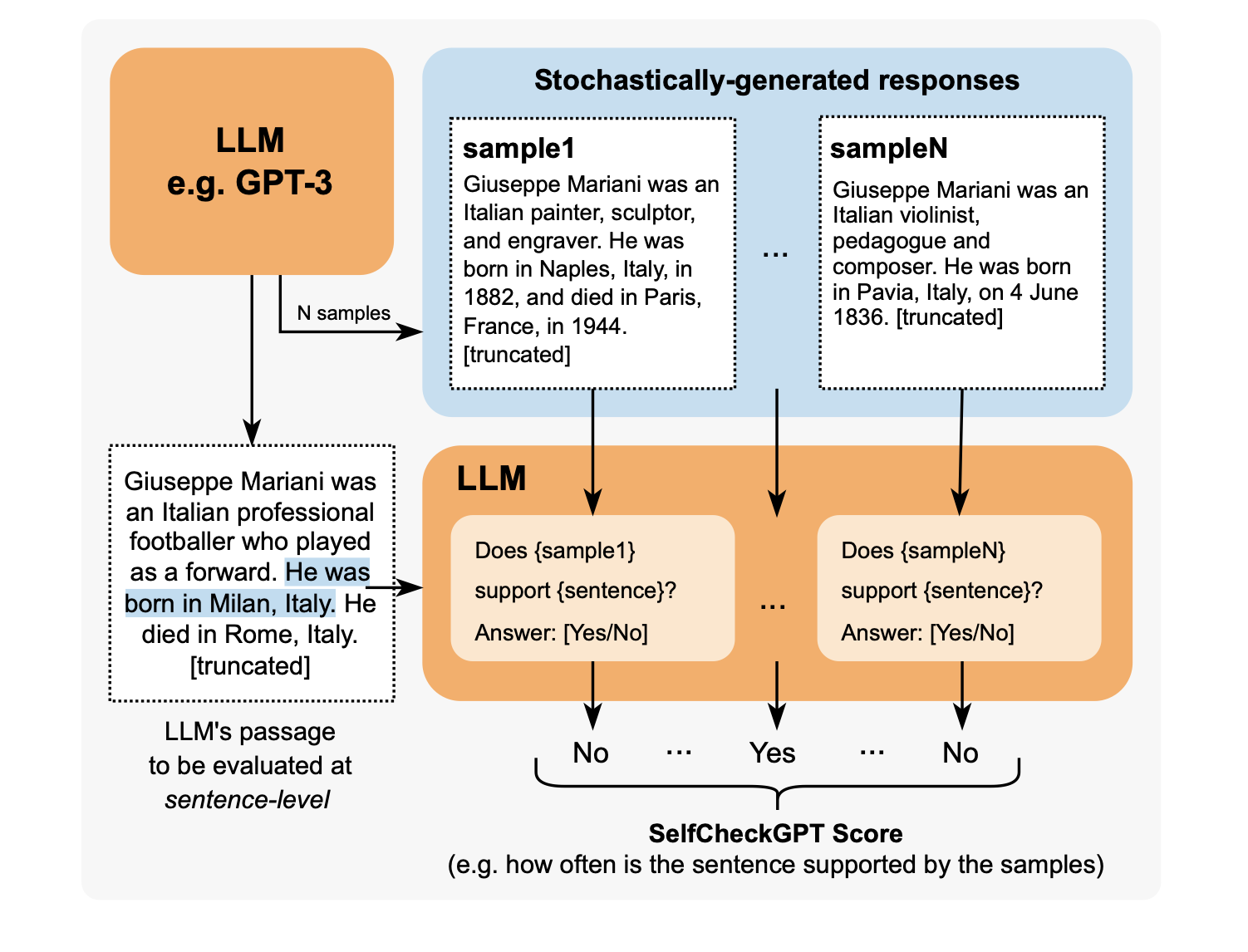

Hallucination: Determines whether an LLM output contains fake or made-up information.

Tool Correctness: Determines whether an LLM agent is able to call the correct tools for a given task.

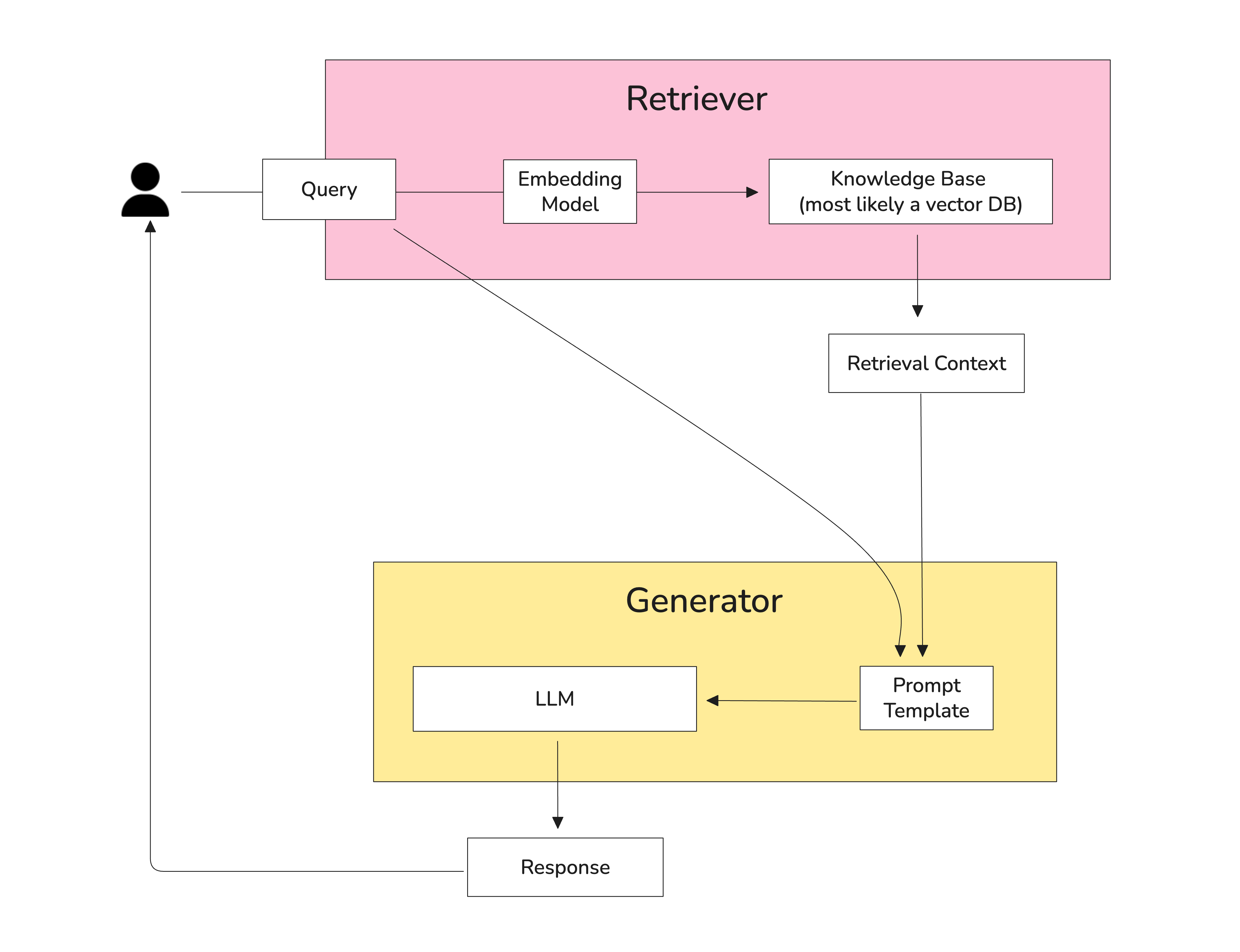

Contextual Relevancy: Determines whether the retriever in a RAG-based LLM system is able to extract the most relevant information for your LLM as context.

Responsible Metrics: Includes metrics such as bias and toxicity, which determine whether an LLM output contains (generally) harmful and offensive content.

Task-Specific Metrics: Includes metrics such as summarization, which usually contain custom criteria depending on the use case.

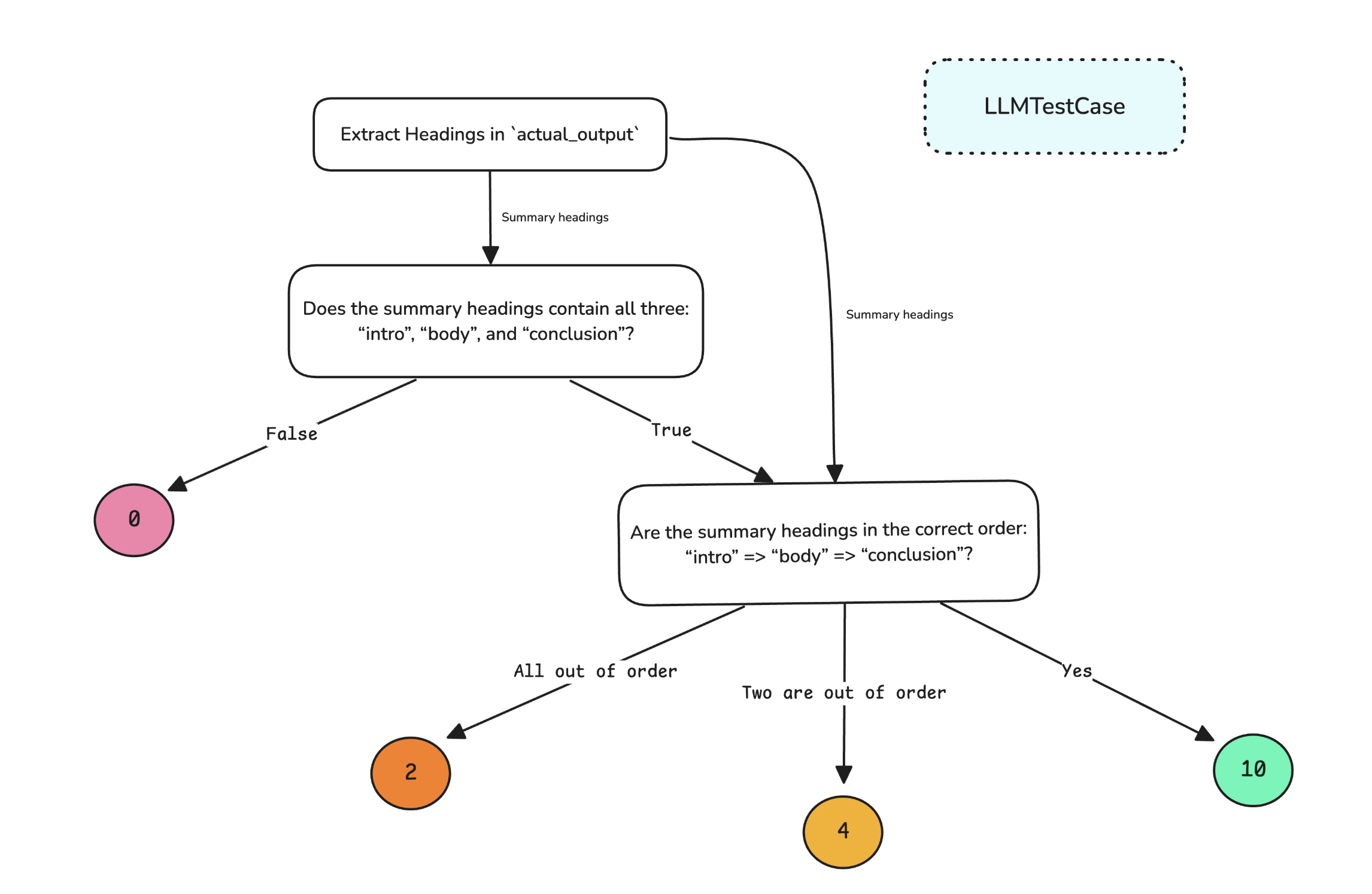

While most metrics are generic and necessary, they are not sufficient to target specific use cases. This is why you'll want at least one custom task-specific metric to make your LLM evaluation pipeline production ready (as you'll see later in the G-Eval and DAG sections). For example, if your LLM application is designed to summarize pages of news articles, you’ll need a custom LLM evaluation metric that scores based on:

Whether the summary contains enough information from the original text.

Whether the summary contains any contradictions of, or hallucinations relative to, the original text.

Moreover, if your LLM application has a RAG-based architecture, you’ll probably need to score for the quality of the retrieval context as well. The point is, an LLM evaluation metric assesses an LLM application based on the tasks it was designed to do. (Note that an LLM application can simply be the LLM itself!)

In fact, this is why LLM-as-a-Judge is the preferred way to compute LLM evaluation metrics, which we will discuss in more depth later.

What makes great metrics?

That brings us to one of the most important points - your choice of LLM evaluation metrics should cover both the evaluation criteria of the LLM use case and the LLM system architecture:

LLM Use Case: Custom metrics specific to the task, consistent across different implementations.

LLM System Architecture: Generic metrics (e.g., faithfulness for RAG, task completion for agents) that depend on how the system is built.

If you decide to change your LLM system completely tomorrow for the same LLM use case, your custom metrics shouldn't change at all, and vice versa. We'll talk more about the best strategy to choose your metrics later (spoiler: you don't want to have more than 5 metrics), but before that let's go through what makes great metrics great.

Great evaluation metrics are:

Quantitative. Metrics should always compute a score when evaluating the task at hand. This approach enables you to set a minimum passing threshold to determine if your LLM application is “good enough” and allows you to monitor how these scores change over time as you iterate and improve your implementation.

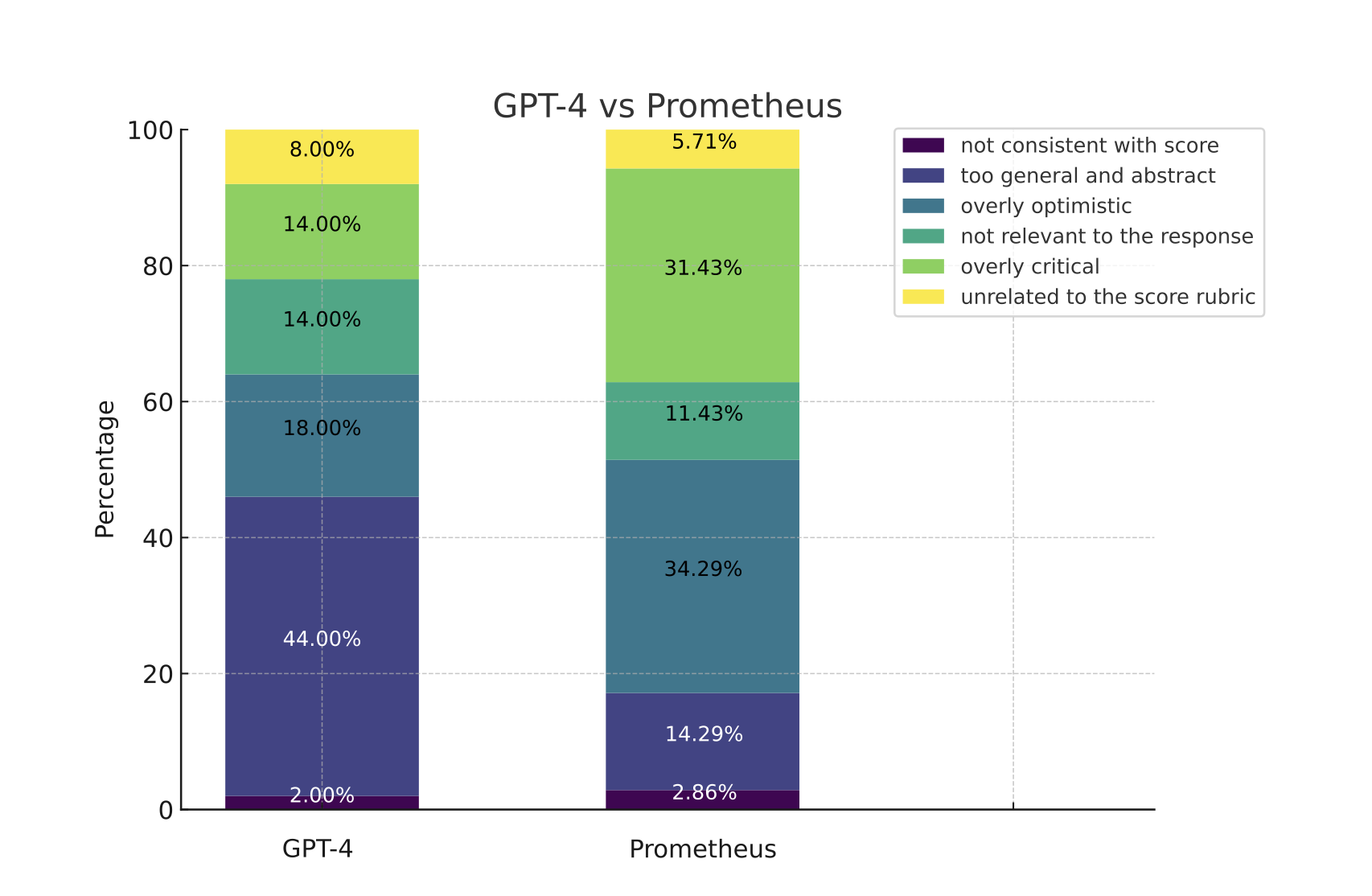

Reliable. As unpredictable as LLM outputs can be, the last thing you want is for an LLM evaluation metric to be equally flaky. Although metrics evaluated using LLMs (aka LLM-as-a-judge or LLM-Evals), such as G-Eval and especially DAG, are more accurate than traditional scoring methods, they are often inconsistent, which is where most LLM-Evals fall short.

Accurate. Reliable scores are meaningless if they don’t truly represent the performance of your LLM application. In fact, the secret to making a good LLM evaluation metric great is to make it align with human expectations as much as possible.
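To make the first two properties concrete, here is a minimal sketch of how a passing threshold and a consistency check might be applied to repeated scores from the same metric on the same test case. The function name `summarize_runs`, the `threshold` and `max_spread` defaults, and the sample scores are all hypothetical, chosen only for illustration; they are not taken from any particular framework.

```python
from statistics import mean, pstdev

def summarize_runs(scores, threshold=0.7, max_spread=0.1):
    """Summarize repeated scores from one metric on one test case.

    threshold and max_spread are illustrative defaults, not recommendations.
    """
    avg = mean(scores)
    spread = pstdev(scores)  # population standard deviation across runs
    return {
        "mean": avg,
        "spread": spread,
        "passed": avg >= threshold,      # quantitative: compare to a minimum bar
        "stable": spread <= max_spread,  # reliable: repeated runs should agree
    }

# Hypothetical scores from running the same metric three times.
steady = summarize_runs([0.80, 0.82, 0.78])
flaky = summarize_runs([0.95, 0.40, 0.90])

print(steady["passed"], steady["stable"])
print(flaky["passed"], flaky["stable"])
```

In this sketch the second set of scores still clears the threshold on average, yet the runs disagree so much that the result is hard to trust. A passing score says little if the metric itself is unstable.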

So the question becomes, how can LLM evaluation metrics compute reliable and accurate scores?

Different Ways to Compute Metric Scores

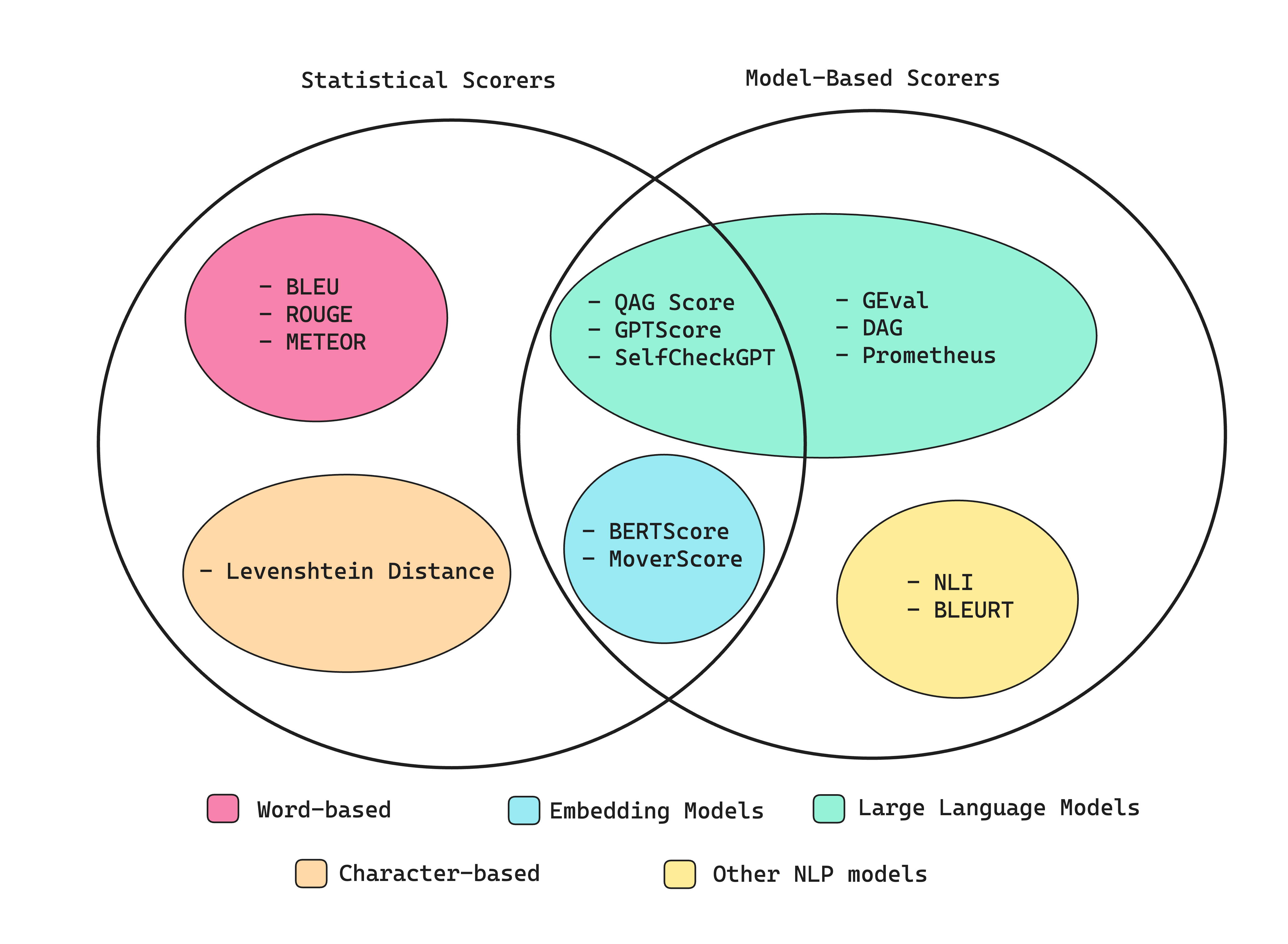

In one of my previous articles, I talked about how LLM outputs are notoriously difficult to evaluate. Fortunately, there are numerous established methods available for calculating metric scores — some utilize neural networks, including embedding models and LLMs, while others are based entirely on statistical analysis.

We’ll go through each method and talk about the best approach by the end of this section, so read on to find out!

Statistical Scorers

Before we begin, I want to start by saying statistical scoring methods in my opinion are non-essential to learn about, so feel free to skip straight to the “G-Eval” section if you’re in a rush. This is because statistical methods perform poorly whenever reasoning is required, making them too inaccurate as scorers for most LLM evaluation criteria.

To quickly go through them:

The BLEU (BiLingual Evaluation Understudy) scorer evaluates the output of your LLM application against annotated ground truths (or expected outputs). It computes the precision of matching n-grams (n consecutive words) between the LLM output and the expected output for each n, takes the geometric mean of those precisions, and applies a brevity penalty if the output is shorter than the expected output.

The ROUGE (Recall-Oriented Understudy for Gisting Evaluation) scorer is primarily used for evaluating text summaries from NLP models, and calculates recall by comparing the overlap of n-grams between LLM outputs and expected outputs. It determines the proportion (0–1) of n-grams in the reference that are present in the LLM output.

The METEOR (Metric for Evaluation of Translation with Explicit Ordering) scorer is more comprehensive since it calculates scores by assessing both precision (the share of output words that match the expected output) and recall (the share of expected-output words that appear in the output), adjusted for word order differences between LLM outputs and expected outputs. It also leverages external linguistic databases like WordNet to account for synonyms. The final score is a harmonic mean of precision and recall, with a penalty for ordering discrepancies.

The Levenshtein distance (or edit distance, you probably recognize this as a LeetCode hard DP problem) scorer calculates the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one word or text string into another, which can be useful for evaluating spelling corrections, or other tasks where the precise alignment of characters is critical.
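The scorers above can be sketched in a few lines of plain Python. This is a simplified illustration, not a reference implementation. `ngram_precision` shows only the clipped n-gram precision that BLEU builds on (full BLEU combines several n-gram orders with a geometric mean and a brevity penalty). `ngram_recall` is a ROUGE-N-style recall. `levenshtein` is the standard dynamic-programming edit distance. The function names and the whitespace tokenization are assumptions made for this example.

```python
from collections import Counter

def ngrams(text, n):
    """Count n-grams in a lowercased, whitespace-tokenized string."""
    tokens = text.lower().split()
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def ngram_precision(output, reference, n=1):
    """BLEU-style clipped precision: share of output n-grams found in the reference."""
    out, ref = ngrams(output, n), ngrams(reference, n)
    total = sum(out.values())
    overlap = sum((out & ref).values())  # Counter & Counter keeps the minimum counts
    return overlap / total if total else 0.0

def ngram_recall(output, reference, n=1):
    """ROUGE-N-style recall: share of reference n-grams found in the output."""
    out, ref = ngrams(output, n), ngrams(reference, n)
    total = sum(ref.values())
    overlap = sum((out & ref).values())
    return overlap / total if total else 0.0

def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]

reference = "the cat sat on the mat"
print(ngram_precision("the cat sat", reference))  # 1.0
print(ngram_recall("the cat sat", reference))     # 0.5
print(levenshtein("kitten", "sitting"))           # 3

# A faithful paraphrase shares no words with the reference, so it scores zero.
print(ngram_precision("a feline rested upon a rug", reference))  # 0.0
```

The last line shows the weakness of these scorers: a paraphrase that a human would accept scores zero because no surface tokens overlap.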

Since purely statistical scorers hardly take any semantics into account and have extremely limited reasoning capabilities, they are not accurate enough for evaluating LLM outputs that are often long and complex. However, there are exceptions. For example, you'll learn later that the tool correctness metric, which assesses an LLM agent's tool calling accuracy (scroll down to the "Agentic Metrics" section at the bottom), uses exact-match with some conditional logic, but this is rare and should not be taken as the standard for LLM evals.
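As a rough illustration of what "exact-match with some conditional logic" could look like, here is a hypothetical sketch of a tool correctness scorer. It is not DeepEval's implementation. The function name `tool_correctness`, the `check_order` flag, and the scoring rule (fraction of expected tools that were called) are all assumptions made for this example.

```python
def tool_correctness(called, expected, check_order=False):
    """Hypothetical scorer in [0, 1] comparing called tool names to expected ones.

    called and expected are lists of tool-name strings.
    """
    if not expected:
        # Nothing was expected: only an empty call list counts as correct.
        return 1.0 if not called else 0.0
    if check_order:
        # Strict mode: the call sequence must match exactly.
        return 1.0 if called == expected else 0.0
    called_set = set(called)
    hits = sum(1 for tool in expected if tool in called_set)
    return hits / len(expected)

print(tool_correctness(["search"], ["search", "calculator"]))  # 0.5
print(tool_correctness(["calculator", "search"], ["search", "calculator"]))  # 1.0
print(tool_correctness(["calculator", "search"], ["search", "calculator"], check_order=True))  # 0.0
```

Exact matching works here because tool names are discrete identifiers with no semantic nuance to capture, unlike free-form text.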

