Building a Retrieval-Augmented Generation (RAG) pipeline isn’t the hard part; knowing whether it’s actually working is. You might fine-tune prompts, tweak retrievers, and switch models, but if your system is still hallucinating or citing the wrong context, what’s really broken? Without the right RAG evaluation metrics, it’s guesswork.

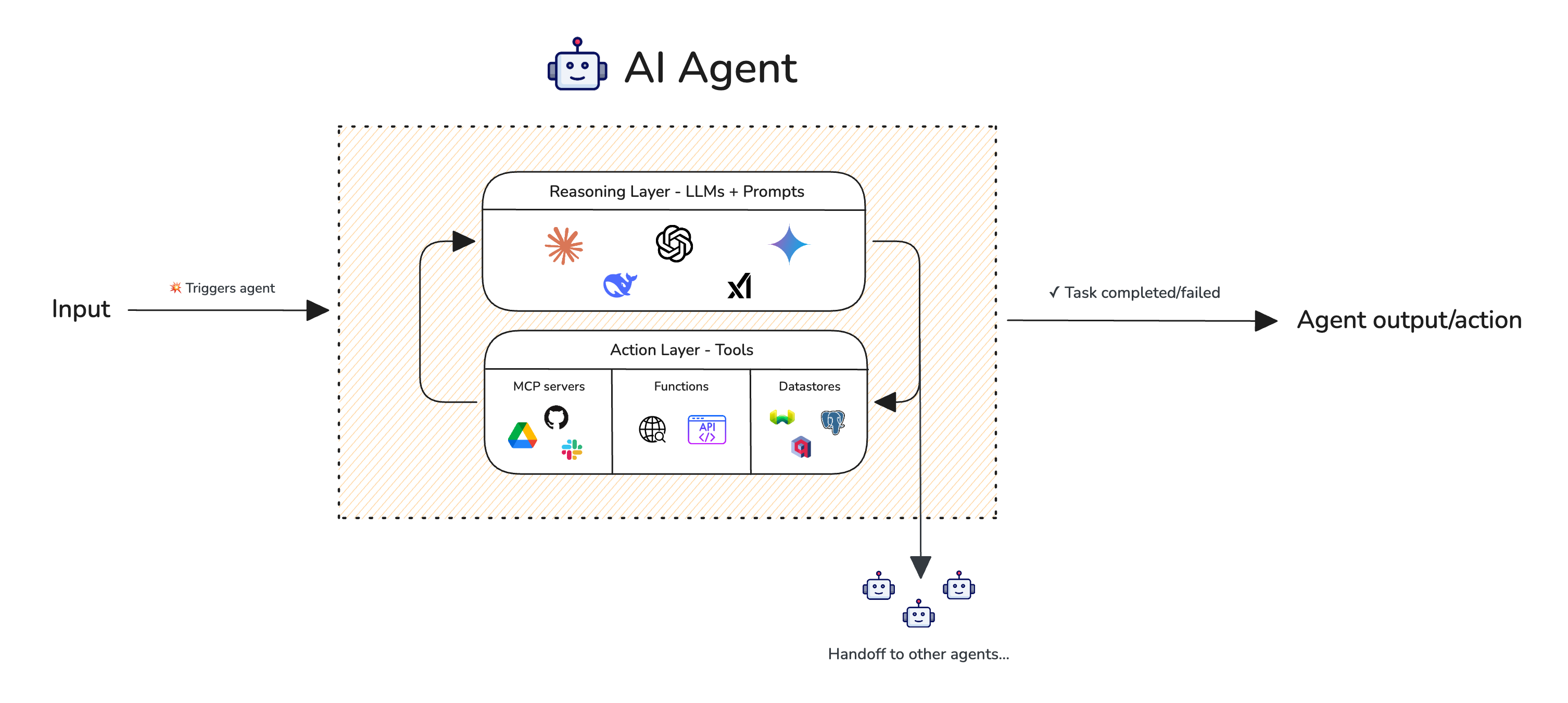

Sure, 2025 is the year of AI agents, but let’s face it: most agentic systems still have a RAG pipeline somewhere in their workflow, and it’s vital that you’re able to secure the quality of that too.

So, in this article, we’ll go through everything you’ll need for RAG evaluation:

What is RAG evaluation, how is it different from regular LLM and AI agent evaluation, and common points of failure

Retriever metrics such as contextual relevancy, recall, and precision

Generator metrics such as answer relevancy and faithfulness

How to run RAG evaluation: both end-to-end and at a component-level

Best practices, including RAG evaluation in CI pipelines and post-deployment monitoring

Of course, all of this includes code samples using DeepEval ⭐, an open-source LLM evaluation framework. Let’s get started.

TL;DR

RAG pipelines are made up of a retriever and a generator, both of which contribute to the quality of the final response.

RAG metrics measure either the retriever or the generator in isolation, focusing on relevancy, hallucination, and retrieval.

Retriever metrics include: Contextual recall, precision, and relevancy, used for evaluating things like top-K values and embedding models.

Generator metrics include: Faithfulness and answer relevancy, used for evaluating the LLM and prompt template.

RAG metrics are generic, and you'll want to add at least one custom metric tailored to your use case.

Agentic RAG requires additional metrics such as task completion.

DeepEval (100% open-source ⭐ https://github.com/confident-ai/deepeval) allows anyone to implement SOTA RAG metrics in 5 lines of code.

What is RAG Evaluation?

RAG evaluation is the process of using metrics such as answer relevancy, faithfulness, and contextual relevancy to test the quality of a RAG pipeline’s “retriever” and “generator” separately, so you can measure each component’s contribution to the final response quality.

To do this, RAG evaluation involves 5 key industry-standard metrics:

Answer Relevancy: How relevant the generated response is to the given input.

Faithfulness: Whether the generated response contains hallucinations, meaning claims that contradict or are not supported by the retrieval context.

Contextual Relevancy: How relevant the retrieval context is to the input.

Contextual Recall: Whether the retrieval context contains all the information required to produce the ideal output (for a given input).

Contextual Precision: Whether the retrieval context is ranked in the correct order (higher relevancy goes first) for a given input.
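To make the "higher relevancy goes first" idea behind contextual precision concrete, here is a toy sketch in plain Python. It assumes you already have a binary relevance label for each retrieved chunk, in ranked order (in practice an LLM judge would typically produce these labels), and it uses a weighted cumulative precision, which is one common rank-aware formulation and not necessarily the exact formula any given library uses. The function name `contextual_precision` is hypothetical.

```python
def contextual_precision(relevance: list[bool]) -> float:
    """Rank-aware precision over retrieved chunks.

    `relevance[i]` says whether the chunk at rank i + 1 is relevant.
    Relevant chunks that appear earlier contribute more to the score.
    Assumption: labels are given; this sketch does not judge relevance itself.
    """
    relevant_seen = 0
    weighted_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            relevant_seen += 1
            # Precision at this rank: relevant chunks so far / chunks so far.
            weighted_sum += relevant_seen / rank
    if relevant_seen == 0:
        return 0.0  # nothing relevant was retrieved at all
    return weighted_sum / relevant_seen


# Same two relevant chunks, different ordering.
good_ranking = contextual_precision([True, True, False])
bad_ranking = contextual_precision([False, True, True])
```

With these inputs, the ranking that puts both relevant chunks first scores higher than the one that buries them below an irrelevant chunk, even though the set of retrieved chunks is identical.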

For agentic RAG use cases, which we'll cover in a later section, you might also find it useful to include a task completion metric when evaluating your agent’s RAG pipeline.

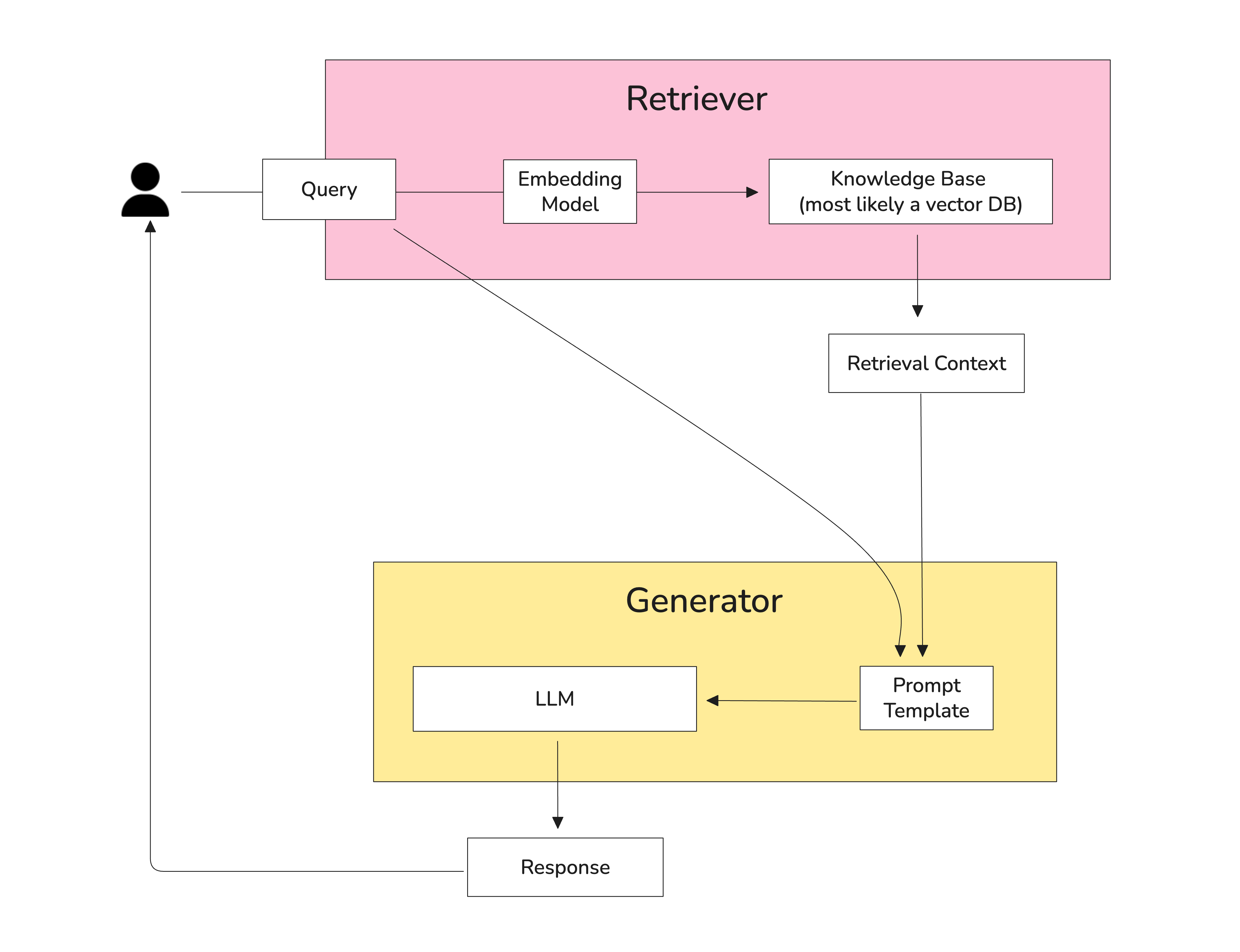

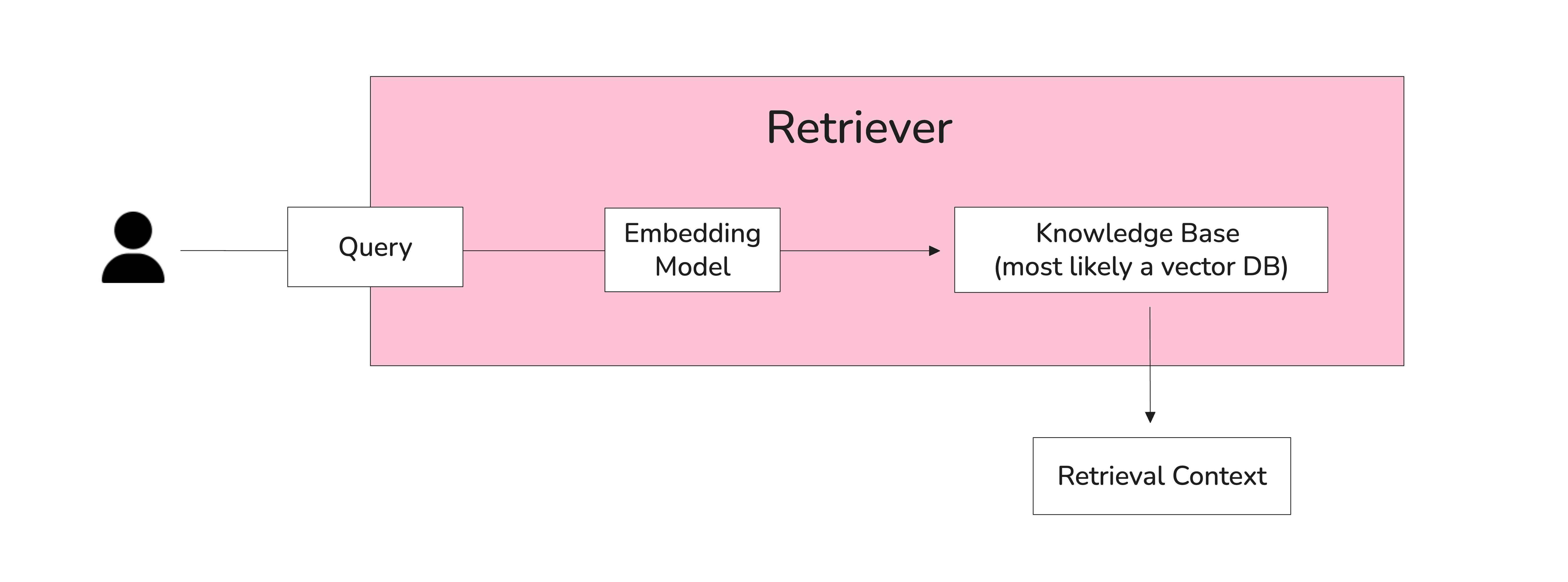

Before we get too deep into the metrics and RAG agents, though, let’s recap what RAG is. A RAG pipeline is an architecture where an LLM’s output is informed by external data that is retrieved at runtime based on an input. Rather than relying solely on the model’s trained knowledge, a RAG system first:

Searches a knowledge source — like a document database, vector store, or API, then

Feeds the retrieved content into the prompt for the LLM to generate a response.
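The two steps above can be sketched in a few lines of plain Python. This is a deliberately minimal illustration, not a production design: the word-overlap retriever stands in for an embedding model and vector store, the final LLM call is left out, and the names `retrieve` and `build_prompt` as well as the sample documents are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Step 1: rank documents by word overlap with the query, keep top_k.

    Assumption: a real retriever would use embeddings, not word overlap.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, retrieval_context: list[str]) -> str:
    """Step 2: place the retrieved chunks into the prompt for the LLM."""
    context_block = "\n".join(f"- {chunk}" for chunk in retrieval_context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


documents = [
    "The refund window is 30 days from purchase.",
    "Our office is closed on public holidays.",
    "Shipping takes 3 to 5 business days.",
]
query = "What is the refund window?"
retrieval_context = retrieve(query, documents, top_k=1)
prompt = build_prompt(query, retrieval_context)
```

The `retrieval_context` list is the piece that retriever metrics inspect, while the response an LLM would generate from `prompt` is what generator metrics inspect.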

Here’s a diagram showing how RAG works:

You’ll notice that the quality of the final generation is highly dependent on the retriever doing its job well. A RAG pipeline can only produce a helpful, factually correct response if it:

Has access to the right context.

Does not hallucinate, and follows instructions given the right context.

In fact, the quality of your RAG generation is only as strong as its weakest component — retriever or generator. Think of it as a product, not a sum: if either the retriever or the generator performs poorly (or fails entirely), the overall quality of the output can drop to zero, regardless of how well the other performs.
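As a rough numeric reading of the "product, not a sum" analogy, the sketch below compares multiplying two component scores with averaging them. The scores and both function names are made up for illustration; this is a way to picture the argument, not a formal metric.

```python
def product_quality(retriever_score: float, generator_score: float) -> float:
    """Combine component scores (each in [0, 1]) by multiplying them."""
    return retriever_score * generator_score


def average_quality(retriever_score: float, generator_score: float) -> float:
    """Combine component scores by averaging them, shown for contrast."""
    return (retriever_score + generator_score) / 2


# A strong generator paired with a retriever that returned nothing useful.
as_product = product_quality(retriever_score=0.0, generator_score=0.9)
as_average = average_quality(retriever_score=0.0, generator_score=0.9)
```

Under the product view the failed retriever drags the overall quality to zero, whereas the average would still report a middling score and hide the failure.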

So the question becomes: how do we evaluate both the retriever and the generator independently to understand where the failures are happening in a RAG pipeline?

Ways In Which Your RAG Pipeline Can Fail

You can address this by using retriever-targeted and generator-targeted metrics that evaluate each component separately, focusing on the most common failure modes within each stage of the pipeline.

When the retriever fails, it is often due to the following:

Uninformative embeddings (by your embedding model)

Poor chunking strategy (caused by chunk size, partitioning logic, etc.)

Weak reranking logic

Suboptimal Top-K setting

This forces the LLM to “fill in the blanks,” leading to hallucinations or confidently wrong answers. Even with good retrieval, the generator can still fail to use the context effectively:

Ignores key information

Focuses on the wrong details

Misreads the prompt structure

Suffers from weak prompts or model limitations
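One way to turn the two failure lists above into a triage step is to group per-metric scores by the component they point at. The sketch below assumes scores in the range 0 to 1 keyed by hypothetical metric names, and an arbitrary passing threshold of 0.5; none of these names or values come from a specific library.

```python
RETRIEVER_METRICS = (
    "contextual_relevancy",
    "contextual_recall",
    "contextual_precision",
)
GENERATOR_METRICS = ("answer_relevancy", "faithfulness")


def diagnose(scores: dict[str, float], threshold: float = 0.5) -> dict[str, list[str]]:
    """Return the failing metrics grouped by pipeline component.

    Metrics missing from `scores` are skipped rather than treated as failures.
    Assumption: the 0.5 threshold is illustrative and should be tuned per use case.
    """

    def failing(metric_names: tuple[str, ...]) -> list[str]:
        return [
            name
            for name in metric_names
            if name in scores and scores[name] < threshold
        ]

    return {
        "retriever": failing(RETRIEVER_METRICS),
        "generator": failing(GENERATOR_METRICS),
    }


example_scores = {
    "contextual_relevancy": 0.8,
    "contextual_recall": 0.3,
    "contextual_precision": 0.9,
    "answer_relevancy": 0.85,
    "faithfulness": 0.9,
}
report = diagnose(example_scores)
```

In this example only contextual recall falls below the threshold, which points at the retriever (for instance the Top-K setting or chunking strategy) rather than at the prompt or the model.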

Now with this in mind, it’s time to talk about how retrieval and generation metrics tackle each one of them.

Important Note: If your “RAG” pipeline involves “hard-coded” retrieval, where retrieval literally cannot go wrong (for example, fetching user data based on a user ID and supplying that information to your prompt), there is no need for RAG evaluation per se. You can just do regular LLM system evaluation or, even better, agent evaluation if the data is fetched through a tool call.
