LLM agents suck. I spent an entire week building a web-crawling agent, only to watch it crawl at a snail’s pace, repeat pointless function calls, and spiral into infinite reasoning loops. Eventually, I threw in the towel and scrapped it for a simple web-scraping script that took 30 minutes to code.

Alright, I’m not anti-LLM agents; I’m building an AI company, after all. That said, building an agent that’s efficient, reliable, and scalable is no easy task. The good news? Once you pinpoint and eliminate the bottlenecks, the automation upside is enormous. The key is knowing where and how to evaluate your agent effectively.

Over the past year, I’ve helped hundreds of companies stress-test their agents, curate benchmarks, and drive performance improvements. Today, I’ll walk you through everything you need to know to evaluate LLM agents effectively.

(Update: Part 2 on evaluating AI agents is here!)

TL;DR

LLM agent evaluation is different from evaluating regular LLM apps (think RAG pipelines or chatbots), since agents are built from complex, multi-component architectures.

The complex, multi-component nature of agents makes it necessary to evaluate not just the end-to-end system, but also each individual component.

Component-level evaluation lets you stop treating your agent as a mere black box and instead figure out where things go wrong (e.g. a faulty agent handoff).

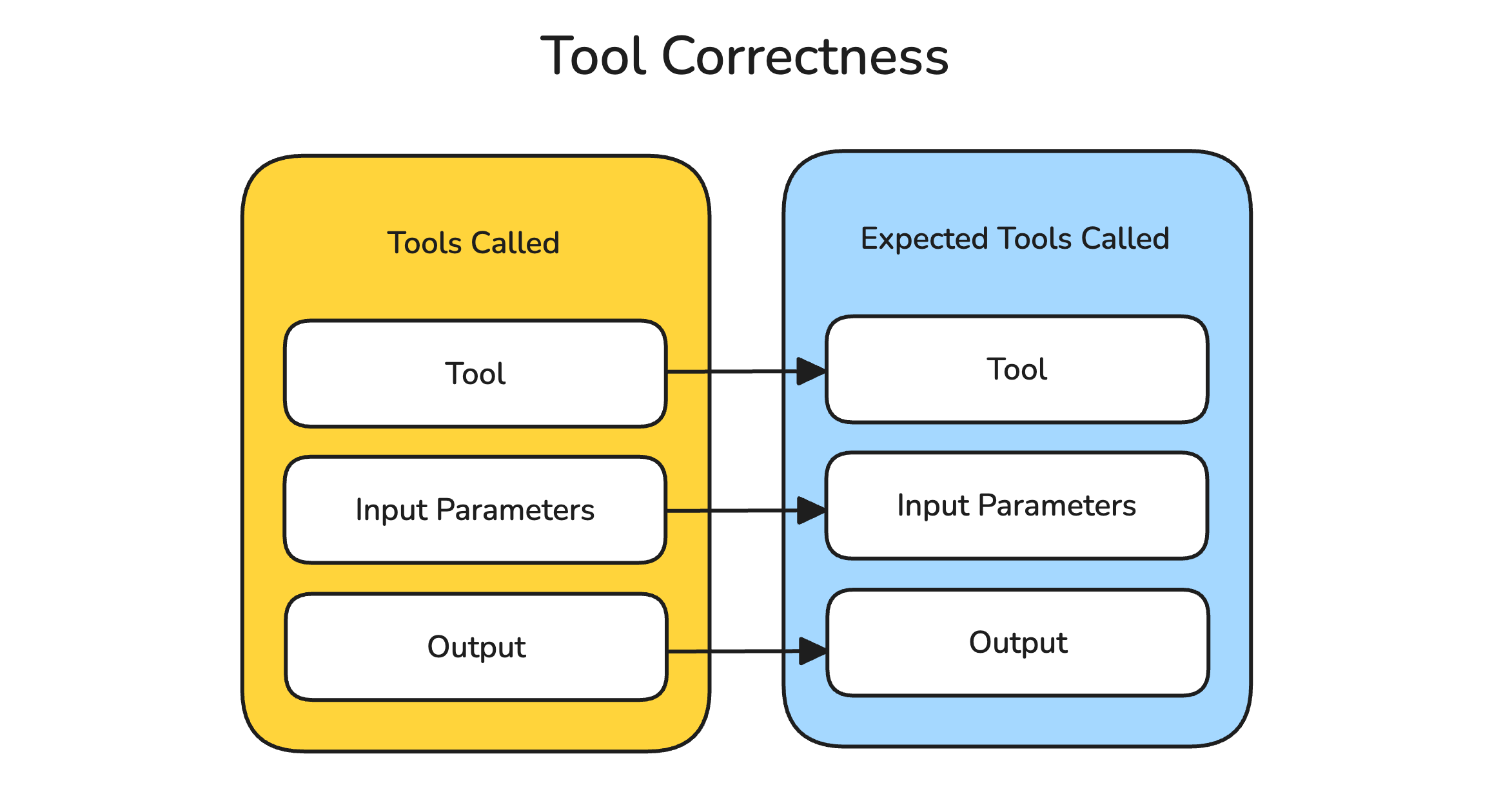

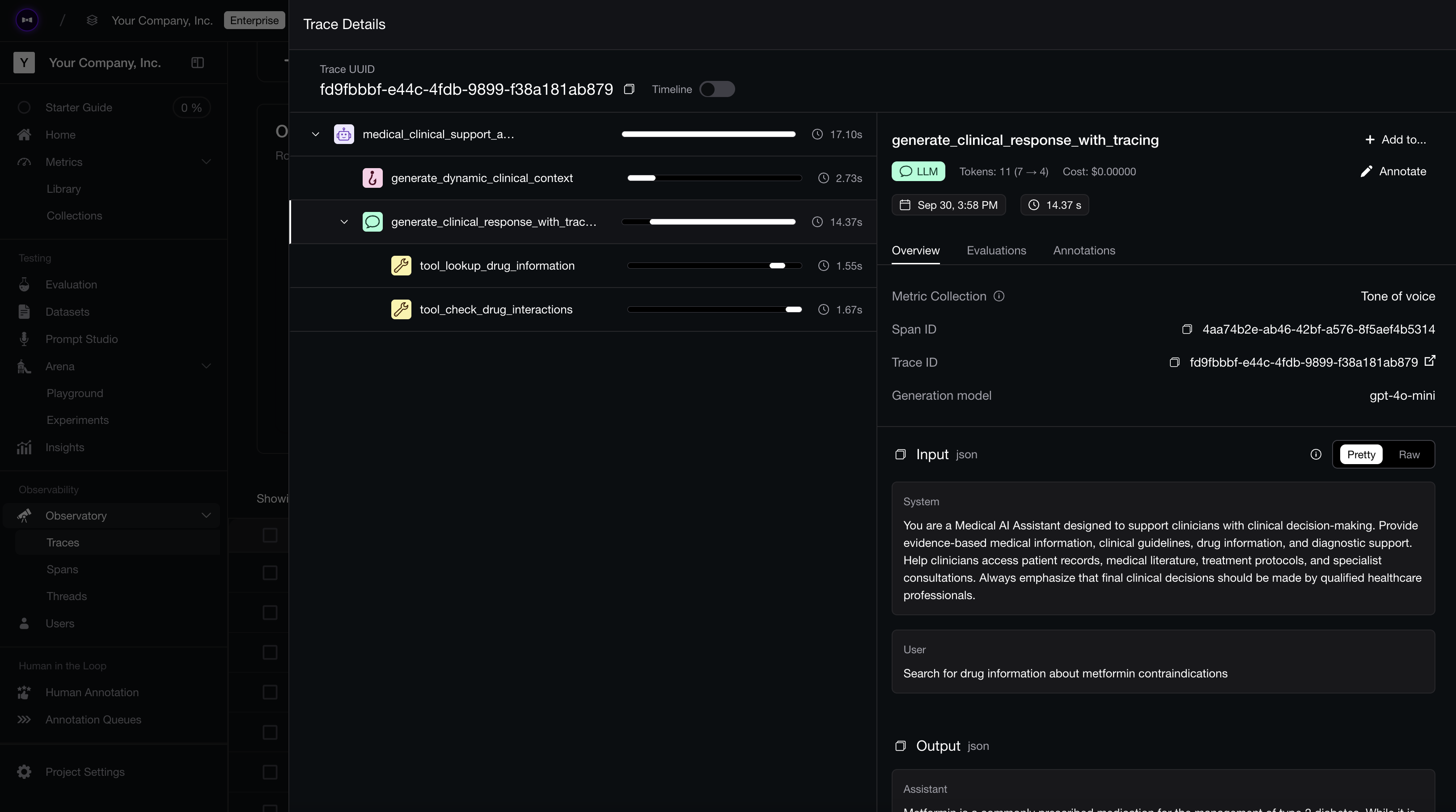

LLM evals for agents include tool correctness and task completion, both of which can be run on the LLM trace itself.

DeepEval (100% open source: https://github.com/confident-ai/deepeval) lets anyone implement LLM evals for agents, and trace and evaluate entire components in one go.

LLM Agent Evaluation vs LLM Evaluation

LLM agent evaluation is the process of assessing autonomous AI workflows on performance metrics such as task completion. This may sound similar to regular LLM evaluation, but it isn't.

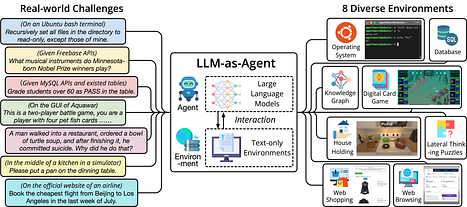

To understand how LLM agent evaluation differs from traditional LLM evaluation, it’s important to recognize what makes agents fundamentally different:

Architectural complexity: Agents are built from multiple components, often chained together in intricate workflows.

Tool usage: They can invoke external tools and APIs to complete tasks.

Autonomy: Agents operate with minimal human input, making dynamic decisions on what to do next.

Reasoning frameworks: They often rely on advanced planning or decision-making strategies to guide behavior.
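To make these four traits concrete, here is a minimal sketch of a ReAct-style loop (thought, action, observation) in Python. Everything in it is hypothetical: the `search` and `summarize` tools, the `Step` record, and `scripted_policy`, which stands in for the LLM call so the example runs without a model. The `max_steps` cap is one simple guard against runaway loops.

```python
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical tools the agent is allowed to invoke.
def search(query: str) -> str:
    return f"results for '{query}'"


def summarize(text: str) -> str:
    return text.upper()  # placeholder for a real summarizer


TOOLS: dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}


@dataclass
class Step:
    thought: str                        # the agent's intermediate reasoning
    tool: Optional[str]                 # which tool to call, or None to finish
    tool_input: Optional[str]           # argument passed to the tool
    observation: Optional[str] = None   # tool output, filled in after the call


def scripted_policy(task: str, history: list[Step]) -> Step:
    """Stand-in for an LLM: picks the next action from the task and history."""
    if not history:
        return Step("I need information first.", "search", task)
    if len(history) == 1:
        return Step("Condense what I found.", "summarize", history[-1].observation)
    return Step("I can answer now.", None, None)


def run_agent(task: str, policy: Callable[[str, list[Step]], Step], max_steps: int = 5):
    """Autonomous loop: reason, act, observe, until the policy stops or the budget runs out."""
    history: list[Step] = []
    for _ in range(max_steps):
        step = policy(task, history)
        if step.tool is None:  # the policy decided it is done
            final_answer = history[-1].observation if history else ""
            return final_answer, history
        step.observation = TOOLS[step.tool](step.tool_input)
        history.append(step)
    raise RuntimeError(f"agent did not finish within {max_steps} steps")


answer, trace = run_agent("best ramen in Tokyo", scripted_policy)
print(answer)                     # RESULTS FOR 'BEST RAMEN IN TOKYO'
print([s.tool for s in trace])    # ['search', 'summarize']
```

The returned `history` records every thought, tool call, and observation; that record is the kind of trace agent evaluations inspect.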

This complexity makes evaluation challenging, because it is no longer enough to evaluate only the end-to-end system, as is typically the case in RAG evaluation. An agent might:

Call tools in varying sequences with different inputs.

Invoke other sub-agents, each with its own set of goals.

Generate non-deterministic behaviors based on state, memory, or context.

These component-level interactions must not be neglected when performing agentic evaluations.
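One way to capture these interactions is to record each run as a tree of spans, where tool calls and sub-agent invocations become children of the span that triggered them. The sketch below assumes a hypothetical `Span` schema and a made-up travel-planner trace; a real tracer would record more fields, but the nesting is what makes component-level evaluation possible.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    """One unit of work in an agent run (hypothetical schema)."""
    name: str
    kind: str    # "agent", "tool", or "llm"
    input: str
    output: str
    children: list["Span"] = field(default_factory=list)


def walk(span: Span, depth: int = 0):
    """Yield (depth, span) pairs in execution order (pre-order traversal)."""
    yield depth, span
    for child in span.children:
        yield from walk(child, depth + 1)


# Hypothetical trace: a planner agent calls a tool, then hands off to a sub-agent.
trace = Span("travel_planner", "agent", "Plan a weekend in Rome", "3-day itinerary", [
    Span("search_flights", "tool", "NYC -> ROM", "3 flights found"),
    Span("hotel_agent", "agent", "Find a hotel near Termini", "Hotel A", [
        Span("search_hotels", "tool", "Termini", "12 hotels found"),
        Span("rank_hotels", "llm", "12 hotels found", "Hotel A"),
    ]),
])

# Print the call tree, indenting each level of nesting.
for depth, span in walk(trace):
    print("  " * depth + f"{span.kind}: {span.name}")
```

Because sub-agents and tool calls keep their place in the tree, an evaluator can score any span in isolation, not just the root.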

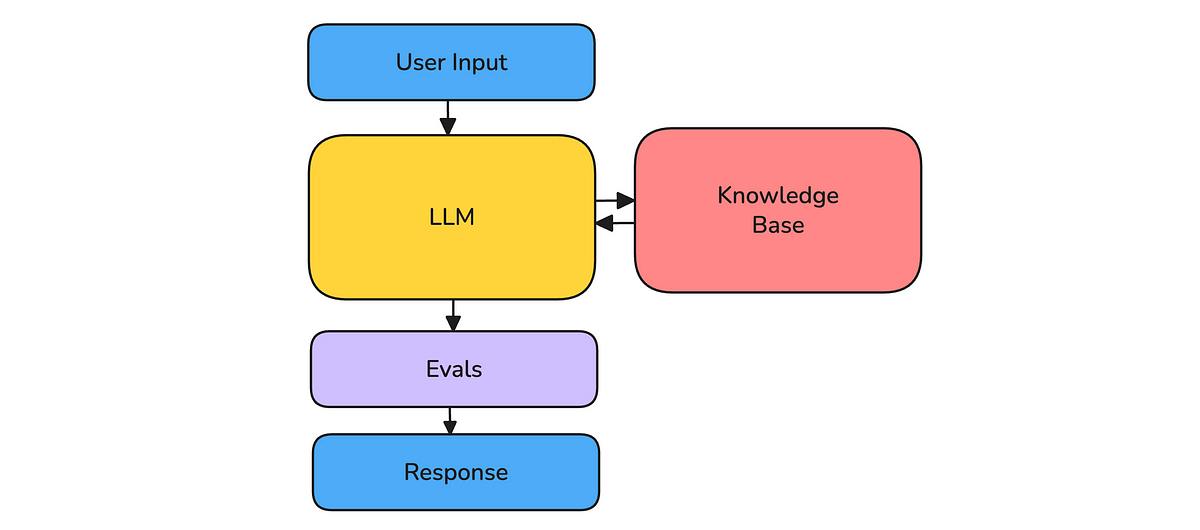

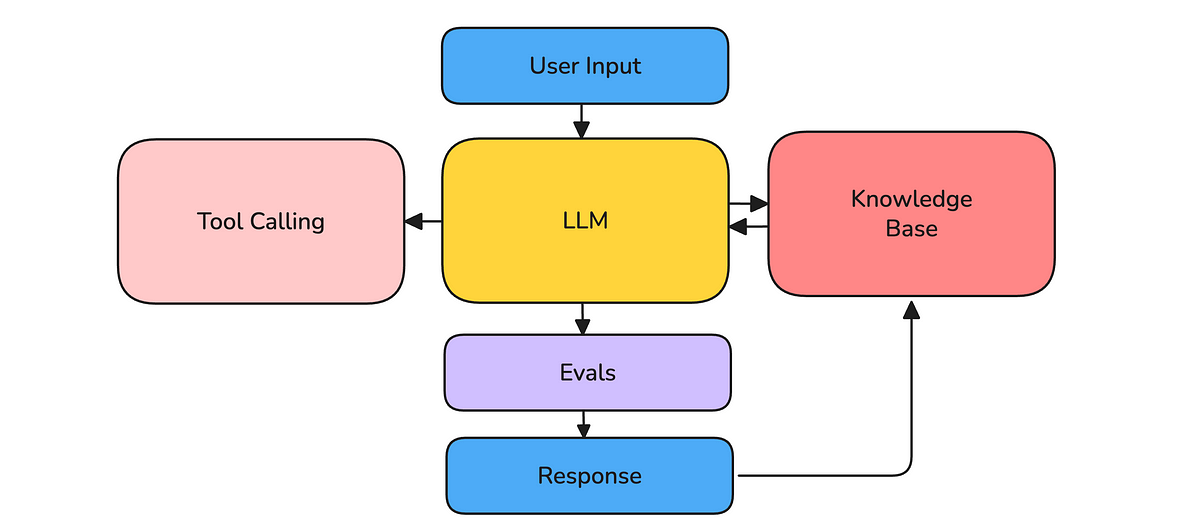

As a result, LLM agents are evaluated at two distinct levels:

End-to-end evaluation: Treats the entire system as a black box, focusing on whether the overall task was completed successfully given a specific input.

Component-level evaluation: Examines individual parts (like sub-agents, RAG pipelines, or API calls) to identify where failures or bottlenecks occur.
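The difference between the two levels can be sketched on a single recorded trace. In this hypothetical example, the planner hands its hotel sub-agent an empty request (a faulty handoff). The end-to-end check only sees that the final answer is wrong, while the component-level check names the span that broke. Both metrics are toy string checks chosen so the code runs on its own; real agent metrics are usually richer and often LLM-judged.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    input: str
    output: str
    children: list["Span"] = field(default_factory=list)


def end_to_end_eval(root: Span, expected_keyword: str) -> bool:
    """Black-box check: only the final output of the whole run is inspected."""
    return expected_keyword.lower() in root.output.lower()


def span_ok(span: Span) -> bool:
    """Toy component metric: the span received an input and did not report an error."""
    return bool(span.input.strip()) and not span.output.startswith("ERROR")


def component_eval(root: Span) -> list[str]:
    """White-box check: names of every span (root included) that fails span_ok."""
    failures = [] if span_ok(root) else [root.name]
    for child in root.children:
        failures.extend(component_eval(child))
    return failures


# Hypothetical run where the planner hands an empty request to its hotel sub-agent.
trace = Span("travel_planner", "Plan a weekend in Rome", "Sorry, I could not complete your trip.", [
    Span("search_flights", "NYC -> ROM", "3 flights found"),
    Span("hotel_agent", "", "ERROR: no destination given"),
])

print(end_to_end_eval(trace, "itinerary"))  # False: the task failed, but why?
print(component_eval(trace))                # ['hotel_agent']: the faulty handoff is localized
```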

This layered approach helps diagnose both surface-level and deep-rooted issues in agent performance. Before we dive deeper into evaluation, let's understand how an agent works.

Characteristics of LLM Agents

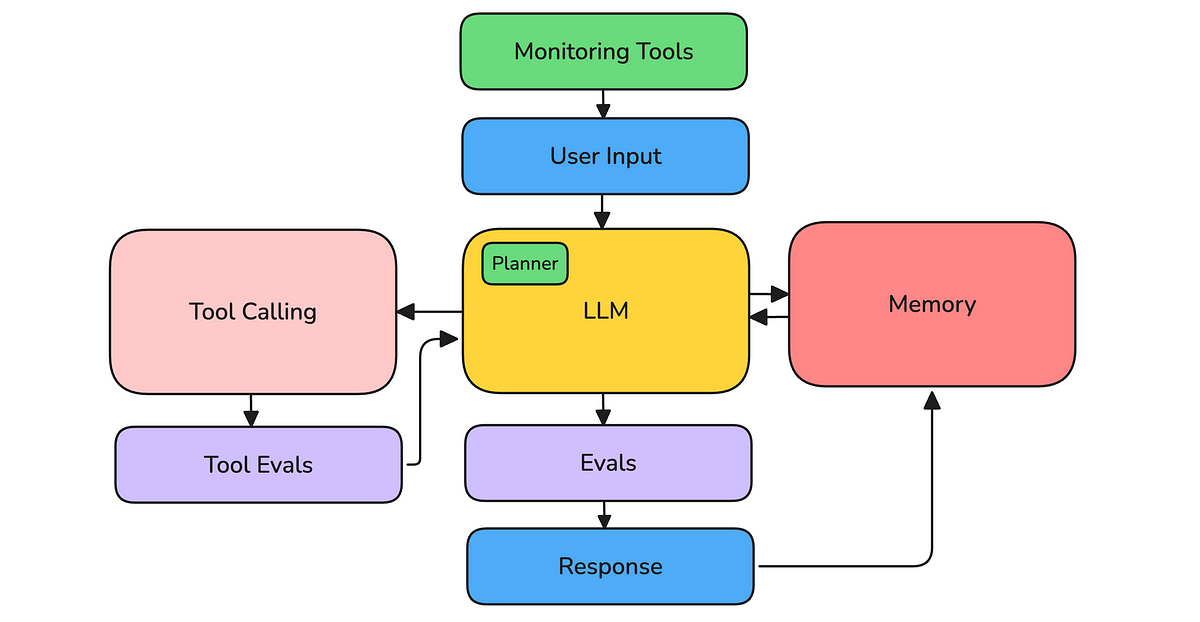

In the previous section, we briefly introduced the core characteristics of LLM agents: tool calling, autonomy, and reasoning. These traits give agents their unique capabilities and real-world reach, but are often themselves the source of errors.

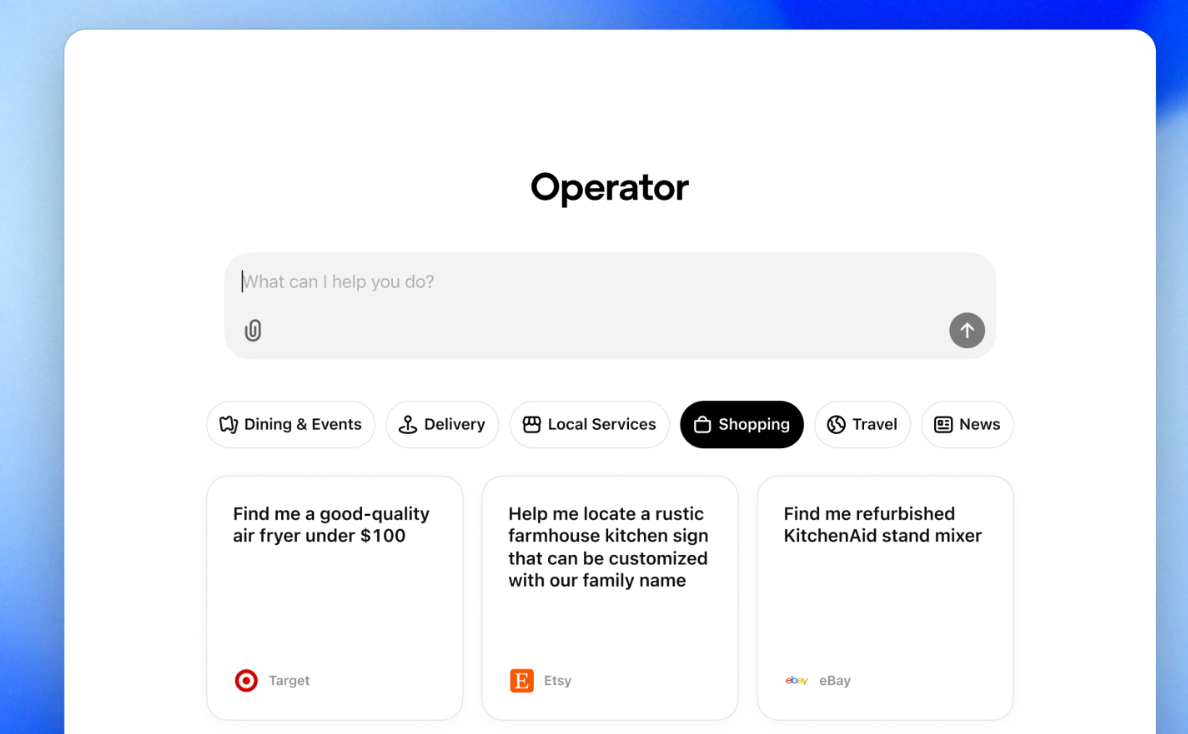

Tool invocation & API calls: agents can call external services — updating databases, booking restaurants, trading stocks, scraping websites — enabling real-world interaction. At the same time, mis-chosen tools, bad parameters, or unexpected outputs can derail the entire workflow.

High autonomy: agents plan and execute a sequence of steps (gathering information, invoking tools, then synthesizing a final answer) with a high level of autonomy. This multi-step process boosts capability but, much like exploding gradients, magnifies the impact of any individual mistake.

Intermediate reasoning: agents deliberate before taking action, using reasoning frameworks like ReAct to make more considered choices. However, flawed logic can lead to infinite loops or misdirected tool calls.
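Each of the three traits above suggests a simple check. The sketch below is hand-rolled, not DeepEval's API, and all names are hypothetical: `tool_correctness` scores whether the expected tools were called, `run_success_probability` shows how per-step errors compound under the strong assumption that steps fail independently, and `repeated_calls` flags identical tool calls repeated past a threshold, a cheap signal of a reasoning loop.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    """A recorded tool call; frozen (hashable) so identical calls can be counted."""
    name: str
    args: tuple[tuple[str, str], ...] = ()


def call(name: str, **kwargs: str) -> ToolCall:
    """Hypothetical helper: normalizes keyword args into a hashable, order-independent form."""
    return ToolCall(name, tuple(sorted(kwargs.items())))


def tool_correctness(called: list[ToolCall], expected: list[str]) -> float:
    """Tool invocation: share of expected tools called at least once (order ignored)."""
    if not expected:
        return 1.0
    called_names = {c.name for c in called}
    return sum(name in called_names for name in expected) / len(expected)


def run_success_probability(per_step: float, steps: int) -> float:
    """High autonomy: chance that every step succeeds, assuming independent failures."""
    return per_step ** steps


def repeated_calls(called: list[ToolCall], max_repeats: int = 2) -> list[ToolCall]:
    """Intermediate reasoning: identical calls (same tool, same args) made more than max_repeats times."""
    counts = Counter(called)
    return [c for c, n in counts.items() if n > max_repeats]


# Hypothetical crawler trace that keeps re-fetching the same page.
calls = [call("fetch_page", url="https://example.com")] * 3 + [
    call("extract_links", url="https://example.com")
]

print(tool_correctness(calls, ["fetch_page", "extract_links", "save_results"]))
print(round(run_success_probability(0.95, 10), 3))
print(repeated_calls(calls))
```

With 95% per-step reliability, a 10-step run succeeds only about 60% of the time (0.95^10 ≈ 0.599), which is the compounding effect the high-autonomy point describes. The crawler trace scores 2/3 on tool correctness (it never saves results) and is flagged for fetching the same page three times.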

These 3 characteristics set LLM agents apart, but not every agent invokes tools or engages in true reasoning. Agents operate at different autonomy levels depending on their purpose and use case: a basic chatbot isn’t the same as JARVIS. Defining these autonomy levels is crucial, since each tier demands its own evaluation criteria and techniques.
