AI Connections

AI Connections let you run evaluations directly on the platform by connecting to your AI app via an HTTPS endpoint. Instead of writing code, you can trigger evaluations with the click of a button: Confident AI calls your endpoint with data from your goldens and parses the response.

Setting Up an AI Connection

To create an AI connection:

- Navigate to Project Settings → AI Connections

- Click New AI Connection

- Give it a unique identifying name

- Click Save

Your AI connection won’t be usable yet—you still need to configure the endpoint, payload, and at minimum the actual output key path.

Configuration Parameters

Configure the following parameters to make your AI connection work.

Name

Give your AI connection a unique name to identify it within your project.

AI App Endpoint

Your AI app must be accessible via an HTTPS endpoint that accepts POST requests and returns a JSON response containing the actual output of your AI app.
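
The following stdlib-only Python server is a rough sketch of what such an endpoint involves. It rests on a few illustrative assumptions. The `input` field name and the `{"response": {"output": ...}}` response shape are choices made for this example, and the shape matches the key path example further down this page. `run_my_ai_app` is a hypothetical placeholder for your real app.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict


def run_my_ai_app(user_input: str) -> str:
    """Hypothetical stand-in for your real LLM app, agent, or RAG pipeline."""
    return f"Echo: {user_input}"


def handle_payload(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Map the incoming JSON payload to the JSON response your key paths point into."""
    # "input" is an assumed field name; use whatever your payload configuration defines.
    output = run_my_ai_app(payload["input"])
    return {"response": {"output": output}}


class AIAppHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            body, status = handle_payload(payload), 200
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON or a missing field: report it instead of crashing.
            body, status = {"error": str(exc)}, 400
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    # Plain HTTP for local development only. The AI connection needs an HTTPS URL,
    # so put this behind TLS (for example, a reverse proxy) before connecting it.
    HTTPServer(("0.0.0.0", 8000), AIAppHandler).serve_forever()
```

The request handling lives in `handle_payload`, separate from the HTTP plumbing. This keeps it easy to test, and you can reuse it under any web framework you already use.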

Payload

Configure the JSON payload that Confident AI sends to your endpoint. You can customize this to match your API’s expected structure using values from your goldens.

Available variables:

Example payload:

The custom payload feature lets you structure the request to match your existing API contract—no need to modify your AI app to accept a specific format.

Use golden.* variables for single-turn evaluations and conversationalGolden.* variables for multi-turn evaluations. See Prompts for details on how to use the prompts dictionary.

Headers

Add any headers required by your endpoint, such as API keys, authentication tokens, or content type specifications. These headers are sent with every request to your AI app.
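
On the receiving side, your endpoint should reject requests that lack the expected credentials. This is a minimal sketch that assumes you configured an `Authorization: Bearer <key>` header on the AI connection. The header scheme and the `is_authorized` helper are illustrative choices, not something Confident AI prescribes.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], expected_key: str) -> bool:
    """Return True only if the request carries 'Authorization: Bearer <expected_key>'."""
    # HTTP header names are case-insensitive, so normalize them before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    scheme, _, token = normalized.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token or not expected_key:
        return False
    # Constant-time comparison avoids leaking the key through timing differences.
    return hmac.compare_digest(token.encode("utf-8"), expected_key.encode("utf-8"))
```

In a `BaseHTTPRequestHandler`, you could call `is_authorized(dict(self.headers.items()), os.environ["MY_AI_APP_API_KEY"])` at the top of `do_POST` and return 401 when it fails. `MY_AI_APP_API_KEY` is a hypothetical environment variable holding the same secret you entered in the connection's headers.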

Prompts

Associate prompt versions with your AI connection. When running evaluations, these prompts will be attributed to each test run, letting you trace results back to the prompts used.

The prompts variable in your payload is a dictionary where each key maps to an object containing alias and version:
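
For illustration, a `prompts` value might look like this once your endpoint has parsed the JSON into Python. The outer keys, aliases, and version strings are hypothetical placeholders. Only the `alias`/`version` structure comes from the description above.

```python
# Hypothetical prompts dictionary after JSON parsing; all names and values are placeholders.
prompts = {
    "system": {"alias": "customer-support-system", "version": "00.00.01"},
    "summarizer": {"alias": "ticket-summarizer", "version": "00.00.03"},
}

# Each entry points at one prompt version by alias and version.
for name, ref in prompts.items():
    print(f"{name}: {ref['alias']} @ {ref['version']}")
```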

Here’s an example of how your Python endpoint might handle the prompts dictionary:
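
The following is a framework-agnostic sketch. `resolve_prompts` walks the prompts dictionary and looks up each entry through `fetch_prompt`, which is a hypothetical callable you would back with your prompt-management client (see Prompt Versioning). The sketch also assumes the dictionary arrives under a top-level `prompts` key, but that depends on how you configured the payload.

```python
from typing import Any, Callable, Dict

# Hypothetical signature: (alias, version) -> prompt template text.
PromptFetcher = Callable[[str, str], str]


def resolve_prompts(payload: Dict[str, Any], fetch_prompt: PromptFetcher) -> Dict[str, str]:
    """Map each prompt name in payload["prompts"] to the template it points at."""
    # Assumes the dictionary sits under a "prompts" key; a missing key means no prompts.
    prompts = payload.get("prompts") or {}
    return {
        name: fetch_prompt(ref["alias"], ref["version"])
        for name, ref in prompts.items()
    }


if __name__ == "__main__":
    # In-memory stand-in for a real prompt store, keyed by (alias, version).
    store = {("customer-support-system", "00.00.01"): "You are a helpful support agent."}
    payload = {
        "input": "Where is my order?",
        "prompts": {"system": {"alias": "customer-support-system", "version": "00.00.01"}},
    }
    templates = resolve_prompts(payload, lambda alias, version: store[(alias, version)])
    print(templates["system"])
```

Because the endpoint renders whatever version the payload names, a test run evaluates the exact prompt versions attributed to it, not whatever happens to be latest.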

For more details on working with prompts, see Prompt Versioning.

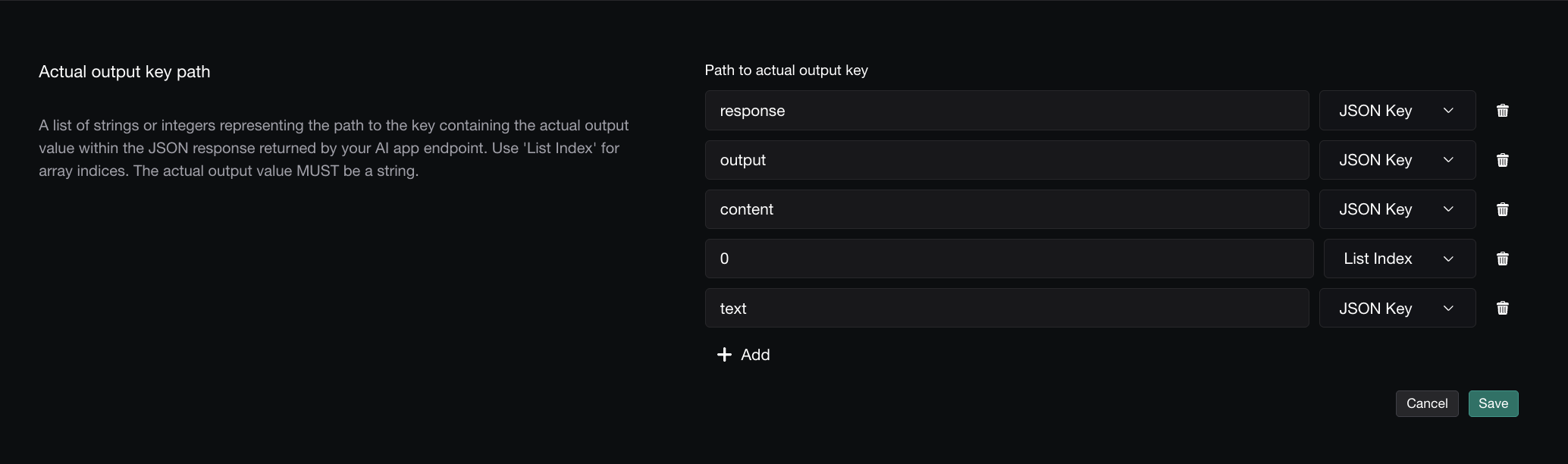

Actual Output Key Path

A list of strings or integers representing the path to the actual_output value in your JSON response. Use strings for JSON keys and integers for array indices. This is required for evaluation to work.
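
You can sketch the key path semantics in a few lines of Python. `resolve_key_path` is a hypothetical helper written for illustration. It follows the rules above (strings for keys, integers for indices) but isn't Confident AI's own code, and its error handling is an assumption.

```python
from typing import Any, Sequence, Union

KeyPath = Sequence[Union[str, int]]


def resolve_key_path(data: Any, key_path: KeyPath) -> Any:
    """Follow key_path through parsed JSON: strings index objects, ints index arrays."""
    current = data
    for step in key_path:
        if isinstance(step, str) and isinstance(current, dict):
            current = current[step]  # KeyError if the key is missing
        # bool is a subclass of int in Python, so rule it out explicitly.
        elif isinstance(step, int) and not isinstance(step, bool) and isinstance(current, list):
            current = current[step]  # IndexError if the index is out of range
        else:
            raise TypeError(f"cannot apply {step!r} to a JSON {type(current).__name__}")
    return current


response = {"response": {"output": {"content": [{"text": "Hello!"}]}}}
print(resolve_key_path(response, ["response", "output", "content", 0, "text"]))  # Hello!
```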

For example, if your endpoint returns:
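
Consider a body like the following, shown as the Python dict your endpoint would serialize with `json.dumps`. The output text is a placeholder:

```python
import json

# Hypothetical response body; only its shape matters for the key path.
response = {
    "response": {
        "output": "Paris is the capital of France."
    }
}

print(json.dumps(response))
```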

Set the key path to ["response", "output"].

For nested arrays, use integers to specify the array index. For example, if your endpoint returns:
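
Consider a body like the following, again as a Python dict with placeholder text. The `0` in the key path selects the first element of the `content` array:

```python
import json

# Hypothetical response body with an array nested under "content".
response = {
    "response": {
        "output": {
            "content": [
                {"text": "Paris is the capital of France."}
            ]
        }
    }
}

print(json.dumps(response))
```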

Set the key path to ["response", "output", "content", 0, "text"].

Retrieval Context Key Path

A list of strings or integers representing the path to the retrieval_context value in your JSON response. Use strings for JSON keys and integers for array indices. This is optional and only needed if you’re using RAG metrics. The value must be a list of strings.

For example, if your endpoint returns:
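
Consider a body like the following, shown as a Python dict. The answer and the retrieved chunks are placeholders. What matters is that `retrieval_context` is a list of strings:

```python
import json

# Hypothetical RAG response: the answer plus the chunks it was grounded on.
response = {
    "response": {
        "output": "Refunds are available within 30 days of purchase.",
        "retrieval_context": [
            "Customers may request a refund within 30 days of purchase.",
            "Refunds are issued to the original payment method.",
        ],
    }
}

print(json.dumps(response))
```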

Set the key path to ["response", "retrieval_context"].

Tool Call Key Path

A list of strings or integers representing the path to the tools_called value in your JSON response. Use strings for JSON keys and integers for array indices. This is optional and only needed if you’re using metrics that require a tool call parameter. The value must be a list of ToolCall objects.

For example, if your endpoint returns:
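
Consider a body like the following, shown as a Python dict. The tool-call fields (`name`, `input_parameters`, `output`) are an assumption modeled on DeepEval's `ToolCall`. Confirm the exact JSON shape in the DeepEval documentation before relying on it. The tool and its values are placeholders:

```python
import json

# Hypothetical agent response; tool-call field names are assumed, not confirmed.
response = {
    "response": {
        "output": "It is 18 degrees and sunny in Paris.",
        "tools_called": [
            {
                "name": "get_weather",
                "input_parameters": {"city": "Paris"},
                "output": {"temperature_c": 18, "condition": "sunny"},
            }
        ],
    }
}

print(json.dumps(response))
```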

Set the key path to ["response", "tools_called"].

For more information on the structure of a tool call, refer to the official DeepEval documentation.

Request Timeout

Set the maximum time (in seconds) that Confident AI will wait for your endpoint to respond before timing out. This helps prevent evaluations from hanging indefinitely if your AI app is slow or unresponsive.

- Minimum: 1 second

- Default: 60 seconds

If your AI app performs complex operations or calls external services, you may need to increase the timeout to avoid premature failures.

Max Concurrency

Set the maximum number of requests that Confident AI will send to your endpoint concurrently. This helps prevent overwhelming your AI app during large evaluation runs.

- Minimum: 1

- Default: 20
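
You can picture the effect of this limit with an asyncio semaphore. However many goldens are queued, at most `max_concurrency` requests are in flight at once. This sketch illustrates those semantics. It is not Confident AI's implementation, and `run_with_max_concurrency` is a hypothetical name.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def run_with_max_concurrency(
    jobs: List[Callable[[], Awaitable[T]]], max_concurrency: int = 20
) -> List[T]:
    """Run all jobs, keeping at most max_concurrency of them in flight at once."""
    if max_concurrency < 1:
        raise ValueError("max_concurrency must be at least 1")
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(job: Callable[[], Awaitable[T]]) -> T:
        async with semaphore:  # waits while max_concurrency jobs are already running
            return await job()

    # gather returns results in the same order as the jobs list.
    return list(await asyncio.gather(*(guarded(job) for job in jobs)))
```

If your AI app can only serve a handful of requests at once (for example, because of a model provider's rate limit), set Max Concurrency at or below that number.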

Max Retries

Set the maximum number of times Confident AI will retry a failed request to your endpoint. This helps handle transient errors without failing the entire evaluation.

- Minimum: 0

- Default: 0
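
Request Timeout and Max Retries combine as follows under one reasonable reading of these settings. Each attempt is bounded by the timeout, and a request gets at most 1 + Max Retries attempts in total, so the default of 0 means a failed request is not retried. This page doesn't specify which failures count as retryable or whether there is a delay between attempts. The `retry_on` tuple and the absence of backoff are therefore assumptions, and `call_with_retries` is a hypothetical name.

```python
import asyncio
from typing import Awaitable, Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


async def call_with_retries(
    call: Callable[[], Awaitable[T]],
    timeout_s: float = 60.0,
    max_retries: int = 0,
    retry_on: Tuple[Type[BaseException], ...] = (asyncio.TimeoutError, ConnectionError),
) -> T:
    """Try once, then retry up to max_retries times; each attempt gets timeout_s seconds."""
    if timeout_s <= 0 or max_retries < 0:
        raise ValueError("need timeout_s > 0 and max_retries >= 0")
    last_error: Optional[BaseException] = None
    for _ in range(1 + max_retries):  # 1 initial attempt + max_retries retries
        try:
            return await asyncio.wait_for(call(), timeout=timeout_s)
        except retry_on as exc:
            last_error = exc  # assumed transient: try again if attempts remain
    # The loop always runs at least once, so last_error is set here.
    raise last_error
```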

Linking Test Cases to Traces

When running evaluations through an AI Connection, you can link each test case to its corresponding trace for full observability. This is done by including testCaseId in your payload (enabled by default) and passing it to your tracing setup.

Include testCaseId in your payload configuration and make sure your endpoint accepts it.

Then, pass the testCaseId to your tracing implementation so that each trace is associated with the test case that produced it.

Once linked, you can view the full trace for each test case directly from the evaluation results, making it easy to debug failures and understand model behavior.

Testing Your Connection

After configuring your AI connection, click Ping Endpoint to verify everything is set up correctly. You should receive a 200 status response. If not, check the error message and adjust your configuration accordingly.
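
You may also want to exercise the endpoint yourself, for example while developing locally. This is a minimal sketch using only Python's standard library. `smoke_test` is a hypothetical helper. Its sample payload, headers, and key path should mirror your AI connection's settings, and nothing here implies that Ping Endpoint sends the same request.

```python
import json
import urllib.request
from typing import Any, Dict, Optional, Sequence, Union


def smoke_test(
    url: str,
    payload: Dict[str, Any],
    key_path: Sequence[Union[str, int]],
    headers: Optional[Dict[str, str]] = None,
    timeout_s: float = 60.0,
) -> Any:
    """POST a sample payload and return the value at key_path; raise if anything is off."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", **(headers or {})},
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        if resp.status != 200:
            raise RuntimeError(f"expected HTTP 200, got {resp.status}")
        body = json.loads(resp.read())
    value = body
    for step in key_path:  # strings index JSON objects, integers index JSON arrays
        value = value[step]
    return value
```

A `KeyError` or `IndexError` from the key path loop usually means the key path doesn't match what your endpoint actually returns.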