When you imagine what a good summary for a 10-page research paper looks like, you likely picture a concise, comprehensive overview that accurately captures all key findings and data from the original work, presented in a clear and easily understandable format.

This might sound extremely obvious to us (I mean, who doesn’t know what a good summary looks like?), yet for large language models (LLMs) like GPT-4, grasping this simple concept to accurately and reliably evaluate a text summarization task remains a significant challenge.

In this article, I’m going to share how we built our own bullet-proof LLM-Evals (metrics evaluated using LLMs) to evaluate a text-summarization task. In summary (no pun intended), it involves asking closed-ended questions to:

Identify misalignment in factuality between the original text and summary.

Identify exclusion of details in the summary from the original text.

Existing Problems with Text Summarization Metrics

Traditional, non-LLM Evals

Historically, scorers such as ROUGE (based on n-gram overlap) and BERTScore (based on embeddings from a BERT model) have been used to evaluate the quality of text summaries. As I outlined in a previous article, these metrics, while useful, often focus on surface-level features like word overlap and semantic similarity.

Word Overlap Metrics: Metrics like ROUGE (Recall-Oriented Understudy for Gisting Evaluation) compare the overlap of words or phrases (n-grams) between the generated summary and a reference summary. Because they count shared n-grams, these scores are sensitive to length: a longer generated summary has more chances to overlap with the reference, which can raise its score without making it a better summary.
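To make the length effect concrete, here is a minimal sketch of ROUGE-1 recall (clipped unigram overlap divided by the number of reference unigrams). It is a simplification under stated assumptions: whitespace tokenization, no stemming, and recall only, whereas real ROUGE implementations also report precision and F-measure and include variants such as ROUGE-2 and ROUGE-L. The function name `rouge1_recall` and the example sentences are hypothetical.

```python
from collections import Counter

def rouge1_recall(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 recall: clipped unigram overlap / reference unigram count."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Each reference word is credited at most as many times as it appears in the candidate.
    overlap = sum(min(count, cand[word]) for word, count in ref.items())
    return overlap / max(sum(ref.values()), 1)

reference = "the drug reduced symptoms in most patients"
accurate_short = "the drug reduced symptoms"
# Longer and factually wrong, yet it reuses every reference word.
padded_wrong = "the drug was tested in most patients and reduced nothing while symptoms stayed the same"

print(rouge1_recall(accurate_short, reference))  # 4/7, about 0.571
print(rouge1_recall(padded_wrong, reference))    # 1.0
```

The longer summary contradicts the reference ("reduced nothing") but still earns a perfect recall score, because the metric only counts shared words.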

Semantic Similarity Metrics: Tools like BERTScore evaluate the semantic similarity between the generated summary and the reference. Longer summaries might cover more content from the reference text, which could result in a higher similarity score, even if the summary isn't better in terms of quality or conciseness.
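The sketch below imitates BERTScore's greedy matching: every token is paired with its most similar token on the other side by cosine similarity, and the averages give precision, recall, and F1. It rests on a loud assumption: the two-dimensional vectors in `EMB` are made up for illustration, whereas real BERTScore uses contextual BERT embeddings and can optionally apply idf weighting and baseline rescaling. `greedy_match` and `EMB` are hypothetical names.

```python
import math
from typing import Dict, List, Tuple

Vec = Tuple[float, float]

# Made-up 2-D token vectors standing in for contextual BERT embeddings (assumption).
EMB: Dict[str, Vec] = {
    "drug": (1.0, 0.0),
    "medication": (0.95, 0.31),
    "helped": (0.0, 1.0),
    "improved": (0.2, 0.98),
    "patients": (0.7, 0.7),
}

def cosine(u: Vec, v: Vec) -> float:
    dot = u[0] * v[0] + u[1] * v[1]
    return dot / (math.hypot(*u) * math.hypot(*v))

def greedy_match(candidate: List[str], reference: List[str]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) using BERTScore-style greedy matching."""
    cand = [EMB[t] for t in candidate]
    ref = [EMB[t] for t in reference]
    # Recall: each reference token takes its best match among the candidate tokens.
    recall = sum(max(cosine(r, c) for c in cand) for r in ref) / len(ref)
    # Precision: each candidate token takes its best match among the reference tokens.
    precision = sum(max(cosine(c, r) for r in ref) for c in cand) / len(cand)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

reference = ["drug", "helped", "patients"]
# A paraphrase sharing only one word with the reference still scores highly.
print(greedy_match(["medication", "improved", "patients"], reference))
# Covering more of the reference raises recall, regardless of conciseness.
print(greedy_match(["drug"], reference)[1], greedy_match(["drug", "helped"], reference)[1])
```

Because recall rewards every reference token that finds a close match, a summary that simply covers more of the reference tends to score higher, which is the length effect described above.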

Moreover, these metrics struggle especially when the original text is composed of concatenated text chunks, which is often the case in retrieval-augmented generation (RAG) summarization use cases. Because the combined text contains disjointed pieces of information, surface-level comparisons cannot tell whether a summary has correctly captured each chunk.

LLM-Evals

In one of my previous articles, I introduced G-Eval, an LLM-Evals framework that can be used for a summarization task. It usually involves providing the original text and the summary to an LLM like GPT-4 and asking it to generate a score along with a reason for its evaluation. However, although it improves on traditional approaches, evaluating text summarization with LLMs presents its own set of challenges:

Arbitrariness: LLM Chains of Thought (CoTs) are often arbitrary, which is particularly noticeable when the models omit details that humans would typically consider essential to include in the summary.

Bias: LLMs often overlook factual inconsistencies between the summary and original text as they tend to prioritize summaries that reflect the style and content present in their training data.

In a nutshell, arbitrariness causes LLM-Evals to overlook the exclusion of essential details (or at least hinders their ability to identify what should be considered essential), while bias causes LLM-Evals to overlook factual inconsistencies between the original text and the summary.

LLM-Evals can be Engineered to Overcome Arbitrariness and Bias

Unsurprisingly, while developing our own summarization metric at Confident AI, we ran into all the problems I mentioned above. However, we came across a paper that introduced the Question-Answer Generation (QAG) framework, which was instrumental in overcoming bias and arbitrariness in LLM-Evals.

Question-Answer Generation

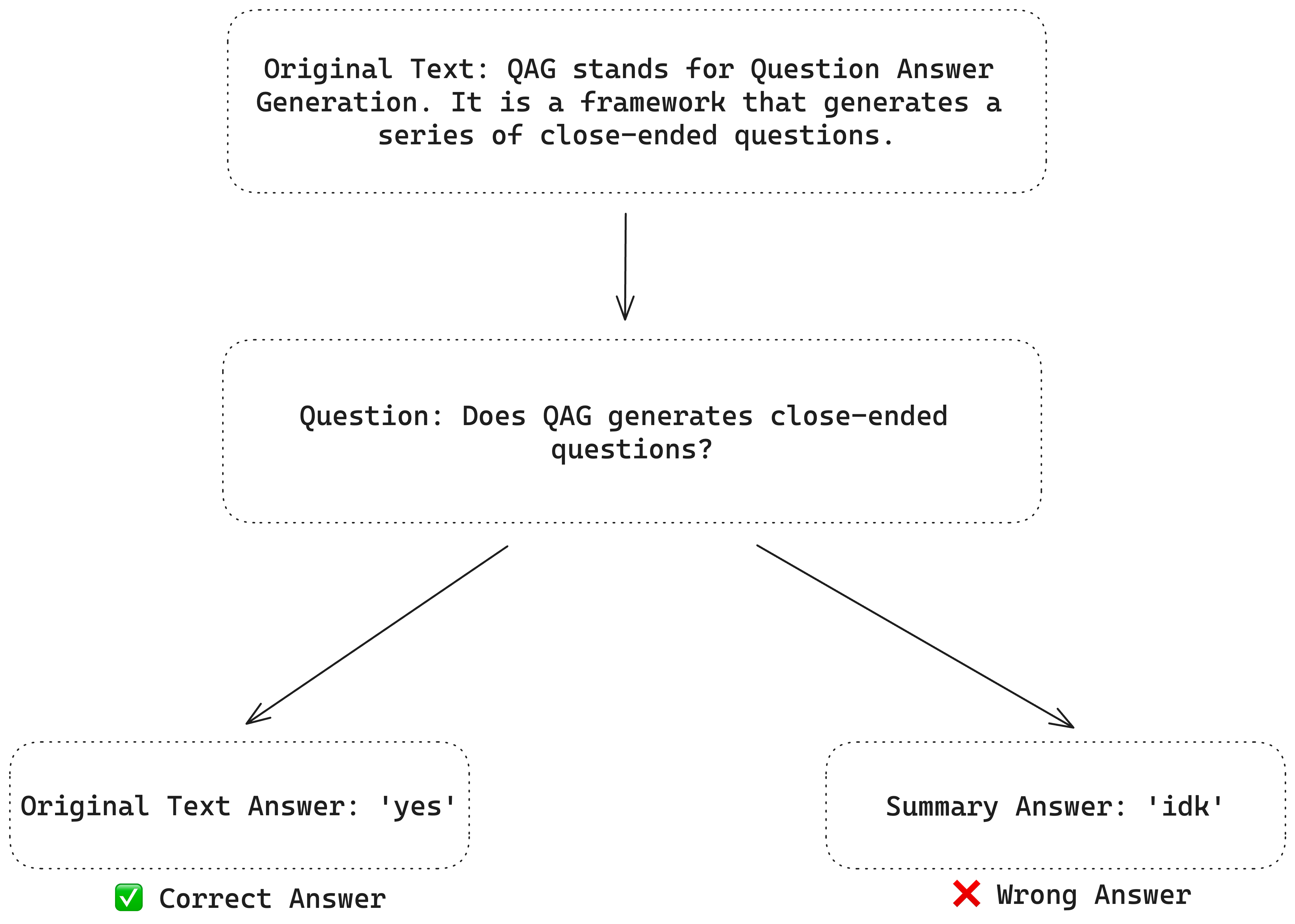

The Question-Answer Generation (QAG) framework is a process where closed-ended questions are generated based on some text (which in our case is either the original text or the summary), before asking a language model (LM) to answer them based on some reference text.
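The two QAG steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation behind our metric: `LLM` is a hypothetical callable that takes a prompt and returns the model's reply, the prompts are illustrative, and `fake_llm` is a canned stand-in so the sketch runs offline. The key detail is that answers are forced into the closed set `yes` / `no` / `idk`, and anything else is treated as `idk`.

```python
from typing import Callable, List

# Hypothetical LLM interface (assumption): takes a prompt, returns the model's reply text.
LLM = Callable[[str], str]

VERDICTS = {"yes", "no", "idk"}

def generate_questions(llm: LLM, text: str, n: int = 5) -> List[str]:
    """Step 1: ask the LLM for closed-ended questions answerable from `text`."""
    prompt = (f"Write {n} closed-ended (yes/no) questions that can be answered "
              f"from the text below, one per line.\n\nText:\n{text}")
    return [line.strip() for line in llm(prompt).splitlines() if line.strip()]

def answer(llm: LLM, question: str, reference: str) -> str:
    """Step 2: answer one question against `reference`, forcing a closed-set reply."""
    prompt = ("Using only the reference text, reply with exactly 'yes', 'no', or 'idk'.\n\n"
              f"Reference:\n{reference}\n\nQuestion: {question}")
    reply = llm(prompt).strip().lower()
    return reply if reply in VERDICTS else "idk"  # free-form output is not trusted

def qag_score(llm: LLM, question_source: str, reference: str, n: int = 5) -> float:
    """Fraction of questions generated from `question_source` that `reference` answers 'yes'."""
    questions = generate_questions(llm, question_source, n)
    if not questions:
        return 0.0
    verdicts = [answer(llm, q, reference) for q in questions]
    return verdicts.count("yes") / len(verdicts)

def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model so the sketch runs offline."""
    if prompt.startswith("Write"):
        return "Is the sky blue?\nIs grass red?"
    return "yes" if "sky" in prompt.split("Question:")[-1] else "no"

print(qag_score(fake_llm, "summary text", "original text"))  # 0.5
```

One plausible wiring, which is an assumption rather than a description of the metric itself: `qag_score(llm, summary, original)` checks factual alignment, since questions come from the summary and are verified against the original, while `qag_score(llm, original, summary)` checks for details missing from the summary.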

Let’s take this text for example:

The ‘coverage score’ is calculated as the percentage of assessment questions for which both the summary and the original document provide a ‘yes’ answer. This method ensures that the summary not only includes key information from the original text but also accurately represents it. A higher coverage score indicates a more comprehensive and faithful summary, signifying that the summary effectively encapsulates the crucial points and details from the original content.

A sample question according to QAG would be:

Is the ‘coverage score’ the percentage of assessment questions for which both the summary and the original document provide a ‘yes’ answer?

To which the answer would be ‘yes’.
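The example passage itself describes a metric that is straightforward to compute. Here is a minimal sketch, assuming each assessment question has already been answered against both the original document and the summary (for instance with a QAG-style answering step). `coverage_score` is a hypothetical name and the answer lists are made up for illustration.

```python
from typing import List

def coverage_score(original_answers: List[str], summary_answers: List[str]) -> float:
    """Percentage of assessment questions answered 'yes' by both the original and the summary."""
    if len(original_answers) != len(summary_answers):
        raise ValueError("need one answer per question from each text")
    if not original_answers:
        return 0.0
    both_yes = sum(1 for o, s in zip(original_answers, summary_answers)
                   if o == "yes" and s == "yes")
    return 100.0 * both_yes / len(original_answers)

# Hypothetical answers to four assessment questions.
original = ["yes", "yes", "yes", "no"]
summary = ["yes", "no", "yes", "no"]
print(coverage_score(original, summary))  # 50.0
```

Only questions 1 and 3 are answered ‘yes’ by both texts, so the coverage score is 2 out of 4, or 50%.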

QAG is well suited to evaluating a summarization task because closed-ended questions constrain the LLM to a small set of possible answers, reducing the stochasticity that leads to arbitrariness and bias in LLM-Evals. (For those interested, there is another great read on why QAG is so effective as an LLM metric scorer.)
