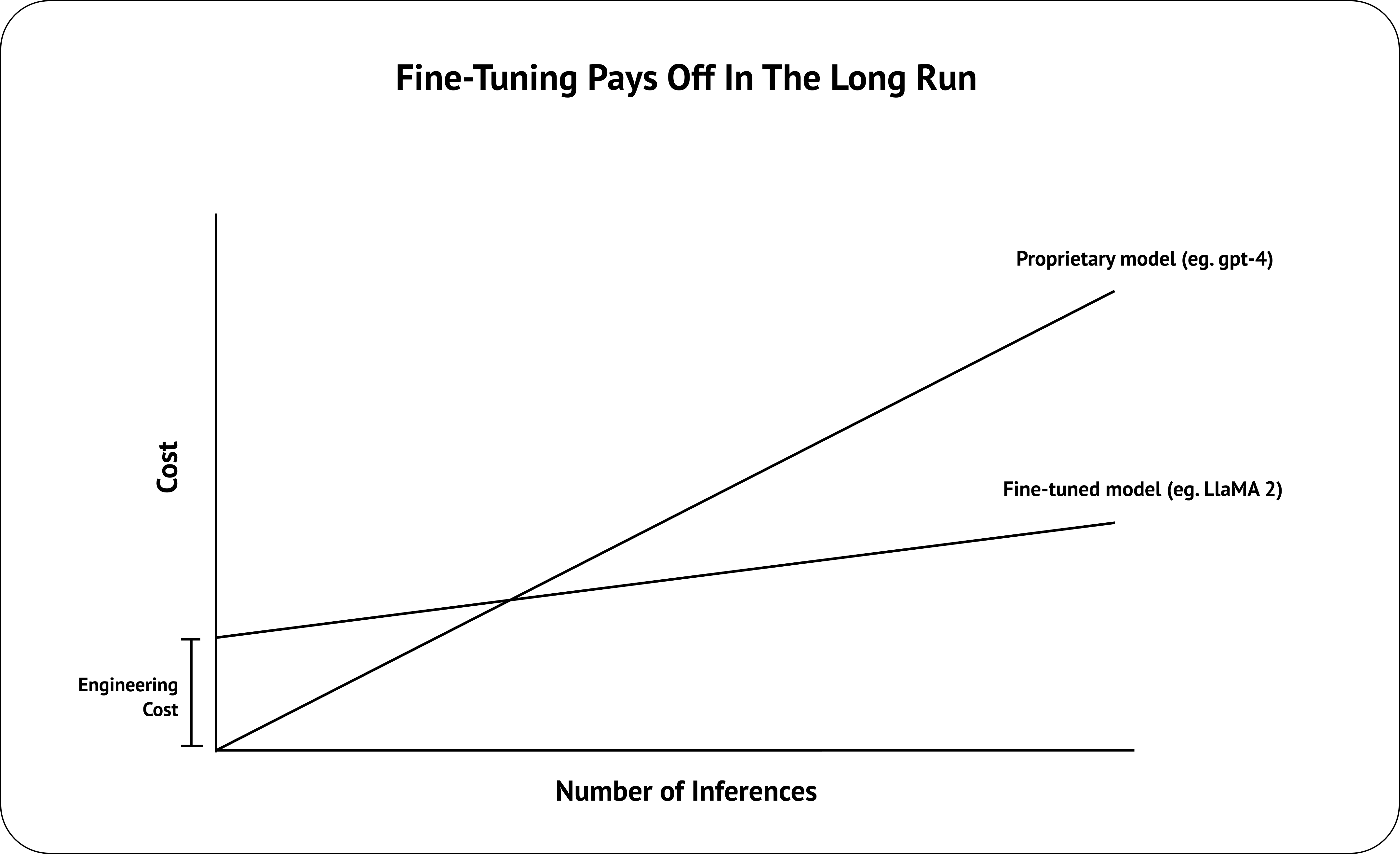

Fine-tuning a Large Language Model (LLM) comes with tons of benefits compared to relying on proprietary foundation models such as OpenAI’s GPT models. Think about it: you get 10x cheaper inference, 10x more tokens per second, and you don’t have to worry about any shady stuff OpenAI’s doing behind its APIs. The way everyone should be thinking about fine-tuning is not how to outperform OpenAI or replace RAG, but how to maintain the same performance while cutting down on inference time and cost for a specific use case.

But let’s face it, the average Joe building RAG applications isn’t confident in their ability to fine-tune an LLM: training data is hard to collect, methodologies are hard to understand, and fine-tuned models are hard to evaluate. And so, fine-tuning has become the best vitamin for LLM practitioners: nice to have, but rarely urgent. You’ll often hear excuses such as “Fine-tuning isn’t a priority right now”, “We’ll try with RAG and move to fine-tuning if necessary”, and the classic “It’s on the roadmap”. But what if I told you anyone could get started with fine-tuning an LLM in under 2 hours, for free, in under 100 lines of code? Instead of RAG or fine-tuning, why not both?

In this article, I’ll show you how to fine-tune a LLaMA-3 8B using Hugging Face’s transformers library and how to evaluate your fine-tuned model using DeepEval, all within a Google Colab.

Let’s dive right in.

What are LLaMA-3 and Fine-Tuning?

LLaMA-3 is Meta’s third-generation open-source LLM collection. It uses an optimized transformer architecture and comes in 8B and 70B sizes for various NLP tasks. Although pre-trained auto-regressive models like LLaMA-3 predict the next token in a sequence fairly well, fine-tuning is necessary to align their responses with human expectations.

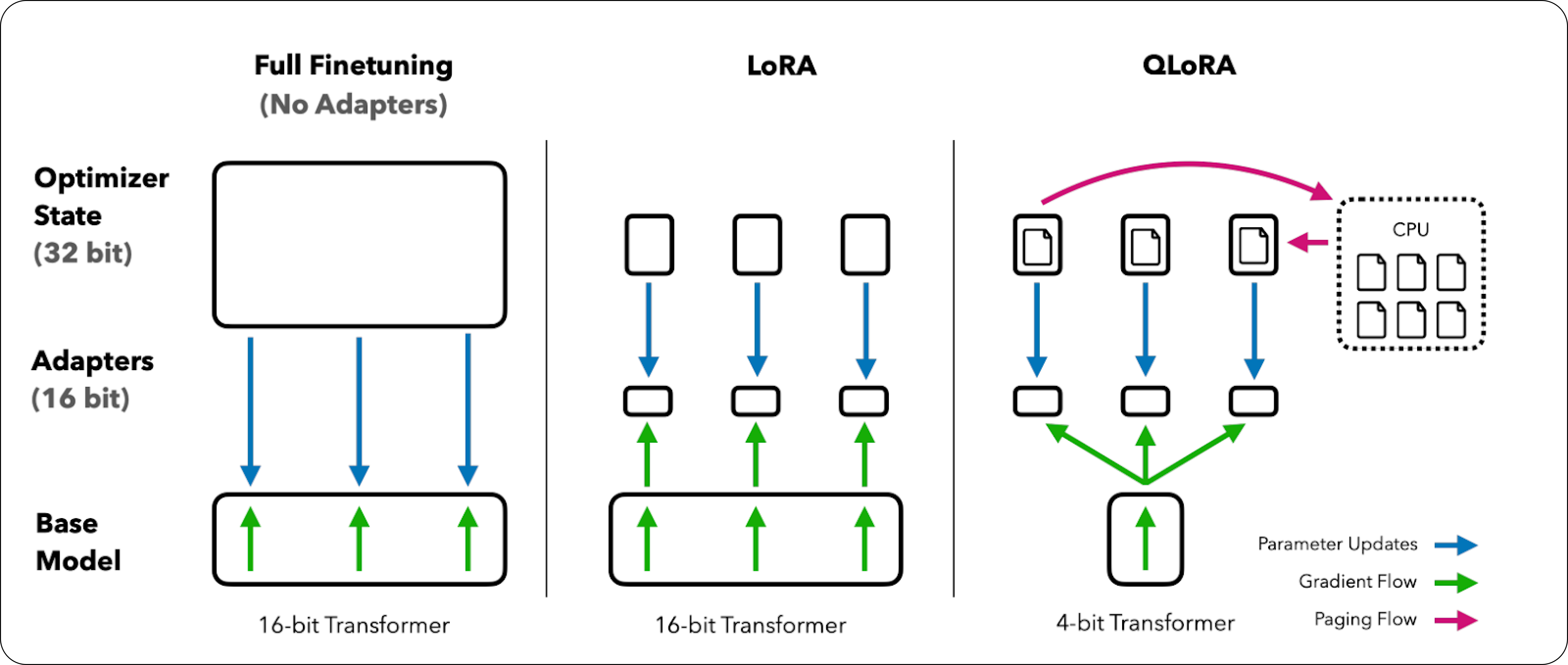

Fine-tuning in machine learning means adjusting a pre-trained model’s weights by training it on a task-specific dataset, so that it performs better on that task. In the case of LLaMA-3, this means instruction-tuning: training the model on a set of instructions and responses to make it useful as an assistant. Fine-tuning is great because you get to reuse all the compute that went into pre-training. Did you know it took Meta 1.3M GPU hours to train LLaMA-3 8B alone?
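
To make "a set of instructions and responses" concrete, here is a minimal sketch of how such pairs might be turned into training strings. The template, the `format_example` helper, and the two sample pairs are all made up for illustration; the real template depends on the model and tokenizer you end up using.

```python
# Hypothetical prompt template, chosen only for illustration.
# A real run would use whatever format the chosen model/tokenizer expects.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"


def format_example(instruction: str, response: str) -> str:
    """Join one instruction/response pair into a single training string."""
    return TEMPLATE.format(
        instruction=instruction.strip(),
        response=response.strip(),
    )


# Toy instruction/response pairs (invented for this sketch).
examples = [
    {"instruction": "Summarize: The cat sat on the mat.", "response": "A cat sat on a mat."},
    {"instruction": "Translate to French: Good morning.", "response": "Bonjour."},
]

# Each string is one supervised training example.
training_texts = [format_example(**ex) for ex in examples]
print(training_texts[0])
```

The point is only that each training example carries both the instruction and the response the model should learn to produce.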

Fine-tuning comes in two different forms:

SFT (Supervised Fine-Tuning): LLMs are fine-tuned on a set of instructions and responses. The model’s weights are updated to minimize the difference between the generated output and the labeled responses.

RLHF (Reinforcement Learning from Human Feedback): LLMs are trained to maximize a reward function learned from human preferences, typically using Proximal Policy Optimization (PPO); Direct Preference Optimization (DPO) is a related alternative that optimizes on preference pairs directly. This technique uses feedback from human evaluation of generated outputs, which captures more intricate human preferences but is sensitive to inconsistent human feedback.
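
For a feel of what preference-based training optimizes, here is a rough sketch of the DPO loss for a single preference pair, in plain Python. The function name, the argument names, and the numbers are assumptions for illustration; the inputs stand for summed log-probabilities of a whole response under the model being trained (the policy) and under a frozen reference copy.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is a response's summed log-probability. `beta` controls
    how strongly the policy is pushed away from the reference model.
    """
    # How much more the policy favors the chosen response than the reference does.
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(beta * margin)), written as log(1 + exp(-beta * margin)).
    return math.log1p(math.exp(-beta * margin))


# Toy log-probabilities (invented). Policy identical to the reference:
baseline = dpo_loss(-10.0, -12.0, -10.0, -12.0)
# Policy that has shifted probability toward the human-preferred response:
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)
print(baseline, improved)
```

The loss drops as the policy assigns relatively more probability to the response humans preferred, which is the behavior preference tuning is after.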

As you may have guessed, we’ll be employing SFT in this article to instruction-tune a LLaMA-3 8B model.
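
Under the hood, "minimizing the difference between the generated output and labeled responses" usually comes down to a cross-entropy loss over the labeled tokens. The sketch below computes it in plain Python on a toy 3-token vocabulary; the logits and label IDs are invented, and a real training loop would compute this with a deep learning framework rather than by hand.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sft_loss(logits_per_position, label_ids):
    """Mean negative log-likelihood of the labeled tokens."""
    nll = 0.0
    for logits, label in zip(logits_per_position, label_ids):
        probs = softmax(logits)
        nll += -math.log(probs[label])
    return nll / len(label_ids)


# Labeled response: token 2 followed by token 0 (toy IDs).
labels = [2, 0]
# Model that puts most of its score on the labeled tokens.
confident = [[0.1, 0.2, 3.0], [2.5, 0.3, 0.1]]
# Model that has no preference between the three tokens.
uncertain = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

print(sft_loss(confident, labels), sft_loss(uncertain, labels))
```

Gradient descent on this quantity nudges the weights so that the labeled response tokens become more likely, which is all SFT really does.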
