Manually evaluating LLM systems is tedious, time-consuming, and frustrating. If you’ve ever found yourself looping through a set of prompts to inspect each LLM output by hand, you’ll be happy to know that this article covers what you need to know about LLM evaluation to ensure the longevity of both you and your LLM application.

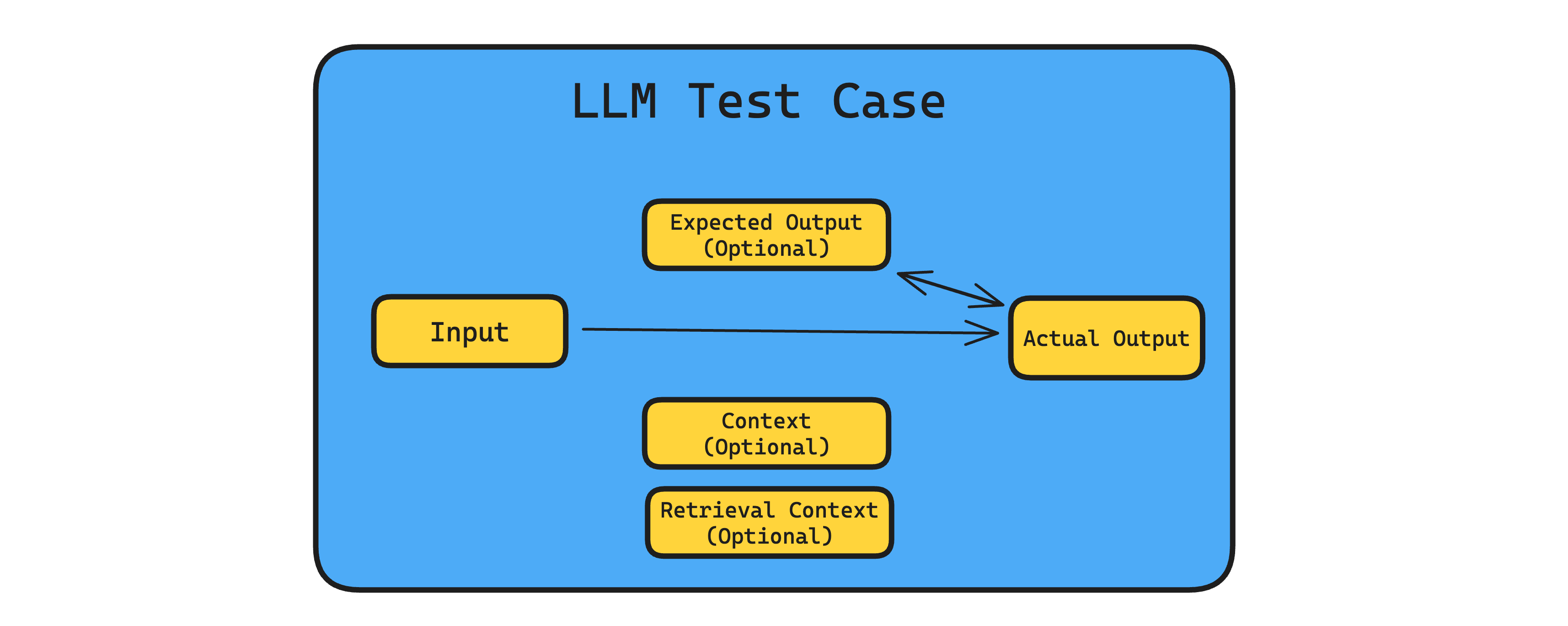

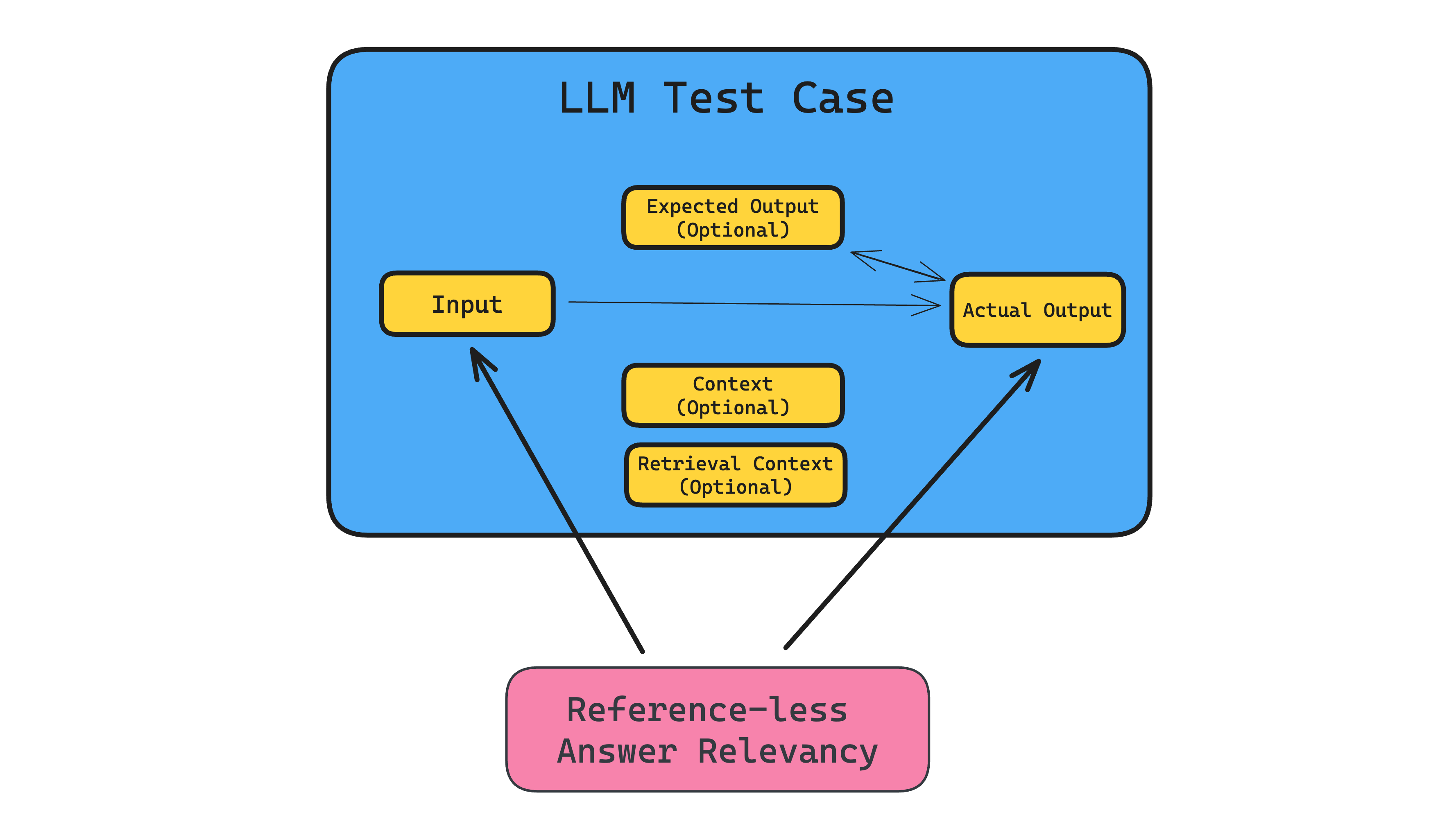

LLM evaluation refers to the process of ensuring LLM outputs are aligned with human expectations, which can range from ethical and safety considerations to more practical criteria such as the correctness and relevancy of LLM outputs. From an engineering perspective, these LLM outputs are often packaged as unit test cases, while the evaluation criteria are packaged as LLM evaluation metrics.

On the agenda, we have:

The difference between LLM and LLM system evaluation, and the benefits of each

Offline evaluations: what LLM system benchmarks are, how to construct evaluation datasets and choose the right LLM evaluation metrics (powered by LLM-as-a-judge), and common pitfalls

Real-time evaluations, and how they help improve benchmark datasets for offline evaluations

Real-world LLM system use cases and how to evaluate them, featuring chatbot QA and Text-SQL

Let’s begin.

LLM vs LLM System Evaluation

Let’s get this straight: while an LLM (Large Language Model) refers specifically to the model (e.g., GPT-4) trained to understand and generate human language, an LLM system refers to the complete setup that includes not only the LLM itself but also additional components, such as tool calling (for agents), retrieval systems (in RAG), and response caching, that make LLMs useful for real-world applications such as customer support chatbots, autonomous sales agents, and text-to-SQL generators.

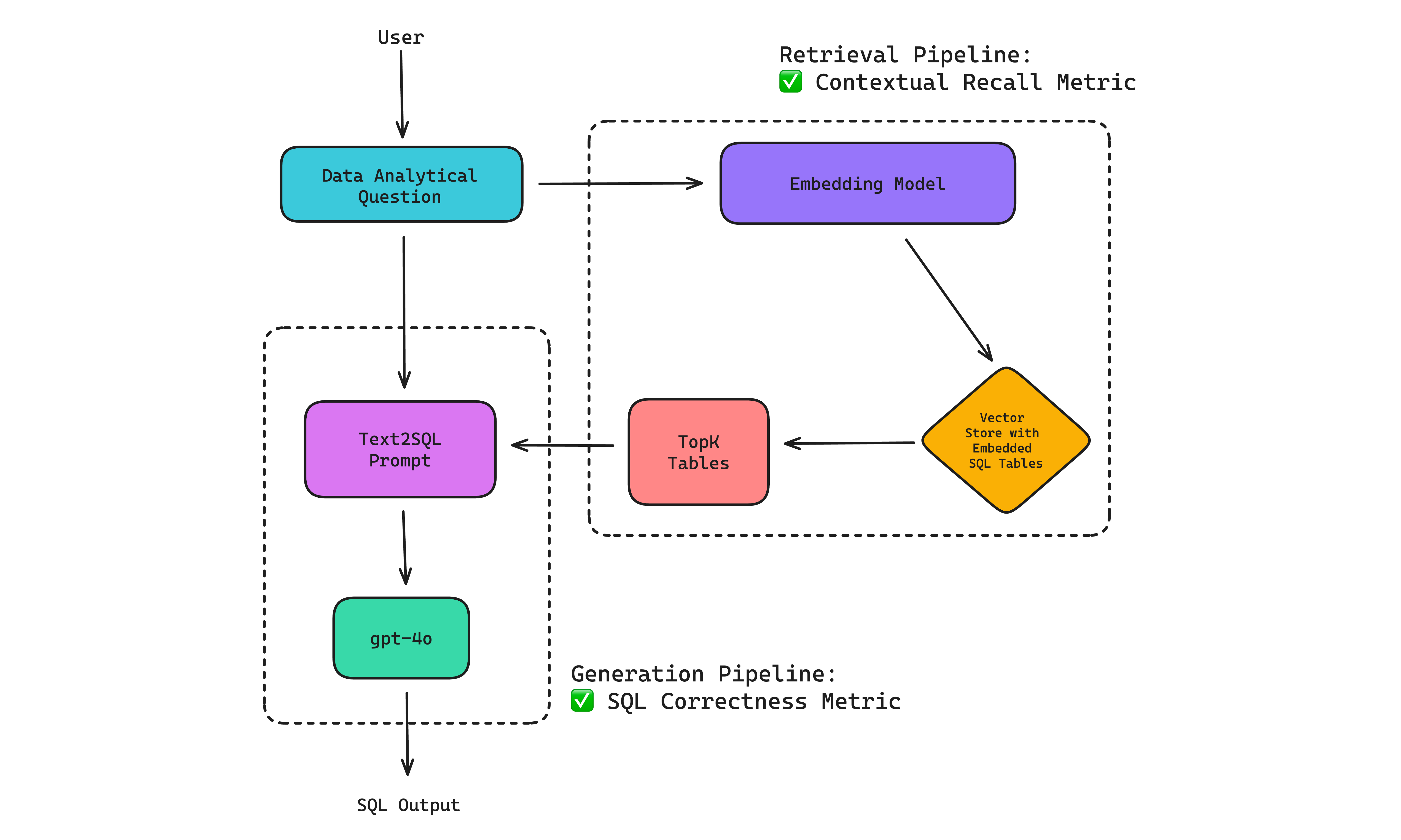

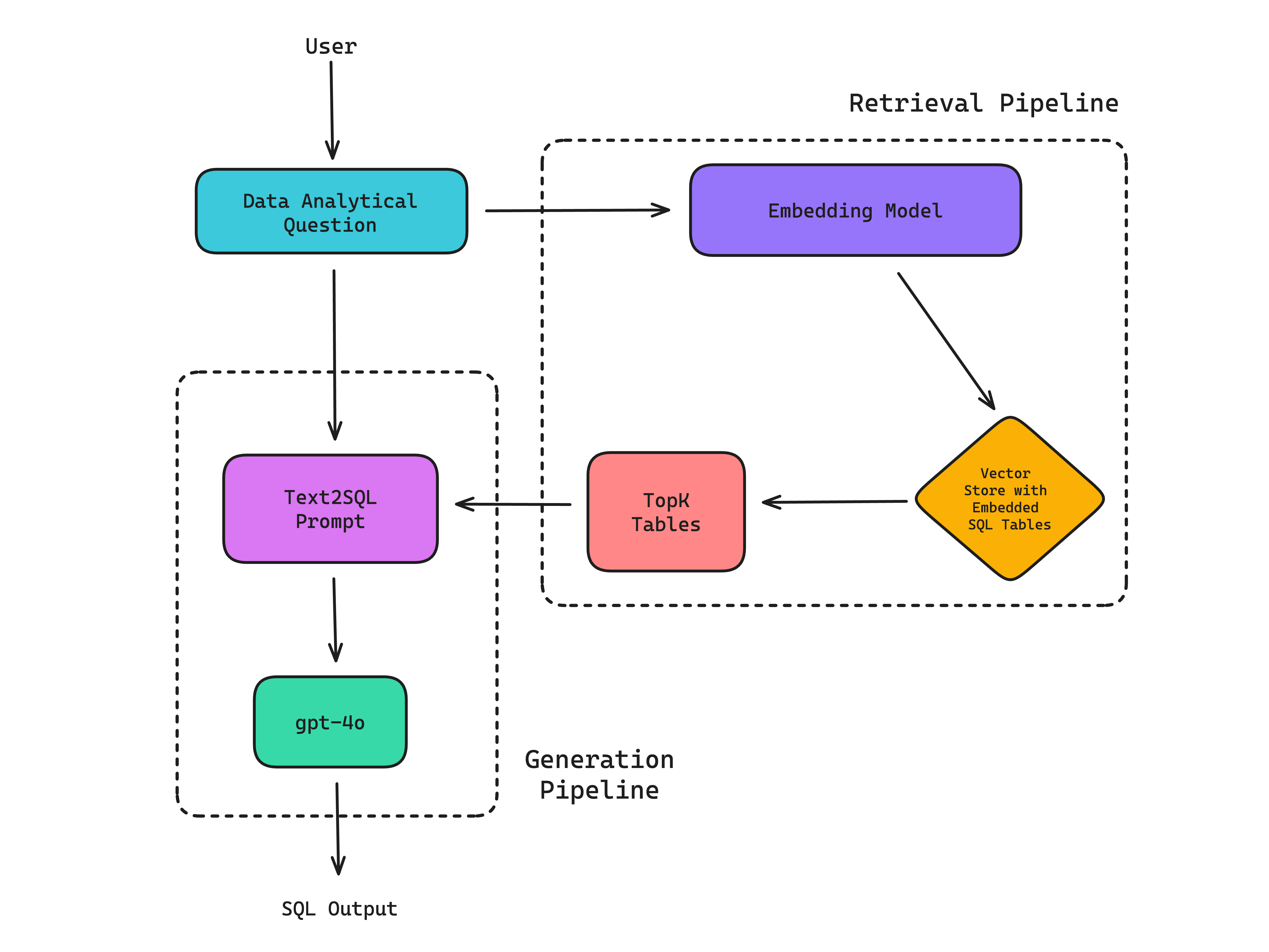

However, it’s important to note that an LLM system can sometimes simply be composed of the LLM itself, as is the case with ChatGPT. Here is an example RAG-based LLM system that performs the Text-SQL task:

Since the primary goal of Text-SQL is to generate correct and efficient SQL for a given user query, a retrieval pipeline usually first uses the user query to fetch the relevant tables from the database schema, and a SQL generation pipeline then uses those tables as context to generate the correct SQL. Together, they make up a (RAG-based) LLM system.

(Note: Technically, you don’t have to strictly perform a retrieval before generation, but even for a moderately sized database schema it is better to perform a retrieval to help the LLM hallucinate less.)
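To make the retrieve-then-generate flow above concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the toy `SCHEMA`, the keyword-overlap retriever (a real system would more likely use embeddings), and the `llm` callable, which stands in for whatever model client you use.

```python
import re
from typing import Callable

# Hypothetical toy schema: table name -> column names.
SCHEMA = {
    "customers": ["id", "name", "email", "country"],
    "orders": ["id", "customer_id", "total", "created_at"],
    "products": ["id", "title", "price"],
    "employees": ["id", "name", "salary"],
}


def tokens(text: str) -> set[str]:
    """Lowercase word tokens plus naive singular forms ("orders" -> "order")."""
    words = {w for w in re.split(r"[^a-z0-9]+", text.lower()) if w}
    return words | {w[:-1] for w in words if w.endswith("s") and len(w) > 2}


def retrieve_tables(query: str, schema: dict[str, list[str]], top_k: int = 2) -> list[str]:
    """Retrieval pipeline: rank tables by keyword overlap with the query."""
    query_tokens = tokens(query)
    scored = []
    for table, columns in schema.items():
        overlap = len(query_tokens & tokens(" ".join([table, *columns])))
        if overlap > 0:
            scored.append((overlap, table))
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [table for _, table in scored[:top_k]]


def build_prompt(query: str, schema: dict[str, list[str]], tables: list[str]) -> str:
    """Put only the retrieved tables into the generation context."""
    schema_lines = [f"{t}({', '.join(schema[t])})" for t in tables]
    return "Tables:\n" + "\n".join(schema_lines) + f"\n\nWrite a SQL query that answers: {query}"


def text_to_sql(
    query: str,
    schema: dict[str, list[str]],
    llm: Callable[[str], str],
    top_k: int = 2,
) -> tuple[list[str], str]:
    """Generation pipeline: retrieve tables, then ask the LLM for SQL."""
    tables = retrieve_tables(query, schema, top_k)
    return tables, llm(build_prompt(query, schema, tables))


if __name__ == "__main__":
    # Stub LLM so the sketch runs without any API access.
    stub_llm = lambda prompt: "SELECT 1  -- placeholder SQL from a stub model"
    print(text_to_sql("What is the total of all orders for each customer country?", SCHEMA, stub_llm))
```

Returning the retrieved tables alongside the generated SQL is a deliberate choice: it exposes the intermediate output of each component, which is what makes component-level evaluation possible later on.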

Evaluating an LLM system is therefore not as straightforward as evaluating an LLM itself. While both LLMs and LLM systems receive textual inputs and generate textual outputs, the fact that several components work in conjunction in an LLM system means you should apply LLM evaluation metrics more granularly, evaluating different parts of the system separately for maximum visibility into where things are going wrong (or right).

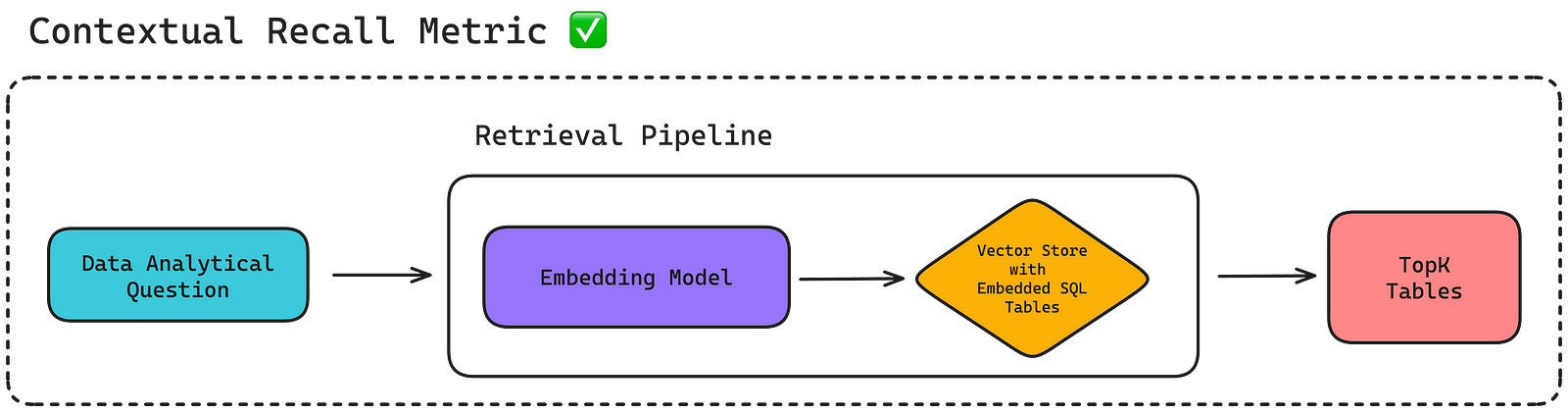

For example, you can apply a contextual recall metric to evaluate the retrieval pipeline in the Text-SQL example above to assess whether it is able to retrieve all necessary tables needed to answer a particular user query.
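As a rough illustration of the retrieval check described above, the sketch below computes recall over table names. This is a simplified, set-based stand-in, not an LLM-judged contextual recall metric: it assumes each test case comes with a hand-labeled list of the tables required to answer the query, and the function name `table_recall` is hypothetical.

```python
def table_recall(retrieved: list[str], required: list[str]) -> float:
    """Fraction of the required tables that the retriever actually returned."""
    required_set = set(required)
    if not required_set:
        # Nothing was needed, so nothing could have been missed.
        return 1.0
    return len(required_set & set(retrieved)) / len(required_set)


if __name__ == "__main__":
    # The retriever found "orders" but missed "customers".
    print(table_recall(retrieved=["orders", "products"], required=["orders", "customers"]))
```

A score below 1.0 here points at the retrieval pipeline specifically: the generator cannot be expected to write correct SQL against tables it never saw.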

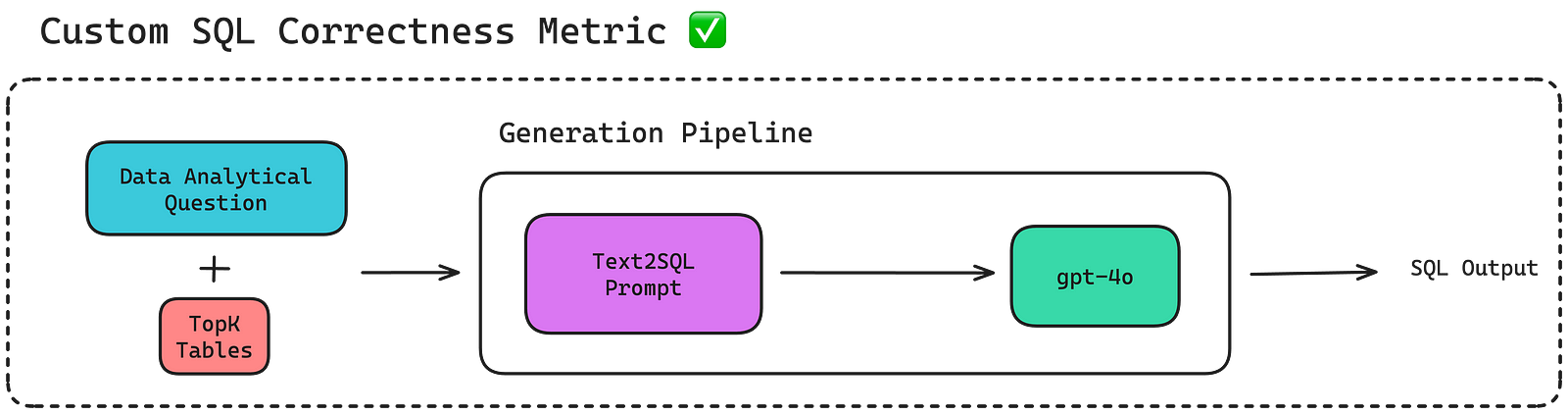

Similarly, you can also apply a custom SQL correctness metric implemented via G-Eval to evaluate whether the generation pipeline generates the correct SQL based on the top-K data tables retrieved.
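The overall shape of such a metric can be sketched as follows: state the criteria in natural language, hand the test case to a judge LLM, and parse a score from its reply. This is only a G-Eval-style sketch under stated assumptions, not the G-Eval algorithm itself (which, as originally described, also weights scores by token probabilities). The `judge` callable, the `SCORE:` reply format, the 1 to 10 scale, and the 0.7 threshold are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class SQLTestCase:
    user_query: str
    retrieved_schema: str  # the top-K tables given to the generator
    generated_sql: str


# Hypothetical criteria and steps; tune these to your own use case.
CRITERIA = "Determine whether the generated SQL correctly answers the user query using only the retrieved tables."
EVALUATION_STEPS = [
    "Check that every table and column in the SQL exists in the retrieved schema.",
    "Check that the filters, joins, and aggregations match the intent of the user query.",
    "Penalize SQL that is syntactically invalid or returns unrelated columns.",
]


def build_judge_prompt(case: SQLTestCase) -> str:
    """Assemble criteria, steps, and the test case into one judge prompt."""
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(EVALUATION_STEPS, start=1))
    return (
        f"Criteria: {CRITERIA}\n\nEvaluation steps:\n{steps}\n\n"
        f"User query: {case.user_query}\n"
        f"Retrieved schema: {case.retrieved_schema}\n"
        f"Generated SQL: {case.generated_sql}\n\n"
        "Reply with 'SCORE: <integer from 1 to 10>'."
    )


def sql_correctness(
    case: SQLTestCase, judge: Callable[[str], str], threshold: float = 0.7
) -> tuple[float, bool]:
    """Return (score in [0.1, 1.0], passed) based on the judge's reply."""
    reply = judge(build_judge_prompt(case))
    match = re.search(r"SCORE:\s*(10|[1-9])\b", reply)
    if match is None:
        raise ValueError(f"Could not parse a score from judge reply: {reply!r}")
    score = int(match.group(1)) / 10
    return score, score >= threshold


if __name__ == "__main__":
    case = SQLTestCase(
        user_query="How many orders were placed?",
        retrieved_schema="orders(id, customer_id, total, created_at)",
        generated_sql="SELECT COUNT(*) FROM orders;",
    )
    # Stub judge so the sketch runs offline; swap in a real LLM call.
    print(sql_correctness(case, judge=lambda prompt: "SCORE: 8"))
```

Passing the judge in as a plain callable keeps the metric testable with a stub and independent of any particular model provider.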

In summary, an LLM system is composed of multiple components that help make an LLM more effective in carrying out its task as shown in the Text-SQL example, and it is harder to evaluate because of its complex architecture.

In the next section, we will see how to perform LLM system evaluation in development (a.k.a. offline evaluations), including ways to quickly create large numbers of test cases to unit test our LLM system, and how to pick the right LLM evaluation metrics for specific components.
