LLM observability has become a crowded category. Every platform promises tracing, monitoring, and dashboards — but most teams quickly discover that seeing what their AI does isn't the same as knowing whether it's doing it well. The tools that matter in 2026 aren't the ones with the most integrations or the prettiest trace viewer. They're the ones that help you systematically improve AI quality across your entire team.

This guide compares the seven most relevant LLM observability tools based on what actually drives value: evaluation depth, cross-functional accessibility, and the ability to catch problems before they reach production — not just log them after they do.

What Makes Good LLM Observability Great

Every engineering team has some form of tracing setup. The real question is whether your LLM observability tool does anything meaningful with those traces — or whether you've just layered another APM-style dashboard on top of your stack that logs prompts, tokens, latency, and model costs without adding AI-specific insight.

If your “LLM observability” looks indistinguishable from traditional APM — just with tokens instead of SQL queries — you’re monitoring infrastructure, not AI behavior.

LLM observability is only useful if it tightens the iteration loop: traces should feed the development and alerting workflows your team already uses, rather than leaving production data in one silo, evaluations in another, and alerts in a third.

Tight iteration loops, not tool sprawl

LLM observability only works if traces flow directly into development and alerting workflows. If production data lives in one tool, evaluations in another, and alerts in a third, iteration slows down. Engineers context-switch. Insights get lost. Quality degrades quietly.

Great observability connects tracing, evaluation, and alerting into a single feedback loop.

Evaluation depth, not just trace logging

Traces tell you what happened. Evaluations tell you whether it was good. Your platform should be able to answer questions like:

Was the output faithful to retrieved context?

Did the agent select the correct tool?

Was the response relevant and safe?

If it cannot, you have logging, not AI quality monitoring. Great observability includes research-backed metrics for faithfulness, relevance, hallucination, and safety. It evaluates directly on production traces, not just curated development datasets.
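To make "evaluating directly on production traces" concrete, here is a minimal sketch that scores trace records against their retrieved context. The trace fields (`id`, `context`, `output`) and the function names are assumptions made for illustration, and the token-overlap score is a deliberately crude stand-in for a real faithfulness metric, which would typically rely on an LLM judge.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately crude normalizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def overlap_faithfulness(output: str, context: str) -> float:
    """Fraction of output tokens that also appear in the retrieved context.

    Toy proxy only: production faithfulness metrics usually use an LLM judge.
    """
    out_tokens = tokenize(output)
    if not out_tokens:
        return 1.0  # nothing was claimed, so nothing can be unfaithful
    return len(out_tokens & tokenize(context)) / len(out_tokens)

def evaluate_traces(traces: list[dict]) -> list[dict]:
    """Return copies of the traces with a 'faithfulness' score attached."""
    return [
        {**t, "faithfulness": overlap_faithfulness(t["output"], t["context"])}
        for t in traces
    ]

# Hypothetical trace records; field names are illustrative.
traces = [
    {"id": "t1", "context": "The refund window is 30 days.",
     "output": "The refund window is 30 days."},
    {"id": "t2", "context": "The refund window is 30 days.",
     "output": "Refunds take 90 days and need a manager."},
]
scored = evaluate_traces(traces)
for t in scored:
    print(t["id"], t["faithfulness"])
```

The second trace scores far lower than the first because most of its claims never appear in the retrieved context, which is the kind of signal a plain trace log would not surface.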

Quality-aware monitoring and alerting

Your existing stack already catches latency spikes and 500 errors. What it does not catch:

Silent hallucinations

Gradual drops in relevance

Safety regressions

Tool misuse

Great LLM observability alerts on AI quality shifts — not just infrastructure failures.
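As a rough illustration of alerting on quality rather than infrastructure, the sketch below fires when the rolling mean of an evaluation score drops below a threshold. The class name, the threshold, and the window size are all hypothetical choices, not any platform's actual alerting logic.

```python
from collections import deque
from statistics import mean

class QualityAlert:
    """Fires when the rolling mean of an evaluation score drops below a threshold."""

    def __init__(self, threshold: float, window: int) -> None:
        self.threshold = threshold
        self.scores = deque(maxlen=window)  # keeps only the latest `window` scores

    def observe(self, score: float) -> bool:
        """Record one score; return True if the alert should fire."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and mean(self.scores) < self.threshold

alert = QualityAlert(threshold=0.8, window=3)
fired = [alert.observe(s) for s in [0.9, 0.9, 0.9, 0.7, 0.6, 0.5]]
print(fired)  # [False, False, False, False, True, True]
```

Waiting for a full window before alerting avoids paging on a single bad response, at the cost of reacting a few requests later.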

Drift detection for prompts and use cases

AI systems degrade over time. Prompt changes, model updates, and shifts in user behavior all introduce drift. Without monitoring, degradation spreads quietly across segments and workflows. Great observability tracks quality across:

Prompt versions

User segments

Conversation types

Application flows

When combined with regression testing, teams can pinpoint whether drift stems from prompts, models, or usage patterns.
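One simple way to localize drift is to slice the same scored records along different dimensions and compare each slice to a baseline. The sketch below assumes hypothetical record fields (`prompt_version`, `segment`, `score`) and an arbitrary tolerance.

```python
from collections import defaultdict
from statistics import mean

def mean_by(records: list[dict], key: str) -> dict[str, float]:
    """Average the 'score' field of records grouped by the given key."""
    groups = defaultdict(list)
    for r in records:
        groups[r[key]].append(r["score"])
    return {k: mean(v) for k, v in groups.items()}

def drifted(records: list[dict], key: str, baseline: float,
            tolerance: float = 0.05) -> list[str]:
    """Return groups whose mean score fell more than `tolerance` below baseline."""
    return sorted(
        k for k, m in mean_by(records, key).items() if baseline - m > tolerance
    )

# Hypothetical scored traces.
records = [
    {"prompt_version": "v1", "segment": "free", "score": 0.90},
    {"prompt_version": "v1", "segment": "paid", "score": 0.88},
    {"prompt_version": "v2", "segment": "free", "score": 0.70},
    {"prompt_version": "v2", "segment": "paid", "score": 0.86},
]
by_version = drifted(records, "prompt_version", baseline=0.89)
by_segment = drifted(records, "segment", baseline=0.89)
print(by_version, by_segment)  # ['v2'] ['free']
```

Here the drop shows up under both prompt version `v2` and the `free` segment, which narrows the investigation to how the new prompt behaves for that group of users.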

Workflows that go beyond engineering

If only engineers can run evaluations or annotate outputs, quality scales with engineering headcount. Product managers, domain experts, and QA teams should be able to review outputs, contribute feedback, and monitor quality without filing tickets.

Great observability systems expand access to AI quality work rather than bottlenecking it.

Regression testing and pre-deployment checks

Production monitoring is reactive. You discover problems after users do. Great observability prevents regressions from reaching production. Automated regression testing and CI/CD quality gates stop prompt or model changes that degrade performance.

Monitoring finds issues. Regression testing prevents them.
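A CI/CD quality gate can be as small as a script that compares evaluation scores for the current prompt against a candidate and returns a non-zero exit code on regression. This is a minimal sketch; the function names, the sample scores, and the `max_drop` tolerance are assumptions.

```python
from statistics import mean

def gate(baseline: list[float], candidate: list[float],
         max_drop: float = 0.02) -> bool:
    """Pass if the candidate's mean score is at most max_drop below baseline."""
    return mean(baseline) - mean(candidate) <= max_drop

def run_gate(baseline: list[float], candidate: list[float]) -> int:
    """Return a process exit code so a CI job can fail on regression."""
    if gate(baseline, candidate):
        print("quality gate passed")
        return 0
    print("quality gate failed: candidate regressed")
    return 1

# Hypothetical scores from running the same test set on both versions.
baseline_scores = [0.90, 0.85, 0.95]
candidate_scores = [0.80, 0.82, 0.84]
exit_code = run_gate(baseline_scores, candidate_scores)
# In a real pipeline the script would end with sys.exit(exit_code).
```

Comparing means is the simplest possible rule; a stricter gate might also check the worst-performing test case so that one severe failure is not averaged away.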

Multi-turn and conversational support

Single-turn tracing is table stakes. Most real AI failures emerge across turns:

Context drift

Escalating hallucinations

Lost conversational coherence

Tool selection breakdowns

If your platform treats each request independently, you are blind to systemic failure patterns. Great observability understands conversations, not just calls.
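To see why per-request scoring misses conversational failures, consider this sketch, which groups scored turns by session and flags sessions whose quality declines from the first turn to the last. The field names (`session_id`, `turn`, `score`) and the `min_drop` value are illustrative assumptions.

```python
from collections import defaultdict

def group_by_session(turns: list[dict]) -> dict[str, list[dict]]:
    """Group turn records by session id, ordered by turn index."""
    sessions = defaultdict(list)
    for t in turns:
        sessions[t["session_id"]].append(t)
    return {sid: sorted(ts, key=lambda t: t["turn"]) for sid, ts in sessions.items()}

def degrading_sessions(turns: list[dict], min_drop: float = 0.2) -> list[str]:
    """Sessions whose last-turn score is at least min_drop below the first."""
    flagged = []
    for sid, ts in group_by_session(turns).items():
        if ts[0]["score"] - ts[-1]["score"] >= min_drop:
            flagged.append(sid)
    return sorted(flagged)

# Hypothetical per-turn evaluation scores.
turns = [
    {"session_id": "a", "turn": 1, "score": 0.9},
    {"session_id": "a", "turn": 2, "score": 0.8},
    {"session_id": "a", "turn": 3, "score": 0.5},
    {"session_id": "b", "turn": 1, "score": 0.9},
    {"session_id": "b", "turn": 2, "score": 0.9},
]
flagged = degrading_sessions(turns)
print(flagged)  # ['a']
```

Session `a` is flagged because of its trajectory across turns, a pattern that only becomes visible once requests are grouped into conversations.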

Framework flexibility without lock-in

Your LLM stack will evolve. Today it might be LangChain. Tomorrow it could be Pydantic AI or a custom agent framework. Great observability provides consistent trace capture and quality monitoring across frameworks. OpenTelemetry support and ecosystem neutrality prevent observability from becoming a bottleneck.
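The idea behind framework-neutral capture can be sketched as a set of small adapters that map each framework's events into one common span shape. Everything here is hypothetical: the `Span` fields, the two payload formats, and the adapter names are invented for illustration and do not correspond to any real framework or to the OpenTelemetry schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Span:
    """Common shape every framework-specific event is mapped into."""
    name: str
    model: str
    input_tokens: int
    output_tokens: int

# Hypothetical payload shapes; real frameworks emit different fields.
def from_framework_a(event: dict) -> Span:
    return Span(event["step"], event["llm"],
                event["usage"]["in"], event["usage"]["out"])

def from_framework_b(event: dict) -> Span:
    return Span(event["name"], event["model_name"],
                event["prompt_tokens"], event["completion_tokens"])

ADAPTERS = {"a": from_framework_a, "b": from_framework_b}

def normalize(source: str, event: dict) -> Span:
    """Route an event through the adapter registered for its source."""
    return ADAPTERS[source](event)

span_a = normalize("a", {"step": "answer", "llm": "model-x",
                         "usage": {"in": 120, "out": 40}})
span_b = normalize("b", {"name": "answer", "model_name": "model-x",
                         "prompt_tokens": 120, "completion_tokens": 40})
print(span_a == span_b)  # True
```

Because downstream evaluation and alerting only ever see `Span`, switching frameworks means writing one new adapter rather than rebuilding the monitoring pipeline.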

How We Evaluated These Tools

With these principles in mind, we assessed each platform across six dimensions:

Evaluation maturity: Are the metrics research-backed and widely validated? Can teams define custom evaluators easily? Is evaluation core to the product or layered onto tracing?

Observability depth: Beyond basic integrations, can you drill into agent components, query large trace volumes efficiently, and evaluate directly on production traffic?

Non-technical accessibility: Can product managers or domain experts run evaluation cycles independently — upload datasets, trigger applications, and review results — without engineering support?

Setup friction: How quickly can a team integrate the platform? Clear SDKs, sensible defaults, and strong documentation matter more than raw feature count.

Data portability: Can you export trace data and metrics through APIs or standard formats? How difficult is migration if requirements change?

Annotation and feedback loops: Can domain experts annotate traces inline? Do those annotations feed back into evaluation datasets and model improvement workflows?

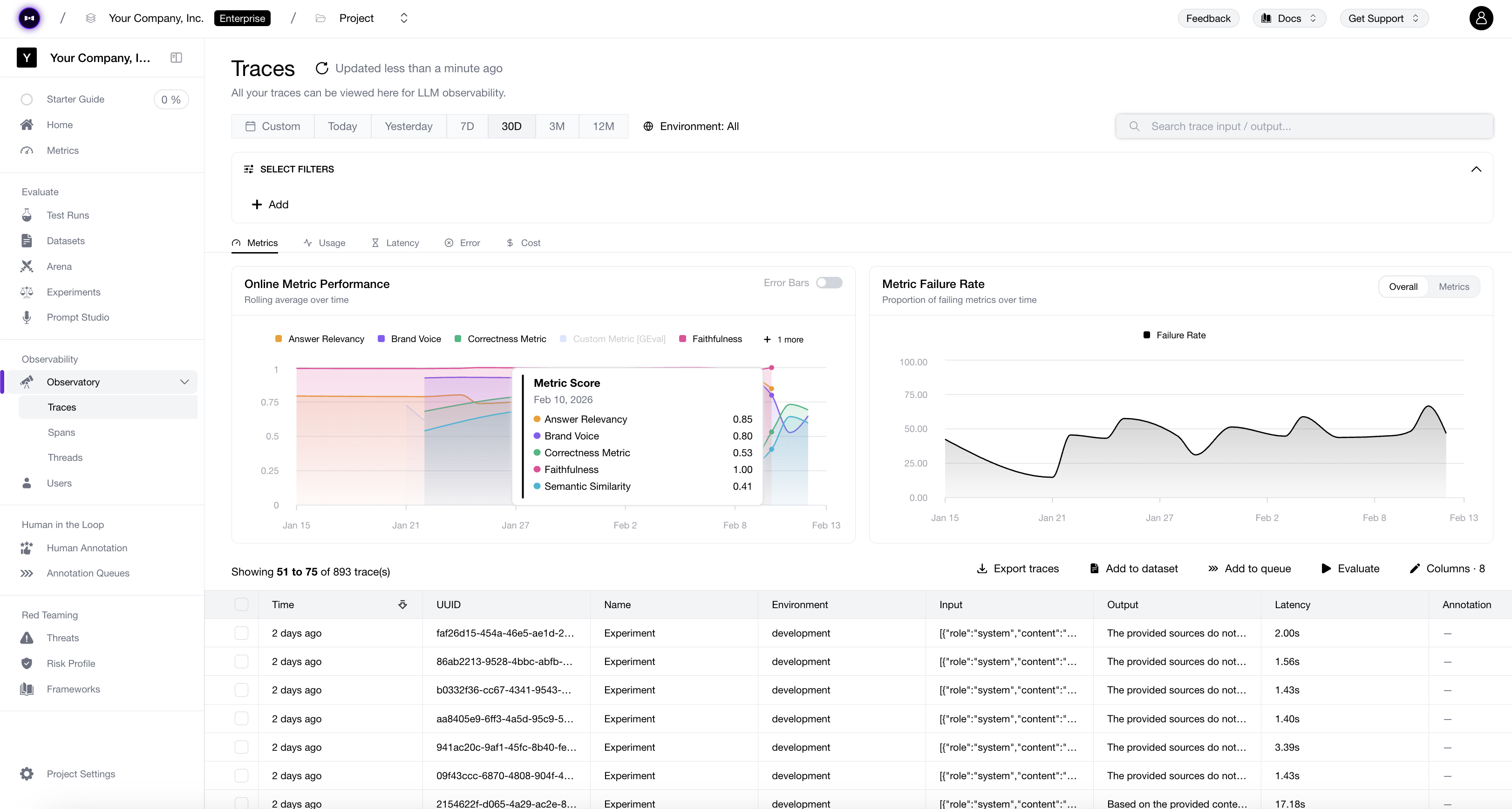

1. Confident AI

Confident AI is an evaluation-first LLM observability platform built around AI quality — combining tracing, evaluation, and human annotation in a single collaborative workspace to tighten the iteration loop. Unlike most observability tools that bolt evaluation onto tracing, Confident AI makes AI quality the core product, with observability as the supporting infrastructure.

It is also the cheapest observability tool per GB on the list, at $1 per GB-month retained or ingested, with no limitations on the number of traces and spans. Its 50+ research-backed evaluation metrics are open-source through DeepEval, one of the most widely adopted LLM evaluation frameworks.

Customers include Panasonic, Toshiba, Amdocs, BCG, CircleCI, and Humach. Humach, an enterprise voice AI company serving McDonald's, Visa, and Amazon, shipped deployments 200% faster after adopting Confident AI.

Best for: Cross-functional teams that need evaluation-first observability to monitor AI quality in production and get alerted to drift across prompts, use cases, and user behavior over time.

Key Capabilities

Unified tracing: OpenTelemetry-native with 10+ framework integrations, graph visualization, and full conversation tracing across sessions.

Quality-aware alerting: Alerts fire on drops in online evaluation metrics such as faithfulness (via LLM-as-a-judge), relevance, and safety, as well as on heuristics such as latency, cost, and error spikes.

Prompt monitoring & regression detection: Compare prompt versions and detect quality drift before users are affected.

Automatic dataset curation from trace data: Production traces are automatically converted into evaluation datasets, eliminating the manual effort of building test sets from scratch. This bridges observability and development — turning what you see in production into what you test against before the next deployment.
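The general idea of curating datasets from traces can be sketched as follows. This is not Confident AI's implementation; the trace fields, the `max_score` cutoff, and the de-duplication rule are assumptions chosen to keep the example small.

```python
def curate(traces: list[dict], max_score: float = 0.6) -> list[dict]:
    """Turn low-scoring traces into test cases, skipping repeated inputs."""
    seen = set()
    cases = []
    for t in traces:
        key = t["input"].strip().lower()  # crude de-duplication key
        if t["score"] <= max_score and key not in seen:
            seen.add(key)
            cases.append({
                "input": t["input"],
                "actual_output": t["output"],
                "source_trace": t["id"],
            })
    return cases

# Hypothetical scored production traces.
traces = [
    {"id": "t1", "input": "How do I reset my password?", "output": "...", "score": 0.4},
    {"id": "t2", "input": "What plans do you offer?", "output": "...", "score": 0.9},
    {"id": "t3", "input": "how do I reset my password? ", "output": "...", "score": 0.5},
    {"id": "t4", "input": "Cancel my plan", "output": "...", "score": 0.3},
]
dataset = curate(traces)
print([c["source_trace"] for c in dataset])  # ['t1', 't4']
```

Keeping the source trace id on each case lets a reviewer jump back to the original production interaction when a regression test later fails.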

Pros

Complements your existing software observability stack rather than duplicating it — focused on AI quality, not another tracing dashboard

Tightens the iteration loop between production and development through automatic dataset curation and drift detection

Non-technical team members contribute to AI quality independently without creating engineering bottlenecks

One platform replaces what would otherwise be separate vendors for observability, evaluation, red teaming, and simulation

Cons

Not open-source — cloud-based with enterprise self-hosting available, unlike Langfuse or Phoenix

Teams that only need basic tracing without evaluation workflows may find the platform broader than necessary

GB-based pricing is straightforward at scale but may require a short calibration period to estimate initial usage

Pricing for Confident AI starts at $0 (Free), $19.99/seat/month (Starter), $79.99/seat/month (Premium), and custom pricing for the Team and Enterprise plans.

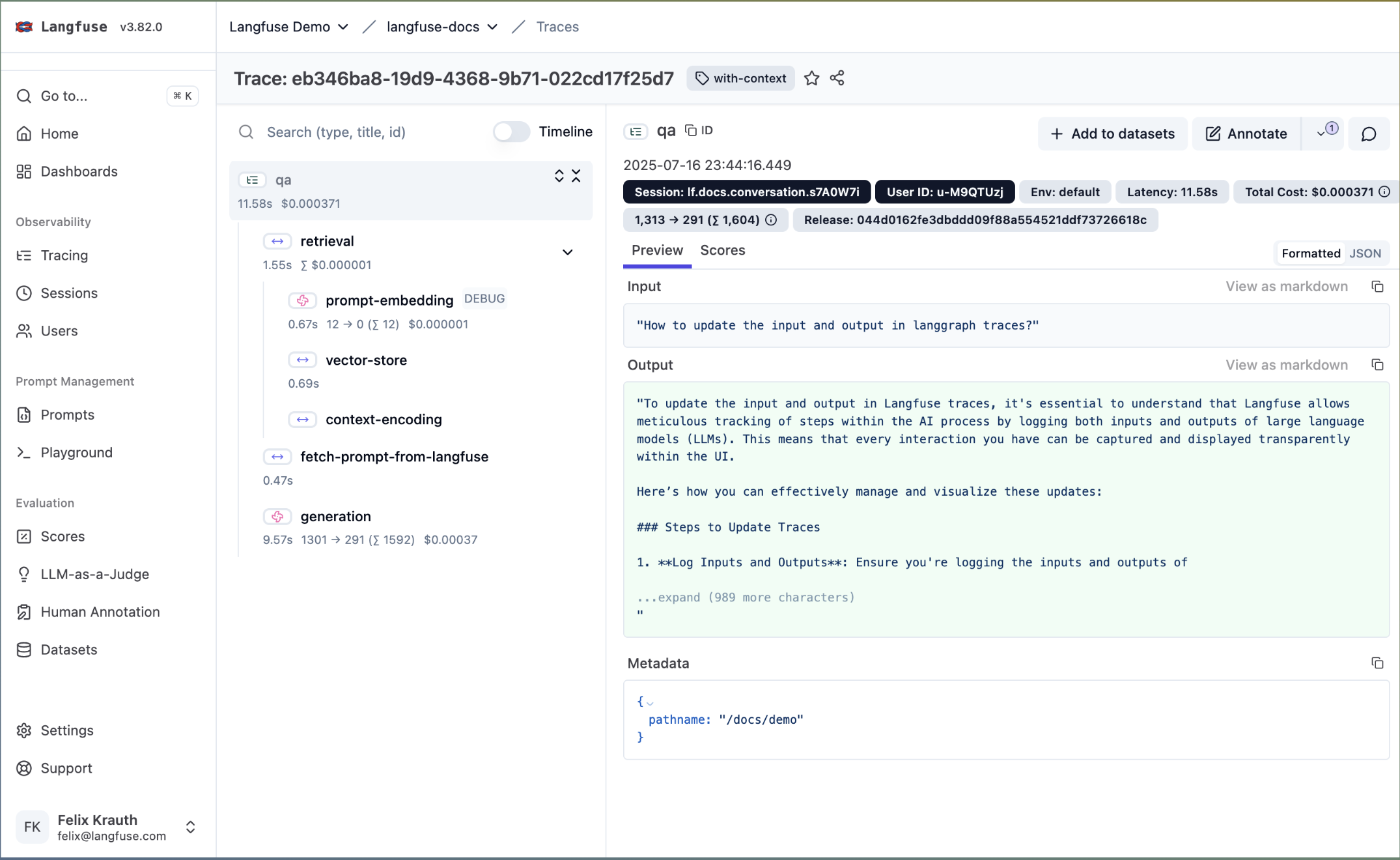

2. Langfuse

Langfuse is an open-source LLM observability platform focused on trace capture, cost monitoring, and OpenTelemetry-native instrumentation.

Best for: Engineering teams that want self-hosted LLM tracing with infrastructure-level control.

Key Features

OpenTelemetry-based tracing for prompts, completions, metadata, and latency

Session-level grouping for multi-turn conversations

Token usage and cost tracking

Trace search and performance dashboards

Pros

Fully open-source with self-hosting support

Strong OpenTelemetry alignment

Flexible deployment and data control

Cons

No native alerting or real-time monitoring triggers

Limited built-in quality-aware monitoring

Recently acquired, creating roadmap uncertainty

Pricing for Langfuse starts at $0, $29.99/month (Core), $199/month (Pro), and $2499/year for Enterprise.

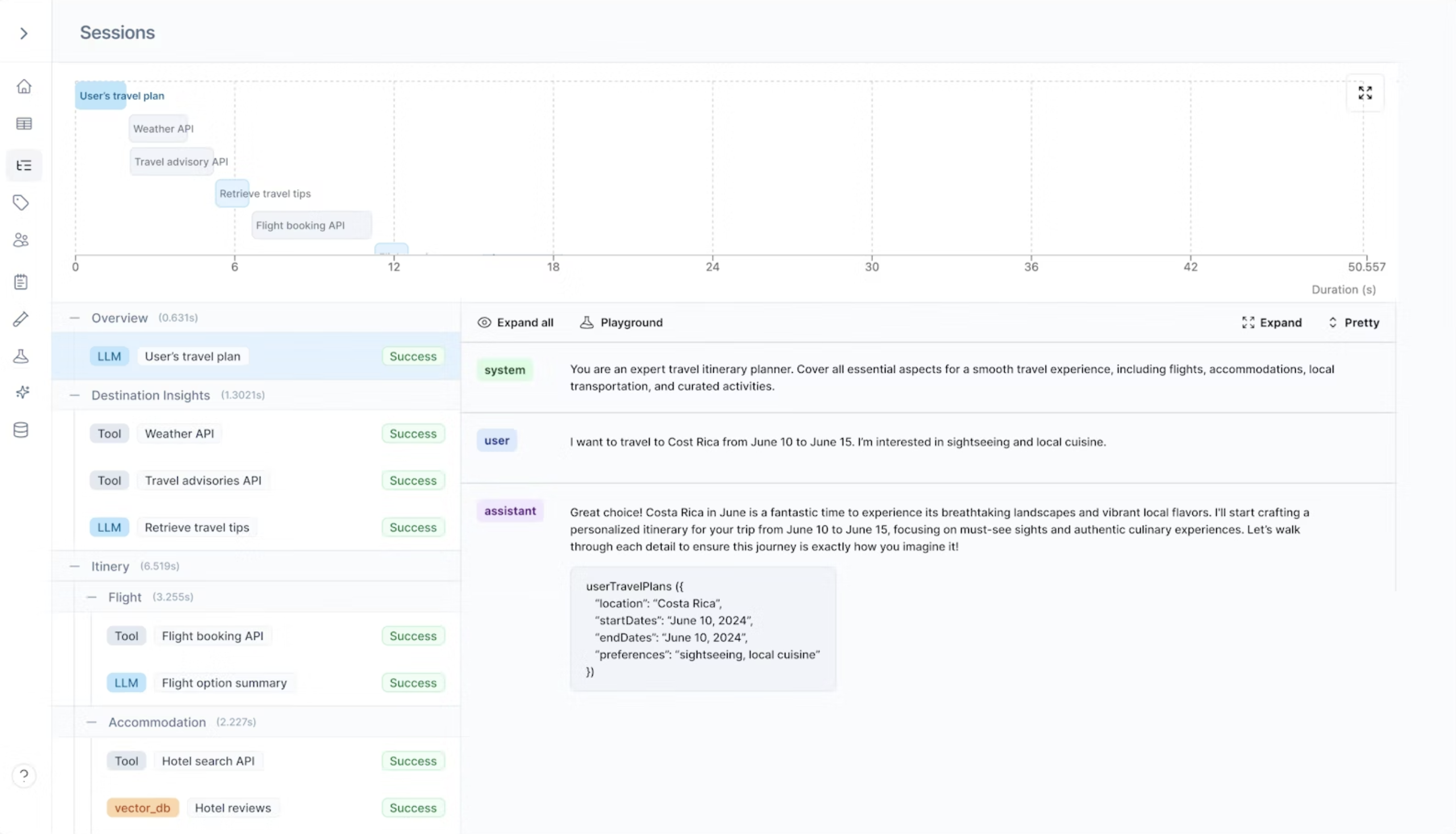

3. LangSmith

LangSmith is a managed LLM observability platform from the LangChain team, built to trace and debug LangChain-based applications.

Best for: Teams building heavily on LangChain who want native tracing without managing infrastructure.

Key Features

Native LangChain and LangGraph trace capture

Agent execution graph visualization

Token usage and latency monitoring

Trace search and filtering

Pros

Deep visibility into LangChain workflows

Managed infrastructure reduces operational overhead

Clear agent execution visualization

Cons

Observability depth drops outside LangChain

No self-hosting option

Seat-based pricing limits team-wide access

Pricing for LangSmith starts at $0 (Developer), $39/seat per month (Plus), and custom pricing for Enterprise.

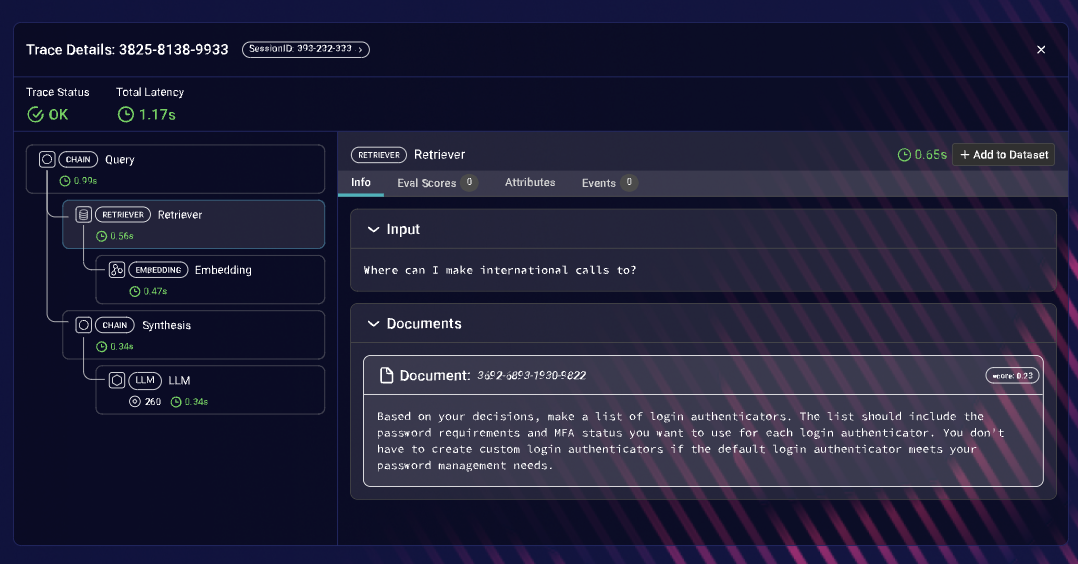

4. Arize AI

Arize AI is an enterprise-grade observability platform that extends ML monitoring infrastructure into LLM systems with span-level tracing and real-time telemetry.

Best for: Large organizations running high-volume LLM workloads that require scalable monitoring.

Key Features

Span-level LLM tracing with custom metadata

Real-time dashboards for latency, error rates, and token usage

Agent workflow visualization

Interactive trace querying and filtering

Pros

Built for high-scale production environments

Strong real-time monitoring capabilities

Mature enterprise infrastructure

Cons

Interface geared toward technical users

Commercial tiers required for advanced features

Setup complexity for smaller teams

Pricing for Arize AI starts at $0 (Phoenix, open source), $0 (AX Free), $50/month (AX Pro), and custom pricing for AX Enterprise.

5. Helicone

Helicone combines an AI gateway with request-level observability focused on provider monitoring and cost tracking.

Best for: Teams using multiple LLM providers that need lightweight model-level observability.

Key Features

Unified gateway across 100+ LLM providers

Request-level logging for prompts and completions

Cost, latency, and error tracking

Spend monitoring with budget thresholds

Pros

Strong multi-provider visibility

Quick setup with minimal instrumentation

Granular cost tracking and attribution

Cons

Limited to model request-level visibility

No deep application or agent tracing

Not designed for complex workflow debugging

Pricing for Helicone starts at $0 (Hobby), $79/month (Pro), $799/month (Team), and custom pricing for Enterprise.

6. Braintrust

Braintrust provides production trace logging with structured metadata and monitoring integrations.

Best for: Teams that want trace visibility combined with structured monitoring workflows.

Key Features

Production trace capture with metadata logging

Token usage and latency tracking

Search and filtering across traces

Webhook-based monitoring integrations

Pros

Clean UI for exploring production traces

Alerting integrations with external systems

Broad framework compatibility

Cons

Less infrastructure-level telemetry depth

Limited agent workflow visualization

No self-hosting option

Pricing for Braintrust starts at $0/month (Free), $249/month (Pro), and custom pricing for Enterprise.

7. Datadog LLM Observability

Datadog LLM Observability extends Datadog’s APM platform to include LLM-specific telemetry within existing infrastructure monitoring.

Best for: Teams already using Datadog who want LLM visibility inside their current monitoring stack.

Key Features

LLM trace capture within APM

Token usage and latency monitoring

Unified dashboards across app and infra

Mature alerting configuration

Pros

No additional vendor for existing Datadog users

Enterprise-grade monitoring and alerting

Full-stack correlation with backend systems

Cons

Not purpose-built for AI debugging

Limited agent or workflow-level visualization

Pricing scales with trace volume

Pricing for Datadog LLM Observability starts at $8 per 10K monitored LLM requests per month (billed annually), or $12 on-demand, with a minimum of 100K LLM requests per month.
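Request-based pricing is easy to estimate with a few lines of arithmetic. The sketch below uses the rates quoted above; rounding partial 10K blocks up to the next whole block is an assumption, so check the vendor's billing rules before relying on the result.

```python
import math

def monthly_cost(requests: int, annual: bool = True) -> int:
    """Estimated monthly cost in USD for a given LLM request volume."""
    rate = 8 if annual else 12            # USD per 10K requests, as quoted above
    billable = max(requests, 100_000)     # 100K-request monthly minimum
    units = math.ceil(billable / 10_000)  # assumption: partial blocks round up
    return units * rate

print(monthly_cost(50_000))                 # 80  (minimum applies)
print(monthly_cost(250_000))                # 200
print(monthly_cost(250_000, annual=False))  # 300
```

The minimum means a team sending 50K requests a month pays the same as one sending 100K, which matters when comparing against tools with usage-based free tiers.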

Full Comparison Table

| Platform | Starting Price | Best For | Features That Stand Out |
| --- | --- | --- | --- |
| Confident AI | Free, unlimited traces and spans within 1 GB limit | Cross-functional teams needing evaluation-first observability | Quality-aware alerting, 50+ online evals, prompt drift detection |
| Langfuse | Free unlimited (self-host) | Engineering-led teams wanting open-source, self-hosted observability | OpenTelemetry-native tracing, 100+ framework integrations |
| LangSmith | Free with 1 user, 5k traces/month | Teams invested in LangChain ecosystem | Deep LangChain & LangGraph integration, agent graph visualization |
| Arize AI | Free, 25k spans/month | Large enterprises with technical ML/LLM teams | High-volume trace logging, framework-agnostic |
| Helicone | Free, 10k requests/month | Startups and small teams needing an LLM gateway | Unified AI gateway for multi-provider access, request-level cost/latency tracking |
| Braintrust | Free, 1M trace spans/month | Teams using CI/CD workflows for evaluation | Production tracing, webhook-based alerts, broad framework integrations |
| Datadog LLM Observability | Free plan available with limited metric retention | Teams already using Datadog | Unified LLM + infra monitoring, mature alerting, integrated dashboards |

Why Confident AI is the Best Choice for LLM Observability

Most LLM observability tools on this list solve the same problem: giving you visibility into what your AI is doing. Confident AI solves the problem that comes after — what do you do about it?

The difference is the iteration loop. Other platforms log traces and leave it to your team to manually build test sets, run evaluations in separate tools, and coordinate testing across engineering, product, and QA. Confident AI automatically curates datasets from production traces, runs quality-aware evaluations on incoming data, detects drift across prompts and use cases, and makes all of this accessible to non-technical team members without an engineering bottleneck.

The practical impact breaks down into three areas:

You stop duplicating your existing stack. Confident AI focuses on AI quality monitoring — not another tracing dashboard competing with your Datadog or New Relic setup. Every feature is oriented around measuring and improving AI output quality, not replicating infrastructure observability you already have.

You close the loop between production and development. Automatic dataset curation from traces, drift detection, and regression testing mean production insights directly feed into your development workflow. Most observability tools stop at logging. Confident AI turns those logs into actionable test sets and quality gates.

Your entire team participates in AI quality. Product managers trigger evaluations against production applications over HTTP and review the resulting traces. Domain experts annotate traces and conversation threads. QA teams run regression tests on traces through the UI. Engineering sets up the initial integration, then the whole organization contributes, instead of every evaluation decision routing through an engineer's calendar.

When Confident AI Might Not Be the Right Fit

Confident AI won't be the right fit for every team. Here are a few cases where another tool may serve you better:

If you need fully open-source: Confident AI offers enterprise self-hosting with publicly available deployment guides, but it's not open-source. If your organization mandates open-source tooling, Langfuse is the strongest alternative.

If you only need basic tracing: If your team's sole requirement is logging traces and monitoring costs without evaluation workflows, a lighter tool like Helicone or your existing Datadog setup may be sufficient. Confident AI's breadth is an advantage for teams that need it — but unnecessary overhead for teams that don't.

If you're fully committed to LangChain and never plan to change: LangSmith's native LangChain and LangGraph integration offers a tighter developer experience within that ecosystem. If your entire stack is LangChain today and will be LangChain tomorrow, that tight coupling is a feature rather than a limitation.

Frequently Asked Questions

What are LLM observability tools?

LLM observability tools help teams monitor, trace, and evaluate AI outputs in production. They track quality metrics such as faithfulness, relevance, safety, and multi-turn context, along with operational signals such as latency, token usage, and cost.

Why do I need an LLM observability platform?

Observability ensures your AI applications perform reliably, reduces errors in production, detects prompt or model drift, and enables faster iteration cycles — improving ROI and user trust.

Which LLM observability tools are most widely used?

Leading platforms include Confident AI, Langfuse, LangSmith, and Arize AI. Confident AI stands out for evaluation-first observability, multi-turn analysis, and quality-aware alerting. Langfuse is open-source and self-hosted, LangSmith integrates deeply with LangChain, and Arize AI scales for enterprise ML teams.

How does Confident AI compare to other observability tools?

Confident AI combines unified tracing, 50+ research-backed evaluation metrics, multi-turn monitoring, quality-aware alerting, and collaborative annotation. This ensures teams can detect AI quality issues in real time, shorten iteration cycles, and integrate production insights directly into development — unlike tools that only log traces or focus on infrastructure metrics.

Can LLM observability tools monitor multi-turn conversations?

Yes. Multi-turn evaluation is crucial for detecting context drift, hallucinations, and tool-selection errors that emerge across conversation threads. Confident AI and other advanced platforms support native multi-turn monitoring, not just single requests.

Are non-technical team members able to use LLM observability platforms?

Some platforms require engineering support, but Confident AI allows product managers, QA, and domain experts to review evaluations, annotate traces, and monitor AI quality independently.

Can LLM observability tools integrate with different frameworks?

Yes. Many support OpenAI, LangChain, Pydantic AI, and custom frameworks. Platforms like Confident AI are framework-agnostic and OpenTelemetry-compatible, ensuring consistent monitoring regardless of your stack.

How can observability tools improve ROI?

By alerting on AI quality degradation, detecting prompt or model drift, automating dataset curation, and consolidating evaluation and monitoring in one platform, teams reduce errors, shorten development cycles, and ship AI features faster.

How does LLM observability differ from traditional APM?

Traditional APM monitors application performance — latency, errors, and infrastructure health. LLM observability goes further, evaluating AI output quality, multi-turn conversations, hallucinations, and drift across prompts and models. Tools like Confident AI complement your APM by linking AI quality insights directly to production traces, rather than just logging tokens or response times.