Traditional monitoring doesn't catch AI failures. Your APM dashboard might show a 200 response in 1.2 seconds — but it won't tell you the model hallucinated a policy, leaked PII, or drifted off-topic mid-conversation.

That's the gap LLM monitoring tools fill. They trace prompts, completions, tool calls, and retrieval steps across your AI pipeline — then evaluate whether your application is actually performing well, not just responding.

The category is crowded though, and most platforms stop at logging and tracing. The most effective tools go further — scoring output quality, detecting safety risks, alerting on performance degradation, and making insights accessible to teams beyond engineering.

This guide compares the five most relevant LLM monitoring tools for production AI systems, evaluated on what actually drives long-term AI quality: metric depth, alerting maturity, pricing transparency, and cross-functional usability.

What Is AI Quality Monitoring?

AI quality monitoring is about more than just logging requests or counting tokens. It’s about measuring whether an AI system is behaving correctly, safely, and consistently over time, including:

Functional correctness

Safety metrics like PII leakage, bias, and hallucinations

Performance drift after prompt/template or model changes

Multi-turn conversation coherence

Cross-segment differences in quality

While most LLM observability tools focus on infrastructure signals (latency, resource usage, error codes), AI quality monitoring focuses on model behavior and output quality. It evaluates what the model is doing, not just whether it responded.

This distinction matters. Tracing alone doesn’t tell you if the model’s answer was correct or harmful. Alerts that only trigger on 500s or latency spikes miss the silent failures that erode user trust. AI quality monitoring bridges this gap by combining trace capture with research-backed evaluation metrics, so teams can track quality in production rather than just reading logs after the fact.

Our Evaluation Criteria

To compare the market’s leading LLM monitoring tools fairly — and to reflect what teams actually need in production — we evaluated each platform against five core criteria:

Quality-Aware Metrics Coverage

Does the tool go beyond basic tracing and logs? Top performers include:

Built-in quality metrics (e.g., hallucination, faithfulness)

Safety and bias detection

Research-backed and customizable scoring — not just token counts

Organizations need to agree on a set of metrics they can trust, not just logs they can see. This is where AI quality monitoring differentiates itself from traditional observability.

Real-Time Monitoring & Alerts

Monitoring is only useful if you know when something goes wrong. We assessed:

Real-time detection of quality drops

Customizable alert triggers on evaluation shifts

Drift & regression alarms (not just infrastructure alarms)

Some tools simply capture traces without alerting on quality; others send alerts when production quality degrades — a critical distinction.
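As a rough illustration of what alerting on quality (rather than on infrastructure) means, the sketch below fires when the rolling mean of an evaluation score drops below a threshold. The class name `EvalAlert`, the window size, and the sample scores are all hypothetical, and this is not any vendor's actual implementation.

```python
from collections import deque
from statistics import mean


class EvalAlert:
    """Fires when the rolling mean of an eval score drops below a threshold."""

    def __init__(self, metric, threshold, window=50, min_samples=10):
        self.metric = metric
        self.threshold = threshold
        self.min_samples = min_samples
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score):
        """Add one score; return True if the alert condition currently holds."""
        self.scores.append(score)
        if len(self.scores) < self.min_samples:
            return False  # too little data to judge yet
        return mean(self.scores) < self.threshold


# Hypothetical faithfulness scores arriving from production traffic.
alert = EvalAlert("faithfulness", threshold=0.8, window=5, min_samples=3)
fired = [alert.record(s) for s in [0.9, 0.95, 0.9, 0.5, 0.4, 0.3]]
print(fired)
```

Averaging over a window instead of alerting on single scores is a design choice: it trades a little latency for fewer false alarms from one-off bad responses.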

Prompt & Model Drift Detection

AI applications change constantly — prompts, models, and data distributions all shift. We looked for:

Prompt version tracking

Model comparison dashboards

Drift detection across time and user segments

Tools without drift monitoring may let silent regressions erode quality unnoticed.
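One simple way to picture drift detection across prompt versions is to compare the mean evaluation score of each version against a baseline. The sketch below assumes each production record already carries a prompt version label and a score; the function names, the 0.05 tolerance, and the sample data are illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_version(records):
    """Group (prompt_version, score) pairs and average the score per version."""
    grouped = defaultdict(list)
    for version, score in records:
        grouped[version].append(score)
    return {version: mean(scores) for version, scores in grouped.items()}


def find_regressions(records, baseline, max_drop=0.05):
    """Return versions whose mean score fell more than max_drop below the baseline."""
    means = mean_score_by_version(records)
    base = means[baseline]
    return {
        version: round(base - m, 3)
        for version, m in means.items()
        if version != baseline and base - m > max_drop
    }


# Hypothetical scores tagged with the prompt version that produced them.
records = [
    ("v1", 0.90), ("v1", 0.88),
    ("v2", 0.91), ("v2", 0.87),
    ("v3", 0.70), ("v3", 0.74),
]
regressions = find_regressions(records, baseline="v1")
print(regressions)
```

A production system would likely also segment by time window and user cohort, and use a statistical test rather than a fixed tolerance.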

Pricing Competitiveness & Retention

LLM monitoring pricing can be confusing and expensive. Key comparisons include:

Cost per GB or trace

Free tier generosity

Retention limits

For some platforms, retention is measured monthly and can expire quickly, while others offer flexible retention and more affordable GB-based pricing.

Workflows & Cross-Team Accessibility

Monitoring tools should serve more than just engineers. We evaluated:

Whether product and QA teams can investigate quality issues

Built-in workflows to annotate, review, and prioritize issues

Integration with development and CI pipelines

Platforms that let only engineers view traces or build test sets limit organization-wide quality ownership.

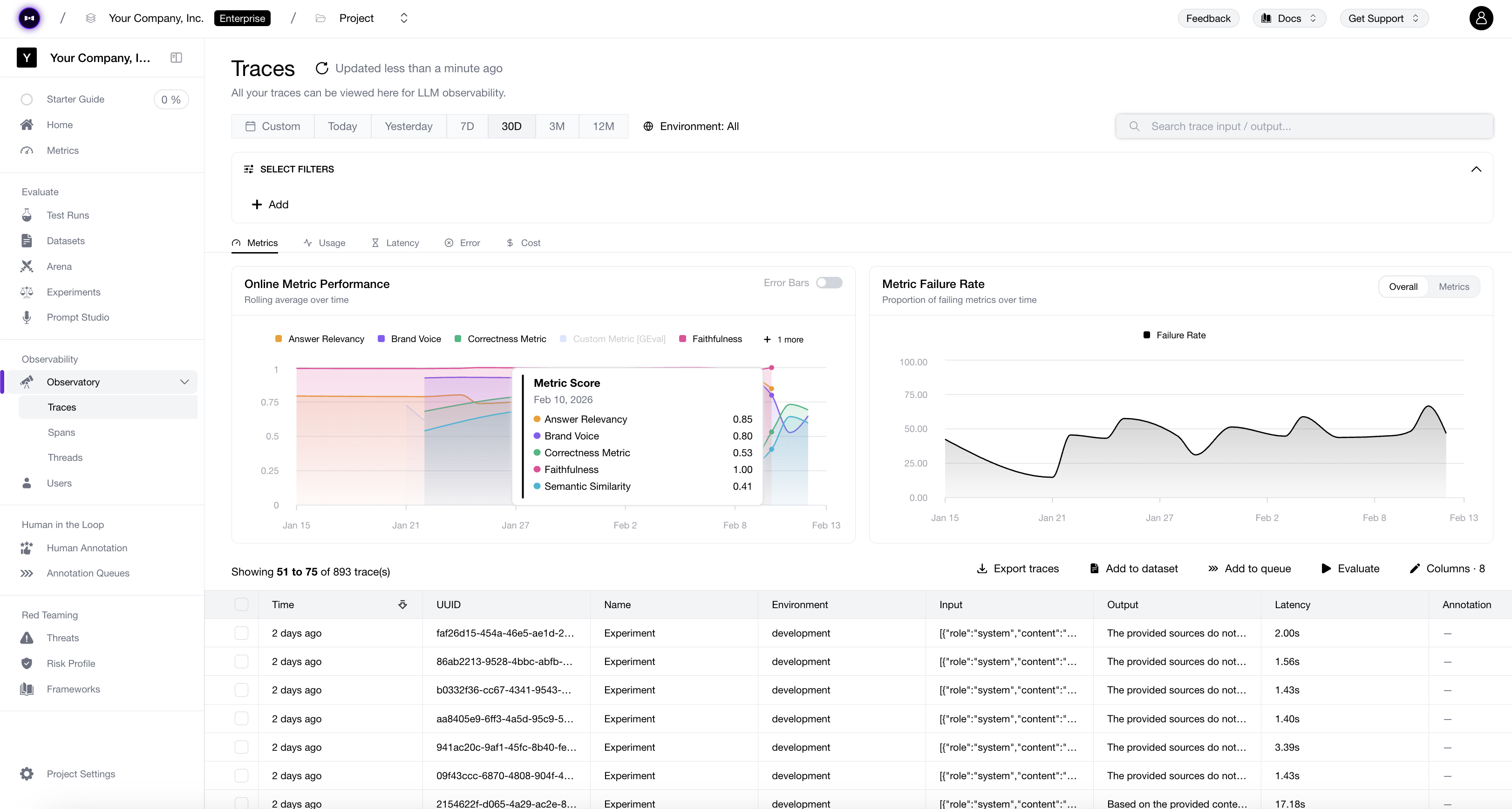

1. Confident AI

Confident AI centers LLM quality monitoring around evals and structured quality metrics rather than the kind of APM-style observability you'd get from something like Datadog. It brings together automated evaluation scoring, LLM tracing, vulnerability detection, and human feedback into one workspace — designed to help teams continuously measure and improve the quality of model outputs in production.

Pricing is straightforward at $1 per GB-month ingested or retained, with no caps on the number of traces and spans. The platform's metrics also integrate with DeepEval, a widely adopted open-source evaluation framework used by leading AI companies such as OpenAI, Google, and Microsoft. DeepEval powers Confident AI's 50+ research-backed metrics for monitoring things like faithfulness, relevance, and hallucination rates.

Customers include Panasonic, Toshiba, Amdocs, BCG, and CircleCI.

Best for: Cross-functional teams (engineering, QA, PMs) that want to treat AI quality as a continuous production concern, using evals, quality metrics, and alerting to catch regressions rather than relying on user complaints or manual spot-checks.

Key Capabilities

Online evals on production traffic: Using metrics from DeepEval, Confident AI automatically runs evals on traces, spans, and threads, and the resulting scores can feed downstream processing such as alerts.

Eval-driven alerting: Rather than alerting purely on latency or error rates, Confident AI triggers alerts when evaluation scores like faithfulness, relevance, or safety drop below thresholds you define.

Quality drift detection across prompt versions: Track how output quality shifts as prompts change over time, making it easy to pinpoint when and why a regression was introduced.

End-to-end tracing: OpenTelemetry-native with 10+ framework integrations, conversation-level tracing, and graph visualization to understand how outputs are generated across complex chains.

Production-to-eval pipeline: Traces are automatically curated into evaluation datasets, so your test coverage evolves alongside real production usage instead of relying on hand-crafted test cases.

Safety monitoring: Unique to Confident AI on this list. It continuously evaluates production traffic for toxicity, bias, and jailbreak vulnerabilities, giving governance teams real-time visibility into model safety without requiring separate tooling or manual audits.
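To make the production-to-eval pipeline idea in the list above more concrete, here is a minimal sketch of how low-scoring traces could be curated into a test dataset. It assumes each trace already has a faithfulness score attached; the `Trace` type, the 0.6 cutoff, and the de-duplication rule are hypothetical choices, not a description of how Confident AI does it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trace:
    user_input: str
    output: str
    faithfulness: float  # eval score in [0, 1], assumed already computed


def curate_dataset(traces, max_score=0.6, limit=100):
    """Pick low-scoring, de-duplicated production traces as future test cases."""
    seen_inputs = set()
    dataset = []
    for trace in sorted(traces, key=lambda t: t.faithfulness):  # worst first
        if trace.faithfulness > max_score or len(dataset) >= limit:
            break
        normalized = trace.user_input.strip().lower()
        if normalized in seen_inputs:
            continue  # skip repeats of the same user input
        seen_inputs.add(normalized)
        dataset.append({"input": trace.user_input, "observed_output": trace.output})
    return dataset


# Hypothetical production traces.
traces = [
    Trace("What is the refund window?", "90 days", 0.2),
    Trace("what is the refund window? ", "60 days", 0.4),
    Trace("How do I reset my password?", "Use the settings page", 0.95),
    Trace("Do you ship abroad?", "Yes, everywhere", 0.5),
]
dataset = curate_dataset(traces)
print(dataset)
```

The curated items still need a reviewed expected output before they are useful as regression tests, which is where human annotation typically comes in.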

Pros

Built around eval scores and quality metrics by DeepEval as first-class signals — not another APM dashboard with an AI label.

Its metrics are open-sourced through DeepEval and adopted by top AI companies such as Google, OpenAI, and Microsoft.

Custom code to transform traces, spans, and threads prior to running online evals.

Automatically turns production data into eval datasets, keeping your test coverage aligned with how your system is actually being used

Designed for cross-functional teams — reviewers and domain experts can annotate and flag issues without engineering support

Replaces the need to stitch together separate tools for evals, tracing, red teaming, and simulation

Cons

Cloud-based and not open-source, though enterprise self-hosting is available — teams committed to open-source may prefer Langfuse or Phoenix

The breadth of the platform may be more than what's needed if your use case only calls for lightweight trace inspection

Usage-based pricing at $1/GB is the cheapest on the list, but teams new to this kind of tooling may need a ramp-up period to forecast costs

Pricing starts at $0 (Free), $19.99/seat/month (Starter), $79.99/seat/month (Premium), with custom pricing for Team and Enterprise plans.
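For teams trying to forecast usage-based costs, a back-of-the-envelope estimate can help. The sketch below applies the $1 per GB-month rate quoted above under a simplifying assumption: you are billed for the data held in storage each month once retention has filled up. The actual billing rules may differ, and the traffic figures are made up.

```python
def estimate_monthly_cost(traces_per_day, avg_trace_kb, retention_months, rate_per_gb_month=1.0):
    """Rough steady-state monthly cost under GB-month pricing (assumed billing model)."""
    # Convert daily trace volume to decimal gigabytes ingested per 30-day month.
    ingested_gb_per_month = traces_per_day * 30 * avg_trace_kb / 1_000_000
    # Once retention has filled up, this much data is held at any time.
    stored_gb = ingested_gb_per_month * retention_months
    return round(stored_gb * rate_per_gb_month, 2)


# Hypothetical workload: 50k traces/day at ~20 KB each, kept for 3 months.
cost = estimate_monthly_cost(traces_per_day=50_000, avg_trace_kb=20, retention_months=3)
print(cost)
```

Under these assumptions the workload ingests 30 GB per month and holds 90 GB at steady state, so the estimate is dominated by the retention period rather than by trace count.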

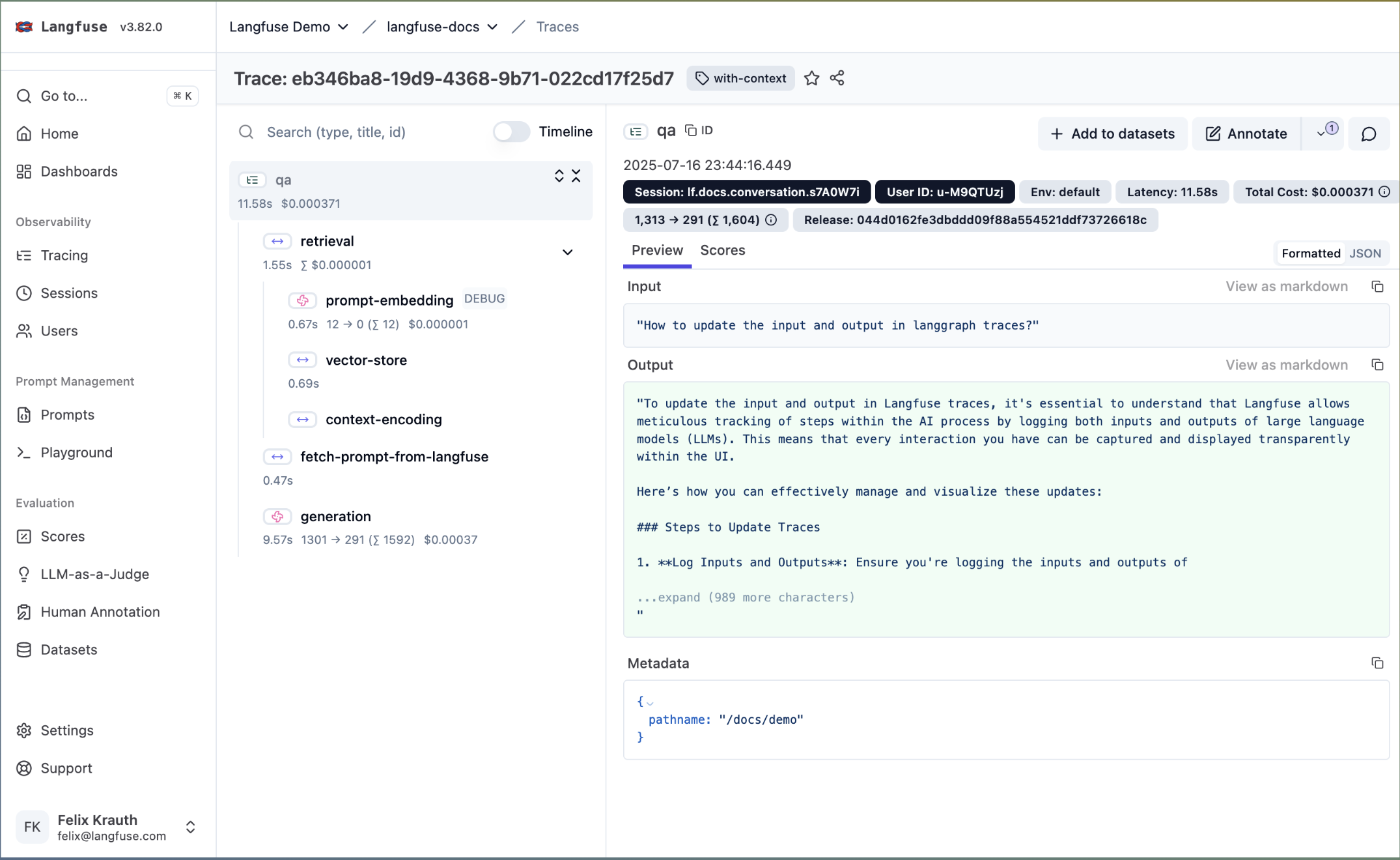

2. Langfuse

Langfuse stands out as a fully open-source tracing and cost-tracking platform for LLM applications, built on industry standards such as OpenTelemetry and offering a wide range of integrations. It gives engineering teams granular visibility into what their models are doing (traces, token spend, latency) but leaves quality evaluation largely to external tooling or custom implementation.

Best for: Engineering teams that want full infrastructure control over their tracing data and are comfortable building their own quality monitoring layer on top.

Key Features

OpenTelemetry-native trace capture covering prompts, completions, metadata, and latency breakdowns

Multi-turn conversation grouping at the session level

Token usage dashboards with cost attribution across models and environments

Searchable trace explorer for debugging production issues

Pros

Fully open-source with self-hosting, giving teams complete ownership over sensitive production data

Strong OpenTelemetry foundation makes it easy to integrate into existing infrastructure

Good fit for teams that already have internal eval pipelines and just need a tracing backbone

Cons

Limited eval metrics or quality scoring — if you want to monitor faithfulness, relevance, or hallucination rates, you'll need to bring your own custom LLM-as-a-judge implementation

Lacks native alerting, so there's no way to get notified when output quality degrades without building custom integrations

More of a tracing tool than a quality monitoring platform — teams looking for eval-driven insights will need to supplement it

Pricing starts at $0 (Free), $29.99/month (Core), $199/month (Pro), $2,499/year for Enterprise.

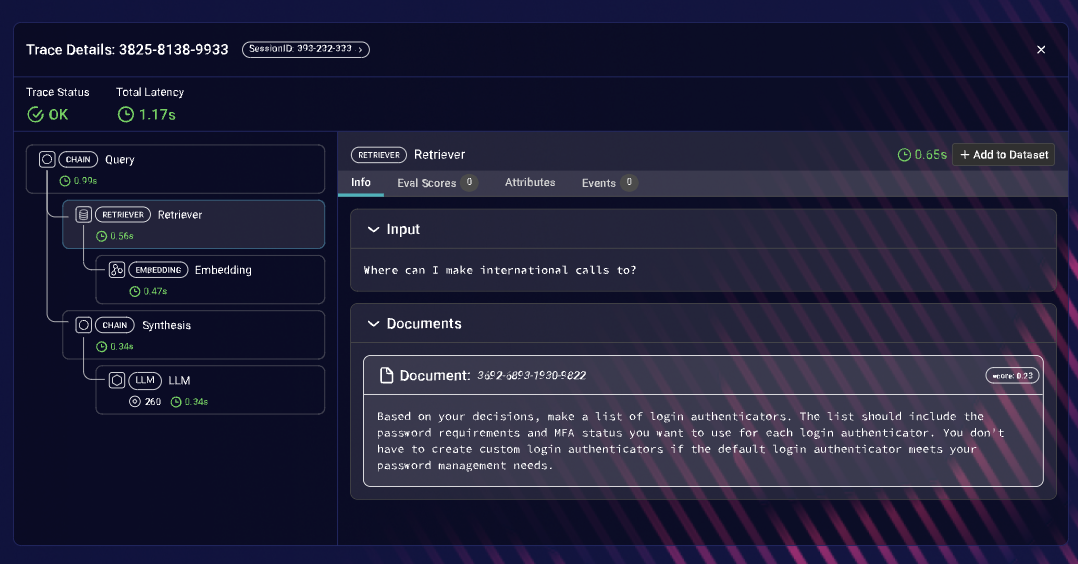

3. Arize AI

Arize AI comes from an ML monitoring background and has expanded into LLM observability, bringing enterprise-scale infrastructure to AI quality monitoring. It offers span-level tracing, real-time dashboards, and agent workflow visualization — though its strength leans more toward operational telemetry than eval-driven quality insights.

Best for: Large engineering organizations already invested in ML monitoring that need to extend their existing infrastructure to cover LLM workloads at scale.

Key Features

Span-level tracing with custom metadata tagging for granular production debugging

Real-time performance dashboards tracking latency, error rates, and token consumption

Visual agent workflow maps for understanding multi-step LLM pipelines

Flexible trace querying and filtering for root cause analysis

Pros

Battle-tested at enterprise scale — handles high-throughput production environments well

Real-time telemetry gives immediate visibility into operational health

Natural fit for organizations that already use Arize for traditional ML monitoring

Cons

Quality monitoring is more infrastructure-oriented than eval-oriented — teams wanting to track metrics like faithfulness or hallucination rates will find less out of the box compared to evaluation-first platforms

Interface and workflows are designed for technical users, which can limit involvement from cross-functional team members like PMs or QA

Can be complex to set up and configure for smaller teams that don't need enterprise-grade infrastructure

Advanced capabilities are locked behind commercial tiers, with only 14 days of retention offered even on paid tiers

Pricing starts at $0 (Phoenix, open-source), $0 (AX Free), $50/month (AX Pro), with custom pricing for AX Enterprise.

4. Helicone

Helicone stands out because it takes a gateway-first approach to AI monitoring, sitting between your application and LLM providers to capture request-level data. This gives it strong visibility into model calls, cost, and provider performance — but because it operates at the gateway level, monitoring is scoped to individual model requests rather than full application traces or complex agent workflows. It does offer some built-in scoring capabilities, though its evaluation features are limited compared to dedicated eval platforms.

Best for: Teams juggling multiple LLM providers that want a single pane of glass for cost tracking, request logging, and lightweight quality scoring without heavy instrumentation.

Key Features

AI gateway supporting 100+ LLM providers with unified request logging

Prompt and completion capture at the model request level

Cost attribution, latency tracking, and budget threshold alerts

Built-in scorers for basic quality checks on model outputs

Pros

Excellent multi-provider visibility — useful for teams that need to compare performance and cost across models

Minimal setup since the gateway handles instrumentation automatically

Solid option for teams that want cost monitoring and basic quality scoring in one place

Cons

Gateway architecture means monitoring is limited to the model request level — you won't get visibility into how outputs flow through your broader application or agent chains

Evaluation capabilities exist but are shallow compared to eval-first platforms — teams with serious quality monitoring needs will likely outgrow them

Not suited for debugging complex multi-step workflows or tracing issues across an entire LLM pipeline

Adding a gateway introduces an extra layer in your infrastructure that some teams may want to avoid

Pricing starts at $0 (Hobby), $79/month (Pro), $799/month (Team), with custom pricing for Enterprise.

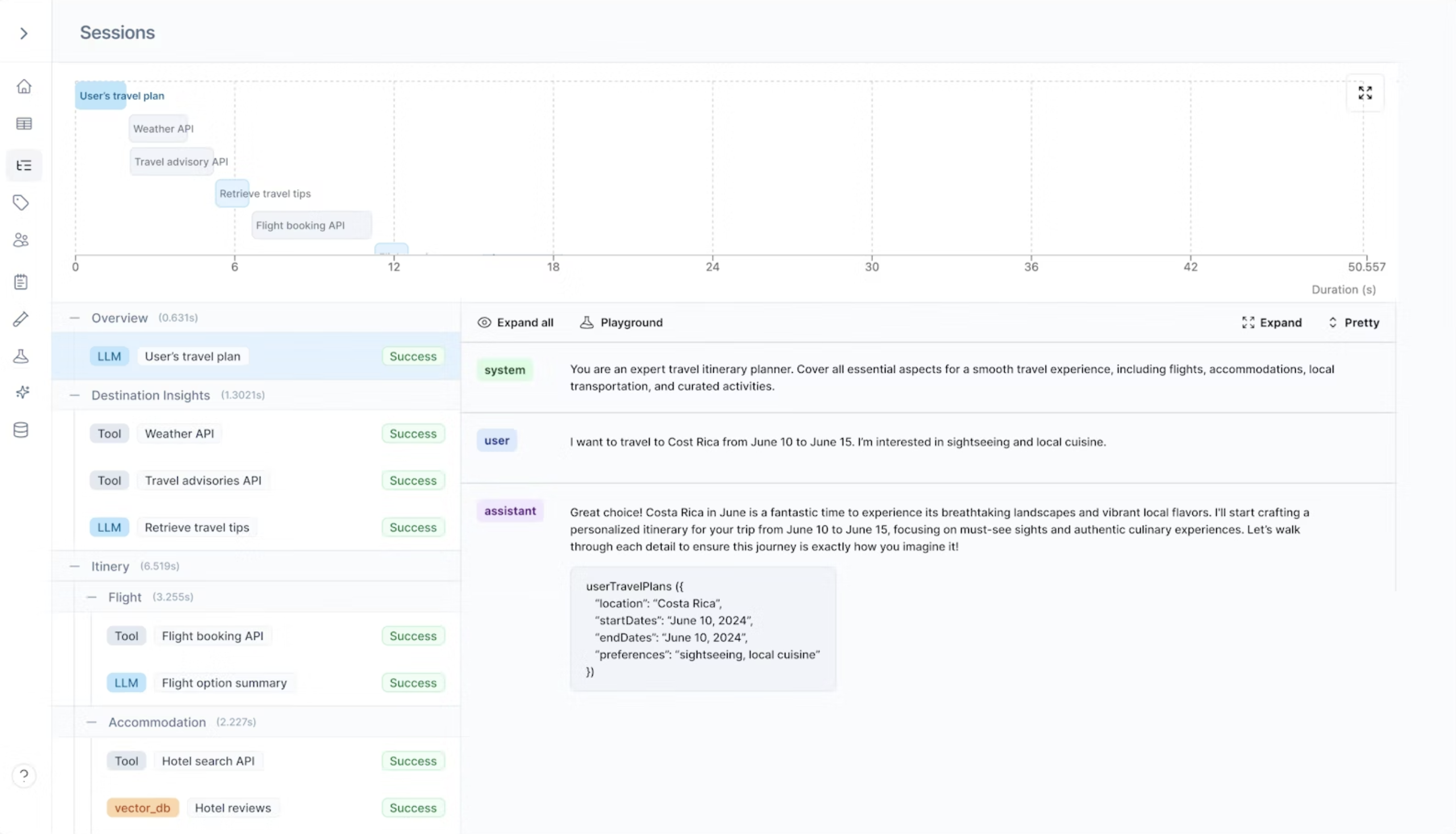

5. LangSmith

LangSmith is a managed observability platform from the LangChain team that provides tracing and debugging for LangChain-based applications. It's essentially a closed-source alternative to Langfuse, tightly coupled to the LangChain ecosystem.

While it offers some evaluation features, its quality monitoring capabilities are limited — LLM-as-a-judge requires custom implementation, and there's no deep library of pre-built eval metrics to draw from.


Best for: Teams that are deeply committed to LangChain and want native tracing without the overhead of self-hosting — and don't need advanced evaluation workflows.

Key Features

Native trace capture for LangChain and LangGraph applications

Agent execution graph visualization for debugging multi-step chains

Token usage and latency monitoring across runs

Trace search and filtering for production debugging

Pros

Seamless integration if your stack is already built on LangChain

Managed infrastructure means no self-hosting burden

Agent execution visualization is clear and useful for understanding chain behavior

Cons

Tightly coupled to LangChain — observability quality drops significantly for non-LangChain components, making it a poor fit for mixed or framework-agnostic stacks

Evaluation support is thin — no robust built-in eval metrics, and setting up LLM-as-a-judge scoring requires custom work

No self-hosting option, which limits data control for security-conscious teams

One of the more expensive options on this list, with seat-based pricing that restricts access for cross-functional teams — PMs, QA, and domain experts may get priced out

Doesn't offer much beyond what Langfuse provides, minus the open-source flexibility

Pricing starts at $0 (Developer), $39/seat/month (Plus), with custom pricing for Enterprise.

Top LLM Monitoring Tools Comparison Table

To help you decide, here's how each platform compares across features needed for a robust LLM monitoring tool:

Feature

Confident AI

Langfuse

Arize AI

Helicone

LangSmith

Built-in eval metrics

50+ via DeepEval

Limited, custom LLM-as-a-judge

Supported

Basic scorers

Limited, custom LLM-as-a-judge

Eval-driven alerting

Safety monitoring

Agent and RAG monitoring

Multi-turn, conversation monitoring

Production-to-eval pipeline

Limited

Limited

Limited

Prompt drift detection

Additionally, here's how each platform compares across pricing, use case, and standout features:

| Platform | Starting Price | Best For | Features That Stand Out |
| --- | --- | --- | --- |
| Confident AI | Free, unlimited traces and spans within 1 GB limit | Cross-functional teams needing evaluation-first observability | Quality-aware alerting, 50+ online evals, prompt drift detection |
| Langfuse | Free unlimited (self-host) | Engineering-led teams wanting open-source, self-hosted observability | OpenTelemetry-native tracing, 100+ framework integrations |
| LangSmith | Free with 1 user, 5k traces/month | Teams invested in LangChain ecosystem | Deep LangChain & LangGraph integration, agent graph visualization |
| Arize AI | Free, 25k spans/month | Large enterprises with technical ML/LLM teams | High-volume trace logging, framework-agnostic |
| Helicone | Free, 10k requests/month | Startup & small teams needing LLM gateway | Unified AI gateway for multi-provider access, request-level cost/latency tracking |

How to Choose the Best LLM Monitoring Tool

The biggest decision when choosing an LLM monitoring tool comes down to what you actually want to monitor. If your priority is operational health — latency, uptime, error rates, token costs — most tools will suffice, and general-purpose APM platforms like Datadog may already cover you. But if you care about output quality, the field narrows quickly. Here are some key questions to ask:

Evals or just traces? Tracing shows what happened. Evals show whether it was any good. Many tools offer strong tracing but treat evaluation as an afterthought, requiring custom work to measure faithfulness, relevance, or hallucinations. If quality matters, choose a platform where evals are first-class, or even open-sourced through a tool like DeepEval.

Quality or safety — or both? Some teams only need to monitor output quality. Others need to prove their models are safe — tracking toxicity, bias, and jailbreak susceptibility for compliance or executive reporting. Most tools treat safety as a separate concern. Look for platforms that evaluate quality and safety within the same workflow.

How framework-dependent are you? Tools like LangSmith integrate deeply with their own ecosystems but lose value outside them. If your stack is mixed or evolving, a framework-agnostic solution reduces migration risk.

Where does monitoring sit? Gateway tools like Helicone provide easy setup and request-level visibility. But understanding multi-step agents or workflow-wide quality issues requires deeper, application-level tracing.

Open-source or managed? Self-hosting offers control but adds overhead. Managed platforms reduce ops burden but may limit flexibility. Some tools like Langfuse offer both, though out-of-the-box quality depth varies. If you need open-source eval metrics, Confident AI provides 50+ research-backed metrics through DeepEval, which is adopted by companies such as OpenAI, Google, and Microsoft.

Once you're sure of your criteria, you should narrow it down based on your company and the maturity of AI adoption:

For enterprises with compliance and safety requirements, Confident AI provides eval-driven monitoring that covers both output quality and safety — giving data governance teams visibility into model safety metrics and giving CTOs the evidence they need to prove it. Enterprise self-hosting is also available.

For growth-stage startups and SMBs focused on shipping fast, Confident AI consolidates evals, tracing, alerting, and human review into one platform, with no need to stitch together multiple tools. At $1/GB with no caps on trace or span volume, it's also the most cost-effective option as you scale.

For early-stage startups, Confident AI's free tier provides a starting point to grow into.

For RAG and agent workflows, Confident AI provides 50+ metrics through DeepEval covering faithfulness, context relevance, and correctness across multi-step chains.

For multi-turn conversational systems, Confident AI pairs session-level tracing with conversation-aware eval scoring, taking into account tool calling in agents and RAG context during monitoring.

For red teaming and safety monitoring, Confident AI runs jailbreak detection, toxicity, and bias checks natively, without external tooling.

For cross-functional teams where PMs, QA, and domain experts need to participate in quality workflows alongside engineers, Confident AI supports this with human annotation workflows, shared dashboards, and role-based access designed to make quality monitoring a team-wide effort rather than an engineering-only concern.

Most teams start with tracing and cost tracking, then realize the real challenge is knowing whether their AI is performing well. Pick a tool that treats quality monitoring as the core, not a bolt-on; otherwise you might find yourself paying multiple vendors that do the same thing.

Why Confident AI is the Best Tool for LLM Monitoring

Most LLM monitoring tools started as tracing platforms and bolted on evaluation later. Confident AI took the opposite approach — built from the ground up around AI quality. Evals, quality metrics, and best-in-class LLM observability live in the same workflow, so teams aren't just seeing what their models did — they're measuring whether the outputs were any good.

This matters because the hardest part of running LLMs in production isn't tracking requests. It's knowing whether responses were faithful, relevant, safe, and useful. Confident AI's 50+ evaluation metrics — open-sourced through DeepEval and used by OpenAI, Google, and Microsoft — run directly on production traffic. When quality drops, eval-driven alerts catch it. Production traces automatically become test datasets. The loop between monitoring and improving gets tighter with every deployment.

Quality monitoring also shouldn't be an engineering-only concern. PMs need to track output trends. QA needs to catch regressions. Domain experts need to flag edge cases. Confident AI brings everyone into one workspace through annotation workflows, shared dashboards, and role-based access. For teams that need to prove model safety for compliance or executive reporting, red teaming and safety checks run natively — no extra tooling needed.

At $1/GB with no caps on evaluation volume, it's the most cost-effective option on this list. From early-stage startups on the free tier to enterprises needing self-hosting, Confident AI scales without requiring separate vendors for tracing, evals, alerting, safety, and review. One platform, focused on what actually matters — the quality of your AI.

Frequently Asked Questions

What are LLM monitoring tools?

LLM monitoring tools track the quality, safety, and performance of model outputs in production. Unlike traditional monitoring that focuses on uptime and latency, LLM monitoring measures whether responses are faithful, relevant, and safe — combining tracing with evaluation to give a complete picture.

Why do I need an LLM monitoring tool?

LLMs are non-deterministic — quality can degrade silently as models update, prompts change, or user behavior shifts. Without monitoring, you only find out through user complaints. A dedicated tool gives you continuous visibility into output quality so you catch issues as they happen.

Which LLM monitoring tools are most widely used?

The most widely used in 2026 include Confident AI, Langfuse, Arize AI, Helicone, and LangSmith. Confident AI leads on evaluation depth with 50+ metrics open-sourced through DeepEval, used by OpenAI, Google, and Microsoft. Langfuse is popular for open-source tracing, Arize for enterprise telemetry, Helicone for cost monitoring, and LangSmith for LangChain-native workflows.

How does Confident AI compare to other LLM monitoring tools?

Most tools started as tracing platforms and added evaluation later. Confident AI was built around quality from the start — evals, metrics, and observability in one workflow. It offers eval-driven alerting, automatic dataset curation from traces, and native safety monitoring. At $1/GB with no evaluation caps, it's the most cost-effective option for teams that want everything in one platform.

How does LLM monitoring differ from traditional APM?

APM tools like Datadog monitor infrastructure — latency, uptime, error rates. LLM monitoring measures output quality. A model can return a 200 response in 50ms and still hallucinate or produce unsafe content. LLM monitoring evaluates the actual content using metrics like faithfulness and safety — things APM was never designed to capture.

What metrics should I track when monitoring LLMs in production?

At minimum: faithfulness (is the output grounded in context), relevance (does it answer the question), and safety (is it free from toxicity or bias). For RAG systems, add context relevance and answer correctness. For multi-turn apps, track conversational coherence. Operational metrics like latency and cost still matter but shouldn't be your only signals.

What is the difference between LLM tracing and LLM evaluation?

Tracing captures what happened — prompts, completions, latency, token usage, data flow. Evaluation scores whether it was good. Tracing tells you five chunks were retrieved and a response generated in 800ms. Evaluation tells you whether those chunks were relevant and the response faithful. Both matter, but quality monitoring requires evaluation — tracing alone isn't enough.
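The split between tracing and evaluation can be sketched with a small LLM-as-a-judge harness: the trace holds what happened, and a judge call adds a score for whether it was good. Everything here is an assumption for illustration: the prompt wording, the `Judge` type, and `stub_judge`, which stands in for a real model call so the example runs offline.

```python
from typing import Callable

Judge = Callable[[str], str]  # takes a prompt, returns the judge model's raw reply


def faithfulness_score(context: str, answer: str, judge: Judge) -> float:
    """Ask a judge model to rate how well `answer` is grounded in `context` (0 to 1)."""
    prompt = (
        "Rate from 0 to 1 how fully the ANSWER is supported by the CONTEXT. "
        "Reply with only the number.\n"
        f"CONTEXT: {context}\nANSWER: {answer}"
    )
    reply = judge(prompt)
    try:
        score = float(reply.strip())
    except ValueError:
        raise ValueError(f"judge returned a non-numeric reply: {reply!r}") from None
    return min(1.0, max(0.0, score))  # clamp out-of-range replies


def stub_judge(prompt: str) -> str:
    """Stand-in for a real model call; always returns a fixed rating."""
    return " 0.35 "


# What tracing records: operational facts about the request.
trace = {"latency_ms": 800, "chunks_retrieved": 5}
# What evaluation adds: a judgment about the content itself.
trace["faithfulness"] = faithfulness_score(
    "Refunds are accepted within 30 days.",
    "Refunds are accepted within 90 days.",
    stub_judge,
)
print(trace)
```

In practice the judge would be a call to a hosted model, and a single numeric reply is the simplest possible protocol; structured outputs with a written rationale are usually easier to audit.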

Can LLM monitoring tools evaluate RAG pipelines and agents?

Depth varies significantly. RAG and agent workflows need metrics across multiple steps — retrieval relevance, context utilization, faithfulness, and end-to-end correctness. Confident AI covers this through DeepEval's 50+ metrics out of the box. Most other tools require custom implementation for similar coverage.

Can LLM monitoring tools monitor multi-turn conversations?

Some tools support session-level grouping, but true multi-turn monitoring requires conversation-aware evaluation — measuring coherence, context retention, and task completion across the full interaction. Confident AI pairs session-level tracing with conversation-aware eval scoring to handle this natively.
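As a simplified picture of the difference, the sketch below first groups turns by session (the part many tools support) and then aggregates per-turn scores into a conversation-level score. Taking the worst turn as the conversation score is just one illustrative choice; real conversation-aware metrics look at coherence and context retention across turns. The field names and sample data are hypothetical.

```python
from collections import defaultdict


def group_by_session(turns):
    """Group turn records by session_id, ordered by turn index."""
    sessions = defaultdict(list)
    for turn in turns:
        sessions[turn["session_id"]].append(turn)
    return {sid: sorted(ts, key=lambda t: t["turn"]) for sid, ts in sessions.items()}


def conversation_score(session_turns):
    """Score a conversation by its weakest turn, so one bad reply is not averaged away."""
    return min(t["relevance"] for t in session_turns)


# Hypothetical per-turn relevance scores, arriving out of order.
turns = [
    {"session_id": "a", "turn": 2, "relevance": 0.4},
    {"session_id": "a", "turn": 1, "relevance": 0.9},
    {"session_id": "b", "turn": 1, "relevance": 0.8},
]
sessions = group_by_session(turns)
scores = {sid: conversation_score(ts) for sid, ts in sessions.items()}
print(scores)
```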

What is eval-driven alerting?

Eval-driven alerting triggers notifications when evaluation scores drop — not just when latency spikes or errors increase. It fires when faithfulness, relevance, or safety fall below thresholds you set, catching quality regressions that traditional monitoring misses entirely. Confident AI supports this natively, running evals on production traffic and alerting based on the results.