A week after the famous, or infamous, OpenAI Dev Day, we at Confident AI released JudgementalGPT, an LLM agent built with OpenAI's Assistants API and designed specifically to evaluate other LLM applications. What started as an experimental idea quickly became a prototype we were eager to ship, as users told us that JudgementalGPT gave more accurate and reliable results than other state-of-the-art LLM-based evaluation approaches such as G-Eval.

Understandably, given that Confident AI is the world's first open-source evaluation infrastructure for LLMs, many people asked for more transparency into how JudgementalGPT was built after our initial public release:

I thought it's all open source, but it seems like JudgementalGPT, in particular, is a black box for users. It would be great if we had more knowledge on how this is built.

So here you go, dear anonymous internet stranger: this article is dedicated to you.

Limitations of LLM-based Evaluations

The authors of G-Eval state that:

Conventional reference-based metrics, such as BLEU and ROUGE, have been shown to have relatively low correlation with human judgments, especially for tasks that require creativity and diversity.
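To make the quote concrete, here is a simplified ROUGE-1 (unigram overlap) sketch. It is not the official ROUGE implementation, which adds stemming, punctuation handling and other variants, but it shows how a paraphrase with the same meaning as the reference can still score poorly, which is exactly why such metrics struggle with creative or diverse outputs.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: clipped unigram overlap between two texts.

    Tokenization here is just lowercasing plus whitespace splitting.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word,
    # so a word only counts as often as it appears in both texts.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
print(rouge1_f1("the cat sat on the mat", reference))  # 1.0
# Same meaning, different words: only "the" overlaps.
print(round(rouge1_f1("a feline rested upon the rug", reference), 3))  # 0.167
```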

For those who don't already know, G-Eval is a framework that uses large language models (LLMs) with chain-of-thought (CoT) reasoning to evaluate the quality of generated text in a form-filling paradigm. If you've ever tried implementing your own version, you'll quickly find that using LLMs for evaluation comes with its own set of problems:

Unreliability - although G-Eval uses a low-precision grading scale (1–5), which makes scores easier to interpret, these scores can vary a lot even under identical evaluation conditions. This variability comes from an intermediate step in G-Eval that dynamically generates the evaluation steps used later on, which adds stochasticity to the final scores (and is also why providing an initial seed value doesn't help).

Inaccuracy - for certain tasks, one digit usually dominates (e.g., 3 on a 1–5 grading scale when using gpt-3.5-turbo). One way around this is to use the probabilities the LLM assigns to each candidate score token to normalize the scores, and then take their probability-weighted sum as the final score. Unfortunately, this isn't an option if you're using OpenAI's GPT models as an evaluator, since OpenAI deprecated the logprobs parameter a few months ago.
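Here's a minimal sketch of that probability-weighted score, i.e. the sum over candidate scores of p(score) × score. It assumes you already have a log probability for each candidate score token (the `score_logprobs` dict is a hypothetical input format; how you obtain these values depends on your model provider).

```python
import math


def weighted_score(score_logprobs: dict[int, float]) -> float:
    """Probability-weighted score: sum_i p(s_i) * s_i.

    `score_logprobs` maps each candidate score (e.g. 1..5) to the log
    probability the judge LLM assigned to that score's token.
    """
    if not score_logprobs:
        raise ValueError("need at least one candidate score")
    # Convert log probabilities back to probabilities.
    probs = {score: math.exp(lp) for score, lp in score_logprobs.items()}
    # Renormalize over the score tokens only, since the model may have put
    # some probability mass on unrelated tokens.
    total = sum(probs.values())
    return sum(score * p / total for score, p in probs.items())


# Hypothetical distribution where "3" would win if we only kept the top token.
example = {1: math.log(0.05), 2: math.log(0.15), 3: math.log(0.5),
           4: math.log(0.25), 5: math.log(0.05)}
print(round(weighted_score(example), 2))  # 3.1
```

Instead of collapsing to a flat 3, the final score keeps the information that the judge leaned slightly toward 4.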

In fact, another paper that explored LLM-as-a-judge pointed out that using LLMs as evaluators is flawed in several ways. For example, GPT-4 gives preferential treatment to its own outputs, is not very good at math (but neither am I), and is prone to verbosity bias: it favors longer, more verbose responses over accurate, shorter alternatives. An initial study found that GPT-4 exhibits verbosity bias 8.75% of the time.
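To get a feel for what a number like "8.75% of the time" measures, here's a minimal sketch of a verbosity-bias check. This is not the protocol from the paper; `judge` is a hypothetical wrapper around an LLM-as-a-judge call that returns "A" or "B", and each test pair holds a concise answer alongside a padded version that adds no useful information.

```python
from typing import Callable


def verbosity_bias_rate(
    pairs: list[tuple[str, str, str]],
    judge: Callable[[str, str, str], str],
) -> float:
    """Fraction of trials in which the judge prefers the padded answer.

    Each pair is (question, concise_answer, verbose_answer).
    `judge(question, answer_a, answer_b)` must return "A" or "B".
    """
    if not pairs:
        raise ValueError("need at least one pair")
    verbose_wins = 0
    trials = 0
    for question, concise, verbose in pairs:
        # Judge both orderings so a preference for the first or second
        # slot isn't mistaken for a preference for length.
        if judge(question, concise, verbose) == "B":
            verbose_wins += 1
        if judge(question, verbose, concise) == "A":
            verbose_wins += 1
        trials += 2
    return verbose_wins / trials


def length_loving_judge(question: str, a: str, b: str) -> str:
    # Stand-in judge that always picks the longer answer (worst case).
    return "A" if len(a) >= len(b) else "B"


pairs = [
    ("What is 2 + 2?", "4.",
     "The answer, after careful consideration of the question, is 4."),
    ("Capital of France?", "Paris.",
     "The capital of France, a country in Western Europe, is Paris."),
]
print(verbosity_bias_rate(pairs, length_loving_judge))  # 1.0
```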

Can you see how this becomes a problem if you're trying to evaluate a summarization task?

OpenAI Assistants offer a workaround to existing problems

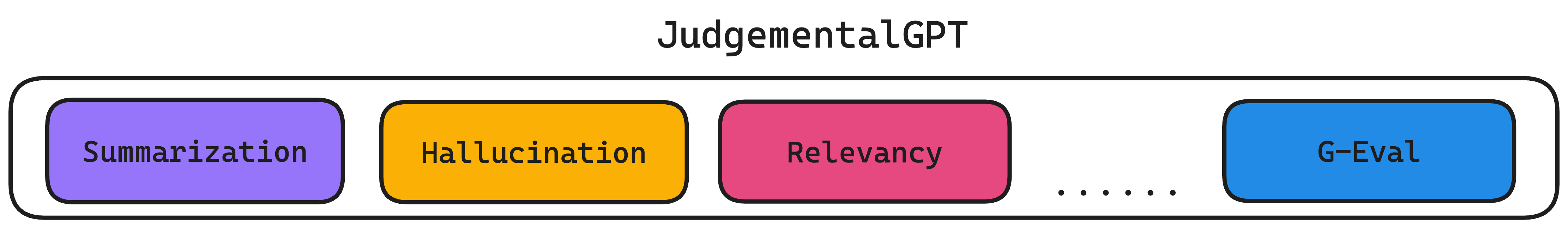

Here's a surprise: JudgementalGPT isn't a single evaluator built with the new OpenAI Assistants API, but several. That's right, behind the scenes, JudgementalGPT is a proxy for multiple assistants that perform different evaluations depending on the evaluation task at hand. Here are the problems JudgementalGPT was designed to solve:

Bias - we're still experimenting with this (another reason for keeping JudgementalGPT closed-source!), but assistants can write and execute code using the code interpreter tool. With a bit of prompt engineering, this lets them handle tasks that are more prone to logical errors, such as checking answers to coding or math problems, and tasks that demand factual accuracy, rather than giving preferential treatment to their own outputs.

Reliability - since we no longer require LLMs to dynamically generate CoTs/evaluation steps, we can enforce a set of rules for specific evaluation tasks. In other words, because we've pre-defined multiple sets of evaluation steps based on the evaluation task at hand, we've removed the biggest source of stochasticity.

Accuracy - having a set of pre-defined evaluation steps for different tasks also means we can provide more guidance based on what we, as humans, actually expect from each evaluator, and quickly iterate on the implementation based on user feedback.
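To illustrate the proxy idea, here's a minimal sketch of routing a task to an evaluator with fixed, human-written steps, falling back to a G-Eval-style evaluator when no match exists (the fallback the next paragraph describes). The task names, steps, and `Evaluator` fields are hypothetical, not JudgementalGPT's actual configuration, and the sketch doesn't call OpenAI. With the Assistants API, each evaluator would be an assistant, and the code interpreter is enabled by including `{"type": "code_interpreter"}` in the assistant's `tools`.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Evaluator:
    name: str
    # Fixed evaluation steps: nothing is generated per call, which removes
    # the main source of score variance.
    steps: tuple[str, ...]
    # Whether this evaluator should run code (e.g. to re-check math).
    use_code_interpreter: bool = False


# Hypothetical registry mapping evaluation tasks to pre-defined evaluators.
EVALUATORS: dict[str, Evaluator] = {
    "summarization": Evaluator(
        name="summarization",
        steps=(
            "Check that every claim in the summary is supported by the source.",
            "Penalize important points from the source that are missing.",
            "Do not reward length on its own.",
        ),
    ),
    "math": Evaluator(
        name="math",
        steps=(
            "Recompute the final answer by writing and running code.",
            "Compare the recomputed answer with the response's answer.",
        ),
        use_code_interpreter=True,
    ),
}


def route(task: str, fallback: Callable[[str], Evaluator]) -> Evaluator:
    """Return the pre-defined evaluator for `task`, or the fallback's."""
    evaluator = EVALUATORS.get(task)
    return evaluator if evaluator is not None else fallback(task)


def g_eval_fallback(task: str) -> Evaluator:
    # Placeholder: a real G-Eval fallback would ask an LLM to generate the
    # evaluation steps, i.e. the stochastic step the registry avoids.
    return Evaluator(name=f"g-eval:{task}", steps=())


print(route("math", g_eval_fallback).use_code_interpreter)  # True
print(route("translation", g_eval_fallback).name)  # g-eval:translation
```

Keeping the steps in plain data also makes the "quickly iterate based on user feedback" part cheap: changing an evaluator means editing a list of strings rather than hoping a generated CoT comes out better next time.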

Another insight we gained while integrating G-Eval into our open-source project, DeepEval, was that LLM-generated evaluation steps tend to be arbitrary and generally don't help guide the evaluation. Some of you might also wonder what happens when JudgementalGPT can't find a suitable evaluator for a particular evaluation task. For this edge case, we fall back to G-Eval. Here's a quick architecture diagram of how JudgementalGPT works:

While writing this article, I discovered a recent paper introducing Prometheus, "a fully open-source LLM that is on par with GPT-4's evaluation capabilities when the appropriate reference materials (reference answer, score rubric) are accompanied", which likewise requires evaluation steps to be explicitly defined rather than generated.
