Most developers don't set up a process to automatically evaluate LLM outputs when building LLM applications, even if that means introducing unnoticed breaking changes, because evaluation can be an extremely challenging task. In this article, you're going to learn how to evaluate LLM outputs the right way. (PS. If you want to learn how to build your own evaluation framework, click here.)

On the agenda:

what LLMs are and why they're difficult to evaluate

different ways to evaluate LLM outputs in Python

how to evaluate LLMs using DeepEval

Enjoy!

What are LLMs and what makes them so hard to evaluate?

To understand why LLMs are difficult to evaluate and why they're often referred to as a "black box", let's demystify what LLMs are and how they work.

GPT-4 is an example of a large language model (LLM) and was trained on huge amounts of data: around 300 billion words from articles, tweets, r/tifu, Stack Overflow, how-to guides, and other pieces of data that were scraped off the internet.

Anyway, the "GPT" behind "Chat" stands for Generative Pre-trained Transformer. A transformer is a specific neural network architecture that is particularly good at predicting the next token (a token is roughly 4 characters of English text on average for GPT-4, but it can be as short as one character or as long as a word depending on the specific encoding strategy).

So in fact, LLMs don't really "know" anything, but instead "understand" linguistic patterns due to the way in which they were trained, which often makes them pretty good at figuring out the right thing to say. Pretty manipulative, huh?

All jokes aside, if there's one thing you need to remember, it's this: the process of predicting the next plausible "best" token is probabilistic in nature. This means that LLMs can generate a variety of possible outputs for a given input, instead of always providing the same response. It is exactly this non-deterministic nature of LLMs that makes them challenging to evaluate, as there's often more than one appropriate response.
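To make the probabilistic part concrete, here's a toy sketch of next-token sampling. The vocabulary and the raw scores (logits) are made up for illustration, and real models work over tens of thousands of tokens, but the idea of drawing from a probability distribution instead of always taking the top choice is the same.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical candidates for the token after "The capital of France is"
vocab = ["Paris", "located", "a", "beautiful"]
logits = [3.0, 1.5, 1.0, 0.5]  # made-up scores, not from a real model

rng = random.Random(0)  # seeded only so the demo is repeatable
samples = [sample_next_token(vocab, logits, rng=rng) for _ in range(10)]
greedy = vocab[logits.index(max(logits))]  # always picking the top token

print("sampled:", samples)
print("greedy: ", greedy)
```

Sampling can return any of the candidates from one run to the next, while the greedy pick never changes. Pushing the temperature towards zero makes sampling behave almost like the greedy pick.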

Why do we need to evaluate LLM applications?

When I say LLM applications, here are some examples of what I'm referring to:

Chatbots: For customer support, virtual assistants, or general conversational agents.

Code Assistance: Suggesting code completions, fixing code errors, or helping with debugging.

Legal Document Analysis: Helping legal professionals quickly understand the essence of long contracts or legal texts.

Personalized Email Drafting: Helping users draft emails based on context, recipient, and desired tone.

LLM applications usually have one thing in common - they perform better when augmented with proprietary data to help with the task at hand. Want to build an internal chatbot that helps boost your employees' productivity? OpenAI certainly doesn't keep tabs on your company's internal data (hopefully).

This matters because it is now not only OpenAI's job to ensure GPT-4 is performing as expected, but also yours to make sure your LLM application is generating the desired outputs by using the right prompt templates, data retrieval pipelines, model architecture (if you're fine-tuning), etc.

Evaluations (I'll just call them evals from here on) help you measure how well your application is handling the task at hand. Without evals, you will introduce unnoticed breaking changes and will have to manually inspect all possible LLM outputs each time you iterate on your application, which to me sounds like a terrible idea.

How to evaluate LLM outputs

There are two ways everyone should know about when it comes to evals - with and without LLMs. In fact, you can learn how to build your own evaluation framework in under 20 minutes here.

Evals without LLMs

A nice way to evaluate LLM outputs without using LLMs is to use other machine learning models derived from the field of NLP. You can use specific models to judge your outputs on different metrics such as factual correctness, relevancy, bias, and helpfulness (just to name a few, but the list goes on), despite non-deterministic outputs.

For example, we can use natural language inference (NLI) models (which output an entailment score) to determine how factually correct a response is based on some provided context. The higher the entailment score, the more factually correct an output is, which is particularly helpful if you're evaluating a long output that's not so black and white in terms of factual correctness.

You might also wonder how these models can possibly "know" whether a piece of text is factually correct. It turns out you can provide context to these models for them to take at face value. In fact, we call these contexts ground truths or references. A collection of these references is often referred to as an evaluation dataset.
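Here's a minimal sketch of what an evaluation dataset and a reference-based check could look like. Everything in it is hypothetical: `EvalCase`, `evaluate`, and the threshold are names I made up, and `token_overlap_scorer` is only a toy stand-in for a real NLI model so that the example runs without downloading anything. In practice you would swap in a function that returns an actual entailment score.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class EvalCase:
    output: str   # what the LLM application produced
    context: str  # the reference (ground truth) to judge the output against

# A scorer maps (context, output) to a score between 0 and 1.
Scorer = Callable[[str, str], float]

def token_overlap_scorer(context: str, output: str) -> float:
    """Toy stand-in for an entailment score, NOT a real NLI model:
    the share of output words that also appear in the context."""
    context_words = set(context.lower().split())
    output_words = output.lower().split()
    if not output_words:
        return 0.0
    hits = sum(1 for word in output_words if word in context_words)
    return hits / len(output_words)

def evaluate(dataset: List[EvalCase], scorer: Scorer,
             threshold: float = 0.5) -> List[Tuple[EvalCase, float, bool]]:
    """Score every case and record whether it clears the threshold."""
    results = []
    for case in dataset:
        score = scorer(case.context, case.output)
        results.append((case, score, score >= threshold))
    return results

reference = "the office opens at 9 am on weekdays"
dataset = [
    EvalCase(output="the office opens at 9 am", context=reference),
    EvalCase(output="free pizza every friday", context=reference),
]

results = evaluate(dataset, token_overlap_scorer)
for case, score, passed in results:
    print(f"{score:.2f} {'PASS' if passed else 'FAIL'} {case.output}")
```

Because the scorer is just a function argument, the dataset and the loop stay the same when you move from the toy scorer to a real model.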

But not all metrics require references. For example, relevancy can be calculated using cross-encoder models (another ML model), and all you need to do is supply the input and output for it to determine how relevant they are to each other.

Off the top of my head, here's a list of reference-less metrics:

relevancy

bias

toxicity

helpfulness

harmlessness

coherence

And here is a list of reference-based metrics:

hallucination

semantic similarity

Note that reference-based metrics don't require you to provide the initial input, as they only judge the output based on the provided context. Click here to learn everything you need to know about LLM evaluation metrics.

Using LLMs for Evals

There's an emerging trend of using state-of-the-art LLMs (e.g., GPT-4) to evaluate themselves or even other LLMs.

G-Eval is a Recently Developed Framework that uses LLMs for Evals

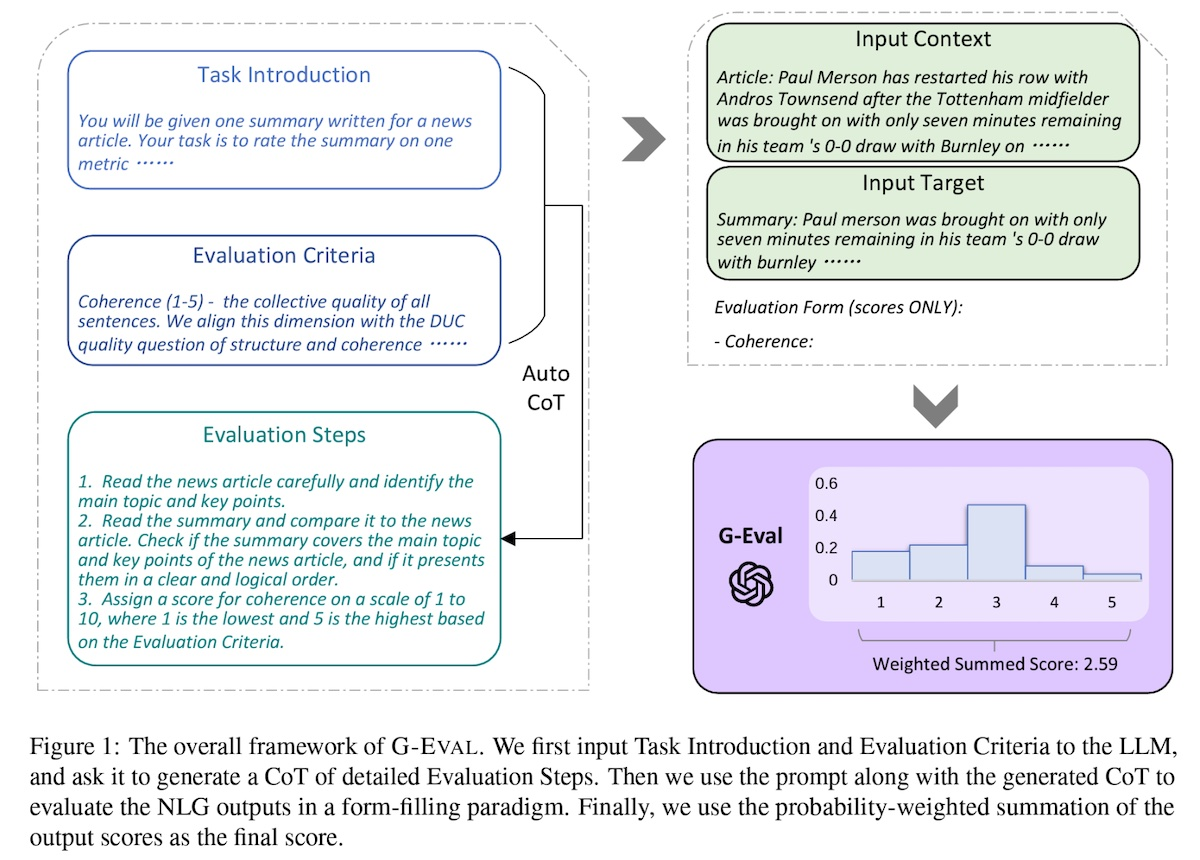

The image above comes from the research paper that introduced G-Eval. In a nutshell, G-Eval is a two-part process: the first part generates evaluation steps, and the second uses the generated evaluation steps to output a final score.

Let's run through a concrete example. Firstly, to generate evaluation steps:

introduce an evaluation task to GPT-4 (e.g., rate this summary from 1 - 5 based on relevancy)

introduce the evaluation criteria (e.g., relevancy will be based on the collective quality of all sentences)
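As a rough sketch, the two items above can be combined into a single prompt that asks the model to write out the evaluation steps. The function name and the exact template wording below are my own assumptions, not the prompt used in the paper, and the call to the LLM itself is left out.

```python
def build_step_generation_prompt(task: str, criteria: str) -> str:
    """Combine the task introduction and the criteria, then leave
    'Evaluation Steps:' open for the LLM to complete.
    The template wording is hypothetical."""
    return (
        f"{task}\n\n"
        f"Evaluation Criteria:\n{criteria}\n\n"
        "Evaluation Steps:\n"
    )

prompt = build_step_generation_prompt(
    task="Rate this summary from 1 - 5 based on relevancy.",
    criteria="Relevancy will be based on the collective quality of all sentences.",
)
print(prompt)
```

Whatever the model writes after "Evaluation Steps:" is what gets reused in the scoring part.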

Once the evaluation steps have been generated:

concatenate the input, evaluation steps, context, and the actual output

ask it to generate a score between 1 - 5, where 5 is better than 1

(Optional) take the probabilities of the output tokens from the LLM to normalize the score and take their weighted summation as the final result

The optional third step is actually pretty complicated, because to get the probabilities of the output tokens, you would typically need access to the raw model outputs, not just the final generated text. This step was introduced in the paper because it offers more fine-grained scores that better reflect the quality of outputs.
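Assuming you can get log probabilities for the candidate score tokens (some APIs expose them, others don't), the weighted summation itself is only a few lines. This is a sketch of the idea rather than the paper's implementation: the function name and the example numbers are made up, and any candidate token that isn't a valid score is simply ignored.

```python
import math

def g_eval_weighted_score(token_logprobs, min_score=1, max_score=5):
    """Probability-weighted average over the score tokens.

    token_logprobs maps candidate output tokens (e.g. "4") to their
    log probabilities. Tokens that are not valid scores are skipped,
    and the remaining probabilities are normalized to sum to 1.
    """
    valid = {str(s): s for s in range(min_score, max_score + 1)}
    probs = {}
    for token, logprob in token_logprobs.items():
        key = token.strip()
        if key in valid:
            score = valid[key]
            probs[score] = probs.get(score, 0.0) + math.exp(logprob)
    total = sum(probs.values())
    if total == 0:
        raise ValueError("no score tokens found among the candidates")
    return sum(score * p / total for score, p in probs.items())

# Made-up candidates for the position where the score is generated
example = {"4": math.log(0.6), "5": math.log(0.3), "Great": math.log(0.1)}
final_score = g_eval_weighted_score(example)
print(round(final_score, 2))
```

Instead of a flat 4, you get a score that sits between 4 and 5 depending on how confident the model was in each, which is where the extra granularity comes from.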

The diagram from the paper shown above can help you visualize what we just learnt.

Utilizing GPT-4 with G-Eval outperformed traditional metrics in areas such as coherence, consistency, fluency, and relevancy, but evaluations using LLMs can often be very expensive. So, my recommendation would be to evaluate with G-Eval as a starting point to establish a performance standard and then transition to more cost-effective metrics where suitable.

For those who are interested, click here to learn more about G-Eval and all the different types of LLM evaluation metrics.
