LangSmith markets itself as an observability platform, but most teams also need it for evaluation—and that's where the friction starts. Observability tools are built for reactive debugging. Evaluation platforms are built for proactive testing. The difference determines whether you're catching issues in development or firefighting them in production.

In this guide, we'll examine the top LangSmith alternatives through this lens: which platforms treat evaluation as a first-class citizen versus an observability add-on?

Our Evaluation Criteria

Choosing the right LLM observability tool requires balancing technical capabilities with business needs. Based on our experience, the most critical factors include:

Ease of setup and integration: How quickly can your team get up and running? Enterprise teams need seamless integration points with existing infrastructure, while developers need intuitive APIs and SDKs that don't require extensive configuration.

Non-technical user accessibility: Can product managers and domain experts run full evaluation cycles, upload datasets in CSV format, and test AI applications without writing code? This democratizes AI quality assurance beyond the engineering team.

Vendor lock-in risk: How easy is it to export your data, build custom dashboards using APIs, and migrate to another platform if needed? Data portability and API flexibility are crucial for long-term strategic control.

Observability depth: Does the platform support key integrations (OpenTelemetry, LangChain, LangGraph, OpenAI) and allow you to observe individual components, filter traces effectively, and run evaluations directly on production data?

Evaluation capabilities: Are the evaluation metrics research-backed and widely adopted? How easy is it to set up custom metrics? More importantly, is evaluation a core product focus or an afterthought?

Human-in-the-loop workflows: Can domain experts annotate traces easily? Does the platform align evaluation metrics with human feedback? How seamlessly can you export annotations for model fine-tuning?
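Exporting annotations for fine-tuning usually means turning human-reviewed traces into training examples. The sketch below assumes a hypothetical export in which each trace carries an `input`, an `output`, a reviewer `label`, and an optional `correction`. Those field names are illustrative and do not match any platform's actual schema. The output uses the chat-style JSONL layout (one `{"messages": [...]}` object per line) that OpenAI's fine-tuning API accepts.

```python
import json

# Hypothetical export of human-annotated traces. The field names
# ("input", "output", "label", "correction") are illustrative only.
annotated_traces = [
    {"input": "What is your refund window?", "output": "30 days from delivery.", "label": "approved"},
    {"input": "Can I change my order?", "output": "No.", "label": "rejected",
     "correction": "Yes, within 2 hours of purchase from the Orders page."},
    {"input": "Do you ship to Canada?", "output": "I'm not sure.", "label": "rejected"},
]


def to_finetune_jsonl(records, system_prompt="You are a helpful support assistant."):
    """Turn annotated traces into chat-format JSONL, one example per line.

    Approved outputs are kept as the assistant turn. Rejected outputs are
    replaced by the annotator's correction, or skipped if there is none.
    """
    lines = []
    for rec in records:
        if rec["label"] == "approved":
            target = rec["output"]
        elif rec.get("correction"):
            target = rec["correction"]
        else:
            continue  # rejected with no correction: no usable training signal
        example = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": rec["input"]},
            {"role": "assistant", "content": target},
        ]}
        lines.append(json.dumps(example))
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_finetune_jsonl(annotated_traces))
```

Running it prints two JSONL lines, the approved answer and the corrected one. The third trace is dropped because the reviewer rejected it without supplying a fix.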

With these criteria in mind, let's examine how the top LangSmith alternatives stack up across these dimensions.

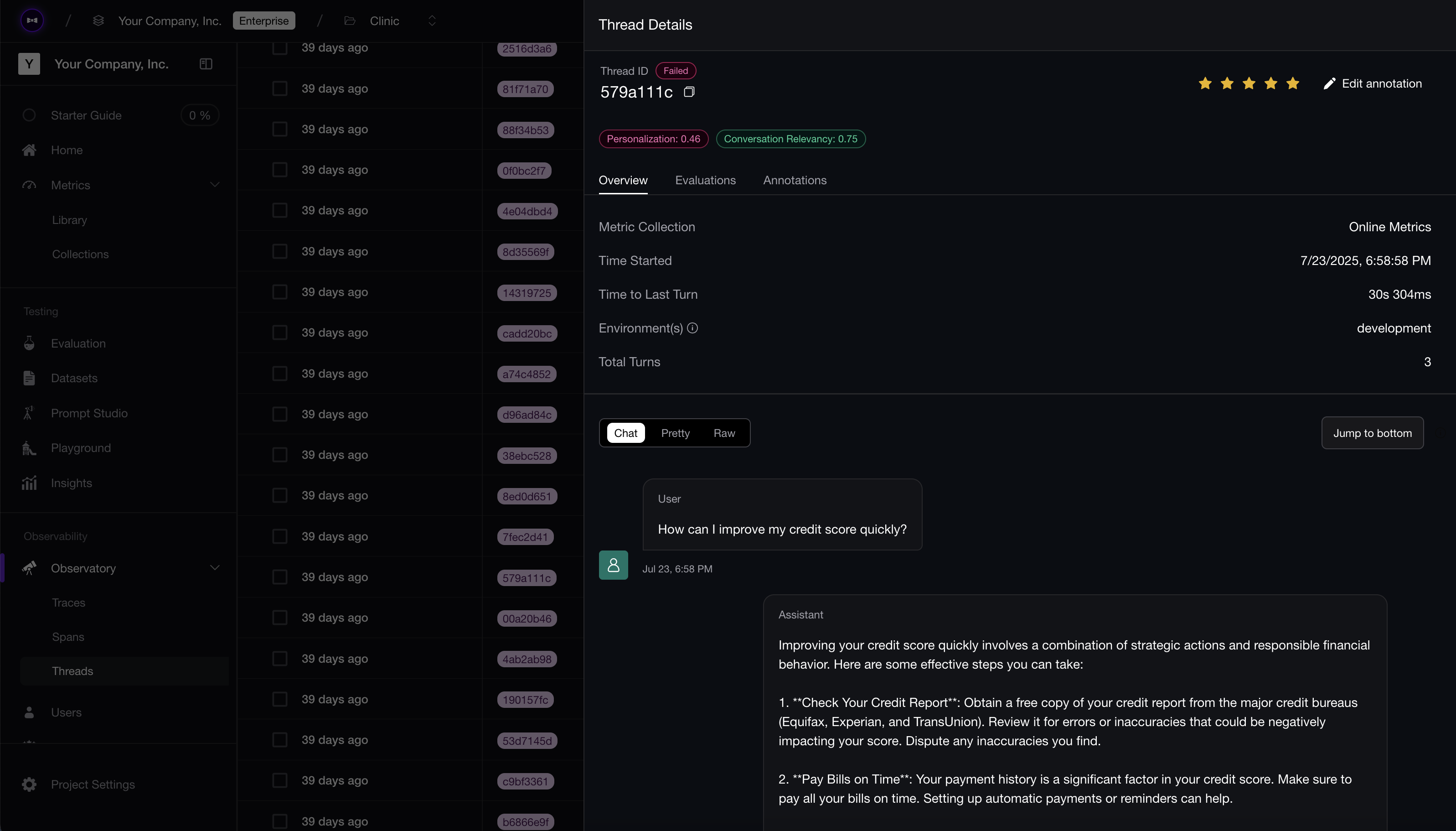

1. Confident AI

Founded: 2023

Most similar to: LangSmith, Langfuse, Arize AI

Typical users: Engineers, product, and QA teams

Typical customers: Mid-market B2Bs and enterprises

![Confident AI screenshot](https://images.ctfassets.net/otwaplf7zuwf/4q3lprPzY1bA25prak02ao/27881f3860e072c3c82af45dac1b6baa/Screenshot_2025-09-02_at_4.47.16_PM.png)

What is Confident AI?

Confident AI is an LLM observability platform that combines LLM evals, A/B testing, metrics, tracing, dataset management, human-in-the-loop annotations, and prompt versioning to test AI apps in one collaborative workflow.

It is built for engineering, product, and QA teams, and its eval capabilities come natively from DeepEval, a popular open-source LLM evaluation framework.

Key features

🧪 LLM evals, including shareable testing reports, A/B regression testing, prompt and model performance insights, custom dashboards, and multi-turn evals.

🧮 LLM metrics, with support for 30+ single-turn evals, 10+ multi-turn evals, multimodal evals, LLM-as-a-judge, and custom metrics such as G-Eval. All metrics are 100% open-source and come from DeepEval.

🌐 LLM tracing, with OpenTelemetry support and 10+ integrations such as OpenAI, LangChain, and Pydantic AI. Traces can be evaluated via online and offline evals in development and production.

🗂️ Dataset management, including support for multi-turn datasets, annotation assignment, versioning, and backups.

📌 Prompt versioning, which supports single-text and message-based prompt types, variable interpolation, and automatic deployment.

✍️ Human annotation, where domain experts can annotate production traces, spans, and threads, and incorporate those annotations back into datasets for testing.
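G-Eval, listed above as an example of a custom metric, comes from a 2023 paper in which an LLM judge scores an output against natural-language criteria. Rather than taking the single score token the judge emits, the paper weights each candidate score by the probability the judge assigns to it. Below is a minimal sketch of that weighting. It assumes you already have per-score probabilities from the judge's logprobs (how you obtain them is provider-specific and not shown), and it illustrates the technique only. It is not DeepEval's internal implementation.

```python
def geval_score(score_probs):
    """Probability-weighted score in the style of G-Eval.

    score_probs maps each candidate score (e.g. 1..5) to the judge model's
    probability of emitting that score. Dividing by the total mass is our
    own choice, in case some probability went to non-score tokens.
    """
    total = sum(score_probs.values())
    if total <= 0:
        raise ValueError("score_probs must contain positive probability mass")
    return sum(score * p for score, p in score_probs.items()) / total


def to_unit_interval(score, low=1, high=5):
    """Rescale a score on [low, high] to [0, 1] so it can be thresholded."""
    return (score - low) / (high - low)


if __name__ == "__main__":
    # The judge is most confident in a 4 but hedges toward 3 and 5.
    probs = {1: 0.0, 2: 0.1, 3: 0.2, 4: 0.5, 5: 0.2}
    raw = geval_score(probs)
    print(round(raw, 2), round(to_unit_interval(raw), 2))  # 3.8 0.7
```

Weighting by probability gives a finer-grained score than a single integer. A judge that is torn between 3 and 4 lands between them instead of flipping from one to the other across runs.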

Who uses Confident AI?

Typical Confident AI users are:

Engineering teams that focus on code-driven AI testing in development

Product teams that require annotations from domain experts

Companies that have AI QA teams needing modern automation

Teams that want to track performance over time in production

Typical customers range from growth-stage startups to upmarket enterprises, including Panasonic, Amazon, BCG, CircleCI, and Humach.

How does Confident AI compare to LangSmith?

Confident AI ensures you’re not locked into the “Lang” ecosystem. Here’s what one customer that switched from LangSmith to Confident AI had to say:

We chose Confident AI because it offers laser-focused LLM evaluation built on the open-source DeepEval framework—giving us customizable metrics, seamless A/B testing, and real-time monitoring in one place. LangSmith felt too tied into the LangChain ecosystem and lacked the evaluation depth and pricing flexibility we needed. Confident AI delivered the right mix of evaluation rigor, observability, and cost-effective scalability.

— A5Labs (migrated to Confident AI in July 2025)

Here's a more in-depth comparison in terms of features and functionalities:

Confident AI

LangSmith

Single-turn evals Supports end-to-end evaluation workflows

End-to-end no-code evals Pings your actual AI app for evals

Limited

LLM tracing Standard AI observability

Multi-turn evals Supports conversation evaluation including simulations

Limited

Regression testing Side-by-side performance comparison of LLM outputs

Custom LLM metrics No-code workflows to run evaluations

Research-backed & open-source

Limited + heavy setup required

AI playground No-code experimentation workflow

Limited, single-prompt only

Online evals Run evaluations as traces are logged

Error, cost, and latency tracking Track model usage, cost, and errors

Multi-turn datasets Workflows to edit single and multi-turn datasets

Prompt versioning Manage single-text and message-prompts

Human annotation Annotate monitored data, align annotation with evals, and API support

Only on traces

API support Centralized API to manage data

Red teaming Safety and security testing

Confident AI is the only choice if you want to support all forms of LLM evaluation on one centralized platform: single- and multi-turn evals across AI agents, chatbots, and RAG use cases alike.

Confident AI's multi-turn simulations compress 2 to 3 hours of manual testing into under 5 minutes per experiment. Built-in AI red teaming also eliminates the need for separate security tools, while a fully end-to-end, no-code evaluation workflow saves teams an average of 20+ hours per week on testing by consolidating evaluation, safety testing, and observability into a single integrated platform.

LangSmith supports evaluation scores, but mainly on traces. That doesn't suit every use case (especially multi-turn) and creates a disconnect for less-technical team members. LangSmith also doesn't offer red teaming or simulations, which is often a deal breaker for teams that don't want to pay multiple vendors.

Evals on Confident AI are also powered by DeepEval, one of the most popular LLM evaluation frameworks. This means you get access to the same evaluations as Google, Microsoft, and other Big Tech companies that have adopted DeepEval.

How popular is Confident AI?

Confident AI is an AI observability and evals platform powered by DeepEval. As of September 2025, DeepEval is the world’s most popular and fastest-growing LLM evaluation framework by downloads (700k+ monthly), and second by GitHub stars (behind OpenAI’s open-source evals repo).

More than half of DeepEval users end up using Confident AI within 2 months of adoption.

Why do companies use Confident AI?

Companies use Confident AI because:

It covers all use cases, for all team members: Unlike in traditional software development, engineers are no longer the only ones involved in AI testing. Confident AI is built for multiple personas, including those without coding experience, and allows direct experimentation on any AI app without code.

It combines open-source metrics with an enterprise platform: Confident AI brings a full-fledged platform to those using DeepEval, and it just works without additional setup. This simplifies cross-team collaboration and centralizes AI testing.

It is evals-centric, not just an observability solution: Customers appreciate that it is not another observability platform with generic tracing. Confident AI offers evals that are deeply integrated with LLM traces and operate on individual components within your AI agents.

Bottom line: Confident AI is the best LangSmith alternative for growth-stage startups to mid-sized enterprises. It takes an evaluation-first approach to observability without locking you into the “Lang” ecosystem.

Its broad eval capabilities mean you don’t have to adopt multiple solutions within your org, and AI app playgrounds allow non-technical users to run evals without touching a line of code.

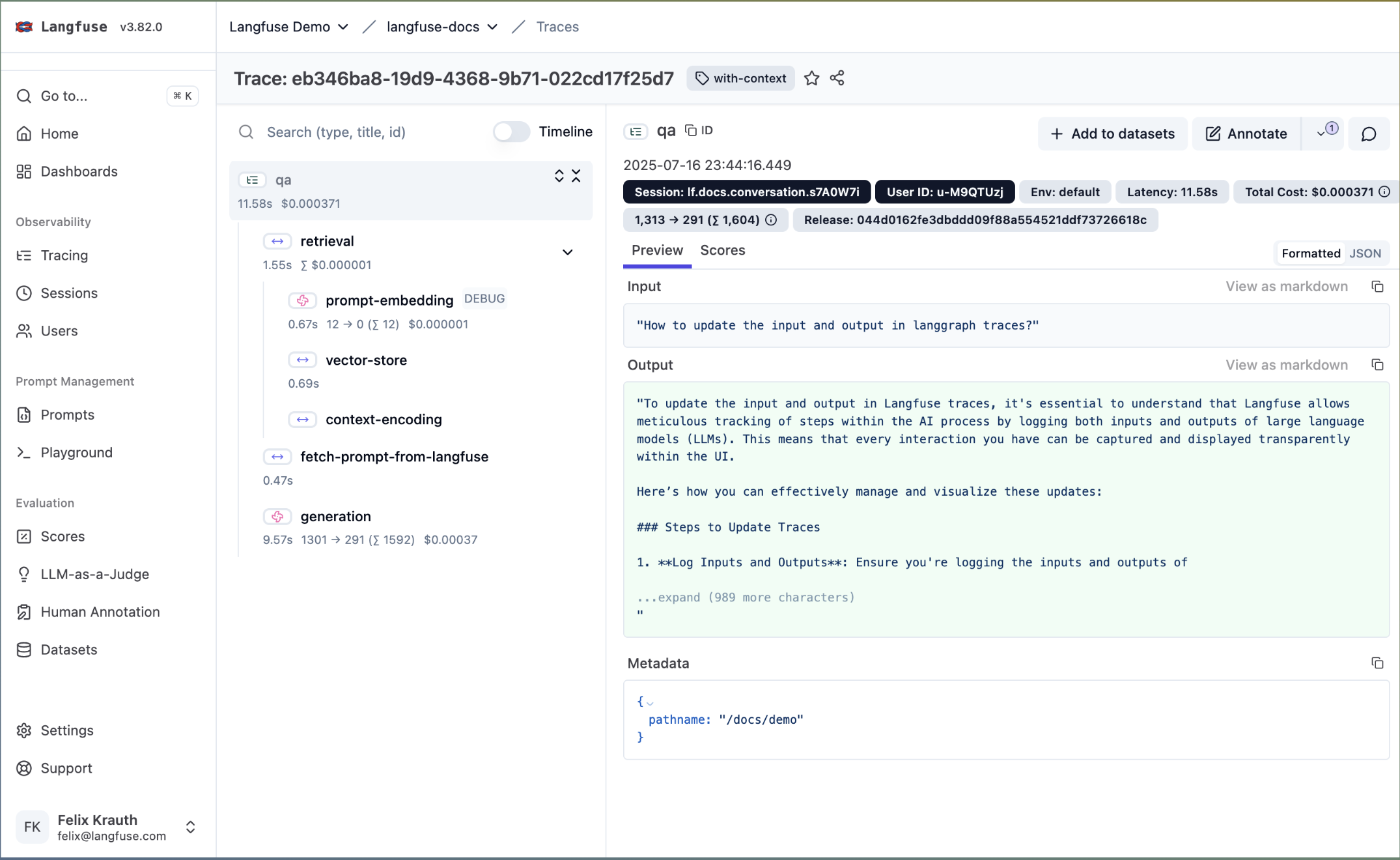

2. Arize AI

Founded: 2020

Most similar to: Confident AI, Langfuse, LangSmith

Typical users: Engineers and technical teams

Typical customers: Mid-market B2Bs and enterprises

![Arize AI screenshot](https://images.ctfassets.net/otwaplf7zuwf/4KFDcor6DyHin6CsDht999/74e26dd8d8e31329a7e9f0e1e1774bea/Screenshot_2025-09-01_at_3.07.49_PM.png)

What is Arize AI?

Arize AI is an AI observability and evaluation platform for AI agents that is tool-agnostic rather than tied to LangChain/LangGraph. It was originally built for ML engineers, while its more recent releases, centered on Phoenix, its open-source platform, are tailored toward developers doing LLM tracing.

Key Features

🕵️ AI agent observability, with support for graph visualizations, latency and error tracking, and integrations with 20+ frameworks such as LangChain.

🔗 Tracing, including span logging, with custom metadata support, and the ability to run online evaluations on spans.

🧑✈️ Co-pilot, a “cursor-like” experience to chat with traces and spans, for users to debug and analyze observability data more easily.

🧫 Experiments, a UI driven evaluation workflow to evaluate datasets against LLM outputs without code.
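Several tools in this list distinguish between online evals, which score spans as they are logged (as with Arize's span tracing), and offline evals, which run retrospectively over stored traces. The toy, in-memory sketch below shows the difference. `TraceStore` and `mentions_apology` are hypothetical stand-ins and do not represent any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A metric takes one logged span (a dict) and returns a score.
Metric = Callable[[dict], float]


def mentions_apology(span: dict) -> float:
    """Hypothetical heuristic metric: 1.0 if the output apologises, else 0.0."""
    return 1.0 if "sorry" in span["output"].lower() else 0.0


@dataclass
class TraceStore:
    """Toy in-memory trace store; not any vendor's API."""
    online_metrics: Dict[str, Metric] = field(default_factory=dict)
    spans: List[dict] = field(default_factory=list)

    def log(self, span: dict) -> None:
        # Online evals: every span is scored at write time.
        span["scores"] = {name: m(span) for name, m in self.online_metrics.items()}
        self.spans.append(span)

    def backfill(self, name: str, metric: Metric) -> float:
        # Offline evals: score already-stored spans retrospectively, e.g. with
        # a metric defined after deployment. Returns the mean score.
        if not self.spans:
            raise ValueError("no spans logged yet")
        for span in self.spans:
            span["scores"][name] = metric(span)
        return sum(s["scores"][name] for s in self.spans) / len(self.spans)


if __name__ == "__main__":
    store = TraceStore(online_metrics={"apology": mentions_apology})
    store.log({"input": "Where is my order?", "output": "Sorry, it is delayed."})
    store.log({"input": "Reset my password", "output": "Use the link we emailed."})
    print(store.spans[0]["scores"])  # {'apology': 1.0}
    print(store.backfill("concise", lambda s: float(len(s["output"]) < 22)))  # 0.5
```

The trade-off is that online evals add work (and, with LLM judges, cost) to every logged span, whereas offline evals let you apply new metrics to historical data after the fact.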

Who uses Arize AI?

Typical Arize AI users are:

Highly technical teams at large enterprises

Engineering teams with few PMs

Companies with large-scale observability needs

While it offers a free tier and a $50/month tier, their limitations are a barrier for teams looking to scale: a maximum of 3 users and 14-day data retention, meaning you’ll need an annual contract for anything beyond that.

How does Arize AI compare to LangSmith?

Arize AI

LangSmith

Single-turn evals Supports end-to-end evaluation workflows

Multi-turn evals Supports conversation evaluation including user simulation

Limited

Custom LLM metrics Use-case specific metrics for single and multi-turn

Limited + heavy setup required

Limited + heavy setup required

AI playground No-code experimentation workflow

Limited, single-prompt only

Limited, single-prompt only

Offline evals Run evaluations retrospectively on traces

Error, cost, and latency tracking Track model usage, cost, and errors

Dataset management Workflows to edit single-turn datasets

Prompt versioning Manage single-text and message-prompts

Human annotation Annotate monitored data, including API support

API support Centralized API to manage data

While both look similar on paper and target the same technical teams, Arize AI is stricter on its lower-tier plans, and neither platform’s pricing is transparent beyond the middle tier.

How popular is Arize AI?

Arize AI is slightly less popular than LangSmith, mostly because of the LangChain brand. According to Arize’s website, around 50 million evaluations are run per month, with over 1 trillion spans logged.

Data on LangSmith is less readily available.

Why do companies use Arize AI?

Self-host OSS: Phoenix, the open-source part of its platform, is self-hostable, making it suitable for teams that need to get something up and running quickly.

Laser-focused on observability: Arize AI handles observability at scale well, and for teams looking for fault-tolerant tracing, it is one of the best options.

Non-vendor-lockin: Unlike LangSmith, Arize AI is not tied into any ecosystem, and instead follows industry standards such as OpenTelemetry.

Bottom line: Arize AI is the best LangSmith alternative for large enterprises with highly technical teams looking for large-scale observability. Startups, mid-sized enterprises, and teams needing comprehensive evaluations, pre-deployment testing, and non-technical collaboration may find better-value alternatives.

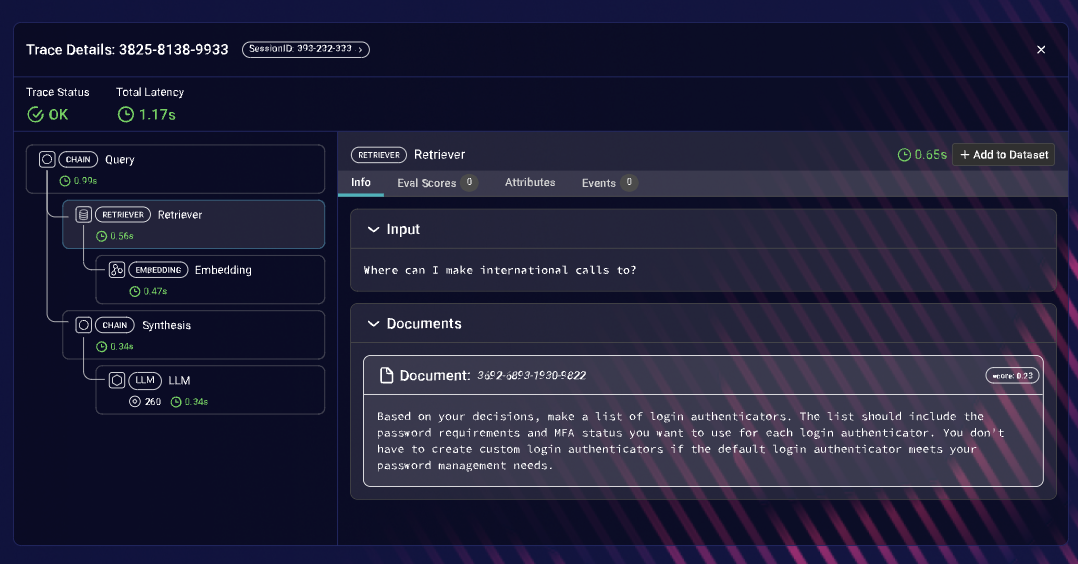

3. Langfuse

Founded: 2022

Most similar to: Confident AI, Helicone, LangSmith

Typical users: Engineers and product

Typical customers: Startups to mid-market B2Bs

![Langfuse screenshot](https://images.ctfassets.net/otwaplf7zuwf/5MlsEwUbMe7ayiuF1TXLfm/502001cd005016a2d9ed53438e9db348/Screenshot_2025-09-01_at_3.09.58_PM.png)

What is Langfuse?

Langfuse is a 100% open-source platform for LLM engineering. In practice, it offers LLM tracing, prompt management, and evals to “debug and improve your LLM application”.

Key Features

⚙️ LLM tracing, which is similar to LangSmith’s, except that Langfuse supports more integrations and easy-to-set-up features such as data masking, sampling, environments, and more.

📝 Prompt management allows users to version prompts and makes it easy to develop apps without storing prompts in code.

📈 Evaluation allows users to score traces and track performance over time, on top of cost and error tracking.
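Prompt management, in Langfuse as in the other platforms covered here, comes down to storing versioned templates outside your code and filling in variables at call time. Below is a minimal in-memory sketch of that idea using Python `{variable}` placeholders. `PromptRegistry` is hypothetical. Real platforms fetch templates through their SDKs and may use a different placeholder syntax.

```python
class PromptRegistry:
    """Hypothetical in-memory prompt store with version history."""

    def __init__(self):
        self._versions = {}  # prompt name -> list of templates, oldest first

    def push(self, name, template):
        """Save a new version of a prompt and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name, version=None):
        """Return the requested version, or the latest if version is None."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise ValueError(f"{name!r} has no version {version}")
        return history[version - 1]

    def render(self, name, version=None, **variables):
        """Interpolate {placeholders}; a missing variable raises KeyError."""
        return self.get(name, version).format(**variables)


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.push("support", "Answer as {persona}: {question}")
    registry.push("support", "You are {persona}. Be concise.\nQuestion: {question}")
    # Pin version 1 in one environment while another picks up the latest.
    print(registry.render("support", version=1, persona="a support agent", question="Where is my order?"))
    print(registry.render("support", persona="a support agent", question="Where is my order?"))
```

Pinning a version number lets production stay on a known-good prompt while a newer version is evaluated elsewhere, without any code change to swap between them.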

Who uses Langfuse?

Typical Langfuse users are:

Engineering teams that need data on their own premises

Teams that want to keep their prompts on their own infrastructure

Langfuse puts a strong focus on open-source observability. Customers include Twilio, Samsara, and Khan Academy.

How does Langfuse compare to LangSmith?

Langfuse

LangSmith

Single-turn evals Supports end-to-end evaluation workflows

Multi-turn evals Supports conversation evaluation, including user simulation

Limited

Custom LLM metrics Use-case specific metrics for single and multi-turn

Limited + heavy setup required

Limited + heavy setup required

AI playground No-code experimentation workflow

Limited, single-prompt only

Limited, single-prompt only

Offline evals Run evaluations retrospectively on traces

Error, cost, and latency tracking Track model usage, cost, and errors

Prompt versioning Manage single-text and message-prompts

API support Centralized API to manage data

Despite the name, Langfuse is not part of the LangChain ecosystem. For LLM observability, evals, and prompt management, however, the two platforms are extremely similar.

That said, Langfuse has a better developer experience, and its generous pricing (unlimited users on all tiers) lowers the barrier to entry.

How popular is Langfuse?

Langfuse is one of the most popular LLMOps platforms because it is 100% open-source, with over 12M SDK downloads each month for its OSS platform, while little comparable data is available for LangSmith.

Why do companies use Langfuse?

100% open-source:

Being open-source means anyone can set up Langfuse without worrying about data privacy, making adoption fast and easy.

Great developer experience:

Langfuse has great documentation with clear guides, as well as a breadth of integrations supported by its OSS community.

Bottom line: Langfuse is basically LangSmith, but open-source and with a slightly better developer experience. For companies looking for a quick solution that can be hosted on-prem, Langfuse is a great alternative that sidesteps lengthy security and procurement reviews.

For teams that don’t have this requirement, or that need more non-technical workflows and more streamlined evals, there are better-value alternatives.

4. Helicone

Founded: 2023

Most similar to: Langfuse, Arize AI

Typical users: Engineers and product

Typical customers: Startups from early to growth stage

![Helicone screenshot](https://images.ctfassets.net/otwaplf7zuwf/44dG0Y0cpYGTkkVuj6alj8/62e7405acc54ee1c308846fadbf0aabb/Screenshot_2025-09-01_at_3.11.07_PM.png)

What is Helicone?

Helicone is an open-source platform that offers a unified AI gateway as well as observability on model requests, helping teams build reliable AI apps.

Key Features

⛩️ AI gateway that lets you call 100+ LLM providers through the OpenAI SDK format

📷 Model observability to track and analyze requests by cost, error rate, as well as tag LLM requests with metadata, enabling advanced filtering

✍️ Prompt management to compose and iterate prompts, then easily deploy them in any LLM call with the AI Gateway
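Because the gateway speaks the OpenAI chat-completions format, switching providers is mostly a matter of changing the `model` string. The standard-library sketch below only builds a request and does not send it. The base URL, API key, and model names are placeholders. The `Helicone-Property-*` header prefix for metadata tagging is our understanding of Helicone's custom-properties convention and should be checked against its current docs.

```python
import json

# Placeholder: substitute the real base URL from your gateway's documentation.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"


def build_chat_request(model, user_message, api_key, properties=None):
    """Build (but do not send) an OpenAI-style chat-completions request.

    Only `model` changes between providers; the body shape stays the same.
    `properties` become metadata headers (assumed Helicone-Property-* prefix)
    so requests can later be filtered by feature, environment, and so on.
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    for key, value in (properties or {}).items():
        headers[f"Helicone-Property-{key}"] = str(value)
    body = {"model": model, "messages": [{"role": "user", "content": user_message}]}
    return {
        "url": f"{GATEWAY_BASE_URL}/chat/completions",
        "headers": headers,
        "body": json.dumps(body),
    }


if __name__ == "__main__":
    # Illustrative model identifiers; each gateway has its own naming scheme.
    for model in ["gpt-4o-mini", "claude-3-5-sonnet"]:
        req = build_chat_request(model, "Summarise this ticket.", "sk-placeholder",
                                 properties={"Feature": "ticket-summary"})
        print(req["url"], json.loads(req["body"])["model"])
```

Everything except the `model` field is identical across providers, which is what lets a gateway track cost and errors per request in one place.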

Who uses Helicone?

Typical Helicone users include:

Engineering teams needing multiple LLM providers unified

Startups that need fast setup and pinpoint cost tracking

Helicone puts a strong focus on its AI gateway, and its observability centers on model requests rather than on tracing apps. Customers include QA Wolf, Duolingo, and Singapore Airlines.

How does Helicone compare to LangSmith?

Helicone

LangSmith

AI gateway Access 100+ LLMs in one unified API

Single-turn evals Supports end-to-end evaluation workflows

Multi-turn evals Supports conversation evaluation, including user simulation

Limited

Custom LLM metrics Use-case specific metrics for single and multi-turn

Limited + heavy setup required

AI playground No-code experimentation workflow

Limited, single-prompt only

Offline evals Run evaluations retrospectively on traces

Error, cost, and latency tracking Track model usage, cost, and errors

Prompt versioning Manage single-text and message-prompts

API support Centralized API to manage evaluations

Helicone focuses on observability on the model layer instead of the framework layer, which is where LangSmith operates with LangChain and LangGraph.

Helicone also has an intuitive UI that is usable for non-technical teams, making it a great alternative for those needing cross-team collaboration, open-source hosting, and working with multiple LLMs.

How popular is Helicone?

Helicone is less popular than Langfuse, sitting at 4.4k GitHub stars, though it is popular among startups, especially YC companies. Little data is available on LangSmith, but there are likely more deployments of LangSmith than of Helicone.

Why do companies use Helicone?

Open-source: Being open-source means teams can quickly try it out locally before deciding whether a cloud-hosted solution is right for them.

Works with multiple LLMs: Helicone is the only contender on this list with a gateway, which is a big plus for teams that value this capability.

Bottom line: Helicone is the best alternative if you’re working with multiple LLMs and need observability at the model layer instead of the application layer. It is open-source, making it fast and easy to set up and get through data security requirements.

For teams operating at the application layer that need full-fledged LLM tracing and evaluations, other alternatives are better suited.

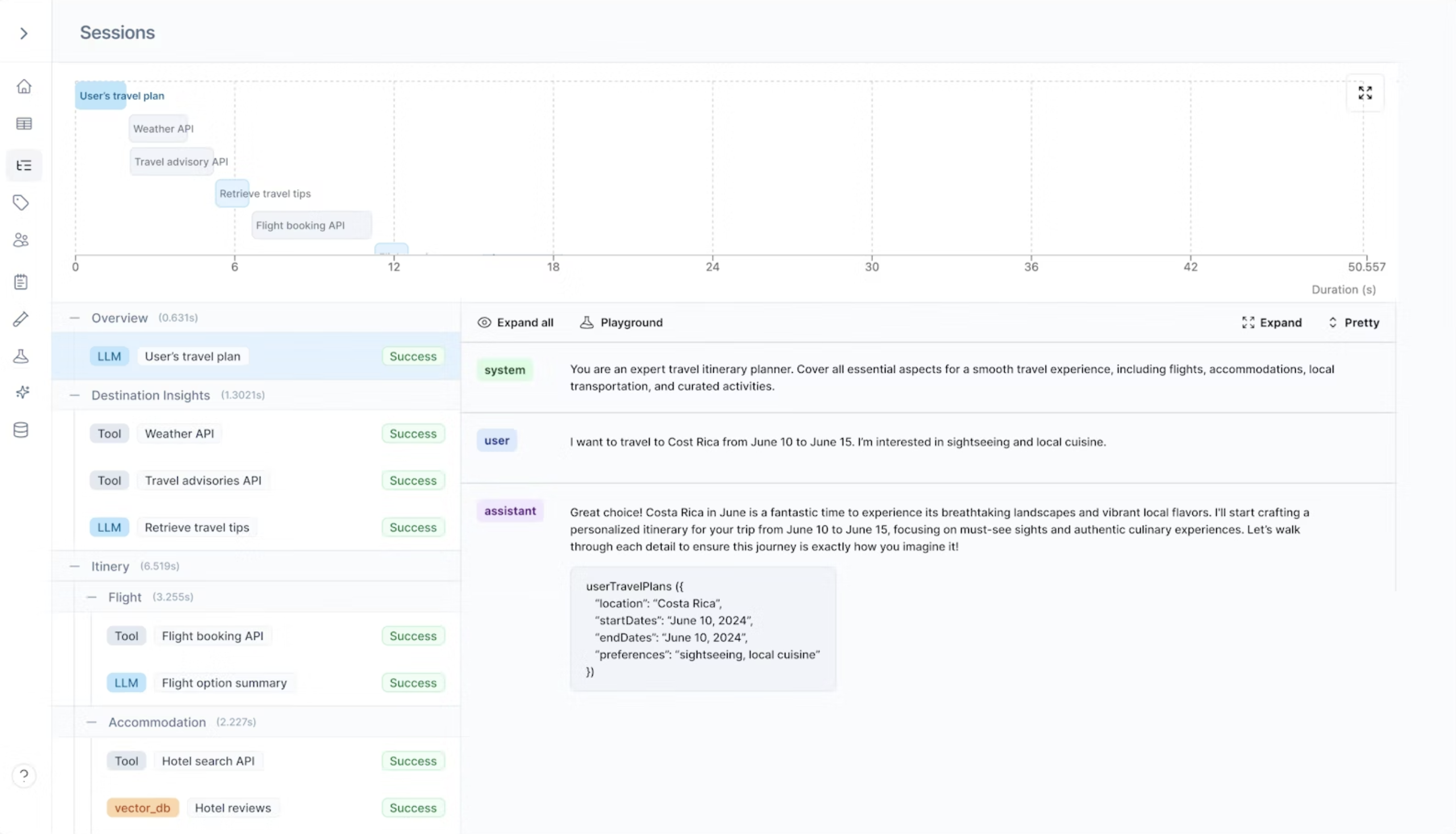

5. Braintrust

Founded: 2023

Most similar to: LangSmith, Langfuse

Typical users: Engineers and product

Typical customers: Startups to mid-market B2Bs

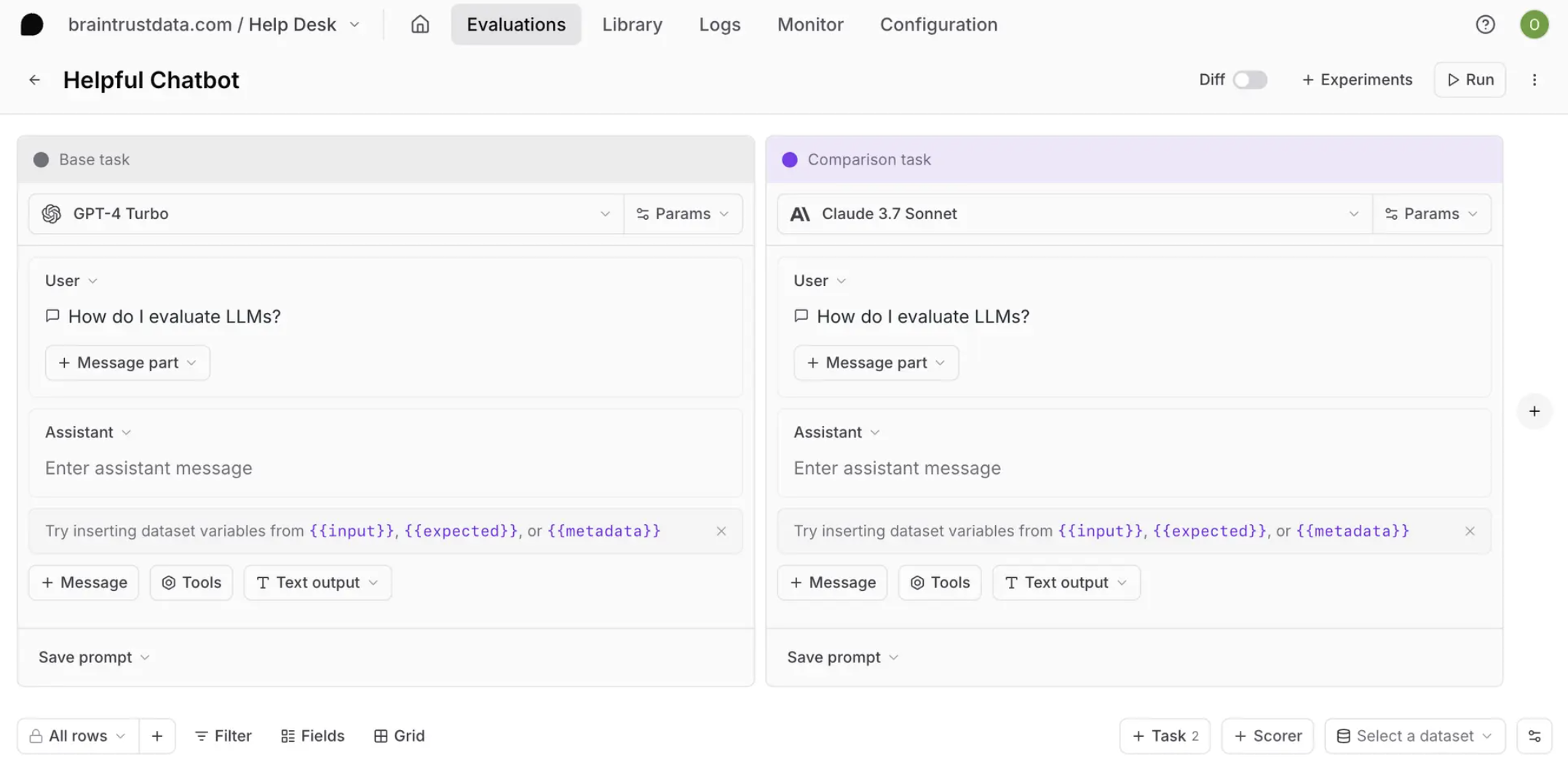

![Braintrust screenshot](https://images.ctfassets.net/otwaplf7zuwf/5ePsO8Crn7D7w7z0yAP9Gc/2c4745c5fe0b9bff45af98c7393307e0/Screenshot_2025-09-01_at_3.08.50_PM.png)

What is Braintrust?

Braintrust Data is a platform for collaborative evaluation of AI apps. It is friendlier to non-technical users than its peers, with testing driven through the UI in a “playground” rather than code-first.

Key Features

📐 Playground is where Braintrust differentiates itself from LangSmith. Both playgrounds allow non-technical teams to test different model and prompt combinations without touching code, but Braintrust’s has a slightly better UI.

⏱️ Tracing with observability is available, with the ability to run evaluations on it, as well as custom metadata logging.

📂 Dataset editor for non-technical teams to contribute to playground testing, no code required.

Who uses Braintrust?

Typical Braintrust users are:

Non-technical teams such as PMs or even external domain experts

Engineering teams for initial setup

Braintrust puts a strong focus on supporting non-technical workflows and on UI design that isn’t tailored only to engineers. Customers include Coursera, Notion, and Zapier.

How does Braintrust compare to LangSmith?

Braintrust

LangSmith

Single-turn evals Supports end-to-end evaluation workflows

Multi-turn evals Supports conversation evaluation, including user simulation

Limited

Custom LLM metrics Use-case specific metrics for single and multi-turn

Limited + heavy setup required

AI playground No-code experimentation workflow

Limited, single-prompt only

Offline evals Run evaluations retrospectively on traces

Error, cost, and latency tracking Track model usage, cost, and errors

Prompt versioning Manage single-text and message-prompts

API support Centralized API to manage data

Its evaluation playground makes Braintrust a good alternative to LangSmith for users who need more sophisticated non-technical workflows.

LLM tracing and observability is fairly similar, however teams might find Braintrust’s UI more intuitive than LangSmith’s for analysis.

Braintrust is more generous on seat caps, offering unlimited users for $249/month, but its middle-tier base platform fee is higher than LangSmith’s ($39/month).
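Which plan works out cheaper depends on team size. The back-of-the-envelope sketch below rests on two assumptions: that LangSmith's $39/month is charged per seat, and that Braintrust's $249/month is a flat fee covering unlimited users. Usage-based charges are ignored, so check both vendors' current pricing pages before relying on these numbers.

```python
import math

LANGSMITH_PER_SEAT = 39   # USD/month; ASSUMED to be charged per seat
BRAINTRUST_FLAT = 249     # USD/month; ASSUMED flat fee for unlimited users


def monthly_seat_cost(seats):
    """Return (LangSmith, Braintrust) monthly seat costs, ignoring usage fees."""
    return seats * LANGSMITH_PER_SEAT, BRAINTRUST_FLAT


def break_even_seats():
    """Smallest team size at which the flat fee is strictly cheaper."""
    return math.floor(BRAINTRUST_FLAT / LANGSMITH_PER_SEAT) + 1


if __name__ == "__main__":
    for seats in (3, 6, 7, 15):
        print(seats, monthly_seat_cost(seats))
    print("Flat fee is cheaper from", break_even_seats(), "seats")
```

Under these assumptions, 6 seats cost $234 versus $249 and 7 seats cost $273 versus $249, so the flat fee pays off from 7 users upward.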

How popular is Braintrust?

Braintrust is far less popular than LangSmith, largely because it lacks an open-source component. Without a community around it, little data is available on its adoption.

Why do companies use Braintrust?

Non-technical workflows: Even people outside your company who have never touched a line of code can collaborate on testing in the playground.

Intuitive UI: The interface is understandable even for those without a technical background, making it easier for non-technical folks to collaborate.

Bottom line: Braintrust is a great alternative for companies looking for a platform that makes it extremely easy for non-technical teams to test AI apps. However, for more low-level control over evaluations, teams might have better luck looking elsewhere.

Why Confident AI is the Best LangSmith Alternative

Most AI observability platforms, including LangSmith, are built for engineers debugging production traces. Confident AI is the only evals-first LLM observability platform built for entire teams to prevent issues before deployment. It is adopted by companies like CircleCI, Panasonic, and Amazon.

The difference shows up in who can actually use it. With Confident AI, product managers upload CSV datasets and run evaluations without code. Domain experts annotate traces and align them with evaluation metrics. QA teams set up regression tests in CI/CD through the UI. Engineers maintain full programmatic control via the Evals API, but they're no longer the bottleneck for every testing decision.

LangSmith's observability requires engineering involvement at every step, which slows down iteration and creates handoff friction.

The ROI is measurable. Multi-turn simulations compress 2 to 3 hours of manual conversation testing into under 5 minutes per experiment. Built-in red teaming eliminates separate vendors for AI security testing, saving both cost and integration overhead. The end-to-end, no-code evaluation workflow saves teams an average of 20+ hours per week by consolidating evaluation, safety testing, and observability into one platform instead of stitching together multiple tools.

LangSmith has tracing, but the data is hard to visualize and disjointed across the platform. You can't see evaluation results upfront without creating custom graphs or navigating to another page 10 clicks away. Multi-turn conversation testing requires manual work that Confident AI automates in minutes. Red teaming and safety testing aren't built in, forcing teams to pay for additional security vendors. For teams that need their evaluation insights surfaced clearly and actionable immediately, this workflow friction adds up to significant wasted time.

Confident AI's eval metrics are research-backed and battle-tested by companies like Google and Microsoft through industry adoption. If you're already using DeepEval for local testing, Confident AI integrates seamlessly to extend those workflows to the cloud. The platform is framework-agnostic, supporting OpenTelemetry, OpenAI, Pydantic AI, LangChain, and 10+ other integrations, so you avoid vendor lock-in.

When Confident AI might not be the right fit

If you need a fully open-source platform: Confident AI is cloud-based with enterprise security standards. It can also be self-hosted easily, but the platform itself is not open-source.

If you're all-in on LangChain forever: LangSmith's deep LangChain/LangGraph integration is hard to beat if that's your entire stack and you never plan to change.

Honorable Mentions

Galileo AI, Traceloop, and Gentrace: Similar to Arize AI, but without a community and 100% closed-source.

Keywords AI: Similar to Helicone, and adopted within the startup community.