Let’s imagine you’re building an LLM evaluation framework called DeepEval, and you talk to 20+ users a week. Out of those 20 users, over 15 of them ask this very question:

Which metrics should I use if I need to compare the [fill in the blank here] of different prompts/models?

Clearly, most people are still confused about how each metric works and which use cases it is for, and that’s not good. To make testing your prompts and models more accessible, we ought to use something more intuitive and simpler to understand.

So in this article, I’m introducing LLM Arena-as-a-Judge, a novel way to run automated, scalable, comparison-based LLM-as-a-judge evaluations that simply tells you which iteration of your LLM app worked best.

With LLM Arena-as-a-Judge, you don’t pick a metric. You pick the better output. That’s it.

TL;DR

In this article, you’ll learn that:

LLM Arena is an Elo rating system based on human feedback, and the human voters can be replaced with LLM judges.

LLM “Arena”-as-a-judge can be extended beyond foundation models to A/B testing LLM apps.

DeepEval (100% open-source) makes LLM Arena-as-a-Judge easy to use and simple to set up, in just 10 lines of code.

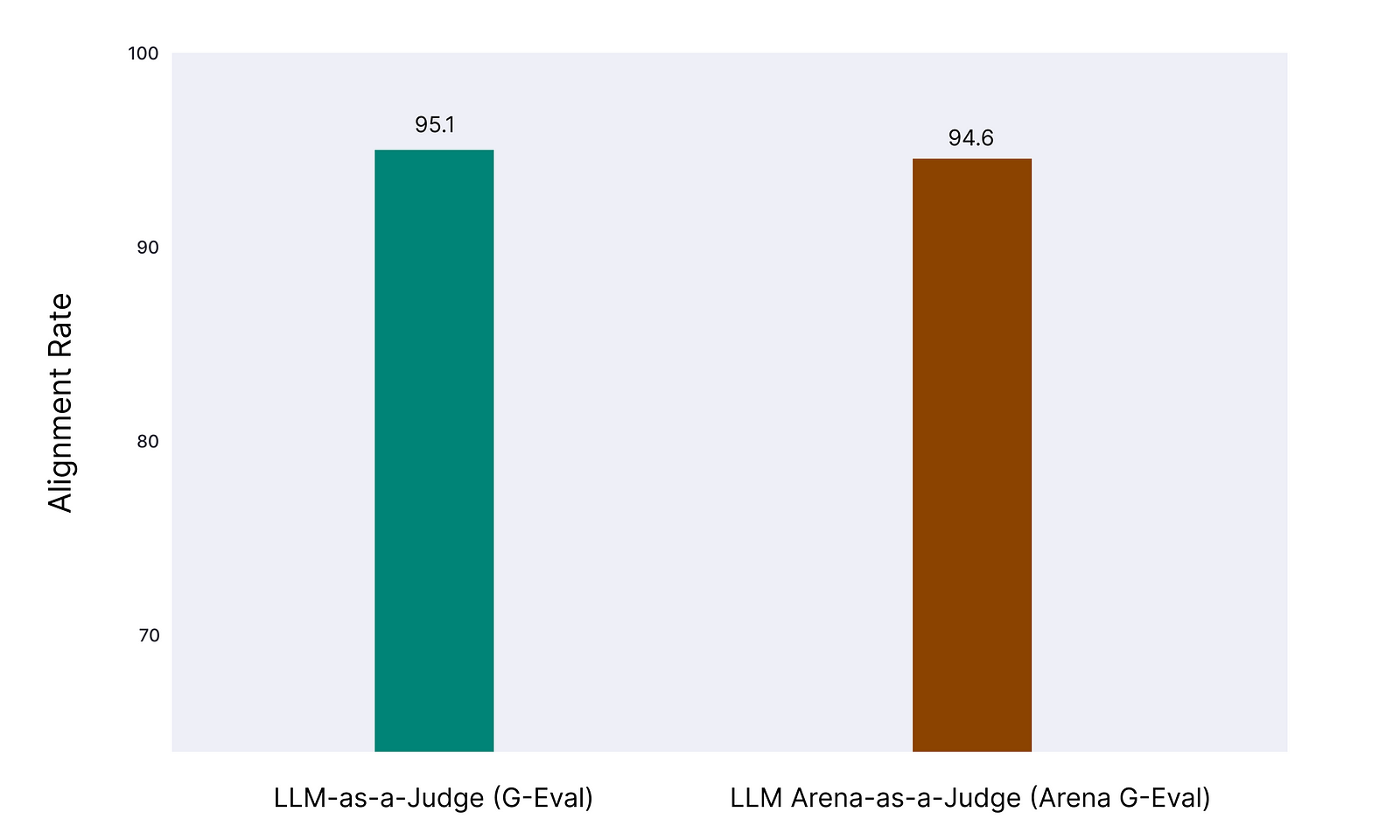

LLM Arena-as-a-judge is vulnerable to common biases, which randomly swapping positions and borrowing the existing G-Eval algorithm can help mitigate.

LLM Arena-as-a-Judge is not a replacement for existing LLM-as-a-judge. Arena is easier to set up and gives a similar agreement rate with humans, but it is less flexible than regular LLM-as-a-judge across different use cases.

And there’s so much more below. Ready? Let’s begin.

What is LLM Arena?

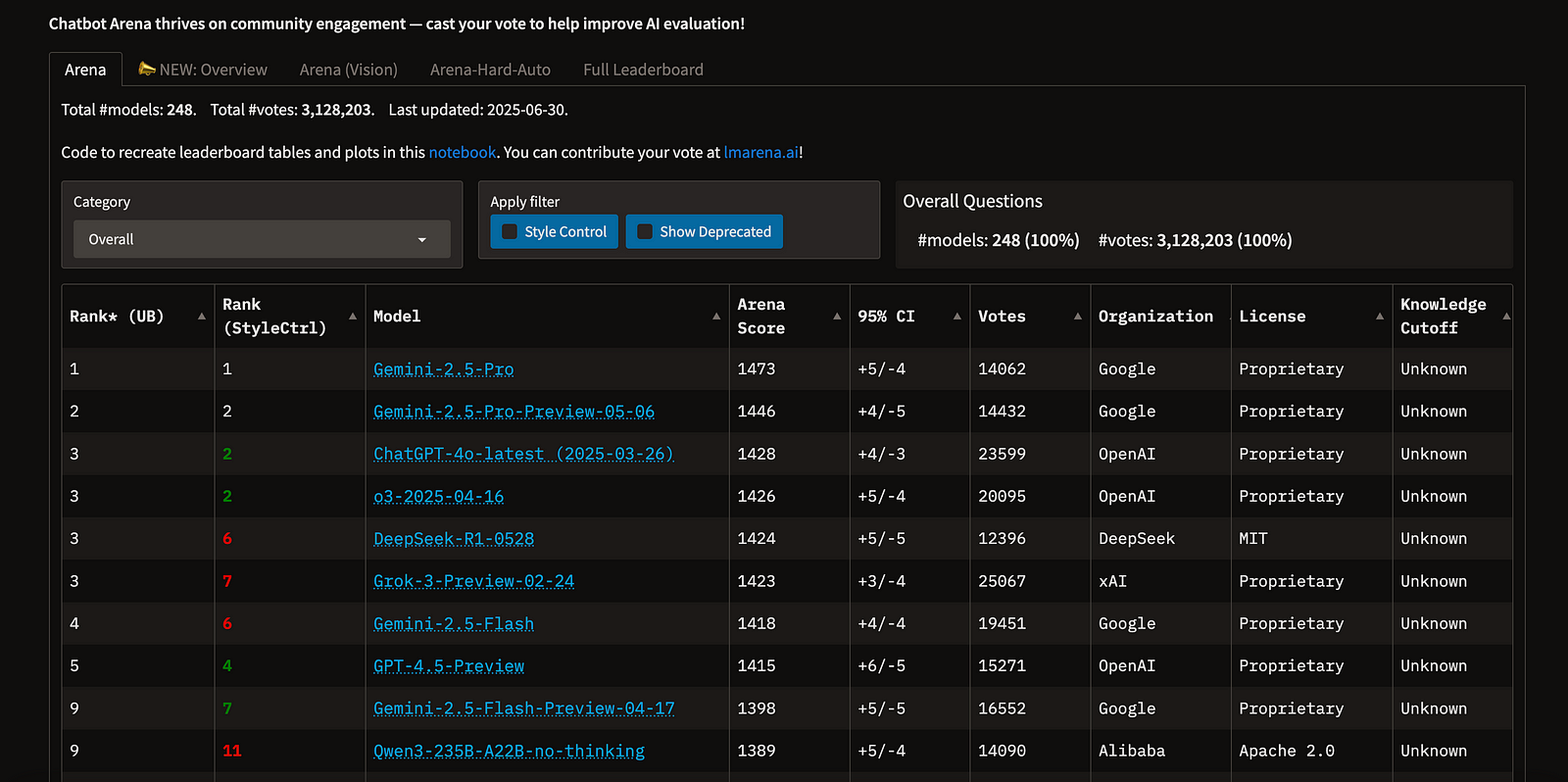

First things first, let’s talk about what LLM Arena is. LLM Arena started as a community-driven benchmark designed to compare the outputs of LLMs in a pairwise format. Inspired by the need for human-like judgment at scale, Arena lets users vote on which model output is “better,” creating a leaderboard of LLMs based on the “Elo” rating system. When one model consistently beats another, its Elo score rises.

Over time, this creates a dynamic, crowd-sourced leaderboard that reflects community preferences across models like GPT-4, Claude, Mistral, and more.
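To make the rating mechanics concrete, here is a minimal sketch of the classic Elo update applied to a stream of pairwise votes. The K-factor of 32, the 400-point scale, the starting rating of 1000, and the names `expected_score` and `update_elo` are conventional or illustrative choices, not values taken from the Arena leaderboard, whose actual computation may differ.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return the new (rating_a, rating_b) after one head-to-head vote."""
    expected_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    # The winner gains exactly what the loser gives up, so total rating is conserved.
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta


# Hypothetical vote log: each entry names the model the voter preferred.
ratings = {"model_a": 1000.0, "model_b": 1000.0}
votes = ["model_a", "model_a", "model_b", "model_a"]

for winner in votes:
    ratings["model_a"], ratings["model_b"] = update_elo(
        ratings["model_a"], ratings["model_b"], a_won=(winner == "model_a")
    )

print(ratings)
```

Because `model_a` wins three of the four votes, its rating ends above 1000 and `model_b` ends below it. An upset (a low-rated model beating a high-rated one) moves the ratings more than an expected win does, which is what keeps the leaderboard responsive.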

At its core, LLM Arena is both a research tool and a public evaluation platform. Today, LLM Arena is primarily used for:

Benchmarking foundation models (e.g. GPT-4 vs Claude vs Gemini)

Tracking how open-source models stack up against the big guys

Building leaderboards based on community preferences

Running studies on model alignment and helpfulness

It’s even cited in academic papers and model release blogs (see: Chatbot Arena leaderboard).

But here’s the catch: LLM Arena is a public benchmark. It’s not built for your internal development workflow.

This means:

You can’t plug in your own app, prompt, or model.

You can’t use it to test iterations of your LLM app.

You can’t integrate it into your LLM evaluation pipeline or CI process.

And there’s no real way to do large-scale comparison testing across dozens or hundreds of your own outputs.

In other words, LLM Arena is great for watching the race. But it’s not the right tool if you’re trying to run your own. That’s exactly where LLM Arena-as-a-Judge fits in, and we’re proud to be the first to open-source it at DeepEval.
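As a rough illustration of what running your own arena could look like, the sketch below compares two versions of an app over a handful of cases and randomly swaps which output the judge sees first, so that a judge favoring one position cannot systematically favor one version. This is not DeepEval's API: `toy_judge`, `compare_pair`, and `run_arena` are hypothetical names, and `toy_judge` is a placeholder that prefers the longer answer where a real setup would call an LLM.

```python
import random
from collections import Counter
from typing import Callable

# Hypothetical judge signature: (prompt, first_output, second_output) -> "first" or "second".
Judge = Callable[[str, str, str], str]


def toy_judge(prompt: str, first: str, second: str) -> str:
    """Placeholder for an LLM call: naively prefers the longer answer."""
    return "first" if len(first) >= len(second) else "second"


def compare_pair(judge: Judge, prompt: str, output_a: str, output_b: str,
                 rng: random.Random) -> str:
    """Return "A" or "B" for the winning version, hiding which one came first."""
    swapped = rng.random() < 0.5
    first, second = (output_b, output_a) if swapped else (output_a, output_b)
    verdict = judge(prompt, first, second)
    if verdict not in ("first", "second"):
        raise ValueError(f"unexpected verdict: {verdict!r}")
    picked_first = verdict == "first"
    # Map the positional verdict back to the version that produced the output.
    return "B" if picked_first == swapped else "A"


def run_arena(judge: Judge, cases, seed: int = 0) -> Counter:
    """Tally wins per version over (prompt, output_a, output_b) cases."""
    rng = random.Random(seed)
    wins = Counter()
    for prompt, output_a, output_b in cases:
        wins[compare_pair(judge, prompt, output_a, output_b, rng)] += 1
    return wins


# Made-up outputs from two iterations of the same app.
cases = [
    ("What is Elo?", "A rating system.",
     "A rating system that updates after every head-to-head result."),
    ("Name a color.", "Blue, a primary color.", "Blue."),
    ("2 + 2?", "4", "The answer is 4."),
]
wins = run_arena(toy_judge, cases)
print(wins)
```

The win counts can be read directly as a head-to-head result, or fed into an Elo-style update when more than two versions are being compared.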

But first, let’s go through why you would use LLM Arena for LLM evals.
