With great power comes great responsibility. As LLMs become more powerful, they are entrusted with increasing autonomy. This means less human oversight, greater access to personal data, and an ever-expanding role in handling real-life tasks.

From managing weekly grocery orders to overseeing complex investment portfolios, LLMs present a tempting target for hackers and malicious actors eager to exploit them. Ignoring these risks could have serious ethical, legal, and financial repercussions. As pioneers of this technology, we have a duty to prioritize and uphold LLM safety.

Although much of this territory is uncharted, it’s not entirely a black box. Governments worldwide are stepping up with new AI regulations, and extensive research is underway to develop risk mitigation strategies and frameworks. Today, we’ll dive into these topics, covering:

What LLM Safety entails

Government AI regulations and their impact on LLMs

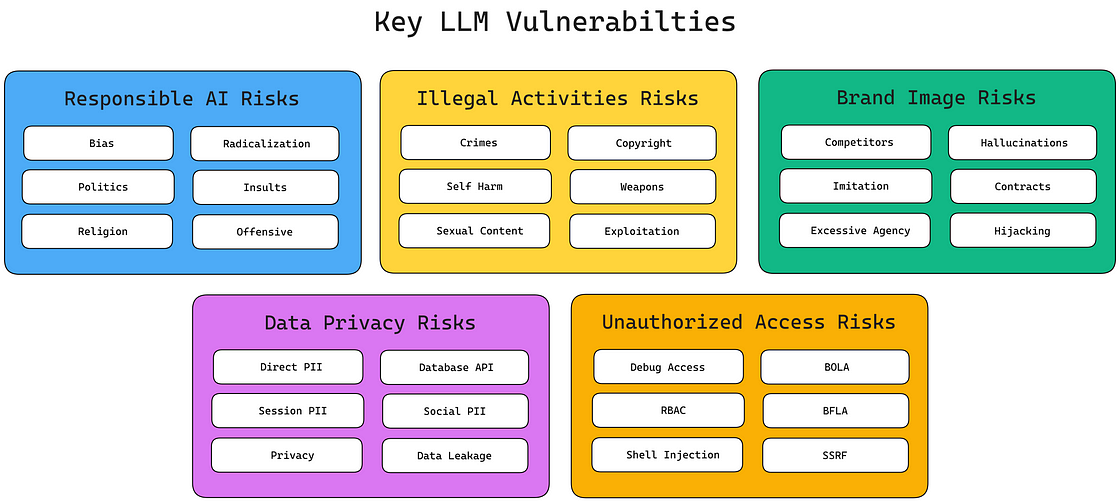

Key LLM vulnerabilities to watch out for

Current LLM safety research, including essential risk mitigation strategies and frameworks

Challenges in LLM safety and how LLM guardrails address these issues (click here for a great article on LLM guardrails)

What is LLM Safety?

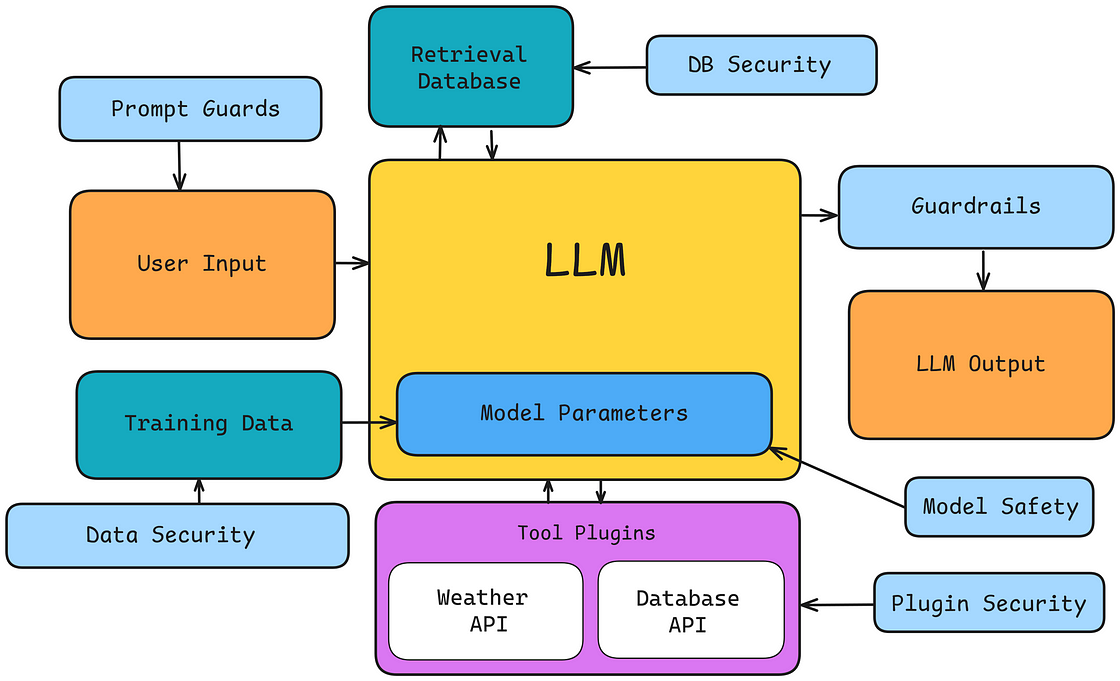

AI Safety combines practices, principles, and tools to ensure AI systems function as intended. It focuses on aligning AI behavior with ethical standards to prevent unintended consequences and minimize harm.

LLM Safety, a specialized area within AI Safety, focuses on safeguarding Large Language Models so that they function responsibly and securely. This includes protecting data, moderating content, and reducing harmful or biased outputs in real-world applications.

Government AI Regulations

In August 2024, the European Union’s Artificial Intelligence Act (AI Act) came into force, marking the first comprehensive legal framework for AI. By setting common rules and regulations, the Act aims to ensure that AI applications across the EU are safe, transparent, non-discriminatory, and environmentally sustainable.

Alongside the EU’s AI Act, other countries are also advancing their efforts to improve safety standards and establish regulatory frameworks for AI and LLMs. These initiatives include:

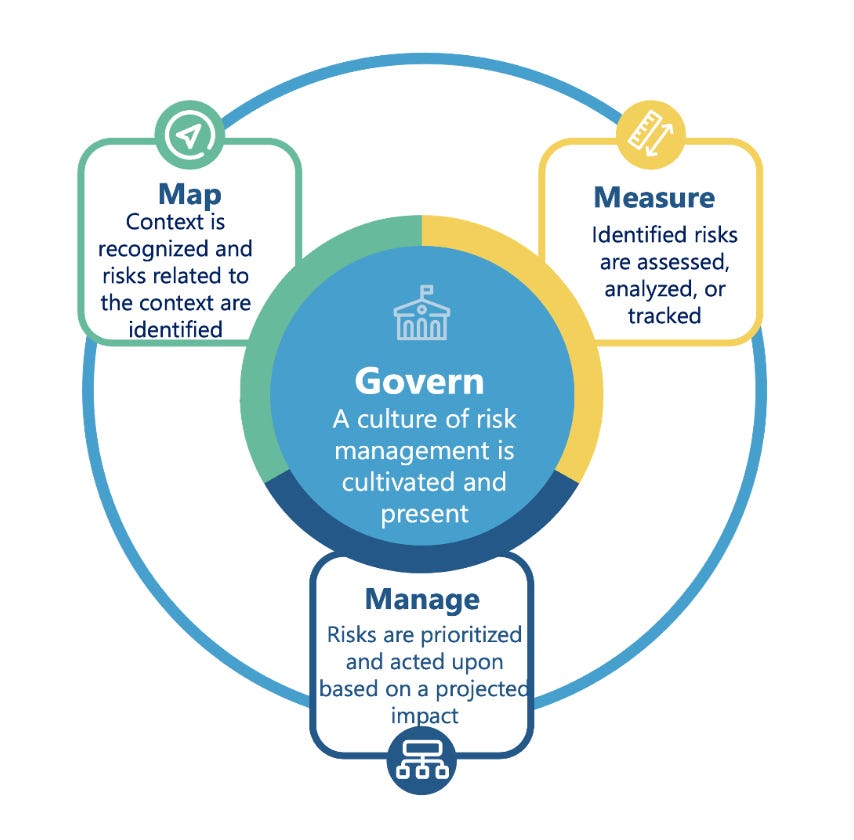

United States: AI Risk Management Framework by NIST (National Institute of Standards and Technology) and Executive Order 14110

United Kingdom: Pro-Innovation AI Regulation by DSIT (Department for Science, Innovation and Technology)

China: Generative AI Measures by CAC (Cyberspace Administration of China)

Canada: Artificial Intelligence and Data Act (AIDA) by ISED (Innovation, Science, and Economic Development Canada)

Japan: Draft AI Act by METI (Japan’s Ministry of Economy, Trade, and Industry)

EU Artificial Intelligence Act (EU)

The EU AI Act, which took effect in August 2024, provides a structured framework to ensure AI systems are used safely and responsibly across critical areas such as healthcare, public safety, education, and consumer protection.

![image](https://images.ctfassets.net/otwaplf7zuwf/6gX6DVqwuDSeckvHnIHWNO/41cb73b983ad270c9be0c3ff43306195/image.png)

The EU AI Act sorts AI applications into five categories, each with its own compliance measures, ranging from outright bans on unacceptable-risk systems to transparency and oversight requirements:

Unacceptable Risk: AI applications deemed to pose serious ethical or societal risks, such as those manipulating behavior or using real-time biometric surveillance, are banned.

High-Risk: AI applications in sensitive sectors like healthcare, law enforcement, and education require strict compliance measures. These include extensive transparency, safety protocols, and oversight, as well as a Fundamental Rights Impact Assessment to gauge potential risks to society and fundamental rights, ensuring that these AI systems do not inadvertently cause harm.

General-Purpose AI: Added in 2023 to address foundation models such as those behind ChatGPT, this category mandates transparency and regular evaluations. These measures are especially important for general-purpose models, whose versatility lets them affect many high-impact areas at once.

Limited Risk: For AI applications with moderate risk, such as deepfake generators, the Act requires transparency measures to inform users when they are interacting with AI. This protects user awareness and mitigates risks associated with potential misuse.

Minimal Risk: Low-risk applications, such as spam filters, are exempt from strict regulations but may adhere to voluntary guidelines. This approach allows for innovation in low-impact AI applications without unnecessary regulatory burdens.

By tailoring regulations to the specific risk level of AI applications, the EU AI Act aims to foster innovation while protecting fundamental rights, public safety, and ethical standards across diverse sectors.
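To make the tiered structure concrete, here is a minimal Python sketch of how a team might encode the five categories above as an internal compliance checklist. The names `RiskTier`, `OBLIGATIONS`, and `compliance_checklist` are hypothetical, and the obligations are paraphrased from this article's summary rather than taken from the legal text, so treat it as an illustration and not as legal guidance.

```python
from enum import Enum


class RiskTier(Enum):
    """The five categories described above (hypothetical names)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    GENERAL_PURPOSE = "general_purpose"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from this article's summary of each tier.
# This is an assumption-laden simplification, not the Act's wording.
OBLIGATIONS = {
    RiskTier.HIGH: [
        "extensive transparency",
        "safety protocols",
        "oversight",
        "fundamental rights impact assessment",
    ],
    RiskTier.GENERAL_PURPOSE: ["transparency", "regular evaluations"],
    RiskTier.LIMITED: ["inform users they are interacting with AI"],
    RiskTier.MINIMAL: [],  # voluntary guidelines only
}


def compliance_checklist(tier: RiskTier) -> list[str]:
    """Return the obligations for a tier, or raise if the tier is banned."""
    if tier is RiskTier.UNACCEPTABLE:
        raise ValueError("Unacceptable-risk applications are banned outright.")
    # Return a copy so callers cannot mutate the shared table.
    return list(OBLIGATIONS[tier])


if __name__ == "__main__":
    for tier in (RiskTier.HIGH, RiskTier.GENERAL_PURPOSE, RiskTier.MINIMAL):
        print(tier.value, "->", compliance_checklist(tier))
```

Deciding which tier a real application falls into is a legal judgment, so a table like this is only useful once that classification has been made by someone qualified to make it.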
