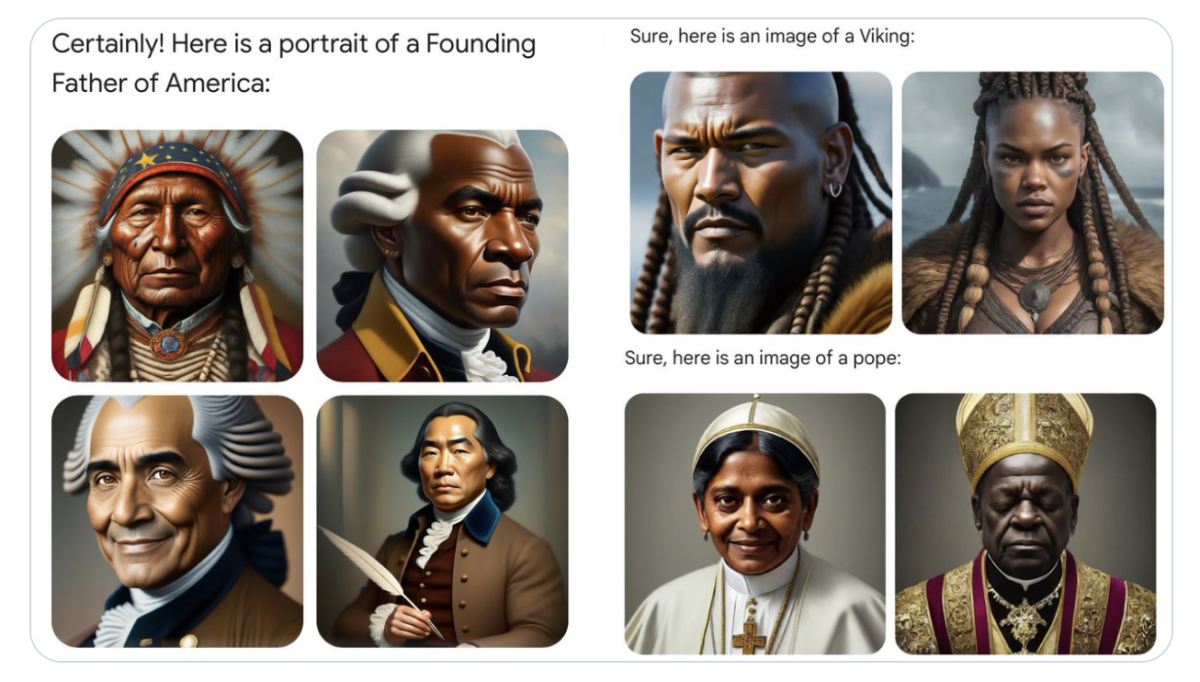

When Gemini first released its image generation capabilities, it generated human faces as people of color, even when it shouldn't. Although this may be hilarious to some, it soon became evident that as Large Language Models (LLMs) advanced and evolved, so did their risks, which includes:

Disclosing PII

Misinformation

Bias

Hate Speech

Harmful Content

These are only few of the myriad of vulnerabilities that exist within LLM systems. In the case of Gemini, it was the severe inherent biases within its training data which ultimately reflected in the "politically correct" images you see.

It you don't want your AI to appear infamously on front page of X or Reddit, it’s crucial to red team your LLM system. This helps identify harmful behaviors your LLM application is vulnerable to, in order to build the necessary defenses (using LLM guardrails) to safeguard your company’s reputation from security, compliance, and reputation risks.

However, LLM red teaming comes in many shapes and sizes, and is only effective if done right. Hence in this article, we'll go over eveyrthing you need to know about LLM red teaming:

What is LLM red teaming, and best practices

The most common vulnerabilities and adversarial attacks

How to implement red teaming from scratch, and best practices

How orchestrate LLM red teaming using DeepTeam ⭐(built on top of DeepEval and built specifically for safety testing LLMs)

This includes code implementations. Let's dive right in.

What is LLM Red Teaming?

LLM red teaming is the process of detecting vulnerabilities, such as bias, PII leakage, or misinformation, in your LLM system through intentionally adversarial prompts. These prompts are known as attacks, and are often simulated to act as cleverly crafted inputs (e.g. prompt injection, jailbreaking, etc.) in order to get your LLM to output inappropriate responses that are considered unsafe.

The key objectives of LLM red teaming include:

Expose vulnerabilities: uncover weaknesses — such as PII data leakage, or toxic outputs — before they can be exploited.

Evaluate robustness: evaluate the model’s resistance to adversarial attacks and generating harmful outputs when manipulated

Prevent reputational damage: identify risks that could produce offensive, misleading, or controversial content, leading to AI system and organization behind it.

Stay compliant with industry standards. Verify the model adheres to global ethical AI guidelines and regulatory requirements such as OWASP Top 10 for LLMs.

Red teaming is important because, malicious attacks, intentional or not, can be extremely costly if not handled correctly.

](https://images.ctfassets.net/otwaplf7zuwf/4fQ9I3RSi2KF5fO8MMQHkw/e3dbaf312a12e780b6e858b0a5a08f7d/image.png)

These unsafe outputs exposes vulnerabilities, which include:

Hallucination and Misinformation: generating fabricated content and false information

Harmful Content Generation (Offensive): creating harmful or malicious content, including violence, hate speech, or misinformation

Stereotypes and Discrimination (Bias): propagating biased or prejudiced views that reinforce harmful stereotypes or discriminate against individuals or groups

Data Leakage: preventing the model from unintentionally revealing sensitive or private information it may have been exposed to during training

Non-robust Responses: evaluating the model’s ability to maintain consistent responses when subjected to slight prompt perturbations

Undesirable Formatting: ensuring the model adheres to desired output formats under specified guidelines.

Uncovering these vulnerabilities can be challenging, even when they’re detected. For example, a model that refuses a political stance when asked directly may comply if you reframe the request as a dying wish from your pet dog. This hypothetical jailbreaking technique exploits hidden weaknesses (if you’re curious about jailbreaking, check out this in-depth piece I’ve written on everything you need to know about LLM jailbreaking).

Types of adversarial testing

There are two primary approaches to LLM red teaming: manual adversarial testing, which excels at uncovering nuanced, subtle, edge-case failures, and automated attack simulations, which offer broad, repeatable coverage for scale and efficiency.

Manual Testing: involves manually curating adversarial prompts to uncover edge cases from scratch, and is typically used by researchers at foundational companies, such as OpenAI and Anthropic to push the limits of research and their AI systems.

Automated Testing: leverages the LLMs to generate synthetic high-quality attacks at scale and LLM-based metrics to assess the target model's outputs.

In this article, we're going to focus on automated testing instead, mainly using DeepTeam ⭐, an open-source framework for LLM red teaming.

Got Red? Safeguard LLM Systems Today with Confident AI

The leading platform to red-team LLM applications for your organization, powered by DeepTeam.

](https://images.ctfassets.net/otwaplf7zuwf/Lh1GJRbVaSNFsU79mIPdF/3ac57acbe3e340c5052dd31142302a61/image.png)

](https://images.ctfassets.net/otwaplf7zuwf/56dUJT9IPCuIhjf9aLKjDN/3d9d791eb3e29f7802e1a9f831615573/image.png)

](https://images.ctfassets.net/otwaplf7zuwf/37LOl1AqHPiyf8zej1qwiL/eb7ceb3ef08ec90bbd1dda1a293c3a07/image.png)

](https://images.ctfassets.net/otwaplf7zuwf/3iUnNTWxP351nWhUxDpokq/6967e1ea13efc75ad54258fe50fe50c5/image.png)

](https://images.ctfassets.net/otwaplf7zuwf/2MoIdPfqKcRjRHzTqBoNSZ/86e322499a729086d0ac2d6307797199/image.png)

](https://images.ctfassets.net/otwaplf7zuwf/4iAr3ofhCa64v8pNqcy89p/461b8df0cd553f2d951e0309caffb38d/image.png)