Let’s set the stage: I’m about to change my prompt template for the 44th time when I get a message from my manager: “Hey Jeff, I hope you’re doing well today. Have you seen the newly open-sourced Mistral model? I’d like you to try it out since I heard it gives better results than the LLaMA-2 you’re using.”

Oh no, I think to myself, not again.

This frustrating interruption (by which I mean the release of new models) is why I, as the creator of DeepEval, am here today to teach you how to build an LLM evaluation framework that systematically identifies the best hyperparameters for your LLM systems.

Want to be one terminal command away from knowing whether you should be using the newly released Claude-3 Opus model, or which prompt template you should be using? Let’s begin.

What is an LLM Evaluation Framework?

An LLM evaluation framework is a software package designed to evaluate and test the outputs of LLM systems on a range of criteria. The performance of an LLM system (which can be just the LLM itself) on each criterion is quantified by LLM evaluation metrics, which use different scoring methods, such as LLM-as-a-judge, depending on the task at hand. This process is known as LLM system evaluation.

These evaluation metric scores ultimately make up your evaluation results, which you can use to identify regressions in LLM systems over time (a practice some people call regression testing), or to compare LLM systems with one another.
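To make the regression idea concrete, here is a minimal Python sketch, assuming each evaluation run is stored as a plain dictionary that maps a metric name to per-test-case scores between 0 and 1, with both runs scored on the same test cases. The function name `find_regressions` and the 0.05 tolerance are illustrative choices, not part of any library.

```python
from statistics import mean
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, List[float]],
    candidate: Dict[str, List[float]],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Return metrics whose mean score dropped by more than `tolerance`.

    Both runs map a metric name to per-test-case scores in [0, 1] and are
    assumed to have been scored on the same test cases.
    """
    regressions = {}
    for metric, old_scores in baseline.items():
        new_scores = candidate.get(metric)
        if not new_scores:
            continue  # metric was not evaluated in the new run
        drop = mean(old_scores) - mean(new_scores)
        if drop > tolerance:
            regressions[metric] = round(drop, 3)
    return regressions


if __name__ == "__main__":
    # Hypothetical scores from yesterday's run and today's run.
    baseline = {"summarization": [0.9, 0.8, 0.85], "contextual_relevancy": [0.7, 0.75, 0.8]}
    candidate = {"summarization": [0.7, 0.65, 0.75], "contextual_relevancy": [0.72, 0.74, 0.81]}
    print(find_regressions(baseline, candidate))
```

Comparing means per metric keeps the check simple; a stricter setup might compare scores test case by test case so that a single badly broken case is not hidden by the average.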

For example, let’s say I’m building a RAG-based news article summarization chatbot that lets users quickly skim through today’s morning news. My LLM evaluation framework would need to have:

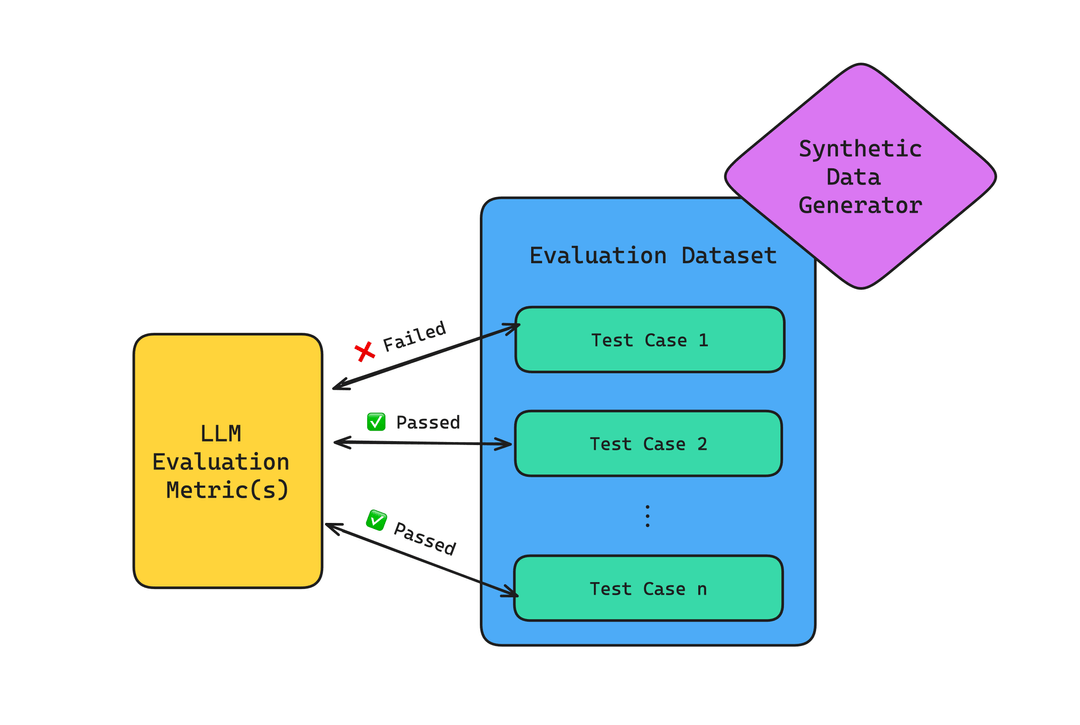

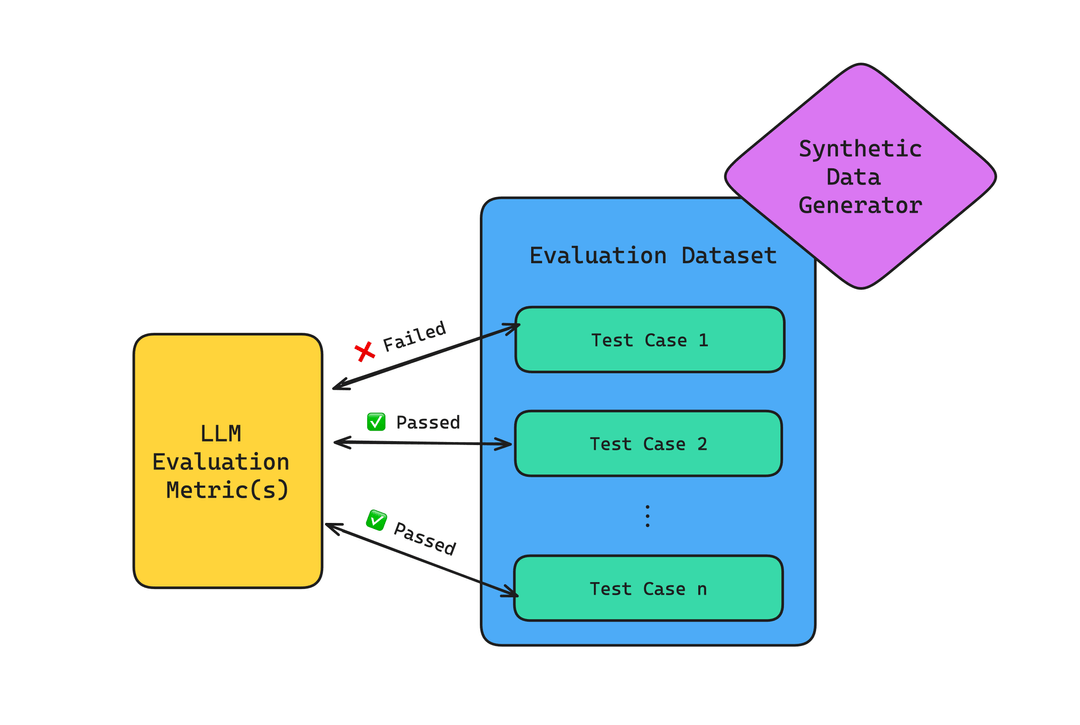

A list of LLM test cases (or an evaluation dataset), which is a set of LLM input-output pairs: the “evaluatee”.

LLM evaluation metrics, which quantify the criteria I want my chatbot to perform well on: the “evaluator”.
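Here is a minimal sketch of how those two pieces might fit together in plain Python. The `EvalCase`, `Metric`, and `evaluate` names are hypothetical and are not DeepEval’s API; a real metric’s `score_fn` would typically call an LLM judge rather than the toy word-count check used here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    """One input/output pair from the system under test (the "evaluatee")."""
    input: str
    actual_output: str
    retrieval_context: List[str] = field(default_factory=list)


@dataclass
class Metric:
    """A named scoring function plus a pass threshold (the "evaluator")."""
    name: str
    score_fn: Callable[[EvalCase], float]
    threshold: float = 0.5

    def measure(self, case: EvalCase) -> Tuple[float, bool]:
        score = self.score_fn(case)
        return score, score >= self.threshold


def evaluate(cases: List[EvalCase], metrics: List[Metric]) -> List[Dict]:
    """Run every metric on every test case and collect the results."""
    results = []
    for case in cases:
        for metric in metrics:
            score, passed = metric.measure(case)
            results.append(
                {"input": case.input, "metric": metric.name, "score": score, "passed": passed}
            )
    return results


def conciseness(case: EvalCase) -> float:
    """Toy metric: full marks if the summary stays under 50 words."""
    return 1.0 if len(case.actual_output.split()) < 50 else 0.0


if __name__ == "__main__":
    cases = [EvalCase(input="Summarize today's top story.", actual_output="Rates were held steady.")]
    print(evaluate(cases, [Metric(name="conciseness", score_fn=conciseness)]))
```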

For this particular example, two appropriate metrics could be the summarization and contextual relevancy metrics. The summarization metric measures whether the summaries are relevant and free of hallucinations, while the contextual relevancy metric determines whether my RAG pipeline’s retriever is able to retrieve relevant text chunks for the summary.
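As a rough illustration of what contextual relevancy measures, the sketch below scores a retrieval as the fraction of retrieved chunks judged relevant to the query. In practice that relevance judgment would usually come from an LLM; the word-overlap `overlap_judge` here is a hypothetical stand-in so the example runs offline, and you would swap in your own judge through the `is_relevant` parameter.

```python
import re
from typing import Callable, List, Set

# Tiny illustrative stopword list, only needed by the toy overlap judge.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "and", "to", "is", "for", "what"}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its non-stopword alphanumeric tokens."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def overlap_judge(query: str, chunk: str) -> bool:
    """Hypothetical stand-in for an LLM judge: a chunk counts as relevant
    if it shares at least two non-stopword tokens with the query."""
    return len(tokenize(query) & tokenize(chunk)) >= 2


def contextual_relevancy(
    query: str,
    retrieved_chunks: List[str],
    is_relevant: Callable[[str, str], bool] = overlap_judge,
) -> float:
    """Fraction of retrieved chunks that the judge considers relevant to the query."""
    if not retrieved_chunks:
        return 0.0
    relevant = sum(1 for chunk in retrieved_chunks if is_relevant(query, chunk))
    return relevant / len(retrieved_chunks)


if __name__ == "__main__":
    query = "central bank interest rate decision"
    chunks = [
        "The central bank held its interest rate steady on Tuesday.",
        "The local football team won the cup final.",
    ]
    print(contextual_relevancy(query, chunks))  # 0.5: one of two chunks is relevant
```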

Once you have the test cases and necessary LLM evaluation metrics in place, you can easily quantify how different combinations of hyperparameters (such as models and prompt templates) in LLM systems affect your evaluation results.
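A minimal sketch of that comparison, assuming you supply two callables yourself: `generate(model, template, input)`, which runs your LLM system, and `score(input, output)`, which runs your metrics. Both are hypothetical placeholders; the sketch only shows the grid search over every model and prompt template combination and the ranking by mean score.

```python
from itertools import product
from statistics import mean
from typing import Callable, List, Tuple


def sweep(
    models: List[str],
    templates: List[str],
    inputs: List[str],
    generate: Callable[[str, str, str], str],
    score: Callable[[str, str], float],
) -> List[Tuple[Tuple[str, str], float]]:
    """Score every (model, template) pair on the same inputs, best first."""
    results = []
    for model, template in product(models, templates):
        scores = [score(x, generate(model, template, x)) for x in inputs]
        results.append(((model, template), mean(scores)))
    results.sort(key=lambda item: item[1], reverse=True)
    return results


# Fake system and metric so the sketch runs without any API calls.
FAKE_SCORES = {"model-a|v1": 0.9, "model-a|v2": 0.6, "model-b|v1": 0.7, "model-b|v2": 0.8}


def fake_generate(model: str, template: str, x: str) -> str:
    return f"{model}|{template}"


def fake_score(x: str, output: str) -> float:
    return FAKE_SCORES[output]


if __name__ == "__main__":
    for combo, avg in sweep(["model-a", "model-b"], ["v1", "v2"], ["q1", "q2"], fake_generate, fake_score):
        print(combo, round(avg, 2))
```

Because every combination is scored on the same inputs, the mean scores are directly comparable; swapping in a new model then just means adding one more entry to `models`.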

Seems straightforward, right?

Challenges in Building an LLM Evaluation Framework

Building a robust LLM evaluation framework is tough, which is why it took me a bit more than 5 months to build DeepEval, the open-source LLM evaluation framework.

From working closely with hundreds of open-source users, I found these to be the two main challenges:

LLM evaluation metrics are hard to make accurate and reliable. In fact, this is so tough that I previously dedicated an entire article to everything you need to know about LLM evaluation metrics. Most LLM evaluation metrics nowadays are LLM-Evals, which use LLMs to evaluate LLM outputs. Although LLMs have superior reasoning capabilities that make them great candidates for evaluating LLM outputs, they can be unreliable at times and must be carefully prompt-engineered to provide a reliable score.

Evaluation datasets/test cases are hard to make comprehensive. Preparing an evaluation dataset that covers all the edge cases that might appear in a production setting is difficult to get right. It is also very time-consuming, which is why I highly recommend generating synthetic data using LLMs.
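On the first challenge, one common way to tame a noisy LLM judge (though not necessarily how DeepEval does it) is to ask for a score in a fixed format, parse it defensively, and aggregate several samples. The sketch below assumes a hypothetical `judge(prompt) -> str` callable and a judge prompt that asks for a line like `Score: 7` on a 0 to 10 scale.

```python
import re
from statistics import median
from typing import Callable, Optional

# Matches "Score: 7", "score=7.5", etc. anywhere in the judge's reply.
SCORE_PATTERN = re.compile(r"score\s*[:=]\s*(\d+(?:\.\d+)?)", re.IGNORECASE)


def parse_score(judge_output: str, max_score: float = 10.0) -> Optional[float]:
    """Extract a score from the judge's reply and normalise it to [0, 1].
    Returns None if no score is found or it falls outside [0, max_score]."""
    match = SCORE_PATTERN.search(judge_output)
    if match is None:
        return None
    value = float(match.group(1))
    if not 0 <= value <= max_score:
        return None
    return value / max_score


def robust_judge_score(
    judge: Callable[[str], str],
    prompt: str,
    n_samples: int = 5,
    max_score: float = 10.0,
) -> float:
    """Query the judge several times and return the median of the parsable scores."""
    scores = [parse_score(judge(prompt), max_score) for _ in range(n_samples)]
    valid = [s for s in scores if s is not None]
    if not valid:
        raise ValueError("judge never returned a parsable score")
    return median(valid)
```

Sampling only helps if the judge runs with some randomness (for example a non-zero temperature); with deterministic decoding every sample would be identical. The median is used instead of the mean so that one outlier reply does not drag the score.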
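On the second challenge, a minimal sketch of LLM-based synthetic data generation looks like this: ask an LLM to write a question that each source chunk can answer, drop empty or duplicate generations, and keep the source chunk as the expected context. The `complete(prompt) -> str` callable and the prompt wording are assumptions, not a specific library’s API.

```python
from typing import Callable, Dict, List

PROMPT_TEMPLATE = (
    "Write one question a reader might ask that can be answered using only "
    "the following text. Return just the question.\n\nText:\n{context}"
)


def synthesize_test_inputs(
    chunks: List[str],
    complete: Callable[[str], str],
    per_chunk: int = 1,
) -> List[Dict]:
    """Generate synthetic test inputs from source chunks using an LLM.

    `complete(prompt) -> str` is a caller-supplied LLM call (hypothetical here).
    Each record keeps the chunk it came from so it can later serve as the
    expected retrieval context.
    """
    seen = set()
    dataset = []
    for chunk in chunks:
        prompt = PROMPT_TEMPLATE.format(context=chunk)
        for _ in range(per_chunk):
            question = complete(prompt).strip()
            key = question.lower()
            if not question or key in seen:
                continue  # drop empty or duplicate generations
            seen.add(key)
            dataset.append({"input": question, "context": [chunk]})
    return dataset
```

Exact-match deduplication is deliberately crude; near-duplicate questions would still slip through, so a real pipeline would likely add a similarity check and a human review pass.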

So with this in mind, let’s walk through how to build your own LLM evaluation framework from scratch.
