Scaling New Heights

TGIF! Thank god it’s features. Here’s what we shipped this week:

Welcome to our brand new changelog! We’re kicking things off with better cost tracking, reliability improvements, and some serious scalability upgrades.

Changelog entries dated before this one were backfilled!

Added

- Changelog - You’re reading it! Subscribe to never miss a beat.

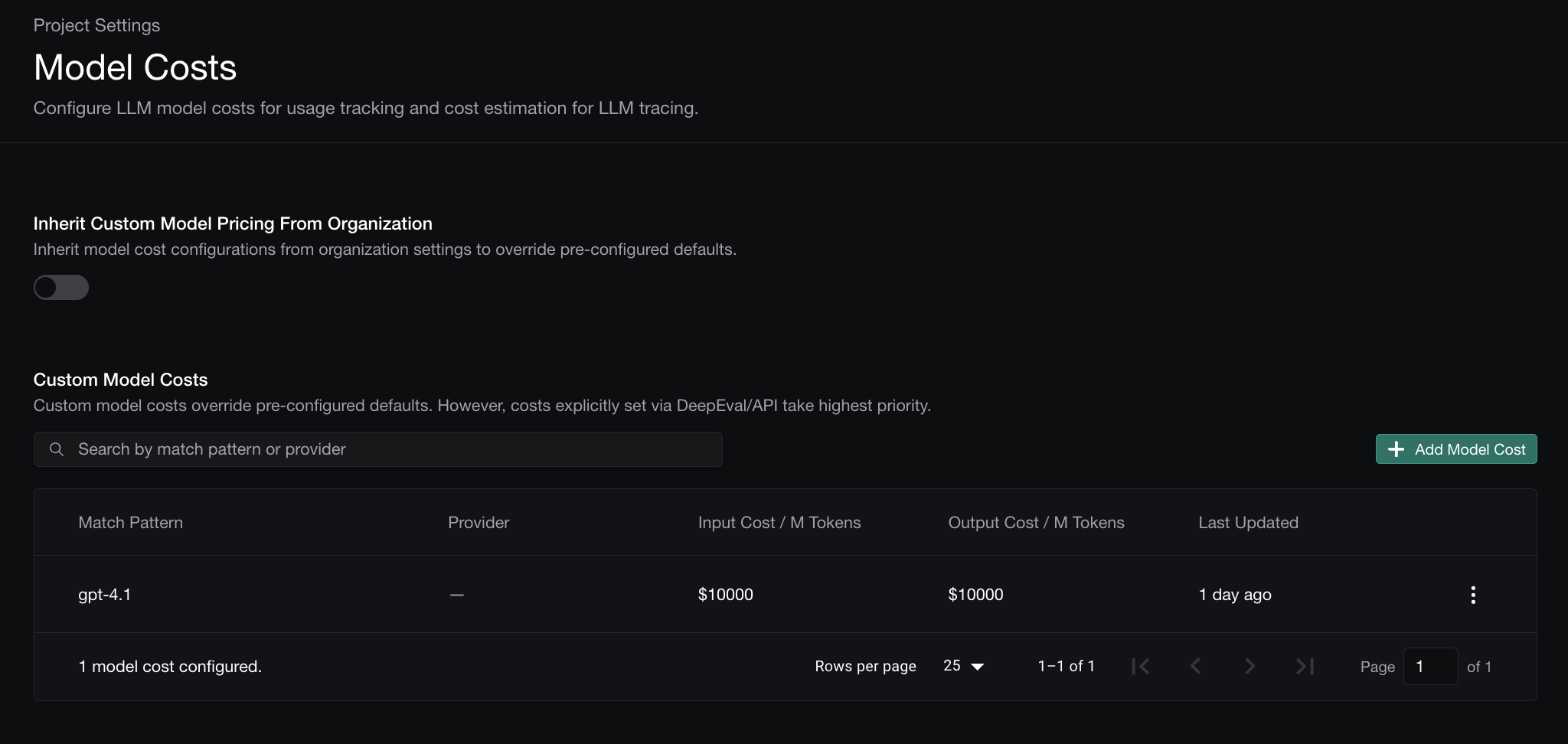

- Custom Model Costs - Set custom cost-per-token for any model in your project settings. Finally, accurate cost tracking for fine-tuned and self-hosted models.

- Request Timeout for AI Connections - Configure timeout limits for your LLM connections. No more hanging requests.

- High-Volume Trace Ingestion - We’ve beefed up our trace handling with buffered ingestion. Traffic spikes? Bring ’em on.
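
Curious how a custom cost-per-token turns into a dollar figure? Here is a rough sketch of the arithmetic, assuming separate input and output prices. The `ModelCost` class, its field names, and the example prices are made up for illustration and are not the actual project settings schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelCost:
    """Hypothetical per-token prices in USD (illustrative, not the real schema)."""
    input_cost_per_token: float
    output_cost_per_token: float


def request_cost(cost: ModelCost, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request: tokens times price, summed over input and output."""
    return (
        input_tokens * cost.input_cost_per_token
        + output_tokens * cost.output_cost_per_token
    )


# Assumed prices for a self-hosted or fine-tuned model.
fine_tuned = ModelCost(input_cost_per_token=0.000002, output_cost_per_token=0.000006)

# One request with 1,000 input tokens and 500 output tokens.
total = request_cost(fine_tuned, input_tokens=1_000, output_tokens=500)
print(f"Estimated cost: ${total:.4f}")
```

Summing this value across every request in a trace gives the per-trace cost, which is why an accurate per-token price matters for models without a published price list.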

Changed

- Smoother Experiment Runs - Real-time evaluation progress is now more reliable with improved streaming.

- Annotator Attribution - See who left that annotation. Credit where credit’s due.

- Faster Spans Loading - The spans tab now loads at lightning speed, even for trace-heavy projects.